In computing, multitasking is the concurrent execution of multiple tasks over a certain period of time. New tasks can interrupt already started ones before they finish, instead of waiting for them to end. As a result, a computer executes segments of multiple tasks in an interleaved manner, while the tasks share common processing resources such as central processing units (CPUs) and main memory. Multitasking automatically interrupts the running program, saving its state and loading the saved state of another program and transferring control to it. This "context switch" may be initiated at fixed time intervals, or the running program may be coded to signal to the supervisory software when it can be interrupted.

An operating system (OS) is system software that manages computer hardware, software resources, and provides common services for computer programs.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. Multiple threads can exist within one process, executing concurrently and sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

NTLDR is the boot loader for all releases of Windows NT operating system up to and including Windows XP and Windows Server 2003. NTLDR is typically run from the primary hard disk drive, but it can also run from portable storage devices such as a CD-ROM, USB flash drive, or floppy disk. NTLDR can also load a non NT-based operating system given the appropriate boot sector in a file.

IBM CICS is a family of mixed language application servers that provide online transaction management and connectivity for applications on IBM mainframe systems under z/OS and z/VSE.

Cooperative Linux, abbreviated as coLinux, is software which allows Microsoft Windows and the Linux kernel to run simultaneously in parallel on the same machine.

In computing, a file system or filesystem controls how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stops and the next begins. By separating the data into pieces and giving each piece a name, the data is easily isolated and identified. Taking its name from the way paper-based data management system is named, each group of data is called a "file." The structure and logic rules used to manage the groups of data and their names is called a "file system."

A hypervisor is computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Multiple instances of a variety of operating systems may share the virtualized hardware resources: for example, Linux, Windows, and macOS instances can all run on a single physical x86 machine. This contrasts with operating-system-level virtualization, where all instances must share a single kernel, though the guest operating systems can differ in user space, such as different Linux distributions with the same kernel.

The architecture of Windows NT, a line of operating systems produced and sold by Microsoft, is a layered design that consists of two main components, user mode and kernel mode. It is a preemptive, reentrant multitasking operating system, which has been designed to work with uniprocessor and symmetrical multiprocessor (SMP)-based computers. To process input/output (I/O) requests, they use packet-driven I/O, which utilizes I/O request packets (IRPs) and asynchronous I/O. Starting with Windows XP, Microsoft began making 64-bit versions of Windows available; before this, there were only 32-bit versions of these operating systems.

In computing, a benchmark is the act of running a computer program, a set of programs, or other operations, in order to assess the relative performance of an object, normally by running a number of standard tests and trials against it. The term benchmark is also commonly utilized for the purposes of elaborately designed benchmarking programs themselves.

OS-level virtualization is an operating system paradigm in which the kernel allows the existence of multiple isolated user space instances. Such instances, called containers, Zones, virtual private servers (OpenVZ), partitions, virtual environments (VEs), virtual kernels, or jails, may look like real computers from the point of view of programs running in them. A computer program running on an ordinary operating system can see all resources of that computer. However, programs running inside of a container can only see the container's contents and devices assigned to the container.

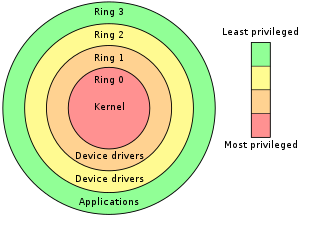

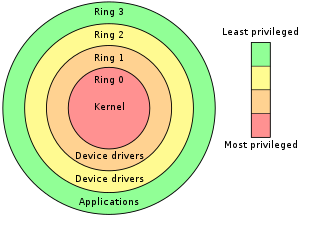

In computer science, hierarchical protection domains, often called protection rings, are mechanisms to protect data and functionality from faults and malicious behavior. This approach is diametrically opposite to that of capability-based security.

Kernel Transaction Manager (KTM) is a component of the Windows operating system kernel in Windows Vista and Windows Server 2008 that enables applications to use atomic transactions on resources by making them available as kernel objects.

OpenVZ is an operating-system-level virtualization technology for Linux. It allows a physical server to run multiple isolated operating system instances, called containers, virtual private servers (VPSs), or virtual environments (VEs). OpenVZ is similar to Solaris Containers and LXC.

The following is a timeline of virtualization development. In computing, virtualization is the use of a computer to simulate another computer. Through virtualization, a host simulates a guest by exposing virtual hardware devices, which may be done through software or by allowing access to a physical device connected to the machine.

In computing, virtualization refers to the act of creating a virtual version of something, including virtual computer hardware platforms, storage devices, and computer network resources.

The kernel is a computer program at the core of a computer's operating system that has complete control over everything in the system. It is the "portion of the operating system code that is always resident in memory", and facilitates interactions between hardware and software components. On most systems, the kernel is one of the first programs loaded on startup. It handles the rest of startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

An embedded hypervisor is a hypervisor that supports the requirements of embedded systems.

OS 2200 is the operating system for the Unisys ClearPath Dorado family of mainframe systems. The operating system kernel of OS 2200 is a lineal descendant of Exec 8 for the UNIVAC 1108. Documentation and other information on current and past Unisys systems can be found on the Unisys public support website.

In computing, a system virtual machine is a virtual machine that provides a complete system platform and supports the execution of a complete operating system (OS). These usually emulate an existing architecture, and are built with the purpose of either providing a platform to run programs where the real hardware is not available for use, or of having multiple instances of virtual machines leading to more efficient use of computing resources, both in terms of energy consumption and cost effectiveness, or both. A VM was originally defined by Popek and Goldberg as "an efficient, isolated duplicate of a real machine".