In mathematics and statistics, the arithmetic mean, arithmetic average, or just the mean or average is the sum of a collection of numbers divided by the count of numbers in the collection. The collection is often a set of results from an experiment, an observational study, or a survey. The term "arithmetic mean" is preferred in some mathematics and statistics contexts because it helps distinguish it from other types of means, such as geometric and harmonic.

A histogram is a visual representation of the distribution of quantitative data. To construct a histogram, the first step is to "bin" the range of values— divide the entire range of values into a series of intervals—and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) are adjacent and are typically of equal size.

In statistics, the standard deviation is a measure of the amount of variation of the values of a variable about its mean. A low standard deviation indicates that the values tend to be close to the mean of the set, while a high standard deviation indicates that the values are spread out over a wider range. The standard deviation is commonly used in the determination of what constitutes an outlier and what does not.

In statistics, the standard score is the number of standard deviations by which the value of a raw score is above or below the mean value of what is being observed or measured. Raw scores above the mean have positive standard scores, while those below the mean have negative standard scores.

A Z-test is any statistical test for which the distribution of the test statistic under the null hypothesis can be approximated by a normal distribution. Z-test tests the mean of a distribution. For each significance level in the confidence interval, the Z-test has a single critical value which makes it more convenient than the Student's t-test whose critical values are defined by the sample size. Both the Z-test and Student's t-test have similarities in that they both help determine the significance of a set of data. However, the z-test is rarely used in practice because the population deviation is difficult to determine.

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of a parameter for a hypothetical population, or to the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event happening. Effect sizes are a complement tool for statistical hypothesis testing, and play an important role in power analyses to assess the sample size required for new experiments. Effect size are fundamental in meta-analyses which aim to provide the combined effect size based on data from multiple studies. The cluster of data-analysis methods concerning effect sizes is referred to as estimation statistics.

Engineering tolerance is the permissible limit or limits of variation in:

- a physical dimension;

- a measured value or physical property of a material, manufactured object, system, or service;

- other measured values ;

- in engineering and safety, a physical distance or space (tolerance), as in a truck (lorry), train or boat under a bridge as well as a train in a tunnel ;

- in mechanical engineering, the space between a bolt and a nut or a hole, etc.

Student's t-test is a statistical test used to test whether the difference between the response of two groups is statistically significant or not. It is any statistical hypothesis test in which the test statistic follows a Student's t-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known. When the scaling term is estimated based on the data, the test statistic—under certain conditions—follows a Student's t distribution. The t-test's most common application is to test whether the means of two populations are significantly different. In many cases, a Z-test will yield very similar results to a t-test because the latter converges to the former as the size of the dataset increases.

The standard error (SE) of a statistic is the standard deviation of its sampling distribution or an estimate of that standard deviation. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals.

In probability theory and statistics, the coefficient of variation (CV), also known as normalized root-mean-square deviation (NRMSD), percent RMS, and relative standard deviation (RSD), is a standardized measure of dispersion of a probability distribution or frequency distribution. It is defined as the ratio of the standard deviation to the mean , and often expressed as a percentage ("%RSD"). The CV or RSD is widely used in analytical chemistry to express the precision and repeatability of an assay. It is also commonly used in fields such as engineering or physics when doing quality assurance studies and ANOVA gauge R&R, by economists and investors in economic models, and in psychology/neuroscience.

Sample size determination or estimation is the act of choosing the number of observations or replicates to include in a statistical sample. The sample size is an important feature of any empirical study in which the goal is to make inferences about a population from a sample. In practice, the sample size used in a study is usually determined based on the cost, time, or convenience of collecting the data, and the need for it to offer sufficient statistical power. In complex studies, different sample sizes may be allocated, such as in stratified surveys or experimental designs with multiple treatment groups. In a census, data is sought for an entire population, hence the intended sample size is equal to the population. In experimental design, where a study may be divided into different treatment groups, there may be different sample sizes for each group.

In statistical process control (SPC), the and R chart is a type of scheme, popularly known as control chart, used to monitor the mean and range of a normally distributed variables simultaneously, when samples are collected at regular intervals from a business or industrial process. It is often used to monitor the variables data but the performance of the and R chart may suffer when the normality assumption is not valid.

ANOVA gage repeatability and reproducibility is a measurement systems analysis technique that uses an analysis of variance (ANOVA) random effects model to assess a measurement system.

Natural process variation, sometimes just called process variation, is the statistical description of natural fluctuations in process outputs.

The root mean square deviation (RMSD) or root mean square error (RMSE) is either one of two closely related and frequently used measures of the differences between true or predicted values on the one hand and observed values or an estimator on the other. The deviation is typically simply a differences of scalars; it can also be generalized to the vector lengths of a displacement, as in the bioinformatics concept of root mean square deviation of atomic positions.

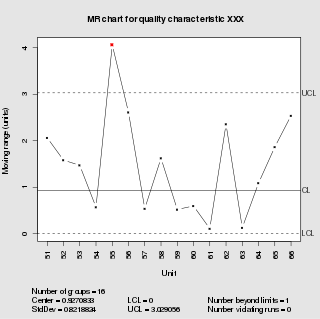

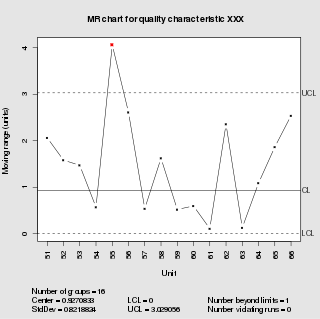

In statistical quality control, the individual/moving-range chart is a type of control chart used to monitor variables data from a business or industrial process for which it is impractical to use rational subgroups.

Tukey's range test, also known as Tukey's test, Tukey method, Tukey's honest significance test, or Tukey's HSDtest, is a single-step multiple comparison procedure and statistical test. It can be used to correctly interpret the statistical significance of the difference between means that have been selected for comparison because of their extreme values.

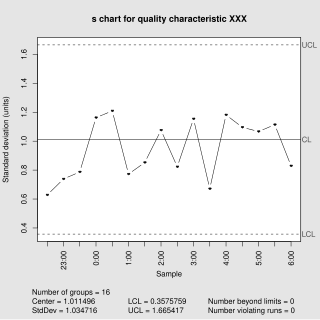

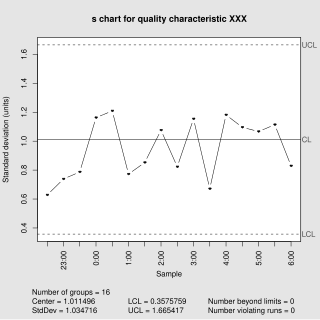

In statistical quality control, the and s chart is a type of control chart used to monitor variables data when samples are collected at regular intervals from a business or industrial process. This is connected to traditional statistical quality control (SQC) and statistical process control (SPC). However, Woodall noted that "I believe that the use of control charts and other monitoring methods should be referred to as “statistical process monitoring,” not “statistical process control (SPC).”"

Experimental uncertainty analysis is a technique that analyses a derived quantity, based on the uncertainties in the experimentally measured quantities that are used in some form of mathematical relationship ("model") to calculate that derived quantity. The model used to convert the measurements into the derived quantity is usually based on fundamental principles of a science or engineering discipline.

In statistical quality control, the EWMA chart is a type of control chart used to monitor either variables or attributes-type data using the monitored business or industrial process's entire history of output. While other control charts treat rational subgroups of samples individually, the EWMA chart tracks the exponentially-weighted moving average of all prior sample means. EWMA weights samples in geometrically decreasing order so that the most recent samples are weighted most highly while the most distant samples contribute very little.