Linear algebra is the branch of mathematics concerning linear equations such as $a_1 x_1 + \cdots + a_n x_n = b$.

In linear algebra, an orthogonal matrix, or orthonormal matrix, is a real square matrix whose columns and rows are orthonormal vectors.
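The orthonormality condition is equivalent to $Q^{\mathsf T} Q = I$, which is easy to test numerically. The following is a minimal sketch in plain Python; the helper names (`transpose`, `matmul`, `is_orthogonal`), the tolerance, and the sample matrices are illustrative assumptions, not part of any library.

```python
import math

def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def is_orthogonal(q, tol=1e-12):
    """Check that q is square and that Q^T Q = I up to a floating-point tolerance."""
    n = len(q)
    if any(len(row) != n for row in q):
        return False
    product = matmul(transpose(q), q)
    return all(
        abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
        for i in range(n)
        for j in range(n)
    )

theta = math.pi / 6  # arbitrary illustrative angle
rotation = [
    [math.cos(theta), -math.sin(theta)],
    [math.sin(theta), math.cos(theta)],
]
shear = [[1.0, 1.0], [0.0, 1.0]]  # columns are not orthonormal

print(is_orthogonal(rotation), is_orthogonal(shear))
```

A plane rotation passes the check, while the shear matrix does not, because its second column is neither a unit vector nor orthogonal to the first.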

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix. It generalizes the eigendecomposition of a square normal matrix with an orthonormal eigenbasis to any matrix. It is related to the polar decomposition.
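In symbols, the factorization is usually written as follows. The letters $M$, $U$, $\Sigma$, and $V$ are the conventional choices and are assumed here, since the text above does not fix a notation.

$$
M = U \Sigma V^{*}, \qquad U^{*}U = I_m, \quad V^{*}V = I_n,
$$

where $M$ is $m \times n$, and $\Sigma$ is an $m \times n$ rectangular diagonal matrix with non-negative real entries (the singular values). As a small worked instance, a real symmetric matrix with a negative eigenvalue has singular values equal to the absolute values of its eigenvalues:

$$
\begin{bmatrix} 3 & 0 \\ 0 & -2 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}
\begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}^{\mathsf T}.
$$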

In mathematics, a complex square matrix A is normal if it commutes with its conjugate transpose A*:

$$A A^{*} = A^{*} A$$

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can be either zero or nonzero. An example of a 2×2 diagonal matrix is $\begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}$, while an example of a 3×3 diagonal matrix is $\begin{bmatrix} 6 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 4 \end{bmatrix}$. An identity matrix of any size, or any multiple of it, is a diagonal matrix.

In linear algebra, the adjugate or classical adjoint of a square matrix is the transpose of its cofactor matrix. It is also occasionally known as adjunct matrix, though this nomenclature appears to have decreased in usage. The adjugate has sometimes been called the "adjoint", but today the "adjoint" of a matrix normally refers to its corresponding adjoint operator, which is its conjugate transpose.
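The definition translates directly into code: compute each cofactor from a minor determinant, then transpose. The sketch below uses naive cofactor expansion, which is fine for small matrices but far too slow for large ones; the function names and the sample matrix are illustrative assumptions.

```python
def minor(m, i, j):
    """Return m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]

def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))

def adjugate(m):
    """Transpose of the cofactor matrix of a square matrix."""
    n = len(m)
    if n == 1:
        return [[1]]
    cofactors = [
        [(-1) ** (i + j) * det(minor(m, i, j)) for j in range(n)]
        for i in range(n)
    ]
    return [list(col) for col in zip(*cofactors)]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

a = [[1, 2], [3, 4]]
adj_a = adjugate(a)
print(adj_a)             # [[4, -2], [-3, 1]]
print(matmul(a, adj_a))  # [[-2, 0], [0, -2]], i.e. det(a) times the identity
```

The second printed matrix illustrates the standard identity $A \operatorname{adj}(A) = \det(A)\, I$, which is what makes the adjugate useful for expressing the inverse.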

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition of the proportion of zero-valued elements a matrix needs to qualify as sparse, but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements is sometimes referred to as the sparsity of the matrix.
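As a rough illustration of both ideas, the sketch below computes the sparsity ratio of a small matrix and stores only its non-zero entries in a dictionary keyed by `(row, column)`, one of several possible sparse layouts. The function names and the sample matrix are assumptions made for the example.

```python
def sparsity(m):
    """Number of zero-valued elements divided by the total number of elements."""
    total = sum(len(row) for row in m)
    zeros = sum(1 for row in m for x in row if x == 0)
    return zeros / total

def to_dok(m):
    """Dictionary-of-keys storage: keep only the non-zero entries."""
    return {
        (i, j): x
        for i, row in enumerate(m)
        for j, x in enumerate(row)
        if x != 0
    }

matrix = [
    [5, 0, 0, 0],
    [0, 8, 0, 0],
    [0, 0, 3, 0],
    [0, 6, 0, 0],
]

print(sparsity(matrix))  # 0.75
print(to_dok(matrix))    # {(0, 0): 5, (1, 1): 8, (2, 2): 3, (3, 1): 6}
```

Here the 4×4 matrix has four non-zero entries, matching the common criterion that the non-zero count is about equal to the number of rows or columns.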

In power engineering, the power-flow study, or load-flow study, is a numerical analysis of the flow of electric power in an interconnected system. A power-flow study usually uses simplified notations such as a one-line diagram and per-unit system, and focuses on various aspects of AC power parameters, such as voltages, voltage angles, real power and reactive power. It analyzes the power systems in normal steady-state operation.

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the linear subspace of the domain of the map which is mapped to the zero vector. That is, given a linear map L : V → W between two vector spaces V and W, the kernel of L is the vector space of all elements v of V such that L(v) = 0, where 0 denotes the zero vector in W, or more symbolically:

$$\ker(L) = \{\, v \in V \mid L(v) = \mathbf{0} \,\}$$

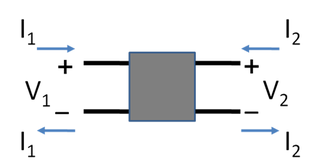

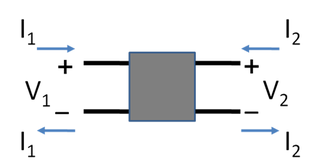

A two-port network is an electrical network (circuit) or device with two pairs of terminals to connect to external circuits. Two terminals constitute a port if the currents applied to them satisfy the essential requirement known as the port condition: the electric current entering one terminal must equal the current emerging from the other terminal on the same port. The ports constitute interfaces where the network connects to other networks, the points where signals are applied or outputs are taken. In a two-port network, often port 1 is considered the input port and port 2 is considered the output port.

In mathematics, particularly matrix theory, a band matrix or banded matrix is a sparse matrix whose non-zero entries are confined to a diagonal band, comprising the main diagonal and zero or more diagonals on either side.
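The width of the band can be measured by scanning for the non-zero entry farthest below and farthest above the main diagonal. The following sketch assumes a dense list-of-rows input and uses a hypothetical helper name, `bandwidths`.

```python
def bandwidths(m):
    """Return (lower, upper): how far non-zero entries reach below and above the diagonal."""
    lower = upper = 0
    for i, row in enumerate(m):
        for j, x in enumerate(row):
            if x != 0:
                lower = max(lower, i - j)  # distance below the main diagonal
                upper = max(upper, j - i)  # distance above the main diagonal
    return lower, upper

tridiagonal = [
    [2, 1, 0, 0],
    [1, 2, 1, 0],
    [0, 1, 2, 1],
    [0, 0, 1, 2],
]
diagonal = [[4, 0], [0, 9]]

print(bandwidths(tridiagonal))  # (1, 1)
print(bandwidths(diagonal))     # (0, 0)
```

A diagonal matrix is the case with zero extra diagonals on either side, and a tridiagonal matrix has exactly one on each side.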

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by λ, is the factor by which the eigenvector is scaled.
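The defining condition, that applying the transformation only rescales the vector, can be checked directly for a candidate pair. This sketch verifies the condition rather than computing eigenvalues; the helper names and the sample matrix are illustrative assumptions.

```python
def matvec(a, v):
    """Apply the matrix a (list of rows) to the vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]

def is_eigenpair(a, v, lam, tol=1e-12):
    """True if v is nonzero and a @ v equals lam * v up to tol."""
    if all(x == 0 for x in v):
        return False  # the zero vector is excluded by definition
    return all(abs(y - lam * x) <= tol for y, x in zip(matvec(a, v), v))

a = [[2, 1], [1, 2]]

print(is_eigenpair(a, [1, 1], 3))   # True: a @ [1, 1] = [3, 3]
print(is_eigenpair(a, [1, -1], 1))  # True: a @ [1, -1] = [1, -1]
print(is_eigenpair(a, [1, 0], 2))   # False: a @ [1, 0] = [2, 1]
```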

In mathematics, a logarithm of a matrix is another matrix such that the matrix exponential of the latter matrix equals the original matrix. It is thus a generalization of the scalar logarithm and in some sense an inverse function of the matrix exponential. Not all matrices have a logarithm, and those matrices that do have a logarithm may have more than one. The study of logarithms of matrices leads to Lie theory, since when a matrix has a logarithm it is an element of a Lie group and the logarithm is the corresponding element of the vector space of the Lie algebra.
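A standard worked example of non-uniqueness is the plane rotation. The symbols $A$, $B_k$, and $\theta$ are chosen here for illustration. A matrix $B$ is a logarithm of $A$ when $e^{B} = A$, and for every integer $k$

$$
B_k = \begin{bmatrix} 0 & -(\theta + 2\pi k) \\ \theta + 2\pi k & 0 \end{bmatrix}
\quad\Longrightarrow\quad
e^{B_k} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix},
$$

so a single rotation matrix has infinitely many logarithms. Here the rotation belongs to the Lie group $SO(2)$ and each $B_k$ is a skew-symmetric matrix, an element of the corresponding Lie algebra.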

In power engineering, the nodal admittance matrix (also called the Y matrix or Ybus) is an N × N matrix describing a linear power system with N buses. It represents the nodal admittance of the buses in a power system. In realistic systems, which contain thousands of buses, the Y matrix is quite sparse, because each bus in a real power system is usually connected to only a few other buses through transmission lines. The Y matrix is also one of the data requirements needed to formulate a power-flow study.
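A minimal sketch of how such a matrix can be assembled is shown below. It assumes a simplified network in which each branch is a pure series impedance, with no shunt elements, line charging, or transformer taps; the function name `build_ybus`, the 0-based bus numbering, and the per-unit branch values are all hypothetical.

```python
def build_ybus(n_buses, branches):
    """Assemble a nodal admittance matrix from (from_bus, to_bus, series_impedance) tuples.

    Simplifying assumption: series impedances only, no shunt admittances.
    """
    y = [[0j] * n_buses for _ in range(n_buses)]
    for i, j, z in branches:
        admittance = 1 / z
        y[i][i] += admittance  # diagonal: sum of admittances connected to the bus
        y[j][j] += admittance
        y[i][j] -= admittance  # off-diagonal: negative of the branch admittance
        y[j][i] -= admittance
    return y

# Hypothetical 3-bus chain 0 - 1 - 2 with purely reactive lines (per unit).
branches = [(0, 1, 0.1j), (1, 2, 0.2j)]
ybus = build_ybus(3, branches)

for row in ybus:
    print(row)
```

Buses 0 and 2 are not directly connected, so the corresponding off-diagonal entries stay zero. In a large network most entries are zero for the same reason, which is the source of the sparsity described above.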

In numerical analysis and linear algebra, lower–upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix. The product sometimes includes a permutation matrix as well. LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU decomposition, and it is also a key step when inverting a matrix or computing the determinant of a matrix. The LU decomposition was introduced by the Polish mathematician Tadeusz Banachiewicz in 1938.
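The link to Gaussian elimination can be seen in a short sketch: the multipliers used to eliminate entries below each pivot become the entries of the lower triangular factor. The version below is a Doolittle-style factorization without pivoting, so it fails on a zero pivot, which is exactly where a permutation matrix would be needed. The function name and the sample matrix are illustrative assumptions.

```python
def lu_decompose(a):
    """Factor a square matrix as lower @ upper, with a unit diagonal on lower (no pivoting)."""
    n = len(a)
    lower = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    upper = [[float(x) for x in row] for row in a]
    for k in range(n):
        if upper[k][k] == 0:
            raise ZeroDivisionError("zero pivot; a row permutation would be needed")
        for i in range(k + 1, n):
            factor = upper[i][k] / upper[k][k]  # Gaussian elimination multiplier
            lower[i][k] = factor
            for j in range(k, n):
                upper[i][j] -= factor * upper[k][j]
    return lower, upper

a = [[4, 3], [6, 3]]
lower, upper = lu_decompose(a)
print(lower)  # [[1.0, 0.0], [1.5, 1.0]]
print(upper)  # [[4.0, 3.0], [0.0, -1.5]]
```

Because `lower` has a unit diagonal, the determinant of `a` is the product of the diagonal of `upper`, here 4 × (−1.5) = −6.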

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.
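In the usual notation (the symbols $A$, $Q$, and $\Lambda$ are assumed here), the factorization reads

$$
A = Q \Lambda Q^{-1},
$$

where the columns of $Q$ are eigenvectors of $A$ and $\Lambda$ is the diagonal matrix of the corresponding eigenvalues. A small real symmetric instance, for which $Q$ can be chosen orthogonal so that $Q^{-1} = Q^{\mathsf T}$:

$$
\begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}
=
\frac{1}{\sqrt{2}}\begin{bmatrix} 1 & 1 \\ 1 & -1 \end{bmatrix}
\begin{bmatrix} 3 & 0 \\ 0 & 1 \end{bmatrix}
\frac{1}{\sqrt{2}}\begin{bmatrix} 1 & 1 \\ 1 & -1 \end{bmatrix}^{\mathsf T}.
$$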

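In the mathematical field of linear algebra, an arrowhead matrix is a square matrix containing zeros in all entries except for the first row, first column, and main diagonal; these entries can be any number. In other words, the matrix has the form

$$
A = \begin{bmatrix}
* & * & * & * & * \\
* & * & 0 & 0 & 0 \\
* & 0 & * & 0 & 0 \\
* & 0 & 0 & * & 0 \\
* & 0 & 0 & 0 & *
\end{bmatrix},
$$

where each $*$ denotes an arbitrary entry.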

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object.

In control system theory, and various branches of engineering, a transfer function matrix, or just transfer matrix is a generalisation of the transfer functions of single-input single-output (SISO) systems to multiple-input and multiple-output (MIMO) systems. The matrix relates the outputs of the system to its inputs. It is a particularly useful construction for linear time-invariant (LTI) systems because it can be expressed in terms of the s-plane.
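Written out, with notation assumed here for illustration, a system with $r$ inputs and $m$ outputs has an $m \times r$ transfer matrix $G(s)$ relating the Laplace transforms of the input and output vectors:

$$
\mathbf{Y}(s) = G(s)\,\mathbf{U}(s),
\qquad
G(s) = \begin{bmatrix}
G_{11}(s) & \cdots & G_{1r}(s) \\
\vdots & \ddots & \vdots \\
G_{m1}(s) & \cdots & G_{mr}(s)
\end{bmatrix},
$$

where $G_{ij}(s)$ is the ordinary SISO transfer function from input $j$ to output $i$. For $m = r = 1$ this reduces to the scalar transfer function.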

Dynamic Substructuring (DS) is an engineering tool used to model and analyse the dynamics of mechanical systems by means of their components or substructures. Using the dynamic substructuring approach, one can analyse the dynamic behaviour of substructures separately and later calculate the assembled dynamics using coupling procedures. Dynamic substructuring has several advantages over the analysis of the fully assembled system: