Hugo de Garis is a retired researcher in the sub-field of artificial intelligence (AI) known as evolvable hardware. He became known in the 1990s for his research on the use of genetic algorithms to evolve artificial neural networks using three-dimensional cellular automata inside field programmable gate arrays. He claimed that this approach would enable the creation of what he termed "artificial brains", which would quickly surpass human levels of intelligence.

The technological singularity—or simply the singularity—is a hypothetical point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization. According to the most popular version of the singularity hypothesis, called intelligence explosion, an upgradable intelligent agent will eventually enter a "runaway reaction" of self-improvement cycles, each new and more intelligent generation appearing more and more rapidly, causing an "explosion" in intelligence and resulting in a powerful superintelligence that qualitatively far surpasses all human intelligence.
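The "runaway reaction" described above can be made concrete with a deliberately simple toy model. This is a minimal sketch under hypothetical assumptions that do not come from the text: each generation multiplies capability by a fixed `gain`, and each generation builds its successor in `1/speedup` of the time the previous cycle took. Under those assumptions the cycle times form a geometric series, so total elapsed time approaches a finite limit while capability keeps growing. This illustrates why "more and more rapidly" suggests an "explosion"; it is not a forecast and not any particular author's model.

```python
from typing import List, Tuple


def toy_explosion(first_cycle_time: float, speedup: float, gain: float,
                  generations: int) -> List[Tuple[int, float, float]]:
    """Return (generation, elapsed time, capability) for a toy self-improvement loop.

    Hypothetical assumptions: each generation multiplies capability by `gain`
    and finishes its successor in 1/speedup of the previous cycle's time.
    """
    if speedup <= 1 or gain <= 1:
        raise ValueError("speedup and gain must both exceed 1")
    history = []
    elapsed, cycle, capability = 0.0, first_cycle_time, 1.0
    for gen in range(1, generations + 1):
        elapsed += cycle          # this generation's improvement cycle completes
        capability *= gain        # the new generation is more capable
        history.append((gen, elapsed, capability))
        cycle /= speedup          # the next cycle runs faster
    return history


def time_bound(first_cycle_time: float, speedup: float) -> float:
    """Limit of elapsed time as generations grow without bound.

    Sum of t0 * (1/s)**n for n = 0, 1, 2, ... equals t0 * s / (s - 1).
    """
    return first_cycle_time * speedup / (speedup - 1)
```

For example, with a hypothetical first cycle of 10 years and `speedup=2`, `toy_explosion(10.0, 2.0, 2.0, 30)` never reaches the 20-year bound returned by `time_bound(10.0, 2.0)`, even though capability doubles at every step.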

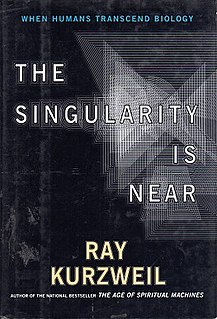

The Age of Spiritual Machines: When Computers Exceed Human Intelligence is a non-fiction book by inventor and futurist Ray Kurzweil about artificial intelligence and the future course of humanity. First published in hardcover on January 1, 1999 by Viking, it has received attention from The New York Times, The New York Review of Books and The Atlantic. In the book Kurzweil outlines his vision for how technology will progress during the 21st century.

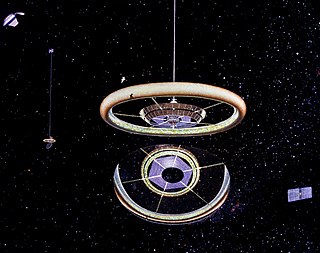

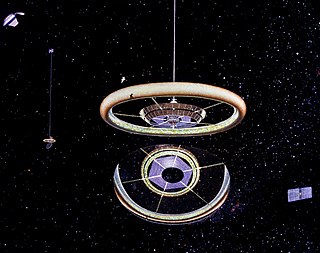

A space habitat is a more advanced form of living quarters than a space station or habitation module, in that it is intended as a permanent settlement or green habitat rather than as a simple way-station or other specialized facility. No space habitat has been constructed yet, but many design concepts, with varying degrees of realism, have come both from engineers and from science-fiction authors.

The Stanford torus is a proposed NASA design for a space habitat capable of housing 10,000 to 140,000 permanent residents.

Hans Peter Moravec is an adjunct faculty member at the Robotics Institute of Carnegie Mellon University in Pittsburgh, USA. He is known for his work on robotics, artificial intelligence, and writings on the impact of technology. Moravec is also a futurist, and many of his publications and predictions focus on transhumanism. Moravec developed techniques in computer vision for determining the region of interest (ROI) in a scene.
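The paragraph does not name a specific technique, but one widely cited example attributed to Moravec is his "interest operator", often called the Moravec corner detector. The idea: slide a small window a single pixel in a few directions and measure how much the window contents change (sum of squared differences). Flat areas barely change, edges do not change when shifted along the edge, and corners change in every direction, so the minimum change over all shifts scores how "interesting" a point is. The sketch below is a minimal pure-Python illustration of that idea; the function names, window size, and threshold are illustrative choices, not Moravec's original parameters.

```python
from typing import List, Tuple

Image = List[List[float]]

# Four shift directions, as (dx, dy): right, down-right, down, down-left.
SHIFTS: Tuple[Tuple[int, int], ...] = ((1, 0), (1, 1), (0, 1), (-1, 1))


def moravec_interest(img: Image, window: int = 1) -> List[List[float]]:
    """Return a map of interest values: the minimum SSD over all shifts.

    Border pixels whose window (or shifted window) would leave the image
    are left at 0.0.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    margin = window + 1  # room for the window plus a one-pixel shift
    for y in range(margin, h - margin):
        for x in range(margin, w - margin):
            ssds = []
            for dx, dy in SHIFTS:
                ssd = 0.0
                for v in range(-window, window + 1):
                    for u in range(-window, window + 1):
                        diff = img[y + v + dy][x + u + dx] - img[y + v][x + u]
                        ssd += diff * diff
                ssds.append(ssd)
            # A corner changes in every direction, so keep the smallest change.
            out[y][x] = min(ssds)
    return out


def moravec_corners(img: Image, threshold: float,
                    window: int = 1) -> List[Tuple[int, int]]:
    """Return (row, col) points above threshold that are local maxima."""
    interest = moravec_interest(img, window)
    h, w = len(img), len(img[0])
    points = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            val = interest[y][x]
            if val <= threshold:
                continue
            neighbours = [interest[y + j][x + i]
                          for j in (-1, 0, 1) for i in (-1, 0, 1)
                          if (i, j) != (0, 0)]
            # Non-maximum suppression: keep only the strongest response.
            if all(val >= n for n in neighbours):
                points.append((y, x))
    return points
```

On a synthetic image of a bright square on a dark background, the square's corners score above zero, while pixels on its straight edges and in flat regions score zero. A practical implementation would usually operate on array types such as NumPy arrays and tune the window and threshold to the image.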

Friendly artificial intelligence refers to hypothetical artificial general intelligence (AGI) that would have a positive (benign) effect on humanity, or at least align with human interests or contribute to the improvement of the human species. It is a part of the ethics of artificial intelligence and is closely related to machine ethics. While machine ethics is concerned with how an artificially intelligent agent should behave, friendly artificial intelligence research focuses on how to bring about this behaviour in practice and on ensuring that it is adequately constrained.

Singularitarianism is a movement defined by the belief that a technological singularity—the creation of superintelligence—will likely happen in the medium future, and that deliberate action ought to be taken to ensure that the singularity benefits humans.

A superintelligence is a hypothetical agent that possesses intelligence far surpassing that of the brightest and most gifted human minds. "Superintelligence" may also refer to a property of problem-solving systems, whether or not these high-level intellectual competencies are embodied in agents that act in the world. A superintelligence may or may not be created by an intelligence explosion, and may or may not be associated with a technological singularity.

Gerard Kitchen O'Neill was an American physicist and space activist. As a faculty member at Princeton University, he invented a device called the particle storage ring for high-energy physics experiments. Later, he invented a magnetic launcher called the mass driver. In the 1970s, he developed a plan to build human settlements in outer space, including a space habitat design known as the O'Neill cylinder. He founded the Space Studies Institute, an organization devoted to funding research into space manufacturing and colonization.

The Singularity Is Near: When Humans Transcend Biology is a 2005 non-fiction book about artificial intelligence and the future of humanity by inventor and futurist Ray Kurzweil.

An AI takeover is a hypothetical scenario in which artificial intelligence (AI) becomes the dominant form of intelligence on Earth, as computer programs or robots effectively take control of the planet away from the human species. Possible scenarios include replacement of the entire human workforce, takeover by a superintelligent AI, and the popular notion of a robot uprising. Some public figures, such as Stephen Hawking and Elon Musk, have advocated research into precautionary measures to ensure that future superintelligent machines remain under human control.

In futures studies and the history of technology, accelerating change is a perceived increase in the rate of technological change throughout history, which may suggest faster and more profound change in the future and may or may not be accompanied by equally profound social and cultural change.

The High Frontier: Human Colonies in Space is a 1976 book by Gerard K. O'Neill, a road map for what the United States might do in outer space after the Apollo program, the drive to place a human on the Moon and beyond. It envisions large human-occupied habitats in the Earth-Moon system, especially near stable Lagrangian points. Three designs are proposed: Island One, Island Two, and Island Three. These would be built from raw materials taken from the lunar surface, launched into space by a mass driver, and from near-Earth asteroids. The habitats would spin to provide simulated gravity and would be lit and powered by the Sun. Solar power satellites were proposed as a possible industry to support the habitats.
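Spin gravity follows from centripetal acceleration: a point on the rim of a habitat of radius r rotating at angular velocity ω experiences an apparent acceleration a = ω²r, so the spin needed for a target acceleration is ω = √(a/r), with rotation period T = 2π/ω. The sketch below computes both. The function names and the 4,000 m radius used in the example are illustrative assumptions, not figures from the book.

```python
import math
from typing import Tuple

STANDARD_GRAVITY = 9.80665  # standard gravity, m/s^2


def spin_for_gravity(radius_m: float, target_g: float = 1.0) -> Tuple[float, float]:
    """Return (angular velocity in rad/s, rotation period in s) giving target_g at radius_m."""
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    accel = target_g * STANDARD_GRAVITY
    omega = math.sqrt(accel / radius_m)  # from a = omega**2 * r
    period = 2 * math.pi / omega         # time for one full rotation
    return omega, period


def felt_gravity(radius_m: float, period_s: float) -> float:
    """Return the apparent gravity, in multiples of g, at radius_m for a given period."""
    omega = 2 * math.pi / period_s
    return omega ** 2 * radius_m / STANDARD_GRAVITY
```

For a hypothetical rim radius of 4,000 m, `spin_for_gravity(4000.0)` gives a period of a little over two minutes per turn. Because ω scales as 1/√r, larger habitats can spin more slowly for the same felt gravity.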

"Good Taste" is a science fiction short story by American writer Isaac Asimov. It first appeared in a limited edition book of the same name by Apocalypse Press in 1976. It subsequently appeared in Asimov's Science Fiction and in the 1983 collection The Winds of Change and Other Stories.

Physics of the Future: How Science Will Shape Human Destiny and Our Daily Lives by the Year 2100 is a 2011 book by theoretical physicist Michio Kaku, author of Hyperspace and Physics of the Impossible. In it Kaku speculates about possible future technological development over the next 100 years. He interviews notable scientists about their fields of research and lays out his vision of coming developments in medicine, computing, artificial intelligence, nanotechnology, and energy production. The book was on the New York Times Bestseller List for five weeks.

Machine ethics is a part of the ethics of artificial intelligence concerned with adding or ensuring moral behaviors of man-made machines that use artificial intelligence, otherwise known as artificially intelligent agents. Machine ethics differs from other ethical fields related to engineering and technology. It should not be confused with computer ethics, which focuses on human use of computers. It should also be distinguished from the philosophy of technology, which concerns itself with the broader social effects of technology.

An O'Neill cylinder is a space settlement concept proposed by American physicist Gerard K. O'Neill in his 1976 book The High Frontier: Human Colonies in Space. O'Neill proposed the colonization of space for the 21st century, using materials extracted from the Moon and later from asteroids.

Existential risk from artificial general intelligence is the hypothesis that substantial progress in artificial general intelligence (AGI) could someday result in human extinction or some other unrecoverable global catastrophe. It is argued that the human species currently dominates other species because the human brain has some distinctive capabilities that other animals lack. If AI surpasses humanity in general intelligence and becomes "superintelligent", then it could become difficult or impossible for humans to control. Just as the fate of the mountain gorilla depends on human goodwill, so might the fate of humanity depend on the actions of a future machine superintelligence.