Related Research Articles

Marquis reagent is used as a simple spot-test to presumptively identify alkaloids as well as other compounds. It is composed of a mixture of formaldehyde and concentrated sulfuric acid, which is dripped onto the substance being tested. The United States Department of Justice method for producing the reagent is to add 100 mL of concentrated (95–98%) sulfuric acid to 5 mL of 40% formaldehyde. Different compounds produce different color reactions. Methanol may be added to slow down the reaction, allowing the color change to be observed more easily.

Chemometrics is the science of extracting information from chemical systems by data-driven means. Chemometrics is inherently interdisciplinary, using methods frequently employed in core data-analytic disciplines such as multivariate statistics, applied mathematics, and computer science, in order to address problems in chemistry, biochemistry, medicine, biology and chemical engineering. In this way, it mirrors other interdisciplinary fields, such as psychometrics and econometrics.

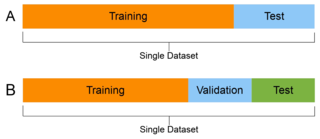

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. It is mainly used in settings where the goal is prediction and one wants to estimate how accurately a predictive model will perform in practice. In a prediction problem, a model is usually given a dataset of known data on which training is run (the training dataset) and a dataset of unknown, or first-seen, data against which the model is tested. The goal of cross-validation is to test the model's ability to predict new data that were not used in estimating it, in order to flag problems such as overfitting or selection bias and to give insight into how the model will generalize to an independent dataset.
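
A minimal k-fold cross-validation sketch in plain Python, assuming squared error as the score and a least-squares straight line as the model. The helpers `k_fold_indices`, `cross_validate`, `fit_line`, and `predict_line` are hypothetical names for illustration, not any particular library's API (scikit-learn, for example, provides its own `KFold` class). Each fold is held out once while the model is trained on the rest, which is the "data not used in estimating it" idea described above.

```python
import random
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(idx[start:start + size])
        start += size
    return folds


def cross_validate(xs, ys, fit, predict, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold; return one mean squared error per fold."""
    scores = []
    for test in k_fold_indices(len(xs), k, seed):
        held_out = set(test)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in test]
        scores.append(mean(errors))
    return scores


def fit_line(xs, ys):
    """Least-squares straight line; returns (slope, intercept)."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept


# Toy data lying exactly on y = 2x + 1.
xs = list(range(20))
ys = [2 * x + 1 for x in xs]
scores = cross_validate(xs, ys, fit_line, predict_line, k=5)
```

Because the toy data lie exactly on a line, every fold's error is essentially zero; on real data, the spread of the per-fold scores is itself a useful signal of how stable the model is.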

Quantitative structure–activity relationship models are regression or classification models used in the chemical and biological sciences and engineering. Like other regression models, QSAR regression models relate a set of "predictor" variables (X) to the potency of the response variable (Y), while classification QSAR models relate the predictor variables to a categorical value of the response variable.
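
As a minimal sketch, assuming a linear QSAR regression model fitted by ordinary least squares (one of many possible regression methods, not one the text prescribes), the predictor matrix X and the potency vector Y can be related as below. The function names `fit_ols` and `predict` and the two toy descriptors are hypothetical; the data are constructed from a known linear rule so the fitted coefficients can be checked.

```python
def fit_ols(X, y):
    """Ordinary least squares with an intercept, solved via the normal equations.

    X is a list of rows (one per compound), each a list of descriptor values;
    y is the list of measured potencies. Returns [b0, b1, ..., bp], where b0
    is the intercept and b1..bp are the descriptor coefficients.
    """
    rows = [[1.0] + [float(v) for v in r] for r in X]  # prepend an intercept column
    p = len(rows[0])
    # Augmented matrix [X^T X | X^T y] of the normal equations.
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("descriptors are collinear: X^T X is singular")
        for k in range(p):
            if k != col:
                factor = A[k][col] / A[col][col]
                A[k] = [a - factor * b for a, b in zip(A[k], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def predict(coefs, x):
    """Predicted potency for one compound's descriptor vector x."""
    return coefs[0] + sum(b * xi for b, xi in zip(coefs[1:], x))


# Toy data generated from potency = 1 + 2*d1 - 0.5*d2 (hypothetical descriptors d1, d2).
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
Y = [2.0, 4.5, 5.0, 7.5, 8.5]
coefs = fit_ols(X, Y)
```

A classification QSAR model would replace the continuous potency with a category label and use a classifier instead of this regression.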

High-throughput screening (HTS) is a method for scientific experimentation especially used in drug discovery and relevant to the fields of biology and chemistry. Using robotics, data processing/control software, liquid handling devices, and sensitive detectors, high-throughput screening allows a researcher to quickly conduct millions of chemical, genetic, or pharmacological tests. Through this process one can rapidly identify active compounds, antibodies, or genes that modulate a particular biomolecular pathway. The results of these experiments provide starting points for drug design and for understanding the role, or lack of interaction, of a particular biological target.

Forensic toxicology is the use of toxicology and disciplines such as analytical chemistry, pharmacology and clinical chemistry to aid medical or legal investigation of death, poisoning, and drug use. The primary concern for forensic toxicology is not the legal outcome of the toxicological investigation or the technology used, but rather obtaining and interpreting results. Toxicological analysis can be performed on various kinds of samples. A forensic toxicologist must consider the context of an investigation, in particular any physical symptoms recorded, and any evidence collected at a crime scene that may narrow the search, such as pill bottles, powders, trace residue, and any available chemicals. Provided with this information and samples with which to work, the forensic toxicologist must determine which toxic substances are present, in what concentrations, and the probable effect of those chemicals on the person.

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data.

Data analysis is a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In business, data analysis helps make decisions more scientific and helps organizations operate more effectively.

Metaxalone, sold under the brand name Skelaxin, is a medication used to relax muscles and relieve pain caused by strains, sprains, and other musculoskeletal conditions. Its exact mechanism of action is not known, but its effects may be due to general central nervous system depression. It is considered a moderately strong muscle relaxant with a relatively low incidence of side effects.

AOAC International is a 501(c) non-profit scientific association headquartered in Rockville, Maryland. It was founded in 1884 as the Association of Official Agricultural Chemists (AOAC) and became AOAC International in 1991. It publishes standardized chemical analysis methods designed to increase confidence in the results of chemical and microbiological analyses. Government agencies and civil organizations often require that laboratories use official AOAC methods.

Cleaning validation is the methodology used to ensure that a cleaning process removes residues from a piece of equipment: chemical residues of the active, inactive, or detergent ingredients of the product manufactured in it, residues of the cleaning aids used in the cleaning process, and microbial contamination. All residues must be reduced to predetermined levels so that waste from the previous product does not compromise the quality of the next product manufactured, or of any future product made using the equipment. Cleaning validation prevents cross-contamination and is a good manufacturing practice requirement.

Data collection is the process of gathering and measuring information on targeted variables in an established system, which then enables one to answer relevant questions and evaluate outcomes. Data collection is a research component in all fields of study, including the physical and social sciences, humanities, and business. While methods vary by discipline, the emphasis on ensuring accurate and honest collection remains the same. The goal of all data collection is to capture quality evidence that allows analysis to lead to convincing and credible answers to the questions that have been posed. Data collection and validation consist of four steps when they involve taking a census and seven steps when they involve sampling.

An occupational exposure limit is an upper limit on the acceptable concentration of a hazardous substance in workplace air for a particular material or class of materials. It is typically set by competent national authorities and enforced by legislation to protect occupational safety and health. It is an important tool in risk assessment and in the management of activities involving handling of dangerous substances. There are many dangerous substances for which there are no formal occupational exposure limits. In these cases, hazard banding or control banding strategies can be used to ensure safe handling.

Verification and validation are independent procedures that are used together for checking that a product, service, or system meets requirements and specifications and that it fulfills its intended purpose. These are critical components of a quality management system such as ISO 9000. The words "verification" and "validation" are sometimes preceded by "independent", indicating that the verification and validation are to be performed by a disinterested third party. "Independent verification and validation" can be abbreviated as "IV&V".

In statistics, regression validation is the process of deciding whether the numerical results quantifying hypothesized relationships between variables, obtained from regression analysis, are acceptable as descriptions of the data. The validation process can involve analyzing the goodness of fit of the regression, analyzing whether the regression residuals are random, and checking whether the model's predictive performance deteriorates substantially when applied to data that were not used in model estimation.
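
The three checks described above (goodness of fit, randomness of residuals, and predictive performance on data not used in estimation) can be sketched in plain Python as follows, assuming a simple straight-line regression. All function names are hypothetical, and `sign_runs` only counts runs of same-signed residuals (the statistic a runs test uses) without computing a significance level.

```python
from math import sqrt
from statistics import mean


def fit_line(xs, ys):
    """Least-squares straight line; returns (slope, intercept)."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def residuals(model, xs, ys):
    slope, intercept = model
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]


def r_squared(model, xs, ys):
    """Goodness of fit: 1 - SS_res / SS_tot."""
    my = mean(ys)
    ss_res = sum(r * r for r in residuals(model, xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def sign_runs(res):
    """Number of runs of same-signed residuals, in data order (zeros skipped).
    Far fewer runs than chance would produce hints at systematic misfit."""
    signs = [r > 0 for r in res if r != 0]
    if not signs:
        return 0
    return 1 + sum(a != b for a, b in zip(signs, signs[1:]))


def rmse(model, xs, ys):
    """Root-mean-square prediction error."""
    res = residuals(model, xs, ys)
    return sqrt(sum(r * r for r in res) / len(res))


# Toy data that are really quadratic, fitted with a straight line.
xs_train, xs_new = list(range(10)), list(range(10, 15))
ys_train = [x ** 2 for x in xs_train]
ys_new = [x ** 2 for x in xs_new]
model = fit_line(xs_train, ys_train)
```

Here R² is about 0.93, which looks acceptable on its own, yet the residuals form only three runs (positive, negative, positive) and the RMSE on the new x values is about 54 versus about 7.3 on the training data: the residual and out-of-sample checks expose a misfit that goodness of fit alone hides.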

Quality by design (QbD) is a concept first outlined by quality expert Joseph M. Juran in publications, most notably Juran on Quality by Design. Designing for quality and innovation is one of the three universal processes of the Juran Trilogy, in which Juran describes what is required to achieve breakthroughs in new products, services, and processes. Juran believed that quality could be planned, and that most quality crises and problems relate to the way in which quality was planned.

A variable pathlength cell is a sample holder used for ultraviolet–visible spectroscopy or infrared spectroscopy that has a path length that can be varied to change the absorbance without changing the sample concentration.
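
Why changing the path length alters absorbance while the concentration stays the same follows from the Beer–Lambert law, which the text does not state and which is assumed here to hold (dilute sample, monochromatic light). With absorbance $A$, molar absorptivity $\varepsilon$, path length $\ell$, and concentration $c$, a short worked example using illustrative numbers:

```latex
A = \varepsilon \, \ell \, c
\qquad\Longrightarrow\qquad
\frac{A_2}{A_1} = \frac{\ell_2}{\ell_1} \quad (\varepsilon,\ c \text{ fixed})

% Illustrative values: A_1 = 1.6 at \ell_1 = 1.0 cm, cell shortened to \ell_2 = 0.25 cm
A_2 = 1.6 \times \frac{0.25\ \text{cm}}{1.0\ \text{cm}} = 0.4
```

Shortening the path thus brings an overly absorbing sample back into a convenient measuring range without diluting it.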

Analytical quality control, commonly shortened to AQC, refers to all those processes and procedures designed to ensure that the results of laboratory analysis are consistent, comparable, accurate and within specified limits of precision. Constituents submitted to the analytical laboratory must be accurately described to avoid faulty interpretations, approximations, or incorrect results. The qualitative and quantitative data generated from the laboratory can then be used for decision making. In the chemical sense, quantitative analysis refers to the measurement of the amount or concentration of an element or chemical compound in a matrix that differs from the element or compound. Fields such as industry, medicine, and law enforcement can make use of AQC.
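
One common way laboratories check that results stay within specified limits of precision is a Shewhart-style control chart for a reference material analyzed alongside samples. The sketch below assumes the widely used convention of warning limits at ±2 standard deviations and action limits at ±3 standard deviations around the historical mean; the function names and QC values are hypothetical, and real AQC schemes may apply further rules.

```python
from statistics import mean, stdev


def control_limits(history):
    """Centre line plus 2-SD (warning) and 3-SD (action) limits from past QC results."""
    m, s = mean(history), stdev(history)
    return {
        "mean": m,
        "warning": (m - 2 * s, m + 2 * s),
        "action": (m - 3 * s, m + 3 * s),
    }


def classify(result, limits):
    """Label a new QC result against the previously computed limits."""
    low_a, high_a = limits["action"]
    low_w, high_w = limits["warning"]
    if not low_a <= result <= high_a:
        return "action"
    if not low_w <= result <= high_w:
        return "warning"
    return "in control"


# Hypothetical past results for a reference material with a nominal value of 10.0.
history = [9.8, 10.0, 10.2, 9.9, 10.1]
limits = control_limits(history)
```

With this history the warning band is roughly 9.68 to 10.32 and the action band roughly 9.53 to 10.47, so a new QC result of 10.4 would be flagged as a warning and 10.6 would call for action.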

Receiver Operating Characteristic Curve Explorer and Tester (ROCCET) is an open-access web server for performing biomarker analysis using ROC curve analyses on metabolomic data sets. ROCCET is designed specifically for performing and assessing a standard binary classification test. ROCCET accepts metabolite data tables, with or without clinical/observational variables, as input and performs extensive biomarker analysis and biomarker identification using these input data. It operates through a menu-based navigation system that allows users to identify or assess those clinical variables and/or metabolites that contain the maximal diagnostic or class-predictive information. ROCCET supports both manual and semi-automated feature selection and is able to automatically generate a variety of mathematical models that maximize the sensitivity and specificity of the biomarker(s) while minimizing the number of biomarkers used in the biomarker model. ROCCET also supports the rigorous assessment of the quality and robustness of newly discovered biomarkers using permutation testing, hold-out testing and cross-validation.
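
ROCCET's own implementation is not described here. As a hedged, library-free sketch of the underlying ideas, the code below computes an ROC area under the curve (AUC) through its equivalence with the Mann–Whitney statistic, sensitivity and specificity at one chosen cutoff, and a label-permutation p-value for the AUC. The function names, the cutoff, and the toy metabolite scores are hypothetical.

```python
import random


def roc_auc(scores, labels):
    """AUC as the probability that a random positive outscores a random negative
    (ties count one half); equals the Mann-Whitney U statistic / (n_pos * n_neg)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(scores, labels, threshold):
    """Call a sample positive when its score is >= threshold."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    tn = sum(1 for s, y in pairs if s < threshold and y == 0)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    return tp / (tp + fn), tn / (tn + fp)


def permutation_p_value(scores, labels, n_perm=1000, seed=0):
    """Fraction of random label shufflings whose AUC is at least the observed AUC."""
    rng = random.Random(seed)
    observed = roc_auc(scores, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if roc_auc(scores, shuffled) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one correction keeps p above zero


# Hypothetical metabolite levels for 4 cases (label 1) and 4 controls (label 0).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
auc = roc_auc(scores, labels)
sens, spec = sensitivity_specificity(scores, labels, threshold=0.5)
p = permutation_p_value(scores, labels)
```

With these toy values the AUC is 15/16 = 0.9375 and the cutoff of 0.5 gives a sensitivity and specificity of 0.75 each; the permutation p-value estimates how often randomly shuffled labels do at least as well as the real ones.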

Process validation is the analysis of data gathered throughout the design and manufacturing of a product to confirm that the process can reliably produce products of a determined standard. Regulatory authorities such as the EMA and FDA have published guidelines on process validation. The purpose of process validation is to ensure that varied inputs lead to consistent, high-quality outputs. Process validation is an ongoing process that must be adapted frequently as manufacturing feedback is gathered. End-to-end validation of production processes is essential in determining product quality because quality cannot always be determined by finished-product inspection. Process validation can be broken down into three stages: process design, process qualification, and continued process verification.
