Image registration is the process of transforming different sets of data into one coordinate system. The data may be multiple photographs or data from different sensors, times, depths, or viewpoints. It is used in computer vision, medical imaging, military automatic target recognition, and compiling and analyzing images and data from satellites. Registration is necessary to compare or integrate the data obtained from these different measurements.
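As a minimal illustration, the sketch below registers two small grayscale images that differ only by an integer translation. It assumes a pure translation model and an exhaustive search over shifts, which is only one of many registration approaches. The function name `register_translation`, the helper `crop`, and the synthetic test scene are hypothetical and chosen for this example.

```python
import random


def register_translation(fixed, moving, max_shift=3):
    """Find the integer (dy, dx) such that fixed[y][x] ~ moving[y + dy][x + dx].

    Tries every shift in [-max_shift, max_shift] and keeps the one with the
    lowest mean squared intensity difference over the overlapping pixels.
    """
    rows, cols = len(fixed), len(fixed[0])
    best_shift, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            for y in range(rows):
                for x in range(cols):
                    sy, sx = y + dy, x + dx  # matching location in the moving image
                    if 0 <= sy < rows and 0 <= sx < cols:
                        diff = fixed[y][x] - moving[sy][sx]
                        total += diff * diff
                        count += 1
            if count and total / count < best_cost:
                best_cost = total / count
                best_shift = (dy, dx)
    return best_shift


def crop(scene, top, left, size):
    """Cut a size x size window out of a larger scene (list of lists)."""
    return [row[left:left + size] for row in scene[top:top + size]]


# Synthetic scene (assumption for illustration): random intensities, fixed seed.
rng = random.Random(0)
scene = [[rng.random() for _ in range(12)] for _ in range(12)]
fixed = crop(scene, 2, 2, 8)
moving = crop(scene, 1, 0, 8)  # same scene, viewed from an offset window
shift = register_translation(fixed, moving)
```

Because both images are crops of the same scene offset by one row and two columns, the search should recover that offset. Practical registration methods usually also handle rotation, scale, or deformation, and use faster optimizers than a full search.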

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. While this initially appears to be a chicken-and-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping, and odometry for virtual reality or augmented reality.

Wireless sensor networks (WSNs) refer to networks of spatially dispersed and dedicated sensors that monitor and record the physical conditions of the environment and forward the collected data to a central location. WSNs can measure environmental conditions such as temperature, sound, pollution levels, humidity and wind.

Prognostics is an engineering discipline focused on predicting the time at which a system or a component will no longer perform its intended function. This lack of performance is most often a failure beyond which the system can no longer be used to meet desired performance. The predicted time then becomes the remaining useful life (RUL), which is an important concept in decision making for contingency mitigation. Prognostics predicts the future performance of a component by assessing the extent of deviation or degradation of a system from its expected normal operating conditions. The science of prognostics is based on the analysis of failure modes, detection of early signs of wear and aging, and fault conditions. An effective prognostics solution requires sound knowledge of the failure mechanisms that are likely to cause the degradations leading to eventual failures in the system. It is therefore necessary to have initial information on the possible failures in a product. Such knowledge is important for identifying the system parameters that are to be monitored. One potential use of prognostics is in condition-based maintenance. The discipline that links studies of failure mechanisms to system lifecycle management is often referred to as prognostics and health management (PHM), sometimes also system health management (SHM) or, in transportation applications, vehicle health management (VHM) or engine health management (EHM). Technical approaches to building models in prognostics can be categorized broadly into data-driven approaches, model-based approaches, and hybrid approaches.
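To make the idea of RUL concrete, here is a minimal data-driven sketch. It assumes a single scalar health indicator that degrades roughly linearly over time and a known failure threshold. Both are simplifying assumptions, and the function name `estimate_rul` and the sample values are hypothetical.

```python
def estimate_rul(times, health, failure_threshold):
    """Estimate remaining useful life by linear extrapolation.

    Fits health = slope * t + intercept by ordinary least squares and returns
    the time from the last sample until the fitted line reaches
    failure_threshold. Returns None when the fitted trend is not decreasing.
    """
    n = len(times)
    if n < 2 or n != len(health):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times) / n
    mean_h = sum(health) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (h - mean_h) for t, h in zip(times, health))
    if sxx == 0:
        raise ValueError("all samples share the same time stamp")
    slope = sxy / sxx
    intercept = mean_h - slope * mean_t
    if slope >= 0:
        return None  # no degradation trend to extrapolate
    t_fail = (failure_threshold - intercept) / slope
    # Clamp at zero: the threshold may already have been crossed.
    return max(0.0, t_fail - times[-1])


# Hypothetical monitoring data: health index sampled every 10 hours.
hours = [0, 10, 20, 30]
health_index = [1.0, 0.9, 0.8, 0.7]
rul_hours = estimate_rul(hours, health_index, failure_threshold=0.5)
```

With these made-up samples the indicator loses about 0.01 per hour, so the fitted line reaches the threshold roughly 20 hours after the last measurement. Real degradation is rarely linear, and a model-based or hybrid approach would replace the straight-line fit with a physical or learned degradation model.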

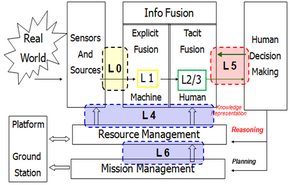

Sensor fusion is the process of combining sensor data or data derived from disparate sources such that the resulting information has less uncertainty than would be possible when these sources were used individually. For instance, one could potentially obtain a more accurate location estimate of an indoor object by combining multiple data sources such as video cameras and WiFi localization signals. The term uncertainty reduction in this case can mean more accurate, more complete, or more dependable, or refer to the result of an emerging view, such as stereoscopic vision.
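The uncertainty reduction can be illustrated with inverse-variance weighting, one of the simplest fusion rules. The sketch assumes each source gives an unbiased scalar estimate with a known variance and that the errors are independent. The function name `fuse_estimates` and the camera and WiFi numbers are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse independent scalar estimates given as (value, variance) pairs.

    Returns the inverse-variance weighted mean and the variance of that mean.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    # Each source is weighted by its precision (1 / variance).
    precisions = [1.0 / variance for _, variance in estimates]
    total_precision = sum(precisions)
    fused_value = sum(
        p * value for p, (value, _) in zip(precisions, estimates)
    ) / total_precision
    fused_variance = 1.0 / total_precision
    return fused_value, fused_variance


# Hypothetical 1-D position readings in metres: (value, variance).
camera = (4.0, 0.25)
wifi = (5.0, 1.0)
position, variance = fuse_estimates([camera, wifi])
```

Under the stated assumptions, the fused variance is always smaller than the smallest input variance, which is the sense in which the combined estimate has less uncertainty than either source alone. If the errors are correlated, this rule becomes overconfident.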

Multimodal interaction provides the user with multiple modes of interacting with a system. A multimodal interface provides several distinct tools for input and output of data.

Video quality is a characteristic of a video passed through a video transmission or processing system that describes perceived video degradation. Video processing systems may introduce some amount of distortion or artifacts in the video signal that negatively impact the user's perception of the system. For many stakeholders in video production and distribution, ensuring video quality is an important task.

Information integration (II) is the merging of information from heterogeneous sources with differing conceptual, contextual and typographical representations. It is used in data mining and consolidation of data from unstructured or semi-structured resources. Typically, information integration refers to textual representations of knowledge but is sometimes applied to rich-media content. Information fusion, which is a related term, involves the combination of information into a new set of information towards reducing redundancy and uncertainty.

The image fusion process is defined as gathering all the important information from multiple images and including it in fewer images, usually a single one. This single image is more informative and accurate than any single source image, and it contains all the necessary information. The purpose of image fusion is not only to reduce the amount of data but also to construct images that are more appropriate and understandable for human and machine perception. In computer vision, multisensor image fusion is the process of combining relevant information from two or more images into a single image that is more informative than any of the inputs.

Requirements traceability is a sub-discipline of requirements management within software development and systems engineering. Traceability as a general term is defined by the IEEE Systems and Software Engineering Vocabulary as (1) the degree to which a relationship can be established between two or more products of the development process, especially products having a predecessor-successor or primary-subordinate relationship to one another; (2) the identification and documentation of derivation paths (upward) and allocation or flowdown paths (downward) of work products in the work product hierarchy; (3) the degree to which each element in a software development product establishes its reason for existing; and (4) discernible association among two or more logical entities, such as requirements, system elements, verifications, or tasks.

For air traffic control, SASS-C is an acronym for "Surveillance Analysis Support System for ATC-Centre". SASS-C Service is part of Eurocontrol Communications, navigation and surveillance.

An indoor positioning system (IPS) is a network of devices used to locate people or objects where GPS and other satellite technologies lack precision or fail entirely, such as inside multistory buildings, airports, alleys, parking garages, and underground locations.

The joint probabilistic data-association filter (JPDAF) is a statistical approach to the problem of plot association in a target tracking algorithm. Like the probabilistic data association filter (PDAF), rather than choosing the most likely assignment of measurements to a target, the JPDAF takes an expected value, which is the minimum mean square error (MMSE) estimate for the state of each target. At each time, it maintains its estimate of the target state as the mean and covariance matrix of a multivariate normal distribution. However, unlike the PDAF, which is only meant for tracking a single target in the presence of false alarms and missed detections, the JPDAF can handle multiple target tracking scenarios.

The Semantic Sensor Web (SSW) is a marriage of sensor web and Semantic Web technologies. The encoding of sensor descriptions and sensor observation data with Semantic Web languages enables more expressive representation, advanced access, and formal analysis of sensor resources. The SSW annotates sensor data with spatial, temporal, and thematic semantic metadata. This technique builds on current standardization efforts within the Open Geospatial Consortium's Sensor Web Enablement (SWE) and extends them with Semantic Web technologies to provide enhanced descriptions of and access to sensor data.

Covariance intersection (CI) is an algorithm for combining two or more estimates of state variables in a Kalman filter when the correlation between them is unknown.
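The sketch below shows the CI update for two 2-D estimates, using the convex combination of information matrices P⁻¹ = w·P_a⁻¹ + (1 − w)·P_b⁻¹. Picking the weight w by a grid search that minimizes the trace of the fused covariance is one common choice, assumed here for simplicity. The helper `inv2`, the function name, and the example values are hypothetical.

```python
def inv2(m):
    """Invert a 2x2 matrix given as ((a, b), (c, d))."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return ((d / det, -b / det), (-c / det, a / det))


def covariance_intersection(x_a, P_a, x_b, P_b, steps=100):
    """Fuse two 2-D estimates whose cross-correlation is unknown.

    Searches w on a uniform grid in [0, 1] and keeps the value that
    minimizes the trace of the fused covariance.
    """
    info_a, info_b = inv2(P_a), inv2(P_b)
    best = None
    for k in range(steps + 1):
        w = k / steps
        # Convex combination of the two information matrices.
        info = tuple(
            tuple(w * info_a[i][j] + (1 - w) * info_b[i][j] for j in range(2))
            for i in range(2)
        )
        P = inv2(info)
        trace = P[0][0] + P[1][1]
        if best is None or trace < best[0]:
            best = (trace, w, P)
    _, w, P = best
    # Weighted information vector, then map back to state space.
    y = [
        sum(
            w * info_a[i][j] * x_a[j] + (1 - w) * info_b[i][j] * x_b[j]
            for j in range(2)
        )
        for i in range(2)
    ]
    x = tuple(sum(P[i][j] * y[j] for j in range(2)) for i in range(2))
    return x, P, w


# Hypothetical estimates: each source is accurate along a different axis.
x_a, P_a = (1.0, 0.0), ((1.0, 0.0), (0.0, 4.0))
x_b, P_b = (0.0, 1.0), ((4.0, 0.0), (0.0, 1.0))
x_fused, P_fused, w_best = covariance_intersection(x_a, P_a, x_b, P_b)
```

Unlike a standard Kalman update, the fused covariance here does not assume the two estimates are independent, so it stays conservative even if they share common error sources. The price is a larger covariance than an independence-based fusion would report.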

A resilient control system is one that maintains state awareness and an accepted level of operational normalcy in response to disturbances, including threats of an unexpected and malicious nature.

Adaptive collaborative control is the decision-making approach used in hybrid models consisting of finite-state machines with functional models as subcomponents to simulate behavior of systems formed through the partnerships of multiple agents for the execution of tasks and the development of work products. The term “collaborative control” originated from work developed in the late 1990s and early 2000s by Fong, Thorpe, and Baur (1999). According to Fong et al., for robots to function in collaborative control, they must be self-reliant, aware, and adaptive. In the literature, the adjective “adaptive” is not always stated but is implied, since adaptation is an important element of collaborative control. The adaptation of traditional applications of control theory in teleoperations sought initially to reduce the sovereignty of “humans as controllers/robots as tools” and had humans and robots working as peers, collaborating to perform tasks and to achieve common goals. Early implementations of adaptive collaborative control centered on vehicle teleoperation. Recent uses of adaptive collaborative control cover training, analysis, and engineering applications in teleoperations between humans and multiple robots, multiple robots collaborating among themselves, unmanned vehicle control, and fault-tolerant controller design.

Fusion adaptive resonance theory (fusion ART) is a generalization of the self-organizing neural networks known as the original Adaptive Resonance Theory (ART) models, for learning recognition categories across multiple pattern channels. There is a separate stream of work on fusion ARTMAP, which extends fuzzy ARTMAP, consisting of two fuzzy ART modules connected by an inter-ART map field, to an extended architecture consisting of multiple ART modules.

In virtual reality (VR) and augmented reality (AR), a pose tracking system detects the precise pose of head-mounted displays, controllers, other objects or body parts within Euclidean space. Pose tracking is often referred to as 6DOF tracking, for the six degrees of freedom in which the pose is often tracked.

Image destriping is the process of removing stripes or streaks from images and videos without disrupting the original image/video. These artifacts plague a range of fields in scientific imaging including atomic force microscopy, light sheet fluorescence microscopy, and planetary satellite imaging.
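As a rough illustration, the sketch below removes vertical stripes by equalizing column means. It assumes the stripes are constant additive offsets per column and that the underlying scene has similar average brightness in every column, which is a strong assumption. The function name `destripe_columns` and the sample data are hypothetical.

```python
def destripe_columns(image):
    """Remove additive vertical stripes from a 2-D image (list of rows).

    Each column is shifted so that its mean matches the global mean of
    the column means.
    """
    rows, cols = len(image), len(image[0])
    col_means = [
        sum(image[y][x] for y in range(rows)) / rows for x in range(cols)
    ]
    global_mean = sum(col_means) / cols
    return [
        [image[y][x] - (col_means[x] - global_mean) for x in range(cols)]
        for y in range(rows)
    ]


# Hypothetical data: a clean image whose intensity equals the row index,
# corrupted by a fixed offset in each column.
stripe_offsets = [0.0, 2.0, 0.0, -2.0]
striped = [[float(y) + off for off in stripe_offsets] for y in range(3)]
corrected = destripe_columns(striped)
```

In this example the corrected image matches the clean one because the clean columns all share the same mean. When the scene itself varies from column to column, this simple equalization also removes genuine signal, which is why practical destriping methods use more selective filters.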