A computer mouse is a hand-held pointing device that detects two-dimensional motion relative to a surface. This motion is typically translated into the motion of a pointer on a display, which allows smooth control of a computer's graphical user interface.
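
The translation from relative motion to pointer motion can be sketched as follows. This is a minimal illustration, not how any particular operating system does it: the function name `move_pointer`, the `sensitivity` parameter, and the clamping to the screen edges are all assumptions, and real systems usually add acceleration curves on top.

```python
def move_pointer(pos, delta, screen_size, sensitivity=1.0):
    """Apply a relative motion report (dx, dy) to an absolute pointer position.

    pos         -- current pointer position (x, y) in pixels
    delta       -- relative motion reported by the device (dx, dy)
    screen_size -- (width, height) of the display in pixels
    sensitivity -- hypothetical scale factor applied to the raw motion
    """
    x = pos[0] + delta[0] * sensitivity
    y = pos[1] + delta[1] * sensitivity
    width, height = screen_size
    # Keep the pointer inside the visible area.
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return (x, y)
```

For example, `move_pointer((100, 100), (10, -5), (1920, 1080))` moves the pointer ten pixels right and five pixels up, while a motion that would leave the screen is clamped to its edge.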

In computing, a pointing device gesture or mouse gesture is a combination of pointing device or finger movements and clicks that software recognizes as a specific computer event and responds to accordingly. Such gestures can be useful for people who have difficulty typing on a keyboard. For example, in a web browser, a user can navigate to the previously viewed page by pressing the right pointing device button, moving the pointing device briefly to the left, then releasing the button.
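
The browser example can be sketched as a small stroke classifier. This is only an illustration under stated assumptions: the names `classify_stroke`, `gesture_action` and `ACTIONS`, the 30-pixel threshold, and the mapping of a rightward stroke to "forward" are hypothetical and not taken from any particular browser.

```python
def classify_stroke(points, min_distance=30):
    """Classify a pointer path recorded while a button is held down.

    points is a list of (x, y) positions in screen coordinates, where y
    grows downward. Returns 'left', 'right', 'up', 'down', or None if the
    pointer moved less than min_distance pixels along both axes.
    """
    if len(points) < 2:
        return None
    (x0, y0), (x1, y1) = points[0], points[-1]
    dx, dy = x1 - x0, y1 - y0
    if max(abs(dx), abs(dy)) < min_distance:
        return None  # too short to count as a deliberate gesture
    if abs(dx) >= abs(dy):
        return "left" if dx < 0 else "right"
    return "up" if dy < 0 else "down"


# Hypothetical mapping from stroke direction to browser command.
ACTIONS = {"left": "back", "right": "forward"}


def gesture_action(points):
    """Return the command bound to the stroke, or None if nothing is bound."""
    return ACTIONS.get(classify_stroke(points))
```

Only the first and last points are compared here, which keeps the sketch short; a more robust recognizer would examine the whole path.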

A pointing device is a human interface device that allows a user to input spatial data to a computer. CAD systems and graphical user interfaces (GUIs) allow the user to control and provide data to the computer using physical gestures: moving a hand-held mouse or similar device across the surface of the physical desktop and activating switches on the mouse. Movements of the pointing device are echoed on the screen by movements of the pointer and other visual changes. Common gestures are point-and-click and drag-and-drop.

The X Window System is a windowing system for bitmap displays, common on Unix-like operating systems.

A graphics tablet is a computer input device that enables a user to hand-draw images, animations and graphics with a special pen-like stylus, similar to the way a person draws images with a pencil and paper. These tablets may also be used to capture data or handwritten signatures, or to trace an image from a piece of paper that is taped or otherwise secured to the tablet surface. Capturing data in this way, by tracing or entering the corners of linear polylines or shapes, is called digitizing.

A pointing stick is a small analog stick used as a pointing device, typically mounted centrally in a computer keyboard. As with other pointing devices such as mice, touchpads or trackballs, operating system software translates manipulation of the device into movements of the pointer or cursor on the monitor. Unlike other pointing devices, it reacts to sustained force or strain rather than to gross movement, so it is called an "isometric" pointing device. IBM introduced it commercially in 1992 on its laptops under the name "TrackPoint", patented in 1997.

A touchpad or trackpad is a pointing device featuring a tactile sensor, a specialized surface that translates the motion and position of a user's fingers into a relative position that the operating system outputs to the screen. Touchpads are a common feature of laptop computers, in contrast to the mouse typically used with a desktop, and are also used as a substitute for a mouse where desk space is scarce. Because they vary in size, they can also be found on personal digital assistants (PDAs) and some portable media players. Wireless touchpads are also available as detached accessories.

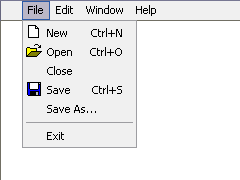

In user interface design, a menu is a list of options or commands presented to the user of a computer or embedded system. A menu may either be a system's entire user interface, or only part of a more complex one.

A touchscreen or touch screen is an assembly of both an input ('touch panel') and an output ('display') device. The touch panel is normally layered on top of the electronic visual display of an electronic device.

Synaptics is a publicly owned San Jose, California-based developer of human interface (HMI) hardware and software, including touchpads for laptop computers; touch, display driver, and fingerprint biometrics technology for smartphones; and touch, video and far-field voice technology for smart home devices and automotive systems. Synaptics sells its products to original equipment manufacturers (OEMs) and display manufacturers.

Multi-monitor, also called multi-display and multi-head, is the use of multiple physical display devices, such as monitors, televisions, and projectors, in order to increase the area available for computer programs running on a single computer system. Research studies show that, depending on the type of work, multi-head may increase productivity by 50–70%.

In human–computer interaction, a cursor is an indicator used to show the current position on a computer monitor or other display device that will respond to text input.

A virtual keyboard is a software component that allows the input of characters without the need for physical keys. The interaction with the virtual keyboard happens mostly via a touchscreen interface, but can also take place in a different form in virtual or augmented reality.

In computing, a 10-foot user interface, 10-foot UI or 3-meter user interface is a graphical user interface designed for televisions. Compared to desktop computer and smartphone user interfaces, it uses text and other interface elements that are much larger in order to accommodate a typical television viewing distance of 10 feet. Additionally, the limitations of a television's remote control necessitate extra user experience considerations to minimize user effort.

In computing, multi-touch is technology that enables a surface to recognize the presence of more than one point of contact with the surface at the same time. Multi-touch originated at CERN, MIT, the University of Toronto, Carnegie Mellon University and Bell Labs in the 1970s. CERN started using multi-touch screens as early as 1976 for the controls of the Super Proton Synchrotron. Capacitive multi-touch displays were popularized by Apple's iPhone in 2007. Plural-point awareness may be used to implement additional functionality, such as pinch to zoom, or to activate certain subroutines attached to predefined gestures.
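
As an illustration of how two simultaneous contact points can drive pinch to zoom, the sketch below derives a zoom factor from the change in distance between two fingers. The function name `pinch_scale` and the choice of a simple distance ratio are assumptions made for this example, not a description of any specific platform's gesture API.

```python
import math


def pinch_scale(start_a, start_b, end_a, end_b):
    """Return the zoom factor implied by two touch points moving.

    Each argument is an (x, y) position. The factor is the ratio of the
    final finger separation to the initial one: above 1 means the fingers
    spread apart (zoom in), below 1 means they pinched together (zoom out).
    """
    d0 = math.dist(start_a, start_b)
    d1 = math.dist(end_a, end_b)
    if d0 == 0:
        return 1.0  # fingers started at the same point; leave zoom unchanged
    return d1 / d0
```

Doubling the separation between the fingers yields a factor of 2.0, and halving it yields 0.5.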

Pen computing refers to any computer user interface that uses a pen or stylus and tablet rather than input devices such as a keyboard or a mouse.

Microsoft PixelSense was an interactive surface computing platform that allowed one or more people to use and touch real-world objects and share digital content at the same time. The PixelSense platform consisted of software and hardware products that combined vision-based multitouch PC hardware, 360-degree multiuser application design, and Windows software to create a natural user interface (NUI).

A text entry interface or text entry device is an interface that is used to enter text information in an electronic device. A commonly used device is a mechanical computer keyboard. Most laptop computers have an integrated mechanical keyboard, and desktop computers are usually operated primarily using a keyboard and mouse. With the spread of devices such as smartphones and tablets, interfaces such as virtual keyboards and voice recognition are becoming more popular as text entry systems.

In computing, an input device is a piece of equipment used to provide data and control signals to an information processing system, such as a computer or information appliance. Examples of input devices include keyboards, mice, scanners, cameras, joysticks, and microphones.

Microsoft Tablet PC is a term coined by Microsoft for pen-enabled tablet computers that conform to hardware specifications announced by Microsoft in 2001 and run a licensed copy of the Windows XP Tablet PC Edition operating system or a derivative thereof.