Related Research Articles

Junk science is spurious or fraudulent scientific data, research, or analysis. The concept is often invoked in political and legal contexts where facts and scientific results have a great amount of weight in making a determination. It usually conveys a pejorative connotation that the research has been untowardly driven by political, ideological, financial, or otherwise unscientific motives.

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, and irrationality.

Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one's prior beliefs or values. People display this bias when they select information that supports their views, ignoring contrary information, or when they interpret ambiguous evidence as supporting their existing attitudes. The effect is strongest for desired outcomes, for emotionally charged issues, and for deeply entrenched beliefs. Confirmation bias is insuperable for most people, but they can manage it, for example, by education and training in critical thinking skills.

In psychology, decision-making is regarded as the cognitive process resulting in the selection of a belief or a course of action among several possible alternative options. It could be either rational or irrational. The decision-making process is a reasoning process based on assumptions of values, preferences and beliefs of the decision-maker. Every decision-making process produces a final choice, which may or may not prompt action.

Popular science is an interpretation of science intended for a general audience. While science journalism focuses on recent scientific developments, popular science is more broad ranging. It may be written by professional science journalists or by scientists themselves. It is presented in many forms, including books, film and television documentaries, magazine articles, and web pages.

In psychology, the false consensus effect, also known as consensus bias, is a pervasive cognitive bias that causes people to "see their own behavioral choices and judgments as relatively common and appropriate to existing circumstances". In other words, they assume that their personal qualities, characteristics, beliefs, and actions are relatively widespread through the general population.

Together, legal psychology and forensic psychology form the field more generally recognized as "psychology and law". Following earlier efforts by psychologists to address legal issues, psychology and law became a field of study in the 1960s as part of an effort to enhance justice, though that originating concern has lessened over time. The multidisciplinary American Psychological Association's Division 41, the American Psychology–Law Society, is active with the goal of promoting the contributions of psychology to the understanding of law and legal systems through research, as well as providing education to psychologists on legal issues and to legal personnel on psychological issues. Further, its mandate is to inform the psychological and legal communities and the public at large of current research, educational, and service activities in the area of psychology and law. There are similar societies in Britain and Europe.

Pascal Robert Boyer is an American cognitive anthropologist and evolutionary psychologist of French origin, mostly known for his work in the cognitive science of religion. He taught at the University of Cambridge for eight years, before taking up the position of Henry Luce Professor of Individual and Collective Memory at Washington University in St. Louis, where he teaches classes on evolutionary psychology and anthropology. He was a Guggenheim Fellow and a visiting professor at the University of California, Santa Barbara and the University of Lyon, France. He studied philosophy and anthropology at the University of Paris and at Cambridge, with Jack Goody, working on memory constraints on the transmission of oral literature. Boyer is a Member of the American Academy of Arts and Sciences.

An opinion is a judgment, viewpoint, or statement that is not conclusive, as opposed to a fact, which is a true statement.

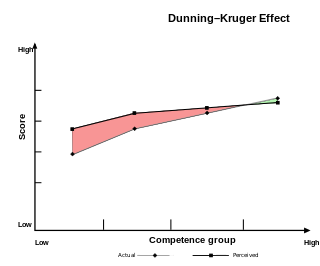

The Dunning–Kruger effect is a cognitive bias in which people with limited competence in a particular domain overestimate their abilities. It was first described by Justin Kruger and David Dunning in 1999. Some researchers also include the opposite effect for high performers: their tendency to underestimate their skills. In popular culture, the Dunning–Kruger effect is often misunderstood as a claim about general overconfidence of people with low intelligence instead of specific overconfidence of people unskilled at a particular task.

Confidence is the feeling of belief or trust that a person or thing is reliable. Self-confidence is trust in oneself. Self-confidence involves a positive belief that one can generally accomplish what one wishes to do in the future. Self-confidence is not the same as self-esteem, which is an evaluation of one's worth. Self-confidence is related to self-efficacy—belief in one's ability to accomplish a specific task or goal. Confidence can be a self-fulfilling prophecy, as those without it may fail because they lack it, and those with it may succeed because they have it rather than because of an innate ability or skill.

The overconfidence effect is a well-established bias in which a person's subjective confidence in their judgments is reliably greater than the objective accuracy of those judgments, especially when confidence is relatively high. Overconfidence is one example of a miscalibration of subjective probabilities. Throughout the research literature, overconfidence has been defined in three distinct ways: (1) overestimation of one's actual performance; (2) overplacement of one's performance relative to others; and (3) overprecision in expressing unwarranted certainty in the accuracy of one's beliefs.

The IQ Controversy, the Media and Public Policy is a book published by Smith College professor emeritus Stanley Rothman and Harvard researcher Mark Snyderman in 1988. Claiming to document liberal bias in media coverage of scientific findings regarding intelligence quotient (IQ), the book builds on a survey of the opinions of hundreds of North American psychologists, sociologists and educationalists conducted by the authors in 1984. The book also includes an analysis of the reporting on intelligence testing by the press and television in the US for the period 1969–1983, as well as an opinion poll of 207 journalists and 86 science editors about IQ testing.

In psychology, the human mind is considered to be a cognitive miser due to the tendency of humans to think and solve problems in simpler and less effortful ways rather than in more sophisticated and effortful ways, regardless of intelligence. Just as a miser seeks to avoid spending money, the human mind often seeks to avoid spending cognitive effort. The cognitive miser theory is an umbrella theory of cognition that brings together previous research on heuristics and attributional biases to explain when and why people are cognitive misers.

Science communication encompasses a wide range of activities that connect science and society. Common goals of science communication include informing non-experts about scientific findings, raising the public awareness of and interest in science, influencing people's attitudes and behaviors, informing public policy, and engaging with diverse communities to address societal problems. The term "science communication" generally refers to settings in which audiences are not experts on the scientific topic being discussed (outreach), though some authors categorize expert-to-expert communication as a type of science communication. Examples of outreach include science journalism and health communication. Since science has political, moral, and legal implications, science communication can help bridge gaps between different stakeholders in public policy, industry, and civil society.

Heuristics is the process by which humans use mental shortcuts to arrive at decisions. Heuristics are simple strategies that humans, animals, organizations, and even machines use to quickly form judgments, make decisions, and find solutions to complex problems. Often this involves focusing on the most relevant aspects of a problem or situation to formulate a solution. While heuristic processes are used to find the answers and solutions that are most likely to work or be correct, they are not always right or the most accurate. Judgments and decisions based on heuristics are simply good enough to satisfy a pressing need in situations of uncertainty, where information is incomplete. In that sense they can differ from answers given by logic and probability.

Thinking, Fast and Slow is a 2011 popular science book by psychologist Daniel Kahneman. The book's main thesis is a differentiation between two modes of thought: "System 1" is fast, instinctive and emotional; "System 2" is slower, more deliberative, and more logical.

The hard–easy effect is a cognitive bias that manifests itself as a tendency to overestimate the probability of one's success at a task perceived as hard, and to underestimate the likelihood of one's success at a task perceived as easy. The hard–easy effect takes place, for example, when individuals exhibit a degree of underconfidence in answering relatively easy questions and a degree of overconfidence in answering relatively difficult questions. "Hard tasks tend to produce overconfidence but worse-than-average perceptions," reported Katherine A. Burson, Richard P. Larrick, and Jack B. Soll in a 2005 study, "whereas easy tasks tend to produce underconfidence and better-than-average effects."

The gateway belief model (GBM) suggests that public perception of the degree of expert or scientific consensus on an issue functions as a so-called "gateway" cognition. Perception of scientific agreement is suggested to be a key step towards acceptance of related beliefs. Increasing the perception that there is normative agreement within the scientific community can increase individual support for an issue. A perception of disagreement may decrease support for an issue.

References

- Scharrer, Lisa; Rupieper, Yvonne; Stadtler, Marc; Bromme, Rainer (30 November 2016). "When science becomes too easy: Science popularization inclines laypeople to underrate their dependence on experts". Public Understanding of Science. 26 (8). SAGE Publications: 1003–1018. doi:10.1177/0963662516680311. ISSN 0963-6625. PMID 27899471.

- Bromme, Rainer; Goldman, Susan R. (3 April 2014). "The Public's Bounded Understanding of Science". Educational Psychologist. 49 (2). Informa UK Limited: 59–69. doi:10.1080/00461520.2014.921572. ISSN 0046-1520.

- Hendricks, Scotty. "By Demanding Too Much from Science, We Became a Post-Truth Society". bigthink.com. Retrieved 6 August 2018.

- "Facts are the reason science is losing during the current war on reason". The Guardian. 1 February 2017. ISSN 0261-3077. Archived from the original on 22 July 2017. Retrieved 6 February 2017.