Related Research Articles

RSA (Rivest–Shamir–Adleman) is a public-key cryptosystem, one of the oldest widely used for secure data transmission. The initialism "RSA" comes from the surnames of Ron Rivest, Adi Shamir and Leonard Adleman, who publicly described the algorithm in 1977. An equivalent system was developed secretly in 1973 at Government Communications Headquarters (GCHQ), the British signals intelligence agency, by the English mathematician Clifford Cocks. That system was declassified in 1997.

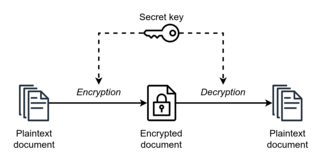

Symmetric-key algorithms are algorithms for cryptography that use the same cryptographic keys for both the encryption of plaintext and the decryption of ciphertext. The keys may be identical, or there may be a simple transformation to go between the two keys. The keys, in practice, represent a shared secret between two or more parties that can be used to maintain a private information link. The requirement that both parties have access to the secret key is one of the main drawbacks of symmetric-key encryption, in comparison to public-key encryption. However, symmetric-key encryption algorithms are usually better for bulk encryption. With exception of the one-time pad they have a smaller key size, which means less storage space and faster transmission. Due to this, asymmetric-key encryption is often used to exchange the secret key for symmetric-key encryption.

In cryptography, the ElGamal encryption system is an asymmetric key encryption algorithm for public-key cryptography which is based on the Diffie–Hellman key exchange. It was described by Taher Elgamal in 1985. ElGamal encryption is used in the free GNU Privacy Guard software, recent versions of PGP, and other cryptosystems. The Digital Signature Algorithm (DSA) is a variant of the ElGamal signature scheme, which should not be confused with ElGamal encryption.

Ronald Linn Rivest is a cryptographer and computer scientist whose work has spanned the fields of algorithms and combinatorics, cryptography, machine learning, and election integrity. He is an Institute Professor at the Massachusetts Institute of Technology (MIT), and a member of MIT's Department of Electrical Engineering and Computer Science and its Computer Science and Artificial Intelligence Laboratory.

Articles related to cryptography include:

The Paillier cryptosystem, invented by and named after Pascal Paillier in 1999, is a probabilistic asymmetric algorithm for public key cryptography. The problem of computing n-th residue classes is believed to be computationally difficult. The decisional composite residuosity assumption is the intractability hypothesis upon which this cryptosystem is based.

Cryptography, the use of codes and ciphers to protect secrets, began thousands of years ago. Until recent decades, it has been the story of what might be called classical cryptography — that is, of methods of encryption that use pen and paper, or perhaps simple mechanical aids. In the early 20th century, the invention of complex mechanical and electromechanical machines, such as the Enigma rotor machine, provided more sophisticated and efficient means of encryption; and the subsequent introduction of electronics and computing has allowed elaborate schemes of still greater complexity, most of which are entirely unsuited to pen and paper.

Probabilistic encryption is the use of randomness in an encryption algorithm, so that when encrypting the same message several times it will, in general, yield different ciphertexts. The term "probabilistic encryption" is typically used in reference to public key encryption algorithms; however various symmetric key encryption algorithms achieve a similar property, and stream ciphers such as Freestyle which are inherently random. To be semantically secure, that is, to hide even partial information about the plaintext, an encryption algorithm must be probabilistic.

The Cramer–Shoup system is an asymmetric key encryption algorithm, and was the first efficient scheme proven to be secure against adaptive chosen ciphertext attack using standard cryptographic assumptions. Its security is based on the computational intractability of the Decisional Diffie–Hellman assumption. Developed by Ronald Cramer and Victor Shoup in 1998, it is an extension of the ElGamal cryptosystem. In contrast to ElGamal, which is extremely malleable, Cramer–Shoup adds other elements to ensure non-malleability even against a resourceful attacker. This non-malleability is achieved through the use of a universal one-way hash function and additional computations, resulting in a ciphertext which is twice as large as in ElGamal.

In cryptography, Optimal Asymmetric Encryption Padding (OAEP) is a padding scheme often used together with RSA encryption. OAEP was introduced by Bellare and Rogaway, and subsequently standardized in PKCS#1 v2 and RFC 2437.

A deterministic encryption scheme is a cryptosystem which always produces the same ciphertext for a given plaintext and key, even over separate executions of the encryption algorithm. Examples of deterministic encryption algorithms include RSA cryptosystem, and many block ciphers when used in ECB mode or with a constant initialization vector.

In cryptography a universal one-way hash function is a type of universal hash function of particular importance to cryptography. UOWHFs are proposed as an alternative to collision-resistant hash functions (CRHFs). CRHFs have a strong collision-resistance property: that it is hard, given randomly chosen hash function parameters, to find any collision of the hash function. In contrast, UOWHFs require that it be hard to find a collision where one preimage is chosen independently of the hash function parameters. The primitive was suggested by Moni Naor and Moti Yung and is also known as "target collision resistant" hash functions; it was employed to construct general digital signature schemes without trapdoor functions, and also within chosen-ciphertext secure public key encryption schemes.

Cryptographic primitives are well-established, low-level cryptographic algorithms that are frequently used to build cryptographic protocols for computer security systems. These routines include, but are not limited to, one-way hash functions and encryption functions.

In cryptographic protocols, a key encapsulation mechanism (KEM) or key encapsulation method is used to secure symmetric key material for transmission using asymmetric (public-key) algorithms. It is commonly used in hybrid cryptosystems. In practice, public key systems are clumsy to use in transmitting long messages. Instead they are often used to exchange symmetric keys, which are relatively short. The symmetric key is then used to encrypt the longer message. The traditional approach to sending a symmetric key with public key systems is to first generate a random symmetric key and then encrypt it using the chosen public key algorithm. The recipient then decrypts the public key message to recover the symmetric key. As the symmetric key is generally short, padding is required for full security and proofs of security for padding schemes are often less than complete. KEMs simplify the process by generating a random element in the finite group underlying the public key system and deriving the symmetric key by hashing that element, eliminating the need for padding.

Selected Areas in Cryptography (SAC) is an international cryptography conference held every August in Canada since 1994. The first workshop was organized by Carlisle Adams, Henk Meijer, Stafford Tavares and Paul van Oorschot. Through 1999, SAC was hosted at either Queen's University or Carleton University, but starting in 2000, locations have ranged across Canada. SAC has featured research presentations on many cryptographic topics, with a traditional focus on the design and analysis of block ciphers. SAC is regarded as a high-quality venue for presenting cryptographic results, and is the only cryptography conference held annually in Canada. Since 2003, SAC has included an invited lecture called the Stafford Tavares Lecture, in honor of one of its original organizers and strongest supporters.

Cryptography, or cryptology, is the practice and study of techniques for secure communication in the presence of adversarial behavior. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security are also central to cryptography. Practical applications of cryptography include electronic commerce, chip-based payment cards, digital currencies, computer passwords, and military communications.

The following outline is provided as an overview of and topical guide to cryptography:

Post-quantum cryptography (PQC), sometimes referred to as quantum-proof, quantum-safe, or quantum-resistant, is the development of cryptographic algorithms that are thought to be secure against a cryptanalytic attack by a quantum computer. The problem with popular algorithms currently used in the market is that their security relies on one of three hard mathematical problems: the integer factorization problem, the discrete logarithm problem or the elliptic-curve discrete logarithm problem. All of these problems could be easily solved on a sufficiently powerful quantum computer running Shor's algorithm or even faster and less demanding alternatives.

Mordechai M. "Moti" Yung is a cryptographer and computer scientist known for his work on cryptovirology and kleptography.

Chaotic cryptology is the application of mathematical chaos theory to the practice of cryptography, the study or techniques used to privately and securely transmit information with the presence of a third-party or adversary. Since first being investigated by Robert Matthews in 1989, the use of chaos in cryptography has attracted much interest. However, long-standing concerns about its security and implementation speed continue to limit its implementation.

References

- T. Okamoto, S. Uchiyama and E. Fujisaki (1999). "EPOC: Efficient Probabilistic Public-Key Encryption Archived 2005-11-30 at the Wayback Machine ", Contribution to IEEE – describes EPOC-1 and EPOC-2.

- T. Okamoto and D. Pointcheval (2000). "EPOC-3: Efficient Probabilistic Public-Key Encryption (Version 2) Archived 2005-11-30 at the Wayback Machine ", Contribution to IEEE – describes EPOC-3.

- T. Okamoto; S. Uchiyama (1998). "A new public-key cryptosystem as secure as factoring". Advances in Cryptology — EUROCRYPT'98. Lecture Notes in Computer Science. Vol. 1403. pp. 308–318. doi:10.1007/BFb0054135. ISBN 978-3-540-64518-4.

{{cite book}}: CS1 maint: multiple names: authors list (link)