In computational complexity theory, bounded-error quantum polynomial time (BQP) is the class of decision problems solvable by a quantum computer in polynomial time, with an error probability of at most 1/3 for all instances. It is the quantum analogue to the complexity class BPP.

In mathematical logic, the Peano axioms, also known as the Dedekind–Peano axioms or the Peano postulates, are axioms for the natural numbers presented by the 19th-century Italian mathematician Giuseppe Peano. These axioms have been used nearly unchanged in a number of metamathematical investigations, including research into fundamental questions of whether number theory is consistent and complete.

In the mathematical discipline of set theory, forcing is a technique for proving consistency and independence results. Intuitively, forcing can be thought of as a technique to expand the set theoretical universe to a larger universe by introducing a new "generic" object .

In mathematics, constructive analysis is mathematical analysis done according to some principles of constructive mathematics.

A formula of the predicate calculus is in prenex normal form (PNF) if it is written as a string of quantifiers and bound variables, called the prefix, followed by a quantifier-free part, called the matrix. Together with the normal forms in propositional logic, it provides a canonical normal form useful in automated theorem proving.

System F is a typed lambda calculus that introduces, to simply typed lambda calculus, a mechanism of universal quantification over types. System F formalizes parametric polymorphism in programming languages, thus forming a theoretical basis for languages such as Haskell and ML. It was discovered independently by logician Jean-Yves Girard (1972) and computer scientist John C. Reynolds.

In mathematics, a symmetric bilinear form on a vector space is a bilinear map from two copies of the vector space to the field of scalars such that the order of the two vectors does not affect the value of the map. In other words, it is a bilinear function that maps every pair of elements of the vector space to the underlying field such that for every and in . They are also referred to more briefly as just symmetric forms when "bilinear" is understood.

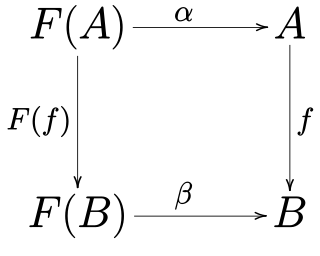

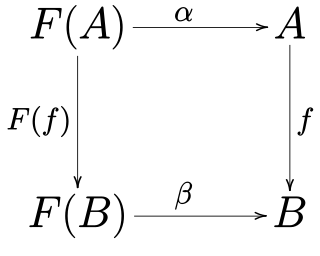

In mathematics, specifically in category theory, F-algebras generalize the notion of algebraic structure. Rewriting the algebraic laws in terms of morphisms eliminates all references to quantified elements from the axioms, and these algebraic laws may then be glued together in terms of a single functor F, the signature.

In set theory, -induction, also called epsilon-induction or set-induction, is a principle that can be used to prove that all sets satisfy a given property. Considered as an axiomatic principle, it is called the axiom schema of set induction.

In the foundations of mathematics, Morse–Kelley set theory (MK), Kelley–Morse set theory (KM), Morse–Tarski set theory (MT), Quine–Morse set theory (QM) or the system of Quine and Morse is a first-order axiomatic set theory that is closely related to von Neumann–Bernays–Gödel set theory (NBG). While von Neumann–Bernays–Gödel set theory restricts the bound variables in the schematic formula appearing in the axiom schema of Class Comprehension to range over sets alone, Morse–Kelley set theory allows these bound variables to range over proper classes as well as sets, as first suggested by Quine in 1940 for his system ML.

In programming languages and type theory, parametric polymorphism allows a single piece of code to be given a "generic" type, using variables in place of actual types, and then instantiated with particular types as needed. Parametrically polymorphic functions and data types are sometimes called generic functions and generic datatypes, respectively, and they form the basis of generic programming.

In mathematical logic, second-order arithmetic is a collection of axiomatic systems that formalize the natural numbers and their subsets. It is an alternative to axiomatic set theory as a foundation for much, but not all, of mathematics.

Axiomatic constructive set theory is an approach to mathematical constructivism following the program of axiomatic set theory. The same first-order language with "" and "" of classical set theory is usually used, so this is not to be confused with a constructive types approach. On the other hand, some constructive theories are indeed motivated by their interpretability in type theories.

In constructive mathematics, Church's thesis is an axiom stating that all total functions are computable functions.

In proof theory, ordinal analysis assigns ordinals to mathematical theories as a measure of their strength. If theories have the same proof-theoretic ordinal they are often equiconsistent, and if one theory has a larger proof-theoretic ordinal than another it can often prove the consistency of the second theory.

In type theory, a system has inductive types if it has facilities for creating a new type from constants and functions that create terms of that type. The feature serves a role similar to data structures in a programming language and allows a type theory to add concepts like numbers, relations, and trees. As the name suggests, inductive types can be self-referential, but usually only in a way that permits structural recursion.

In first-order arithmetic, the induction principles, bounding principles, and least number principles are three related families of first-order principles, which may or may not hold in nonstandard models of arithmetic. These principles are often used in reverse mathematics to calibrate the axiomatic strength of theorems.

In set theory and logic, Buchholz's ID hierarchy is a hierarchy of subsystems of first-order arithmetic. The systems/theories are referred to as "the formal theories of ν-times iterated inductive definitions". IDν extends PA by ν iterated least fixed points of monotone operators.

The finite promise games are a collection of mathematical games developed by American mathematician Harvey Friedman in 2009 which are used to develop a family of fast-growing functions , and . The greedy clique sequence is a graph theory concept, also developed by Friedman in 2010, which are used to develop fast-growing functions , and .