Related Research Articles

In computer science, the time complexity is the computational complexity that describes the amount of time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to differ by at most a constant factor.

In operations research, applied mathematics and theoretical computer science, combinatorial optimization is a topic that consists of finding an optimal object from a finite set of objects. In many such problems, exhaustive search is not tractable. It operates on the domain of those optimization problems in which the set of feasible solutions is discrete or can be reduced to discrete, and in which the goal is to find the best solution. Some common problems involving combinatorial optimization are the travelling salesman problem ("TSP"), the minimum spanning tree problem ("MST"), and the knapsack problem.

The Steiner tree problem, or minimum Steiner tree problem, named after Jakob Steiner, is an umbrella term for a class of problems in combinatorial optimization. While Steiner tree problems may be formulated in a number of settings, they all require an optimal interconnect for a given set of objects and a predefined objective function. One well-known variant, which is often used synonymously with the term Steiner tree problem, is the Steiner tree problem in graphs. Given an undirected graph with non-negative edge weights and a subset of vertices, usually referred to as terminals, the Steiner tree problem in graphs requires a tree of minimum weight that contains all terminals. Further well-known variants are the Euclidean Steiner tree problem and the rectilinear minimum Steiner tree problem.

In graph theory, an independent set, stable set, coclique or anticlique is a set of vertices in a graph, no two of which are adjacent. That is, it is a set of vertices such that for every two vertices in , there is no edge connecting the two. Equivalently, each edge in the graph has at most one endpoint in . The size of an independent set is the number of vertices it contains. Independent sets have also been called internally stable sets.

In the mathematical discipline of graph theory, a vertex cover of a graph is a set of vertices that includes at least one endpoint of every edge of the graph. The problem of finding a minimum vertex cover is a classical optimization problem in computer science and is a typical example of an NP-hard optimization problem that has an approximation algorithm. Its decision version, the vertex cover problem, was one of Karp's 21 NP-complete problems and is therefore a classical NP-complete problem in computational complexity theory. Furthermore, the vertex cover problem is fixed-parameter tractable and a central problem in parameterized complexity theory.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In computer science, parameterized complexity is a branch of computational complexity theory that focuses on classifying computational problems according to their inherent difficulty with respect to multiple parameters of the input or output. The complexity of a problem is then measured as a function of those parameters. This allows the classification of NP-hard problems on a finer scale than in the classical setting, where the complexity of a problem is only measured as a function of the number of bits in the input. The first systematic work on parameterized complexity was done by Downey & Fellows (1999).

In computer science, a polynomial-time approximation scheme (PTAS) is a type of approximation algorithm for optimization problems.

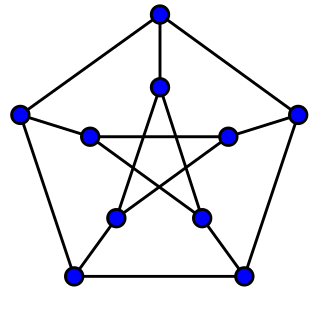

In the mathematical field of graph theory, a cubic graph is a graph in which all vertices have degree three. In other words, a cubic graph is a 3-regular graph. Cubic graphs are also called trivalent graphs.

In graph theory, a dominating set for a graph G = (V, E) is a subset D of V such that every vertex not in D is adjacent to at least one member of D. The domination number γ(G) is the number of vertices in a smallest dominating set for G.

In the mathematical discipline of graph theory, a feedback vertex set of a graph is a set of vertices whose removal leaves a graph without cycles. In other words, each feedback vertex set contains at least one vertex of any cycle in the graph. The feedback vertex set problem is an NP-complete problem in computational complexity theory. It was among the first problems shown to be NP-complete. It has wide applications in operating systems, database systems, and VLSI chip design.

In graph theory, a directed graph may contain directed cycles, a one-way loop of edges. In some applications, such cycles are undesirable, and we wish to eliminate them and obtain a directed acyclic graph (DAG). One way to do this is simply to drop edges from the graph to break the cycles. A feedback arc set (FAS) or feedback edge set is a set of edges which, when removed from the graph, leave a DAG. Put another way, it's a set containing at least one edge of every cycle in the graph.

In computational complexity theory, the class APX is the set of NP optimization problems that allow polynomial-time approximation algorithms with approximation ratio bounded by a constant. In simple terms, problems in this class have efficient algorithms that can find an answer within some fixed multiplicative factor of the optimal answer.

In graph theory, the clique-width of a graph is a parameter that describes the structural complexity of the graph; it is closely related to treewidth, but unlike treewidth it can be bounded even for dense graphs. It is defined as the minimum number of labels needed to construct by means of the following 4 operations :

- Creation of a new vertex v with label i

- Disjoint union of two labeled graphs G and H

- Joining by an edge every vertex labeled i to every vertex labeled j, where

- Renaming label i to label j

In graph theory and theoretical computer science, the longest path problem is the problem of finding a simple path of maximum length in a given graph. A path is called simple if it does not have any repeated vertices; the length of a path may either be measured by its number of edges, or by the sum of the weights of its edges. In contrast to the shortest path problem, which can be solved in polynomial time in graphs without negative-weight cycles, the longest path problem is NP-hard and the decision version of the problem, which asks whether a path exists of at least some given length, is NP-complete. This means that the decision problem cannot be solved in polynomial time for arbitrary graphs unless P = NP. Stronger hardness results are also known showing that it is difficult to approximate. However, it has a linear time solution for directed acyclic graphs, which has important applications in finding the critical path in scheduling problems.

In computational complexity theory, a problem is NP-complete when it can be solved by a restricted class of brute force search algorithms and it can be used to simulate any other problem with a similar algorithm. More precisely, each input to the problem should be associated with a set of solutions of polynomial length, whose validity can be tested quickly, such that the output for any input is "yes" if the solution set is non-empty and "no" if it is empty. The complexity class of problems of this form is called NP, an abbreviation for "nondeterministic polynomial time". A problem is said to be NP-hard if everything in NP can be transformed in polynomial time into it, and a problem is NP-complete if it is both in NP and NP-hard. The NP-complete problems represent the hardest problems in NP. If any NP-complete problem has a polynomial time algorithm, all problems in NP do. The set of NP-complete problems is often denoted by NP-C or NPC.

In computational complexity theory, the exponential time hypothesis is an unproven computational hardness assumption that was formulated by Impagliazzo & Paturi (1999). The hypothesis states that 3-SAT cannot be solved in subexponential time in the worst case. The exponential time hypothesis, if true, would imply that P ≠ NP, but it is a stronger statement. It can be used to show that many computational problems are equivalent in complexity, in the sense that if one of them has a subexponential time algorithm then they all do.

In computer science, iterative compression is an algorithmic technique for the design of fixed-parameter tractable algorithms, in which one element is added to the problem in each step, and a small solution for the problem prior to the addition is used to help find a small solution to the problem after the step.

In graph theory, a branch of mathematics, a chordal completion of a given undirected graph G is a chordal graph, on the same vertex set, that has G as a subgraph. A minimal chordal completion is a chordal completion such that any graph formed by removing an edge would no longer be a chordal completion. A minimum chordal completion is a chordal completion with as few edges as possible.

Fedor V. Fomin is a professor of Computer Science at the University of Bergen. He is known for his work in algorithms and graph theory.

References

- ↑ Fomin, Fedor V.; Kaski, Petteri (March 2013), "Exact Exponential Algorithms", Communications of the ACM , 56 (3): 80–88, doi:10.1145/2428556.2428575 .

- ↑ Fomin, Fedor V.; Kratsch, Dieter (2010). Exact Exponential Algorithms . Springer. p. 203. ISBN 978-3-642-16532-0.