Definitions

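Let $X$ be a universe of discourse, $\mathcal{C}$ be a class of subsets of $X$, and $E, F \in \mathcal{C}$. A function $g : \mathcal{C} \to \mathbb{R}$ where

1. $\emptyset \in \mathcal{C} \Rightarrow g(\emptyset) = 0$
2. $E \subseteq F \Rightarrow g(E) \leq g(F)$

is called a fuzzy measure. A fuzzy measure is called normalized or regular if $g(X) = 1$.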
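On a finite universe, the two defining conditions (zero on the empty set and monotonicity under inclusion) can be checked by brute force. The following is a minimal sketch, assuming the measure is stored as a dictionary keyed by `frozenset` over the full power set; the helper names `powerset`, `is_fuzzy_measure`, and `is_normalized` are illustrative and not taken from any library.

```python
from itertools import combinations

def powerset(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)

def is_fuzzy_measure(g, universe, tol=1e-12):
    """Check g(empty set) = 0 and E <= F implies g(E) <= g(F)."""
    subsets = list(powerset(universe))
    if abs(g[frozenset()]) > tol:
        return False
    # Compare every pair (E, F) with E a subset of F.
    return all(g[e] <= g[f] + tol for e in subsets for f in subsets if e <= f)

def is_normalized(g, universe, tol=1e-12):
    """Check g(X) = 1."""
    return abs(g[frozenset(universe)] - 1.0) <= tol

# Hypothetical example values on a two-element universe.
X = {"a", "b"}
g = {frozenset(): 0.0, frozenset("a"): 0.3, frozenset("b"): 0.5, frozenset("ab"): 1.0}
not_monotone = dict(g)
not_monotone[frozenset("a")] = 1.2  # exceeds g(X), so monotonicity fails

print(is_fuzzy_measure(g, X), is_normalized(g, X), is_fuzzy_measure(not_monotone, X))
```

The pairwise check costs on the order of $4^{|X|}$ comparisons, so it is only practical for small universes.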

In mathematics, fuzzy measure theory considers generalized measures in which the additive property is replaced by the weaker property of monotonicity. The central concept of fuzzy measure theory is the fuzzy measure (also called a capacity, see [1]), which was introduced by Choquet in 1953 and independently defined by Sugeno in 1974 in the context of fuzzy integrals. There exist a number of different classes of fuzzy measures, including plausibility/belief measures, possibility/necessity measures, and probability measures, which are a subset of classical measures.

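A fuzzy measure is:

- additive if for any $E, F \in \mathcal{C}$ such that $E \cap F = \emptyset$, we have $g(E \cup F) = g(E) + g(F)$;
- supermodular if for any $E, F \in \mathcal{C}$, we have $g(E \cup F) + g(E \cap F) \geq g(E) + g(F)$;
- submodular if for any $E, F \in \mathcal{C}$, we have $g(E \cup F) + g(E \cap F) \leq g(E) + g(F)$;
- superadditive if for any $E, F \in \mathcal{C}$ such that $E \cap F = \emptyset$, we have $g(E \cup F) \geq g(E) + g(F)$;
- subadditive if for any $E, F \in \mathcal{C}$ such that $E \cap F = \emptyset$, we have $g(E \cup F) \leq g(E) + g(F)$;
- symmetric if for any $E, F \in \mathcal{C}$, $|E| = |F|$ implies $g(E) = g(F)$;
- Boolean if for any $E \in \mathcal{C}$, we have $g(E) = 0$ or $g(E) = 1$.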

Understanding the properties of fuzzy measures is useful in application. When a fuzzy measure is used to define a function such as the Sugeno integral or Choquet integral, these properties will be crucial in understanding the function's behavior. For instance, the Choquet integral with respect to an additive fuzzy measure reduces to the Lebesgue integral. In discrete cases, a symmetric fuzzy measure will result in the ordered weighted averaging (OWA) operator. Submodular fuzzy measures result in convex functions, while supermodular fuzzy measures result in concave functions when used to define a Choquet integral.
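The additive and symmetric cases can be illustrated numerically. The sketch below assumes the standard discrete form of the Choquet integral for nonnegative inputs, $\sum_i (x_{(i)} - x_{(i-1)})\, g(\{(i), \dots, (n)\})$ with the values sorted increasingly and $x_{(0)} = 0$; the function name `choquet` and all numbers are hypothetical.

```python
from itertools import combinations

def powerset(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)

def choquet(x, g):
    """Discrete Choquet integral of x (element -> nonnegative value) w.r.t. g."""
    order = sorted(x, key=x.get)        # elements by increasing value
    total, previous = 0.0, 0.0
    for pos, element in enumerate(order):
        upper = frozenset(order[pos:])  # elements whose value is >= x[element]
        total += (x[element] - previous) * g[upper]
        previous = x[element]
    return total

X = ("a", "b", "c")
x = {"a": 0.6, "b": 0.1, "c": 0.9}

# Additive measure: the integral should match the weighted arithmetic mean.
weights = {"a": 0.2, "b": 0.3, "c": 0.5}
g_additive = {e: sum(weights[i] for i in e) for e in powerset(X)}
weighted_mean = sum(weights[i] * x[i] for i in X)

# Symmetric measure: g(E) depends only on |E|, giving an OWA operator.
by_size = [0.0, 0.5, 0.8, 1.0]
g_symmetric = {e: by_size[len(e)] for e in powerset(X)}
owa_weights = [by_size[j + 1] - by_size[j] for j in range(len(X))]
owa = sum(w * v for w, v in zip(owa_weights, sorted(x.values(), reverse=True)))

print(choquet(x, g_additive), weighted_mean)
print(choquet(x, g_symmetric), owa)
```

In the symmetric case the OWA weight applied to the $j$-th largest input is the increment of the measure between sets of size $j-1$ and $j$.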

Let $g$ be a fuzzy measure. The Möbius representation of $g$ is given by the set function $M$, where for every $E \subseteq X$,

$$M(E) = \sum_{F \subseteq E} (-1)^{|E \setminus F|} g(F).$$

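The equivalent axioms in Möbius representation are:

1. $M(\emptyset) = 0$.
2. $\sum_{F \subseteq E,\; i \in F} M(F) \geq 0$, for all $E \subseteq X$ and all $i \in E$.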

A fuzzy measure in Möbius representation $M$ is called normalized if

$$\sum_{E \subseteq X} M(E) = 1.$$

The Möbius representation can be used to give an indication of which subsets of $X$ interact with one another. For instance, an additive fuzzy measure has Möbius values all equal to zero except for singletons. The fuzzy measure $g$ in standard representation can be recovered from the Möbius form using the Zeta transform:

$$g(E) = \sum_{F \subseteq E} M(F), \quad \forall E \subseteq X.$$
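Both transforms are finite sums over subsets, so on a small universe they can be written directly. This is a minimal sketch, assuming the alternating-sum form $M(E) = \sum_{F \subseteq E} (-1)^{|E \setminus F|} g(F)$ for the Möbius representation and $g(E) = \sum_{F \subseteq E} M(F)$ for the Zeta transform; the function names and example values are illustrative.

```python
from itertools import combinations

def powerset(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)

def mobius(g, universe):
    """M(E) = sum over F subset of E of (-1)^{|E \\ F|} g(F)."""
    return {e: sum((-1) ** len(e - f) * g[f] for f in powerset(e))
            for e in powerset(universe)}

def zeta(m, universe):
    """g(E) = sum over F subset of E of M(F); inverts `mobius`."""
    return {e: sum(m[f] for f in powerset(e)) for e in powerset(universe)}

# Hypothetical non-additive measure: g({a, b}) exceeds g({a}) + g({b}).
X = frozenset("ab")
g = {frozenset(): 0.0, frozenset("a"): 0.3, frozenset("b"): 0.5, frozenset("ab"): 1.0}

m = mobius(g, X)
recovered = zeta(m, X)
print(m[frozenset("ab")])  # interaction term g(ab) - g(a) - g(b)
```

The value on the pair is the part of $g(\{a, b\})$ not explained by the singletons, which is why it vanishes for additive measures.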

Fuzzy measures are defined on a semiring of sets or monotone class, which may be as granular as the power set of $X$, and even in discrete cases the number of variables can be as large as $2^{|X|}$. For this reason, in the context of multi-criteria decision analysis and other disciplines, simplifying assumptions on the fuzzy measure have been introduced so that it is less computationally expensive to determine and use. For instance, when the fuzzy measure is assumed to be additive, it holds that $g(E) = \sum_{i \in E} g(\{i\})$, and the values of the fuzzy measure can be evaluated from its values on the singletons of $X$. Similarly, a symmetric fuzzy measure is defined uniquely by $|X|$ values. Two important fuzzy measures that can be used are the Sugeno- or $\lambda$-fuzzy measure and $k$-additive measures, introduced by Sugeno [2] and Grabisch [3] respectively.

The Sugeno $\lambda$-measure is a special case of fuzzy measures defined iteratively. It has the following definition:

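Let $X = \{x_1, \dots, x_n\}$ be a finite set and let $\lambda \in (-1, +\infty)$. A Sugeno $\lambda$-measure is a function $g : 2^X \to [0, 1]$ such that

1. $g(X) = 1$.
2. if $A, B \subseteq X$ with $A \cap B = \emptyset$, then $g(A \cup B) = g(A) + g(B) + \lambda\, g(A)\, g(B)$.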

As a convention, the value of $g$ at a singleton set $\{x_i\}$ is called a density and is denoted by $g_i = g(\{x_i\})$. In addition, $\lambda$ satisfies the property

$$\lambda + 1 = \prod_{i=1}^{n} (1 + \lambda g_i).$$

Tahani and Keller [4] as well as Wang and Klir have shown that once the densities are known, it is possible to use the previous polynomial to obtain the value of $\lambda$ uniquely.
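To make the role of the densities concrete, the sketch below finds the nonzero root $\lambda > -1$ of $\lambda + 1 = \prod_i (1 + \lambda g_i)$ by bisection and then evaluates the measure on any subset with the closed form $g(A) = \big(\prod_{i \in A} (1 + \lambda g_i) - 1\big) / \lambda$, which follows from applying the $\lambda$-rule repeatedly. It assumes at least two densities, each strictly between 0 and 1; the function names and density values are hypothetical, and bisection is one convenient choice of root finder rather than the method used in the cited works.

```python
import math

def solve_lambda(densities, iterations=200):
    """Root lambda > -1 of prod(1 + lambda*g_i) = 1 + lambda (0 if densities sum to 1)."""
    values = list(densities)
    if len(values) < 2 or not all(0.0 < v < 1.0 for v in values):
        raise ValueError("sketch assumes at least two densities in (0, 1)")
    total = sum(values)
    if abs(total - 1.0) < 1e-12:
        return 0.0  # additive case

    def f(lam):
        return math.prod(1.0 + lam * v for v in values) - (1.0 + lam)

    if total < 1.0:
        # f < 0 just right of 0 and f > 0 for large lambda: root is positive.
        lo, hi = 0.0, 1.0
        while f(hi) <= 0.0:
            hi *= 2.0
        below_root = lambda lam: f(lam) < 0.0
    else:
        # f > 0 near -1 and f < 0 just left of 0: root lies in (-1, 0).
        lo, hi = -1.0, 0.0
        below_root = lambda lam: f(lam) > 0.0
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if below_root(mid):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def sugeno_measure(densities, subset, lam):
    """g(A) for a Sugeno lambda-measure with the given densities (dict element -> g_i)."""
    if lam == 0.0:
        return sum(densities[i] for i in subset)
    return (math.prod(1.0 + lam * densities[i] for i in subset) - 1.0) / lam

densities = {"a": 0.2, "b": 0.3, "c": 0.1}  # hypothetical densities, sum < 1
lam = solve_lambda(densities.values())
g_ab = sugeno_measure(densities, {"a", "b"}, lam)
g_all = sugeno_measure(densities, densities.keys(), lam)
print(lam, g_ab, g_all)
```

When the densities sum to less than 1 the root is positive, and when they sum to more than 1 it lies in $(-1, 0)$; in both cases the full set receives measure 1 by construction.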

The $k$-additive fuzzy measure limits the interaction between the subsets $E \subseteq X$ to size $|E| = k$. This drastically reduces the number of variables needed to define the fuzzy measure, and as $k$ can be anything from 1 (in which case the fuzzy measure is additive) to $|X|$, it allows for a compromise between modelling ability and simplicity.

A discrete fuzzy measure $g$ on a set $X$ is called $k$-additive ($1 \leq k \leq |X|$) if its Möbius representation verifies $M(E) = 0$ whenever $|E| > k$ for any $E \subseteq X$, and there exists a subset $F$ with $k$ elements such that $M(F) \neq 0$.
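The definition suggests a direct test: compute the Möbius values and take the largest subset size on which one is nonzero. The sketch below does this, assuming the alternating-sum Möbius formula $M(E) = \sum_{F \subseteq E} (-1)^{|E \setminus F|} g(F)$; `additivity_order` and `parameter_count` are hypothetical helper names, and the example measure is built from made-up Möbius values supported on sets of size at most 2.

```python
from itertools import combinations
from math import comb

def powerset(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)

def mobius(g, universe):
    """M(E) = sum over F subset of E of (-1)^{|E \\ F|} g(F)."""
    return {e: sum((-1) ** len(e - f) * g[f] for f in powerset(e))
            for e in powerset(universe)}

def additivity_order(g, universe, tol=1e-9):
    """Largest |E| with M(E) != 0, i.e. the k for which g is k-additive."""
    m = mobius(g, universe)
    return max((len(e) for e, value in m.items() if abs(value) > tol), default=0)

def parameter_count(n, k):
    """Number of nonempty subsets of size at most k (Möbius values that may be nonzero)."""
    return sum(comb(n, j) for j in range(1, k + 1))

X = frozenset("abc")
# Hypothetical Möbius values: singletons plus one pairwise interaction.
m_values = {frozenset("a"): 0.2, frozenset("b"): 0.3,
            frozenset("c"): 0.3, frozenset("ab"): 0.2}
g = {e: sum(v for f, v in m_values.items() if f <= e) for e in powerset(X)}

print(additivity_order(g, X))                           # order of the example measure
print(parameter_count(10, 2), parameter_count(10, 10))  # 2-additive vs. general, n = 10
```

For ten elements, a 2-additive measure needs 55 Möbius values instead of the 1023 required in general, which is the reduction in variables described above.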

In game theory, the Shapley value or Shapley index is used to indicate the weight of each player in a game. Shapley values can be calculated for fuzzy measures in order to give some indication of the importance of each singleton. In the case of additive fuzzy measures, the Shapley index of each element equals the measure of its singleton.

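For a given fuzzy measure $g$, and $|X| = n$, the Shapley index for every $i \in X$ is:

$$\phi(i) = \sum_{E \subseteq X \setminus \{i\}} \frac{(n - |E| - 1)!\, |E|!}{n!} \left[ g(E \cup \{i\}) - g(E) \right].$$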

The Shapley value is the vector $\phi(g) = (\phi(1), \dots, \phi(n))$.
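The Shapley index is a weighted average of the marginal contributions $g(E \cup \{i\}) - g(E)$ over all subsets $E$ not containing $i$, with weights $(n - |E| - 1)!\,|E|!/n!$. A minimal sketch of that computation follows, assuming a measure stored as a dictionary over the full power set; the function name `shapley` and the example values are illustrative.

```python
from itertools import combinations
from math import factorial

def powerset(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)

def shapley(g, universe):
    """Shapley index of every element of `universe` for the fuzzy measure g."""
    universe = frozenset(universe)
    n = len(universe)
    phi = {}
    for i in universe:
        rest = universe - {i}
        phi[i] = sum(
            factorial(n - len(e) - 1) * factorial(len(e)) / factorial(n)
            * (g[e | {i}] - g[e])  # marginal contribution of i to E
            for e in powerset(rest)
        )
    return phi

# Hypothetical normalized measure with positive interaction between a and b.
g = {frozenset(): 0.0, frozenset("a"): 0.3, frozenset("b"): 0.5, frozenset("ab"): 1.0}
phi = shapley(g, "ab")

# Additive measure: each index should equal the measure of the singleton.
g_add = {frozenset(): 0.0, frozenset("a"): 0.3, frozenset("b"): 0.7, frozenset("ab"): 1.0}
phi_add = shapley(g_add, "ab")
print(phi, phi_add)
```

For a normalized measure the indices sum to 1, so they can be read as a distribution of overall importance across the elements.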

In measure theory, a branch of mathematics, the Lebesgue measure, named after French mathematician Henri Lebesgue, is the standard way of assigning a measure to subsets of n-dimensional Euclidean space. For n = 1, 2, or 3, it coincides with the standard measure of length, area, or volume. In general, it is also called n-dimensional volume, n-volume, or simply volume. It is used throughout real analysis, in particular to define Lebesgue integration. Sets that can be assigned a Lebesgue measure are called Lebesgue-measurable; the measure of the Lebesgue-measurable set A is here denoted by λ(A).

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory, integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In mathematics, a quadric or quadric surface (quadric hypersurface in higher dimensions), is a generalization of conic sections (ellipses, parabolas, and hyperbolas). It is a hypersurface (of dimension D) in a (D + 1)-dimensional space, and it is defined as the zero set of an irreducible polynomial of degree two in D + 1 variables; for example, D = 1 in the case of conic sections. When the defining polynomial is not absolutely irreducible, the zero set is generally not considered a quadric, although it is often called a degenerate quadric or a reducible quadric.

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints. It is named after the mathematician Joseph-Louis Lagrange.

In mathematics, a self-adjoint operator on an infinite-dimensional complex vector space V with inner product is a linear map A that is its own adjoint. If V is finite-dimensional with a given orthonormal basis, this is equivalent to the condition that the matrix of A is a Hermitian matrix, i.e., equal to its conjugate transpose A∗. By the finite-dimensional spectral theorem, V has an orthonormal basis such that the matrix of A relative to this basis is a diagonal matrix with entries in the real numbers. This article deals with applying generalizations of this concept to operators on Hilbert spaces of arbitrary dimension.

In mathematics, an indicator function or a characteristic function of a subset of a set is a function that maps elements of the subset to one, and all other elements to zero. That is, if A is a subset of some set X, then if and otherwise, where is a common notation for the indicator function. Other common notations are and

In topology and related branches of mathematics, the Kuratowski closure axioms are a set of axioms that can be used to define a topological structure on a set. They are equivalent to the more commonly used open set definition. They were first formalized by Kazimierz Kuratowski, and the idea was further studied by mathematicians such as Wacław Sierpiński and António Monteiro, among others.

In functional analysis, a branch of mathematics, a compact operator is a linear operator , where are normed vector spaces, with the property that maps bounded subsets of to relatively compact subsets of . Such an operator is necessarily a bounded operator, and so continuous. Some authors require that are Banach, but the definition can be extended to more general spaces.

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

The isothermal–isobaric ensemble is a statistical mechanical ensemble that maintains constant temperature and constant pressure applied. It is also called the -ensemble, where the number of particles is also kept as a constant. This ensemble plays an important role in chemistry as chemical reactions are usually carried out under constant pressure condition. The NPT ensemble is also useful for measuring the equation of state of model systems whose virial expansion for pressure cannot be evaluated, or systems near first-order phase transitions.

In the fields of actuarial science and financial economics there are a number of ways that risk can be defined; to clarify the concept theoreticians have described a number of properties that a risk measure might or might not have. A coherent risk measure is a function that satisfies properties of monotonicity, sub-additivity, homogeneity, and translational invariance.

In mathematics, in particular in measure theory, a content is a real-valued function defined on a collection of subsets such that

In mathematics, the Vitali covering lemma is a combinatorial and geometric result commonly used in measure theory of Euclidean spaces. This lemma is an intermediate step, of independent interest, in the proof of the Vitali covering theorem. The covering theorem is credited to the Italian mathematician Giuseppe Vitali. The theorem states that it is possible to cover, up to a Lebesgue-negligible set, a given subset E of Rd by a disjoint family extracted from a Vitali covering of E.

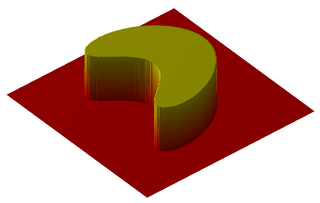

A Choquet integral is a subadditive or superadditive integral created by the French mathematician Gustave Choquet in 1953. It was initially used in statistical mechanics and potential theory, but found its way into decision theory in the 1980s, where it is used as a way of measuring the expected utility of an uncertain event. It is applied specifically to membership functions and capacities. In imprecise probability theory, the Choquet integral is also used to calculate the lower expectation induced by a 2-monotone lower probability, or the upper expectation induced by a 2-alternating upper probability.

In probability theory, a Markov kernel is a map that in the general theory of Markov processes plays the role that the transition matrix does in the theory of Markov processes with a finite state space.

In mathematics, especially measure theory, a set function is a function whose domain is a family of subsets of some given set and that (usually) takes its values in the extended real number line which consists of the real numbers and

Within bayesian statistics for machine learning, kernel methods arise from the assumption of an inner product space or similarity structure on inputs. For some such methods, such as support vector machines (SVMs), the original formulation and its regularization were not Bayesian in nature. It is helpful to understand them from a Bayesian perspective. Because the kernels are not necessarily positive semidefinite, the underlying structure may not be inner product spaces, but instead more general reproducing kernel Hilbert spaces. In Bayesian probability kernel methods are a key component of Gaussian processes, where the kernel function is known as the covariance function. Kernel methods have traditionally been used in supervised learning problems where the input space is usually a space of vectors while the output space is a space of scalars. More recently these methods have been extended to problems that deal with multiple outputs such as in multi-task learning.

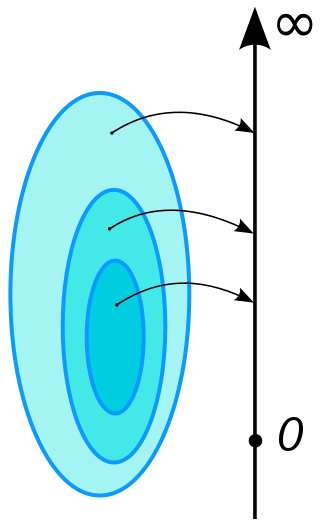

In mathematics, the Sugeno integral, named after M. Sugeno, is a type of integral with respect to a fuzzy measure.

In mathematics, a polyadic space is a topological space that is the image under a continuous function of a topological power of an Alexandroff one-point compactification of a discrete space.

Tau functions are an important ingredient in the modern mathematical theory of integrable systems, and have numerous applications in a variety of other domains. They were originally introduced by Ryogo Hirota in his direct method approach to soliton equations, based on expressing them in an equivalent bilinear form.