An F-test is any statistical test used to compare the variances of two samples or the ratio of variances between multiple samples. The test statistic, a random variable F, follows an F-distribution under the null hypothesis when the customary assumptions about the error term (ε) hold. It is most often used when comparing statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled. Exact "F-tests" mainly arise when the models have been fitted to the data using least squares. The name was coined by George W. Snedecor, in honour of Ronald Fisher, who initially developed the statistic as the variance ratio in the 1920s.
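
As a minimal sketch of the two-sample case, the variance ratio can be computed with the Python standard library alone. The function name `variance_ratio_f` and the data are made up for illustration. The sketch stops at the statistic and its degrees of freedom; obtaining a p-value would require the F-distribution, for example from a statistics library.

```python
from statistics import variance


def variance_ratio_f(sample_a, sample_b):
    """Return (F, df_num, df_den) for the ratio of two sample variances."""
    # statistics.variance uses the unbiased (n - 1) denominator.
    f_stat = variance(sample_a) / variance(sample_b)
    return f_stat, len(sample_a) - 1, len(sample_b) - 1


# Hypothetical data, chosen only so the arithmetic is easy to follow.
group_a = [1.0, 2.0, 3.0, 4.0, 5.0]    # sample variance 2.5
group_b = [2.0, 4.0, 6.0, 8.0, 10.0]   # sample variance 10.0

f_stat, df_num, df_den = variance_ratio_f(group_a, group_b)
print(f_stat, df_num, df_den)  # 0.25 4 4
```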

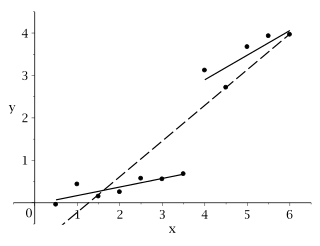

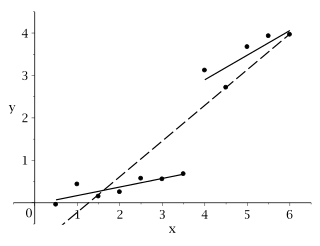

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value". The error of an observation is the deviation of the observed value from the true value of a quantity of interest. The residual is the difference between the observed value and the estimated value of the quantity of interest. The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances.
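
The distinction can be illustrated with a small sketch. It assumes, purely for demonstration, that the true mean is known (`TRUE_MEAN` is a hypothetical value; in practice it is unobservable). Errors are measured against that true value, residuals against the sample mean, and only the residuals are forced to sum to zero.

```python
from statistics import mean

TRUE_MEAN = 2.0  # assumed known only for illustration
observations = [2.1, 1.9, 2.4, 2.2]  # made-up sample

sample_mean = mean(observations)

# Error: deviation from the (normally unknown) true value.
errors = [x - TRUE_MEAN for x in observations]
# Residual: deviation from the estimate computed from the sample itself.
residuals = [x - sample_mean for x in observations]

print(sum(errors))     # generally non-zero
print(sum(residuals))  # zero up to floating-point rounding
```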

Econometrica is a peer-reviewed academic journal of economics, publishing articles in many areas of economics, especially econometrics. It is published by Wiley-Blackwell on behalf of the Econometric Society. The current editor-in-chief is Guido Imbens.

In econometrics, the autoregressive conditional heteroskedasticity (ARCH) model is a statistical model for time series data that describes the variance of the current error term or innovation as a function of the actual sizes of the previous time periods' error terms; often the variance is related to the squares of the previous innovations. The ARCH model is appropriate when the error variance in a time series follows an autoregressive (AR) model; if an autoregressive moving average (ARMA) model is assumed for the error variance, the model is a generalized autoregressive conditional heteroskedasticity (GARCH) model.
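
A minimal simulation sketch of the simplest case, ARCH(1), may help. Here the conditional variance is `alpha0 + alpha1 * eps[t-1]**2`, and each innovation is that variance's square root times a standard normal draw. The function name, parameter values, and seed are assumptions chosen for illustration.

```python
import random


def simulate_arch1(n, alpha0, alpha1, seed=0):
    """Simulate n innovations from an ARCH(1) process.

    Returns (eps, sigma2): the innovations and their conditional variances.
    """
    rng = random.Random(seed)
    eps, sigma2 = [], []
    prev_eps = 0.0  # start-up value; an arbitrary choice for this sketch
    for _ in range(n):
        # The variance depends on the square of the previous innovation.
        var_t = alpha0 + alpha1 * prev_eps ** 2
        shock = (var_t ** 0.5) * rng.gauss(0.0, 1.0)
        sigma2.append(var_t)
        eps.append(shock)
        prev_eps = shock
    return eps, sigma2


eps, sigma2 = simulate_arch1(n=500, alpha0=0.2, alpha1=0.5)
print(min(sigma2), max(sigma2))
```

Large shocks raise the next period's variance, which is what produces the volatility clustering the model is meant to capture.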

Robert Fry Engle III is an American economist and statistician. He won the 2003 Nobel Memorial Prize in Economic Sciences, sharing the award with Clive Granger, "for methods of analyzing economic time series with time-varying volatility (ARCH)".

In statistics, the Breusch–Pagan test, developed in 1979 by Trevor Breusch and Adrian Pagan, is used to test for heteroskedasticity in a linear regression model. It was independently suggested with some extension by R. Dennis Cook and Sanford Weisberg in 1983. Derived from the Lagrange multiplier test principle, it tests whether the variance of the errors from a regression is dependent on the values of the independent variables. In that case, heteroskedasticity is present.
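
The idea can be sketched for a single regressor using the studentized (Koenker) variant of the statistic, LM = n·R², where R² comes from an auxiliary regression of the squared residuals on the regressor. This is an illustrative sketch with made-up data and hypothetical helper names, not a full implementation. Under homoskedasticity the statistic is compared with a chi-squared distribution with one degree of freedom (5% critical value about 3.84).

```python
def ols_simple(x, y):
    """Fit y = a + b*x by least squares; return (a, b)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def r_squared(x, y):
    """Coefficient of determination of the simple regression of y on x."""
    a, b = ols_simple(x, y)
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def breusch_pagan_lm(x, y):
    """Studentized LM statistic: n * R^2 of squared residuals on x."""
    a, b = ols_simple(x, y)
    sq_resid = [(yi - a - b * xi) ** 2 for xi, yi in zip(x, y)]
    return len(x) * r_squared(x, sq_resid)


# Made-up data whose noise amplitude grows with x (heteroskedastic by design).
x = [float(i) for i in range(1, 21)]
y = [2.0 + 3.0 * xi + 0.5 * xi * (-1) ** i for i, xi in enumerate(x)]

lm = breusch_pagan_lm(x, y)
print(lm)
```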

In statistics, generalized least squares (GLS) is a method used to estimate the unknown parameters in a linear regression model. It is used when there is a non-zero amount of correlation between the residuals in the regression model. GLS is employed to improve statistical efficiency and reduce the risk of drawing erroneous inferences, as compared to conventional least squares and weighted least squares methods. It was first described by Alexander Aitken in 1935.

The White test is a statistical test of whether the variance of the errors in a regression model is constant, that is, whether the errors are homoskedastic.

In statistics, the Durbin–Watson statistic is a test statistic used to detect the presence of autocorrelation at lag 1 in the residuals from a regression analysis. It is named after James Durbin and Geoffrey Watson. The small-sample distribution of this ratio was derived by John von Neumann. Durbin and Watson applied this statistic to the residuals from least squares regressions, and developed bounds tests for the null hypothesis that the errors are serially uncorrelated against the alternative that they follow a first-order autoregressive process. Note that the distribution of this test statistic does not depend on the estimated regression coefficients or the variance of the errors.
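
The statistic itself is simple to compute: it is the sum of squared successive differences of the residuals divided by the sum of squared residuals. The sketch below uses two made-up residual sequences. Values near 2 suggest no lag-1 autocorrelation, values towards 0 suggest positive autocorrelation, and values towards 4 suggest negative autocorrelation.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for a sequence of regression residuals."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2
        for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Perfectly alternating residuals: strong negative autocorrelation.
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))  # 3.0
# Constant residuals: strong positive autocorrelation.
print(durbin_watson([1.0, 1.0, 1.0, 1.0]))    # 0.0
```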

The topic of heteroskedasticity-consistent (HC) standard errors arises in statistics and econometrics in the context of linear regression and time series analysis. These are also known as heteroskedasticity-robust standard errors or Eicker–Huber–White standard errors, in recognition of the contributions of Friedhelm Eicker, Peter J. Huber, and Halbert White.
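
For a regression with an intercept and one regressor, the basic (HC0) robust variance of the slope reduces to a closed form that can be set beside the classical formula. The sketch below is an illustration under that single-regressor assumption, with made-up data and a hypothetical function name. It is not a general matrix implementation.

```python
def slope_standard_errors(x, y):
    """Return (slope, classical_se, hc0_se) for y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    dx = [xi - x_bar for xi in x]
    sxx = sum(d * d for d in dx)
    slope = sum(d * (yi - y_bar) for d, yi in zip(dx, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]

    # Classical formula: assumes one common error variance.
    classical_var = sum(e * e for e in resid) / (n - 2) / sxx
    # HC0 formula: each squared residual is weighted by its own leverage term.
    hc0_var = sum(d * d * e * e for d, e in zip(dx, resid)) / sxx ** 2
    return slope, classical_var ** 0.5, hc0_var ** 0.5


# Made-up data whose noise amplitude grows with x.
x = [float(i) for i in range(1, 21)]
y = [2.0 + 3.0 * xi + 0.5 * xi * (-1) ** i for i, xi in enumerate(x)]

slope, classical_se, hc0_se = slope_standard_errors(x, y)
print(slope, classical_se, hc0_se)
```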

In econometrics and statistics, a structural break is an unexpected change over time in the parameters of regression models, which can lead to huge forecasting errors and unreliability of the model in general. This issue was popularised by David Hendry, who argued that lack of stability of coefficients frequently caused forecast failure, and that structural stability should therefore be tested routinely. Structural stability, i.e., the time-invariance of regression coefficients, is a central issue in all applications of linear regression models.

In statistics, the Breusch–Godfrey test is used to assess the validity of some of the modelling assumptions inherent in applying regression-like models to observed data series. In particular, it tests for the presence of serial correlation that has not been included in a proposed model structure and which, if present, would mean that incorrect conclusions would be drawn from other tests or that sub-optimal estimates of model parameters would be obtained.

In statistics, the Goldfeld–Quandt test checks for heteroscedasticity in regression analyses. It does this by dividing a dataset into two parts or groups, and hence the test is sometimes called a two-group test. The Goldfeld–Quandt test is one of two tests proposed in a 1965 paper by Stephen Goldfeld and Richard Quandt. Both a parametric and nonparametric test are described in the paper, but the term "Goldfeld–Quandt test" is usually associated only with the former.
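
A minimal sketch of the parametric version follows: order the observations by the regressor, split them into two groups, fit a separate least squares line to each, and form the ratio of the residual variances. The helper names and data are made up for illustration. The sketch splits the sample exactly in half, although in practice some central observations are often omitted.

```python
def residual_sum_of_squares(x, y):
    """Fit y = a + b*x by least squares and return the residual sum of squares."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    return sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))


def goldfeld_quandt_f(x, y):
    """Return (F, df_num, df_den) comparing high-x and low-x residual variances."""
    pairs = sorted(zip(x, y))          # order observations by the regressor
    half = len(pairs) // 2
    low, high = pairs[:half], pairs[-half:]
    df = half - 2                      # two estimated coefficients per group
    rss_low = residual_sum_of_squares(*zip(*low))
    rss_high = residual_sum_of_squares(*zip(*high))
    return (rss_high / df) / (rss_low / df), df, df


# Made-up data whose noise amplitude grows with x.
x = [float(i) for i in range(1, 21)]
y = [2.0 + 3.0 * xi + 0.5 * xi * (-1) ** i for i, xi in enumerate(x)]

f_stat, df_num, df_den = goldfeld_quandt_f(x, y)
print(f_stat, df_num, df_den)
```

A ratio well above 1 indicates a larger error variance in the high-x group; the formal decision compares the ratio with an F-distribution on the stated degrees of freedom.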

A Newey–West estimator is used in statistics and econometrics to provide an estimate of the covariance matrix of the parameters of a regression-type model where the standard assumptions of regression analysis do not apply. It was devised by Whitney K. Newey and Kenneth D. West in 1987, although there are a number of later variants. The estimator is used to try to overcome autocorrelation and heteroskedasticity in the error terms of the models, often for regressions applied to time series data. The abbreviation "HAC," sometimes used for the estimator, stands for "heteroskedasticity and autocorrelation consistent." There are a number of HAC estimators, and the term "HAC estimator" does not refer uniquely to Newey–West. One version, Newey–West with the Bartlett kernel, requires the user to specify the bandwidth and the use of the Bartlett kernel known from kernel density estimation.
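
The role of the Bartlett kernel and the bandwidth can be sketched in the scalar case, where the estimator gives the long-run variance of a single series: the lag-0 autocovariance plus twice the weighted autocovariances up to the chosen lag, with weights that decline linearly. The function names are hypothetical. The full estimator applies the same weights to matrices built from regressors and residuals.

```python
def bartlett_weights(max_lag):
    """Bartlett kernel weights w_j = 1 - j / (max_lag + 1) for j = 1..max_lag."""
    return [1.0 - j / (max_lag + 1) for j in range(1, max_lag + 1)]


def newey_west_long_run_variance(series, max_lag):
    """Bartlett-weighted long-run variance of a scalar series."""
    n = len(series)
    mean = sum(series) / n
    u = [v - mean for v in series]

    def autocov(j):
        # Sample autocovariance at lag j (divisor n).
        return sum(u[t] * u[t - j] for t in range(j, n)) / n

    lrv = autocov(0)
    for j, w in zip(range(1, max_lag + 1), bartlett_weights(max_lag)):
        lrv += 2.0 * w * autocov(j)
    return lrv


print(bartlett_weights(2))
# Alternating series: negative lag-1 autocovariance lowers the estimate.
print(newey_west_long_run_variance([1.0, -1.0, 1.0, -1.0], max_lag=1))  # 0.25
```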

The following outline is provided as an overview of and topical guide to regression analysis:

Anil K. Bera is an Indian-American econometrician. He is Professor of Economics in the Department of Economics at the University of Illinois at Urbana–Champaign. He is most noted for his work with Carlos Jarque on the Jarque–Bera test.

In econometrics, the Park test is a test for heteroscedasticity. The test is based on the method proposed by Rolla Edward Park for estimating linear regression parameters in the presence of heteroscedastic error terms.

In statistics, the Glejser test for heteroscedasticity, developed in 1969 by Herbert Glejser, regresses the absolute values of the residuals on the explanatory variable that is thought to be related to the heteroscedastic variance. After it was found not to be asymptotically valid under asymmetric disturbances, improvements were independently suggested by Im, and by Machado and Santos Silva.
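
A minimal sketch of the auxiliary regression, assuming the common form in which the absolute residuals are regressed on the regressor itself. The data and the helper name are made up, and the sketch stops at the auxiliary slope; the formal test examines whether that slope is statistically significant.

```python
def ols_simple(x, y):
    """Fit y = a + b*x by least squares; return (a, b)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - b * x_bar, b


# Made-up data whose noise amplitude grows with x.
x = [float(i) for i in range(1, 21)]
y = [2.0 + 3.0 * xi + 0.5 * xi * (-1) ** i for i, xi in enumerate(x)]

# Step 1: residuals from the original regression.
a, b = ols_simple(x, y)
abs_resid = [abs(yi - a - b * xi) for xi, yi in zip(x, y)]

# Step 2: auxiliary regression of |residual| on x.
g_intercept, g_slope = ols_simple(x, abs_resid)
print(g_slope)  # a clearly positive slope points to variance rising with x
```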

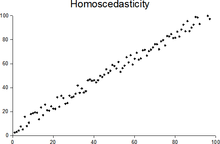

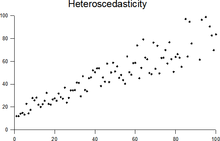

In statistics, a sequence of random variables is homoscedastic if all its random variables have the same finite variance; this is also known as homogeneity of variance. The complementary notion is called heteroscedasticity, also known as heterogeneity of variance. The spellings homoskedasticity and heteroskedasticity are also frequently used. Assuming a variable is homoscedastic when in reality it is heteroscedastic results in unbiased but inefficient point estimates and in biased estimates of standard errors, and may result in overestimating the goodness of fit as measured by the Pearson coefficient.