Optical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene photo, or subtitle text superimposed on an image.

Handwriting recognition (HWR), also known as handwritten text recognition (HTR), is the ability of a computer to receive and interpret intelligible handwritten input from sources such as paper documents, photographs, touch-screens and other devices. The image of the written text may be sensed "off line" from a piece of paper by optical scanning or intelligent word recognition. Alternatively, the movements of the pen tip may be sensed "on line", for example by a pen-based computer screen surface, which is generally an easier task because more clues are available. A handwriting recognition system handles formatting, performs correct segmentation into characters, and finds the most plausible words.

In computer vision or natural language processing, document layout analysis is the process of identifying and categorizing the regions of interest in the scanned image of a text document. A reading system requires the segmentation of text zones from non-textual ones and the arrangement in their correct reading order. Detection and labeling of the different zones as text body, illustrations, math symbols, and tables embedded in a document is called geometric layout analysis. But text zones play different logical roles inside the document and this kind of semantic labeling is the scope of the logical layout analysis.
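The two steps described above (separating text zones from non-text zones, then arranging them in reading order) can be illustrated with a deliberately naive sketch. The `Zone` class, its fields and the top-to-bottom, left-to-right ordering rule are assumptions made for illustration; they suit a simple single-column page and are not how any particular layout-analysis system works.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Zone:
    """A hypothetical labelled region produced by geometric layout analysis."""
    label: str   # e.g. "text", "illustration", "table"
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    width: int
    height: int


def reading_order(zones: List[Zone]) -> List[Zone]:
    """Keep only text zones and sort them top-to-bottom, then left-to-right.

    This is a naive rule that assumes a single-column page.
    """
    text_zones = [z for z in zones if z.label == "text"]
    return sorted(text_zones, key=lambda z: (z.y, z.x))


zones = [
    Zone("text", 50, 400, 500, 200),          # body paragraph
    Zone("illustration", 50, 150, 500, 200),  # figure, not text
    Zone("text", 50, 50, 500, 60),            # title line
]
ordered = reading_order(zones)
print([(z.x, z.y) for z in ordered])
```

Multi-column pages, marginalia and rotated text would need a more elaborate ordering rule than a plain coordinate sort.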

Document processing is a field of research and a set of production processes aimed at making an analog document digital. Document processing does not simply aim to photograph or scan a document to obtain a digital image, but also to make it digitally intelligible. This includes extracting the structure of the document or the layout and then the content, which can take the form of text or images. The process can involve traditional computer vision algorithms, convolutional neural networks or manual labor. The problems addressed are related to semantic segmentation, object detection, optical character recognition (OCR), handwritten text recognition (HTR) and, more broadly, transcription, whether automatic or not. The term can also include the phase of digitizing the document using a scanner and the phase of interpreting the document, for example using natural language processing (NLP) or image classification technologies. It is applied in many industrial and scientific fields for the optimization of administrative processes, mail processing and the digitization of analog archives and historical documents.

In handwriting research, the concept of a stroke is used in various ways. In engineering and computer science, there is a tendency to use the term stroke for a single connected component of ink or a complete pen-down trace. Thus, such a stroke may be a complete character or a part of a character. However, under this definition, a complete word written as connected cursive script should also be called a stroke. This conflicts with the suggested unitary nature of a stroke as a relatively simple shape.
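The pen-down-trace definition can be made concrete with a small sketch. It assumes on-line input arrives as a list of `(x, y, pen_down)` samples; that sample format and the function name `split_into_strokes` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float, bool]   # (x, y, pen_down), an assumed format
Point = Tuple[float, float]


def split_into_strokes(samples: List[Sample]) -> List[List[Point]]:
    """Group consecutive pen-down samples into strokes.

    A stroke here is one complete pen-down trace: it starts when the pen
    touches the surface and ends when the pen is lifted.
    """
    strokes: List[List[Point]] = []
    current: List[Point] = []
    for x, y, pen_down in samples:
        if pen_down:
            current.append((x, y))
        elif current:
            # Pen lifted: close the trace collected so far.
            strokes.append(current)
            current = []
    if current:
        strokes.append(current)
    return strokes


samples = [
    (0, 0, True), (1, 1, True), (2, 0, True),  # first trace
    (2, 0, False),                             # pen lifted
    (3, 0, True), (3, 2, True),                # second trace
]
strokes = split_into_strokes(samples)
print(len(strokes))  # 2
```

Under this definition, a cursive word written without lifting the pen produces a single stroke regardless of how many characters it contains, which is exactly the conflict noted above.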

Optical music recognition (OMR) is a field of research that investigates how to computationally read musical notation in documents. The goal of OMR is to teach the computer to read and interpret sheet music and produce a machine-readable version of the written music score. Once captured digitally, the music can be saved in commonly used file formats, e.g. MIDI and MusicXML. In the past it has, misleadingly, also been called "music optical character recognition". Due to significant differences, this term should no longer be used.

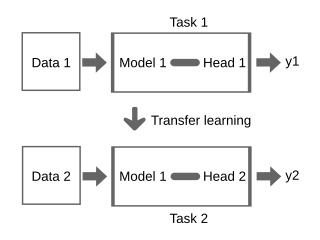

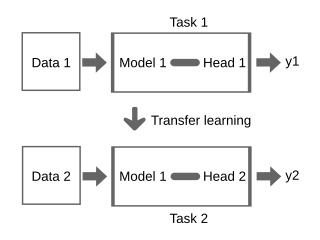

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is re-used in order to boost performance on a related task. For example, for image classification, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This topic is related to the psychological literature on transfer of learning, although practical ties between the two fields are limited. Reusing/transferring information from previously learned tasks to new tasks has the potential to significantly improve learning efficiency.

OCRopus is a free document analysis and optical character recognition (OCR) system released under the Apache License v2.0 with a very modular design using command-line interfaces.

FADO is a European image-archiving system that was set up to help combat illegal immigration and organised crime. It was established by a Joint Action of the Council of the European Union enacted in 1998.

Sargur Narasimhamurthy Srihari was an Indian and American computer scientist and educator who made contributions to the field of pattern recognition. The principal impact of his work has been in handwritten address reading systems and in computer forensics. He was a SUNY Distinguished Professor in the School of Engineering and Applied Sciences at the University at Buffalo, Buffalo, New York, USA.

Text, Speech and Dialogue (TSD) is an annual conference involving topics on natural language processing and computational linguistics. The meeting is held every September alternating in Brno and Plzeň, Czech Republic.

Handwritten biometric recognition is the process of identifying the author of a given text from the handwriting style. Handwritten biometric recognition belongs to behavioural biometric systems because it is based on something that the user has learned to do.

In natural language processing, entity linking, also referred to as named-entity linking (NEL), named-entity disambiguation (NED), named-entity recognition and disambiguation (NERD) or named-entity normalization (NEN), is the task of assigning a unique identity to entities mentioned in text. For example, given the sentence "Paris is the capital of France", the idea is to determine that "Paris" refers to the city of Paris and not to Paris Hilton or any other entity that could be referred to as "Paris". Entity linking differs from named-entity recognition (NER) in that NER identifies the occurrence of a named entity in text but does not identify which specific entity it is.

The MNIST database is a large database of handwritten digits that is commonly used for training various image processing systems. The database is also widely used for training and testing in the field of machine learning. It was created by "re-mixing" the samples from NIST's original datasets. The creators felt that since NIST's training dataset was taken from American Census Bureau employees, while the testing dataset was taken from American high school students, it was not well-suited for machine learning experiments. Furthermore, the black and white images from NIST were normalized to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels.

A convolutional neural network (CNN) is a regularized type of feed-forward neural network that learns features by itself via filter (kernel) optimization. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by using regularized weights over fewer connections. For example, each neuron in a fully-connected layer would require 10,000 weights to process an image of 100 × 100 pixels. With cascaded convolution kernels, however, only 25 weights per kernel are required to process 5 × 5 tiles. Higher-layer features are extracted from wider context windows than lower-layer features.
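The parameter-count comparison above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes a single-channel image and ignores bias terms; the function names are illustrative only.

```python
def dense_weights_per_neuron(height: int, width: int, channels: int = 1) -> int:
    """Weights one fully-connected neuron needs to see every input pixel."""
    return height * width * channels


def conv_kernel_weights(kernel_h: int, kernel_w: int, in_channels: int = 1) -> int:
    """Weights in one convolution kernel, shared across all image positions."""
    return kernel_h * kernel_w * in_channels


fc = dense_weights_per_neuron(100, 100)
conv = conv_kernel_weights(5, 5)
print(fc, conv)  # 10000 25
```

The kernel's 25 weights are reused at every tile position, which is where the saving over the fully-connected case comes from.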

The International Association for Pattern Recognition (IAPR), founded in 1978 by Purdue University computer scientist King-Sun Fu, is an international association of non-profit, scientific, or professional organizations concerned with pattern recognition, computer vision, and image processing in a broad sense. Normally, only one organization is admitted from any one country, and individuals interested in taking part in IAPR's activities may do so by joining their national organization.

Emotion recognition is the process of identifying human emotion. People vary widely in their accuracy at recognizing the emotions of others. Use of technology to help people with emotion recognition is a relatively nascent research area. Generally, the technology works best if it uses multiple modalities in context. To date, the most work has been conducted on automating the recognition of facial expressions from video, spoken expressions from audio, written expressions from text, and physiology as measured by wearables.

Data augmentation is a statistical technique that allows maximum likelihood estimation from incomplete data. It has important applications in Bayesian analysis, and it is widely used in machine learning to reduce overfitting by training models on several slightly modified copies of existing data.
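As a rough illustration of the machine-learning use, the sketch below produces slightly modified copies of a tiny image by shifting it one pixel. The list-of-lists image format and the choice of shifts as the modification are assumptions for this example; real pipelines typically combine several kinds of transformation.

```python
from typing import List

Image = List[List[int]]  # rows of pixel intensities, an assumed toy format


def shift_right(image: Image, fill: int = 0) -> Image:
    """Shift every row one pixel to the right, padding the left edge."""
    return [[fill] + row[:-1] for row in image]


def shift_down(image: Image, fill: int = 0) -> Image:
    """Shift the image one pixel down, padding the top edge."""
    width = len(image[0])
    return [[fill] * width] + [row[:] for row in image[:-1]]


def augment(image: Image) -> List[Image]:
    """Return the original image plus two slightly modified copies."""
    return [image, shift_right(image), shift_down(image)]


image = [
    [0, 1, 0],
    [0, 1, 1],
    [0, 1, 0],
]
variants = augment(image)
print(len(variants))  # 3
```

Each variant keeps the same label as the original, so a model trained on all of them sees more positional variety without any new data being collected.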

Connectionist temporal classification (CTC) is a type of neural network output and associated scoring function, for training recurrent neural networks (RNNs) such as LSTM networks to tackle sequence problems where the timing is variable. It can be used for tasks like on-line handwriting recognition or recognizing phonemes in speech audio. CTC refers to the outputs and scoring, and is independent of the underlying neural network structure. It was introduced in 2006.
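How CTC outputs map to a label sequence of variable timing can be sketched with the standard collapsing rule: merge consecutive repeated symbols, then drop the blank symbol. The code below is a minimal greedy (best-path) decoder; the use of `"-"` as the blank and the toy alphabet are assumptions for illustration, and the CTC training loss itself is not shown.

```python
from typing import List, Sequence


def ctc_collapse(path: Sequence[str], blank: str = "-") -> str:
    """Merge consecutive repeated symbols, then remove blanks."""
    out: List[str] = []
    prev = None
    for sym in path:
        if sym != prev and sym != blank:
            out.append(sym)
        prev = sym
    return "".join(out)


def greedy_decode(frame_probs: List[List[float]], alphabet: List[str],
                  blank: str = "-") -> str:
    """Pick the most probable symbol in each frame, then collapse the path."""
    path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return ctc_collapse(path, blank)


print(ctc_collapse("hh-e-ll-lo--"))  # hello

alphabet = ["-", "a", "b"]           # index 0 is the blank
frame_probs = [
    [0.1, 0.8, 0.1],    # "a"
    [0.1, 0.7, 0.2],    # "a" (repeat, merged)
    [0.9, 0.05, 0.05],  # blank
    [0.2, 0.1, 0.7],    # "b"
]
decoded = greedy_decode(frame_probs, alphabet)
print(decoded)  # ab
```

The blank is what allows genuinely doubled letters to survive: in `"ll-l"` the blank separates two runs of `l`, so the output keeps both.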

Scene text is text that appears in an image captured by a camera in an outdoor environment.