Related Research Articles

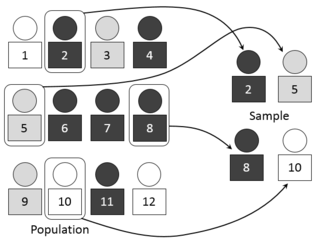

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset or a statistical sample of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians attempt to collect samples that are representative of the population. Sampling has lower costs and faster data collection compared to recording data from the entire population, and thus, it can provide insights in cases where it is infeasible to measure an entire population.

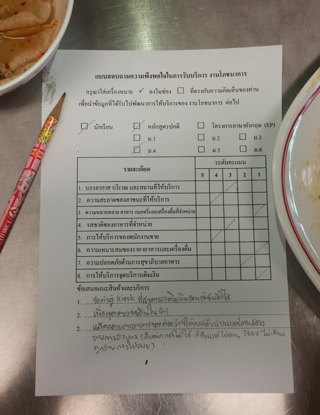

Questionnaire construction refers to the design of a questionnaire to gather statistically useful information about a given topic. When properly constructed and responsibly administered, questionnaires can provide valuable data about any given subject.

Survey methodology is "the study of survey methods". As a field of applied statistics concentrating on human-research surveys, survey methodology studies the sampling of individual units from a population and associated techniques of survey data collection, such as questionnaire construction and methods for improving the number and accuracy of responses to surveys. Survey methodology targets instruments or procedures that ask one or more questions that may or may not be answered.

An opinion poll, often simply referred to as a survey or a poll, is a human research survey of public opinion from a particular sample. Opinion polls are usually designed to represent the opinions of a population by asking a series of questions and then extrapolating generalities in ratio or within confidence intervals. A person who conducts polls is referred to as a pollster.

A push poll is an interactive marketing technique, most commonly employed during political campaigning, in which a person or organization attempts to manipulate or alter prospective voters' views under the guise of conducting an opinion poll. Large numbers of voters are contacted with little effort made to collect and analyze their response data. Instead, the push poll is a form of telemarketing-based propaganda and rumor-mongering masquerading as an opinion poll. Push polls may rely on innuendo, or information gleaned from opposition research on the political opponent of the interests behind the poll.

A questionnaire is a research instrument that consists of a set of questions for the purpose of gathering information from respondents through a survey or statistical study. A research questionnaire is typically a mix of close-ended questions and open-ended questions. Open-ended, long-form questions offer the respondent the ability to elaborate on their thoughts. The research questionnaire was developed by the Statistical Society of London in 1838.

An independent voter, often also called an unaffiliated voter or non-affiliated voter in the United States, is a voter who does not align themselves with a political party. An independent is variously defined as a voter who votes for candidates on issues rather than on the basis of a political ideology or partisanship; a voter who does not have long-standing loyalty to, or identification with, a political party; a voter who does not usually vote for the same political party from election to election; or a voter who self-describes as an independent.

In survey research, response rate, also known as completion rate or return rate, is the number of people who answered the survey divided by the number of people in the sample. It is usually expressed in the form of a percentage. The term is also used in direct marketing to refer to the number of people who responded to an offer.
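The definition above reduces to a single division. The following is a minimal sketch in Python, assuming the simple definition given here (respondents divided by sample size, expressed as a percentage); the function name `response_rate` and the figures in the usage lines are hypothetical and chosen only for illustration.

```python
def response_rate(respondents: int, sample_size: int) -> float:
    """Return the response rate as a percentage of the sample.

    respondents: number of people who answered the survey.
    sample_size: number of people in the sample.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= respondents <= sample_size:
        raise ValueError("respondents must be between 0 and sample_size")
    return 100.0 * respondents / sample_size


# Hypothetical figures: 1,000 people sampled, 250 answered the survey.
rate = response_rate(250, 1000)
print(f"{rate:.1f}%")
```

Survey organisations often use more detailed definitions that treat partial responses and ineligible sample members separately; this sketch does not attempt to model those cases.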

SurveyUSA is a polling firm in the United States. It conducts market research for corporations and interest groups, but is best known for conducting opinion polls for various political offices and questions. SurveyUSA conducts these opinion polls under contract to over 50 television stations. SurveyUSA differs from other telephone polling firms in two ways. First, SurveyUSA does not use live call center employees, but an automated system. Questions recorded by a professional announcer are played to the respondent, who is invited to press a button on their touch-tone telephone or record a message at a prompt designating their selection. Second, SurveyUSA uses more concise language, especially for ballot propositions, than competitors. This can lead to diverging results, such as for California Proposition 76, where one version of the SurveyUSA question, with a one-sentence description, polled significantly differently from another version with a three-sentence description.

Gallup, Inc. is an American multinational analytics and advisory company based in Washington, D.C. Founded by George Gallup in 1935, the company became known for its public opinion polls conducted worldwide. Gallup provides analytics and management consulting to organizations globally. In addition, the company offers educational consulting, the CliftonStrengths assessment and associated products, and business and management books published by its Gallup Press unit.

The Metallic Metals Act was a fictional piece of legislation included in a 1947 American opinion survey conducted by Sam Gill and published in the March 14, 1947, issue of Tide magazine. When given four possible replies, 70% of respondents claimed to have an opinion on the act. It has become a classic example of the risks of meaningless responses to closed-ended questions and prompted the study of the pseudo-opinion phenomenon.

Automated telephone surveys are a systematic collection of data from a demographic, carried out by automatically calling a preset list of respondents with the aim of collecting information and gaining feedback via the telephone and the internet. Automated surveys are used by call centres for customer research, customer relationship management, and performance management. They are also used for political polling, market research, and job satisfaction surveying.

Acquiescence bias, also known as agreement bias, is a category of response bias common to survey research in which respondents have a tendency to select a positive response option or indicate a positive connotation disproportionately more frequently. Respondents do so without considering the content of the question or their 'true' preference. Acquiescence is sometimes referred to as "yea-saying" and is the tendency of a respondent to agree with a statement when in doubt. Questions affected by acquiescence bias take the following format: a stimulus in the form of a statement is presented, followed by 'agree/disagree,' 'yes/no' or 'true/false' response options. For example, a respondent might be presented with the statement "gardening makes me feel happy," and would then be expected to select either 'agree' or 'disagree.' Such question formats are favoured by both survey designers and respondents because they are straightforward to produce and respond to. The bias is particularly prevalent in the case of surveys or questionnaires that employ truisms as the stimuli, such as: "It is better to give than to receive" or "Never a lender nor a borrower be". Acquiescence bias can introduce systematic errors that affect the validity of research by confounding attitudes and behaviours with the general tendency to agree, which can result in misguided inference. Research suggests that the proportion of respondents who carry out this behaviour is between 10% and 20%.

The Bradley effect is a theory concerning observed discrepancies between voter opinion polls and election outcomes in some United States government elections where a white candidate and a non-white candidate run against each other. The theory proposes that some white voters who intend to vote for the white candidate would nonetheless tell pollsters that they are undecided or likely to vote for the non-white candidate. It was named after Los Angeles mayor Tom Bradley, an African-American who lost the 1982 California gubernatorial election to California attorney general George Deukmejian, a white person, despite Bradley being ahead in voter polls going into the elections.

Participation bias or non-response bias is a phenomenon in which the results of elections, studies, polls, etc. become non-representative because the participants disproportionately possess certain traits which affect the outcome. These traits mean the sample is systematically different from the target population, potentially resulting in biased estimates.

In the run-up to the United Kingdom general election on 7 May 2015, various organisations carried out opinion polling to gauge voting intention. Results of such polls are displayed in this article. Most of the polling companies listed are members of the British Polling Council (BPC) and abide by its disclosure rules.

With the application of probability sampling in the 1930s, surveys became a standard tool for empirical research in social sciences, marketing, and official statistics. The methods involved in survey data collection are any of a number of ways in which data can be collected for a statistical survey. These are methods that are used to collect information from a sample of individuals in a systematic way. First there was the change from traditional paper-and-pencil interviewing (PAPI) to computer-assisted interviewing (CAI). Now, face-to-face surveys (CAPI), telephone surveys (CATI), and mail surveys are increasingly replaced by web surveys. In addition, remote interviewers could possibly keep the respondent engaged while reducing cost as compared to in-person interviewers.

Jon Alexander Krosnick is a professor of Political Science, Communication, and Psychology, and director of the Political Psychology Research Group (PPRG) at Stanford University. Additionally, he is the Frederic O. Glover Professor in Humanities and Social Sciences and an affiliate of the Woods Institute for the Environment. Krosnick has served as a consultant for government agencies, universities, and businesses, has testified as an expert in court proceedings, and has been an on-air television commentator on election night.

The Suffolk University Political Research Center (SUPRC) is an opinion polling center at Suffolk University in Boston, Massachusetts.

A feeling thermometer, also known as a thermometer scale, is a type of visual analog scale that allows respondents to rank their views of a given subject on a scale from "cold" to "hot", analogous to the temperature scale of a real thermometer. It is often used in survey and political science research to measure how positively individuals feel about a given group, individual, issue, or organisation, as well as in quality of life research to measure individuals' subjective health status. It typically uses a rating scale with options ranging from a minimum of 0 to a maximum of 100. Questions using the feeling thermometer have been included in every year of the American National Election Studies since 1968.

References

Notes

- Barbara A. Anderson; Brian D. Silver & Paul R. Abramson (Spring 1988). "The Effects of Race of the Interviewer on Measures of Electoral Participation by Blacks in SRC National Election Studies". Public Opinion Quarterly. 52 (1): 53–83. doi:10.1086/269082.

- Groves, Robert M.; Lou J. Magilavy (1986). "Measuring and Explaining Interviewer Effects in Centralised Telephone Surveys". Public Opinion Quarterly. 50 (2): 251. doi:10.1086/268979. ISSN 0033-362X.

Bibliography

- Anderson, Barbara A., Brian D. Silver, and Paul R. Abramson. "The Effects of Race of the Interviewer on Measures of Electoral Participation by Blacks in SRC National Election Studies," Public Opinion Quarterly 52 (Spring 1988): 53-83.

- Anderson, Barbara A., Brian D. Silver, and Paul R. Abramson, "The Effects of the Race of Interviewer on Race-Related Attitudes of Black Respondents in SRC/CPS National Election Studies," Public Opinion Quarterly 52 (August 1988): 289-324.

- Davis, Darren W., and Brian D. Silver. "Stereotype Threat and Race of Interviewer Effects in a Survey on Political Knowledge," American Journal of Political Science, 47 (December 2002): 33-45.

- Davis, R. E.; et al. (Feb 2010). "Interviewer effects in public health surveys". Health Education Research. 25 (1): 14–26. doi:10.1093/her/cyp046. PMC 2805402. PMID 19762354.

- Stokes, Lynn; Yeh, Ming-Yih (October 2001). "Chapter 22: Searching for Causes of Interviewer Effects in Telephone Surveys". In Groves, Robert M.; et al. (eds.). Telephone Survey Methodology. Wiley. pp. 357–110. ISBN 978-0-471-20956-0.

- Sudman, Seymour, and Norman Bradburn. Response Effects in Surveys. National Opinion Research Center: Chicago, 1974.