Related Research Articles

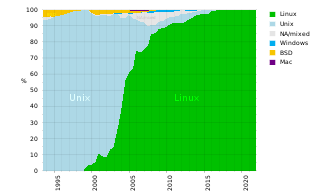

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

The Cray-1 was a supercomputer designed, manufactured and marketed by Cray Research. Announced in 1975, the first Cray-1 system was installed at Los Alamos National Laboratory in 1976. Eventually, eighty Cray-1s were sold, making it one of the most successful supercomputers in history. It is perhaps best known for its unique shape, a relatively small C-shaped cabinet with a ring of benches around the outside covering the power supplies and the cooling system.

The National Center for Supercomputing Applications (NCSA) is a state-federal partnership to develop and deploy national-scale computer infrastructure that advances research, science and engineering based in the United States. NCSA operates as a unit of the University of Illinois Urbana-Champaign, and provides high-performance computing resources to researchers across the country. Support for NCSA comes from the National Science Foundation, the state of Illinois, the University of Illinois, business and industry partners, and other federal agencies.

The National Science Foundation Network (NSFNET) was a program of coordinated, evolving projects sponsored by the National Science Foundation (NSF) from 1985 to 1995 to promote advanced research and education networking in the United States. The program created several nationwide backbone computer networks in support of these initiatives. Initially created to link researchers to the NSF-funded supercomputing centers, through further public funding and private industry partnerships it developed into a major part of the Internet backbone.

ETA Systems was a supercomputer company spun off from Control Data Corporation (CDC) in the early 1980s in order to regain a footing in the supercomputer business. They successfully delivered the ETA-10, but lost money continually while doing so. CDC management eventually gave up and folded the company.

The ETA10 is a vector supercomputer designed, manufactured, and marketed by ETA Systems, a spin-off division of Control Data Corporation (CDC). The ETA10 was an evolution of the CDC Cyber 205, which can trace its origins back to the CDC STAR-100, one of the first vector supercomputers to be developed.

The NASA Advanced Supercomputing (NAS) Division is located at NASA Ames Research Center, Moffett Field in the heart of Silicon Valley in Mountain View, California. It has been the major supercomputing and modeling and simulation resource for NASA missions in aerodynamics, space exploration, studies in weather patterns and ocean currents, and space shuttle and aircraft design and development for almost forty years.

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011.

EPCC, formerly the Edinburgh Parallel Computing Centre, is a supercomputing centre based at the University of Edinburgh. Since its foundation in 1990, its stated mission has been to accelerate the effective exploitation of novel computing throughout industry, academia and commerce.

The Pittsburgh Supercomputing Center (PSC) is a high performance computing and networking center founded in 1986 and one of the original five NSF Supercomputing Centers. PSC is a joint effort of Carnegie Mellon University and the University of Pittsburgh in Pittsburgh, Pennsylvania, United States.

The Texas Advanced Computing Center (TACC) at the University of Texas at Austin, United States, is an advanced computing research center that provides comprehensive advanced computing resources and support services to researchers in Texas and across the U.S. The mission of TACC is to enable discoveries that advance science and society through the application of advanced computing technologies. Specializing in high performance computing, scientific visualization, data analysis and storage systems, software, research and development, and portal interfaces, TACC deploys and operates advanced computational infrastructure to enable the research activities of faculty, staff, and students of UT Austin. TACC also provides consulting, technical documentation, and training to support researchers who use these resources. TACC staff members conduct research and development in applications and algorithms, computing systems design/architecture, and programming tools and environments.

The National Energy Research Scientific Computing Center (NERSC) is a high-performance computing (supercomputer) National User Facility operated by Lawrence Berkeley National Laboratory for the United States Department of Energy Office of Science. As the mission computing center for the Office of Science, NERSC houses high performance computing and data systems used by 9,000 scientists at national laboratories and universities around the country. Research at NERSC is focused on fundamental and applied research in energy efficiency, storage, and generation; Earth systems science; and understanding of fundamental forces of nature and the universe. The largest research areas are in High Energy Physics, Materials Science, Chemical Sciences, Climate and Environmental Sciences, Nuclear Physics, and Fusion Energy research. NERSC's newest and largest supercomputer is Perlmutter, which debuted in 2021 ranked 5th on the TOP500 list of the world's fastest supercomputers.

The National Center for Computational Sciences (NCCS) is a United States Department of Energy (DOE) Leadership Computing Facility that houses the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility charged with helping researchers solve challenging scientific problems of global interest with a combination of leading high-performance computing (HPC) resources and international expertise in scientific computing.

Several centers for supercomputing exist across Europe, and distributed access to them is coordinated by European initiatives to facilitate high-performance computing. One such initiative, the HPC Europa project, fits within the Distributed European Infrastructure for Supercomputing Applications (DEISA), which was formed in 2002 as a consortium of eleven supercomputing centers from seven European countries. Operating within the CORDIS framework, HPC Europa aims to provide access to supercomputers across Europe.

Titan or OLCF-3 was a supercomputer built by Cray at Oak Ridge National Laboratory for use in a variety of science projects. Titan was an upgrade of Jaguar, a previous supercomputer at Oak Ridge, that used graphics processing units (GPUs) in addition to conventional central processing units (CPUs). Titan was the first such hybrid to perform over 10 petaFLOPS. The upgrade began in October 2011, stability testing commenced in October 2012, and the system became available to researchers in early 2013. The initial cost of the upgrade was US$60 million, funded primarily by the United States Department of Energy.

A supercomputer operating system is an operating system intended for supercomputers. Since the end of the 20th century, supercomputer operating systems have undergone major transformations, as fundamental changes have occurred in supercomputer architecture. While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been moving away from in-house operating systems and toward some form of Linux, which ran on every supercomputer on the TOP500 list in November 2017. In 2021, the top 10 computers ran, for instance, Red Hat Enterprise Linux (RHEL), a variant of it, or another Linux distribution such as Ubuntu.

Appro was a developer of supercomputing systems for High Performance Computing (HPC) markets, focused on medium- to large-scale deployments. Appro was based in Milpitas, California, with a computing center in Houston, Texas, and a manufacturing and support subsidiary in South Korea and Japan.

Dennis M. Jennings is an Irish physicist, academic, Internet pioneer, and venture capitalist. In 1985–1986 he was responsible for three critical decisions that shaped the subsequent development of NSFNET, the network that became the Internet.

The Cray XC40 is a massively parallel multiprocessor supercomputer manufactured by Cray. It consists of Intel Haswell Xeon processors, with optional Nvidia Tesla or Intel Xeon Phi accelerators, connected together by Cray's proprietary "Aries" interconnect and housed in air-cooled or liquid-cooled cabinets. The XC series supercomputers are available with the Cray DataWarp applications I/O accelerator technology.

The Cray XC50 is a massively parallel multiprocessor supercomputer manufactured by Cray. The machine can support Intel Xeon processors, as well as Cavium ThunderX2 processors, Xeon Phi processors and NVIDIA Tesla P100 GPUs. The processors are connected by Cray's proprietary "Aries" interconnect, in a dragonfly network topology. The XC50 is an evolution of the XC40, with the main difference being the support of Tesla P100 processors and the use of Cray software release CLE 6 or 7.

References

- High Performance Computing and Networking for Science-Background Paper. Washington, DC: U.S. Congress, Office of Technology Assessment. September 1989. p. 11. ISBN 9781428922259. Retrieved 25 July 2015.

- Kent, Allen; Williams, James (1994-02-08). Encyclopedia of Computer Science and Technology: Volume 30 - Supplement 15: Algebraic Methodology and Software Technology to System Level Modelling. CRC Press. p. 321. ISBN 9780824722838. Retrieved 26 July 2015.

- Anderson, Christopher. "NSF Supercomputer Program Looks Beyond Princeton Recall". The Scientist. Retrieved 24 July 2015.