Related Research Articles

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

Speex is a free software audio compression codec tuned specifically for the reproduction of human speech; it may be used in voice over IP applications and podcasts. It is based on the code-excited linear prediction (CELP) speech coding algorithm. Its creators claim that Speex is free of any patent restrictions, and it is licensed under the revised (3-clause) BSD license. It may be used with the Ogg or FLV container formats, or transmitted directly over UDP/RTP.

Digital music technology encompasses the use of digital instruments, computers, electronic effects units, software, or digital audio equipment by a performer, composer, sound engineer, DJ, or record producer to produce, perform or record music. The term refers to electronic devices, instruments, computer hardware, and software used in performance, playback, recording, composition, mixing, analysis, and editing of music.

The Festival Speech Synthesis System is a general multi-lingual speech synthesis system originally developed by Alan W. Black, Paul Taylor and Richard Caley at the Centre for Speech Technology Research (CSTR) at the University of Edinburgh. Substantial contributions have also been provided by Carnegie Mellon University and other sites. It is distributed under a free software license similar to the BSD License.

A digital audio workstation (DAW) is an electronic device or application software used for recording, editing and producing audio files. DAWs come in a wide variety of configurations from a single software program on a laptop, to an integrated stand-alone unit, all the way to a highly complex configuration of numerous components controlled by a central computer. Regardless of configuration, modern DAWs have a central interface that allows the user to alter and mix multiple recordings and tracks into a final produced piece.

PlainTalk is the collective name for several speech synthesis (MacinTalk) and speech recognition technologies developed by Apple Inc. In 1990, Apple invested heavily in speech recognition technology, hiring many researchers in the field. The result was "PlainTalk", released with the AV models in the Macintosh Quadra series from 1993. It was made a standard system component in System 7.1.2, and has since been shipped on all PowerPC and some 68k Macintoshes.

Panorama Tools (also known as PanoTools) is a suite of programs and libraries for image stitching, i.e., re-projecting and blending multiple source images into immersive panoramas of many types. It was originally written by German physics and mathematics professor Helmut Dersch. An updated version of the Panorama Tools library serves as the underlying core engine for many software panorama graphical user interface front ends.

Bioconductor is a free, open source and open development software project for the analysis and comprehension of genomic data generated by wet lab experiments in molecular biology.

Residual-excited linear prediction (RELP) is an obsolete speech coding algorithm. It was originally proposed in the 1970s and can be seen as an ancestor of code-excited linear prediction (CELP). Unlike CELP however, RELP directly transmits the residual signal. To achieve lower rates, that residual signal is usually down-sampled. The algorithm is hardly used anymore in audio transmission.
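The idea of transmitting the prediction residual can be illustrated with a minimal sketch. It is not a real RELP coder: the test signal, the predictor order of 4, and all function names are assumptions made for illustration, and a practical coder would also low-pass filter and quantize the residual before sending it. The sketch fits a linear predictor to a frame, computes the residual, shows that the full-rate residual drives the synthesis filter back to the original samples, and shows how down-sampling reduces the number of values to transmit.

```python
import math
import random

def autocorr(x, order):
    """Autocorrelation of x at lags 0..order."""
    return [sum(x[n] * x[n - k] for n in range(k, len(x))) for k in range(order + 1)]

def levinson_durbin(r, order):
    """Predictor coefficients a[0..order-1] with x[n] ~ sum(a[k] * x[n-1-k])."""
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k
    return a[1:]

def residual(x, coeffs):
    """Analysis filter: what is left after subtracting the prediction."""
    out = []
    for n in range(len(x)):
        pred = sum(coeffs[k] * x[n - 1 - k] for k in range(min(len(coeffs), n)))
        out.append(x[n] - pred)
    return out

def synthesize(res, coeffs):
    """Synthesis filter: rebuild the signal by exciting the predictor with res."""
    y = []
    for n in range(len(res)):
        pred = sum(coeffs[k] * y[n - 1 - k] for k in range(min(len(coeffs), n)))
        y.append(res[n] + pred)
    return y

def downsample(res, factor):
    """Keep every factor-th residual sample (no anti-alias filter in this sketch)."""
    return res[::factor]

# Hypothetical test frame: two sinusoids plus a little seeded noise.
rng = random.Random(0)
x = [math.sin(0.3 * n) + 0.5 * math.sin(1.1 * n) + 0.1 * rng.uniform(-1, 1)
     for n in range(200)]

coeffs = levinson_durbin(autocorr(x, 4), 4)
res = residual(x, coeffs)
y = synthesize(res, coeffs)       # full-rate residual: reconstruction is exact
res_half = downsample(res, 2)     # half as many values to transmit, but lossy

print(len(res), len(res_half))
print(max(abs(a - b) for a, b in zip(x, y)))
```

The residual carries less energy than the input frame, which is what makes it cheaper to code; once it is down-sampled, the decoder no longer has the exact excitation and the reconstruction becomes approximate.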

CMU Sphinx, also called Sphinx for short, is the general term for a group of speech recognition systems developed at Carnegie Mellon University. These include a series of speech recognizers and an acoustic model trainer (SphinxTrain).

Chinese speech synthesis is the application of speech synthesis to the Chinese language. It poses additional difficulties because of the Chinese characters, the complex prosody that is essential to conveying the meaning of words, and, at times, the difficulty of getting native speakers to agree on the correct pronunciation of certain phonemes.

The CMU Pronouncing Dictionary is an open-source pronouncing dictionary originally created by the Speech Group at Carnegie Mellon University (CMU) for use in speech recognition research.

eSpeak is a free and open-source, cross-platform, compact, software speech synthesizer. It uses a formant synthesis method, providing many languages in a relatively small file size. eSpeakNG is a continuation of the original developer's project with more feedback from native speakers.

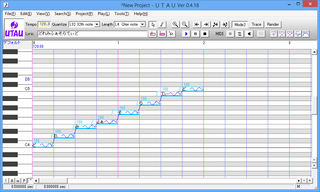

UTAU is a Japanese singing synthesizer application created by Ameya/Ayame (飴屋/菖蒲). The program is similar to the VOCALOID software, but differs in that it is distributed as shareware rather than under third-party licensing.

MeVisLab is a cross-platform application framework for medical image processing and scientific visualization. It includes advanced algorithms for image registration, segmentation, and quantitative morphological and functional image analysis. An IDE for graphical programming and rapid user interface prototyping is available.

Loquendo is an Italian multinational computer software technology corporation, headquartered in Torino, Italy, that provides speech recognition, speech synthesis, speaker verification and identification applications. Loquendo, which was founded in 2001 under the Telecom Italia Lab, also had offices in the United Kingdom, Spain, Germany, France, and the United States.

Cantor was a vocal singing synthesizer software released four months after the original release of Vocaloid by the company VirSyn, and was based on the same idea of synthesizing the human voice. VirSyn released English and German versions of this software. Cantor 2 boasted a variety of voices from near-realistic sounding ones to highly expressive vocals and robotic voices.

Codec 2 is a low-bitrate speech audio codec that is patent-free and open source. Codec 2 compresses speech using sinusoidal coding, a method specialized for human speech. Bit rates from 3200 down to 450 bit/s have been successfully implemented. Codec 2 was designed to be used for amateur radio and other high-compression voice applications.
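To put the quoted bit rates in perspective, the short calculation below converts them into the payload size of one minute of coded speech. The helper name `bytes_per_minute` is hypothetical, and the figure ignores any framing or container overhead.

```python
def bytes_per_minute(bit_rate_bps):
    """Payload bytes needed for 60 seconds of audio at the given bit rate."""
    return bit_rate_bps * 60 / 8

# The two extremes quoted for Codec 2.
for rate in (3200, 450):
    print(f"{rate} bit/s -> {bytes_per_minute(rate):.0f} bytes per minute")
```

At 3200 bit/s a minute of speech takes 24,000 bytes, while at 450 bit/s it takes 3,375 bytes.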

15.ai is a non-commercial freeware artificial intelligence web application that generates natural emotive high-fidelity text-to-speech voices from an assortment of fictional characters from a variety of media sources. Developed by a pseudonymous MIT researcher under the name 15, the project uses a combination of audio synthesis algorithms, speech synthesis deep neural networks, and sentiment analysis models to generate and serve emotive character voices faster than real-time, particularly those with a very small amount of trainable data.

References

- List of MBROLA voices

- Mbrola-64 crashes immediately with a SEGFAULT

- Dutoit, T; Leich, H (Dec 1993). "MBR-PSOLA: Text-To-Speech synthesis based on an MBE re-synthesis of the segments database". Speech Communication. 13 (3–4): 435–440. doi:10.1016/0167-6393(93)90042-J.