2D computer graphics is the computer-based generation of digital images—mostly from two-dimensional models and by techniques specific to them. It may refer to the branch of computer science that comprises such techniques or to the models themselves.

In linear algebra, the trace of a square matrix A, denoted tr(A), is defined to be the sum of elements on the main diagonal of A. The trace is only defined for a square matrix.

In linear algebra, an orthogonal matrix, or orthonormal matrix, is a real square matrix whose columns and rows are orthonormal vectors.

In mathematics, particularly linear algebra and functional analysis, a spectral theorem is a result about when a linear operator or matrix can be diagonalized. This is extremely useful because computations involving a diagonalizable matrix can often be reduced to much simpler computations involving the corresponding diagonal matrix. The concept of diagonalization is relatively straightforward for operators on finite-dimensional vector spaces but requires some modification for operators on infinite-dimensional spaces. In general, the spectral theorem identifies a class of linear operators that can be modeled by multiplication operators, which are as simple as one can hope to find. In more abstract language, the spectral theorem is a statement about commutative C*-algebras. See also spectral theory for a historical perspective.

In mechanics and geometry, the 3D rotation group, often denoted SO(3), is the group of all rotations about the origin of three-dimensional Euclidean space under the operation of composition.

In mathematics, particularly in linear algebra, a skew-symmetricmatrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition

In physics and mathematics, the Lorentz group is the group of all Lorentz transformations of Minkowski spacetime, the classical and quantum setting for all (non-gravitational) physical phenomena. The Lorentz group is named for the Dutch physicist Hendrik Lorentz.

In linear algebra, a square matrix is called diagonalizable or non-defective if it is similar to a diagonal matrix. That is, if there exists an invertible matrix and a diagonal matrix such that . This is equivalent to . This property exists for any linear map: for a finite-dimensional vector space , a linear map is called diagonalizable if there exists an ordered basis of consisting of eigenvectors of . These definitions are equivalent: if has a matrix representation as above, then the column vectors of form a basis consisting of eigenvectors of , and the diagonal entries of are the corresponding eigenvalues of ; with respect to this eigenvector basis, is represented by .

In linear algebra, a Jordan normal form, also known as a Jordan canonical form (JCF), is an upper triangular matrix of a particular form called a Jordan matrix representing a linear operator on a finite-dimensional vector space with respect to some basis. Such a matrix has each non-zero off-diagonal entry equal to 1, immediately above the main diagonal, and with identical diagonal entries to the left and below them.

In mathematics, a triangular matrix is a special kind of square matrix. A square matrix is called lower triangular if all the entries above the main diagonal are zero. Similarly, a square matrix is called upper triangular if all the entries below the main diagonal are zero.

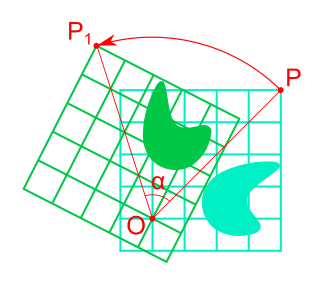

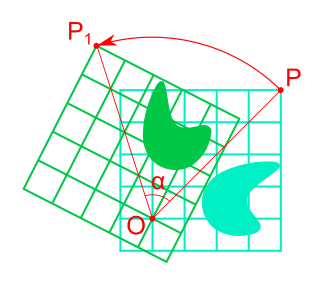

Rotation in mathematics is a concept originating in geometry. Any rotation is a motion of a certain space that preserves at least one point. It can describe, for example, the motion of a rigid body around a fixed point. Rotation can have a sign (as in the sign of an angle): a clockwise rotation is a negative magnitude so a counterclockwise turn has a positive magnitude. A rotation is different from other types of motions: translations, which have no fixed points, and (hyperplane) reflections, each of them having an entire (n − 1)-dimensional flat of fixed points in a n-dimensional space.

In linear algebra, a Hessenberg matrix is a special kind of square matrix, one that is "almost" triangular. To be exact, an upper Hessenberg matrix has zero entries below the first subdiagonal, and a lower Hessenberg matrix has zero entries above the first superdiagonal. They are named after Karl Hessenberg.

In linear algebra, linear transformations can be represented by matrices. If is a linear transformation mapping to and is a column vector with entries, then

In linear algebra, a rotation matrix is a transformation matrix that is used to perform a rotation in Euclidean space. For example, using the convention below, the matrix

In geometry, Euler's rotation theorem states that, in three-dimensional space, any displacement of a rigid body such that a point on the rigid body remains fixed, is equivalent to a single rotation about some axis that runs through the fixed point. It also means that the composition of two rotations is also a rotation. Therefore the set of rotations has a group structure, known as a rotation group.

In mathematics, the Cayley transform, named after Arthur Cayley, is any of a cluster of related things. As originally described by Cayley (1846), the Cayley transform is a mapping between skew-symmetric matrices and special orthogonal matrices. The transform is a homography used in real analysis, complex analysis, and quaternionic analysis. In the theory of Hilbert spaces, the Cayley transform is a mapping between linear operators.

In linear algebra, the Frobenius normal form or rational canonical form of a square matrix A with entries in a field F is a canonical form for matrices obtained by conjugation by invertible matrices over F. The form reflects a minimal decomposition of the vector space into subspaces that are cyclic for A. Since only one normal form can be reached from a given matrix, a matrix B is similar to A if and only if it has the same rational canonical form as A. Since this form can be found without any operations that might change when extending the field F, notably without factoring polynomials, this shows that whether two matrices are similar does not change upon field extensions. The form is named after German mathematician Ferdinand Georg Frobenius.

In linear algebra, it is often important to know which vectors have their directions unchanged by a given linear transformation. An eigenvector or characteristic vector is such a vector. Thus an eigenvector of a linear transformation is scaled by a constant factor when the linear transformation is applied to it: . The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor .

In Hamiltonian mechanics, the linear canonical transformation (LCT) is a family of integral transforms that generalizes many classical transforms. It has 4 parameters and 1 constraint, so it is a 3-dimensional family, and can be visualized as the action of the special linear group SL2(R) on the time–frequency plane (domain). As this defines the original function up to a sign, this translates into an action of its double cover on the original function space.

Symmetries in quantum mechanics describe features of spacetime and particles which are unchanged under some transformation, in the context of quantum mechanics, relativistic quantum mechanics and quantum field theory, and with applications in the mathematical formulation of the standard model and condensed matter physics. In general, symmetry in physics, invariance, and conservation laws, are fundamentally important constraints for formulating physical theories and models. In practice, they are powerful methods for solving problems and predicting what can happen. While conservation laws do not always give the answer to the problem directly, they form the correct constraints and the first steps to solving a multitude of problems. In application, understanding symmetries can also provide insights on the eigenstates that can be expected. For example, the existence of degenerate states can be inferred by the presence of non commuting symmetry operators or that the non degenerate states are also eigenvectors of symmetry operators.