Related Research Articles

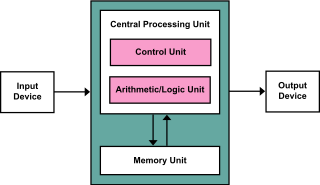

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs).

Computer data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers.

In computing, memory is a device or system that is used to store information for immediate use in a computer or related computer hardware and digital electronic devices. The term memory is often synonymous with the term primary storage or main memory. An archaic synonym for memory is store.

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs.

Kendall Square Research (KSR) was a supercomputer company headquartered originally in Kendall Square in Cambridge, Massachusetts in 1986, near Massachusetts Institute of Technology (MIT). It was co-founded by Steven Frank and Henry Burkhardt III, who had formerly helped found Data General and Encore Computer and was one of the original team that designed the PDP-8. KSR produced two models of supercomputer, the KSR1 and KSR2. It went bankrupt in 1994.

In computing, virtual memory, or virtual storage is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory".

The Harvard architecture is a computer architecture with separate storage and signal pathways for instructions and data. It contrasts with the von Neumann architecture, where program instructions and data share the same memory and pathways.

Static random-access memory is a type of random-access memory (RAM) that uses latching circuitry (flip-flop) to store each bit. SRAM is volatile memory; data is lost when power is removed.

Dynamic random-access memory is a type of random-access semiconductor memory that stores each bit of data in a memory cell, usually consisting of a tiny capacitor and a transistor, both typically based on metal-oxide-semiconductor (MOS) technology. While most DRAM memory cell designs use a capacitor and transistor, some only use two transistors. In the designs where a capacitor is used, the capacitor can either be charged or discharged; these two states are taken to represent the two values of a bit, conventionally called 0 and 1. The electric charge on the capacitors gradually leaks away; without intervention the data on the capacitor would soon be lost. To prevent this, DRAM requires an external memory refresh circuit which periodically rewrites the data in the capacitors, restoring them to their original charge. This refresh process is the defining characteristic of dynamic random-access memory, in contrast to static random-access memory (SRAM) which does not require data to be refreshed. Unlike flash memory, DRAM is volatile memory, since it loses its data quickly when power is removed. However, DRAM does exhibit limited data remanence.

A digital signal processor (DSP) is a specialized microprocessor chip, with its architecture optimized for the operational needs of digital signal processing. DSPs are fabricated on MOS integrated circuit chips. They are widely used in audio signal processing, telecommunications, digital image processing, radar, sonar and speech recognition systems, and in common consumer electronic devices such as mobile phones, disk drives and high-definition television (HDTV) products.

The von Neumann architecture — also known as the von Neumann model or Princeton architecture — is a computer architecture based on a 1945 description by John von Neumann, and by others, in the First Draft of a Report on the EDVAC. The document describes a design architecture for an electronic digital computer with these components:

In computing, a memory address is a reference to a specific memory location used at various levels by software and hardware. Memory addresses are fixed-length sequences of digits conventionally displayed and manipulated as unsigned integers. Such numerical semantic bases itself upon features of CPU, as well upon use of the memory like an array endorsed by various programming languages.

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels, with different instruction-specific and data-specific caches at level 1. The cache memory is almost always implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM not always used for all levels, or even any level, sometimes some latter or all levels implemented with eDRAM.

The Blackfin is a family of 16-/32-bit microprocessors developed, manufactured and marketed by Analog Devices. The processors have built-in, fixed-point digital signal processor (DSP) functionality supplied by 16-bit multiply–accumulates (MACs), accompanied on-chip by a microcontroller. It was designed for a unified low-power processor architecture that can run operating systems while simultaneously handling complex numeric tasks such as real-time H.264 video encoding.

Memory segmentation is an operating system memory management technique of division of a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory.

Scratchpad memory (SPM), also known as scratchpad, scratchpad RAM or local store in computer terminology, is a high-speed internal memory used for temporary storage of calculations, data, and other work in progress. In reference to a microprocessor, scratchpad refers to a special high-speed memory used to hold small items of data for rapid retrieval. It is similar to the usage and size of a scratchpad in life: a pad of paper for preliminary notes or sketches or writings, etc.

Count key data (CKD) is a direct-access storage device (DASD) data recording format introduced in 1964, by IBM with its IBM System/360 and still being emulated on IBM mainframes. It is a self-defining format with each data record represented by a Count Area that identifies the record and provides the number of bytes in an optional Key Area and an optional Data Area. This is in contrast to devices using fixed sector size or a separate format track.

The modified Harvard architecture is a variation of the Harvard computer architecture that, unlike the pure Harvard architecture, allows the contents of the instruction memory to be accessed as data. Most modern computers that are documented as Harvard architecture are, in fact, modified Harvard architecture.

This glossary of computer hardware terms is a list of definitions of terms and concepts related to computer hardware, i.e. the physical and structural components of computers, architectural issues, and peripheral devices.

Texas Memory Systems, Inc. (TMS) was an American corporation that designed and manufactured solid-state disks (SSDs) and digital signal processors (DSPs). TMS was founded in 1978 and that same year introduced their first solid-state drive, followed by their first digital signal processor. In 2000 they introduced the RamSan line of SSDs. Based in Houston, Texas, they supply these two product categories to large enterprise and government organizations.

References

- 1 2 "Memory Architectures: Harvard vs Princeton".

- ↑ Robert Oshana. DSP Software Development Techniques for Embedded and Real-Time Systems. 2006. "5 - DSP Architectures". p. 123. doi : 10.1016/B978-075067759-2/50007-7