Related Research Articles

The inner ear is the innermost part of the vertebrate ear. In vertebrates, the inner ear is mainly responsible for sound detection and balance. In mammals, it consists of the bony labyrinth, a hollow cavity in the temporal bone of the skull with a system of passages comprising two main functional parts: the cochlea, dedicated to hearing, and the vestibular system, dedicated to balance.

Pitch is a perceptual property that allows sounds to be ordered on a frequency-related scale, or more commonly, pitch is the quality that makes it possible to judge sounds as "higher" and "lower" in the sense associated with musical melodies. Pitch is a major auditory attribute of musical tones, along with duration, loudness, and timbre.

The cochlea is the part of the inner ear involved in hearing. It is a spiral-shaped cavity in the bony labyrinth, in humans making 2.75 turns around its axis, the modiolus. A core component of the cochlea is the organ of Corti, the sensory organ of hearing, which is distributed along the partition separating the fluid chambers in the coiled tapered tube of the cochlea.

The basilar membrane is a stiff structural element within the cochlea of the inner ear which separates two liquid-filled tubes that run along the coil of the cochlea, the scala media and the scala tympani. The basilar membrane moves up and down in response to incoming sound waves, which are converted to traveling waves on the basilar membrane.

Stimulus modality, also called sensory modality, is one aspect of a stimulus or what is perceived after a stimulus. For example, the temperature modality is registered after heat or cold stimulates a receptor. Some sensory modalities include light, sound, temperature, taste, pressure, and smell. The type and location of the sensory receptor activated by the stimulus play the primary role in coding the sensation. All sensory modalities work together to heighten stimuli sensation when necessary.

The auditory system is the sensory system for the sense of hearing. It includes both the sensory organs and the auditory parts of the sensory system.

Sensorineural hearing loss (SNHL) is a type of hearing loss in which the root cause lies in the inner ear, sensory organ, or the vestibulocochlear nerve. SNHL accounts for about 90% of reported hearing loss. SNHL is usually permanent and can be mild, moderate, severe, profound, or total. Various other descriptors can be used depending on the shape of the audiogram, such as high frequency, low frequency, U-shaped, notched, peaked, or flat.

In physiology, tonotopy is the spatial arrangement of where sounds of different frequency are processed in the brain. Tones close to each other in terms of frequency are represented in topologically neighbouring regions in the brain. Tonotopic maps are a particular case of topographic organization, similar to retinotopy in the visual system.

In audiology and psychoacoustics the concept of critical bands, introduced by Harvey Fletcher in 1933 and refined in 1940, describes the frequency bandwidth of the "auditory filter" created by the cochlea, the sense organ of hearing within the inner ear. Roughly, the critical band is the band of audio frequencies within which a second tone will interfere with the perception of the first tone by auditory masking.
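As a rough numerical illustration of an auditory filter bandwidth, the sketch below uses the equivalent rectangular bandwidth (ERB) approximation of Glasberg and Moore, a later refinement that is not part of the summary above and is assumed here only as a stand-in for the critical band. The helper `probably_interferes` and its half-bandwidth rule are hypothetical simplifications, not an established masking model.

```python
def erb_hz(center_hz: float) -> float:
    """Approximate auditory filter bandwidth (ERB) in Hz at a center frequency in Hz.

    Assumption: Glasberg and Moore approximation, ERB = 24.7 * (4.37 * f_kHz + 1).
    """
    return 24.7 * (4.37 * center_hz / 1000.0 + 1.0)


def probably_interferes(masker_hz: float, probe_hz: float) -> bool:
    """Hypothetical rule of thumb: the probe falls within half a bandwidth of the masker."""
    return abs(probe_hz - masker_hz) <= erb_hz(masker_hz) / 2.0


bandwidth_at_1k = erb_hz(1000.0)               # about 132.6 Hz
near = probably_interferes(1000.0, 1050.0)     # 50 Hz apart, inside the band
far = probably_interferes(1000.0, 1500.0)      # 500 Hz apart, outside the band
```

The bandwidth grows with center frequency, which is consistent with the idea that a fixed frequency separation matters more at low frequencies than at high ones.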

Neuroprosthetics is a discipline related to neuroscience and biomedical engineering concerned with developing neural prostheses. They are sometimes contrasted with a brain–computer interface, which connects the brain to a computer rather than a device meant to replace missing biological functionality.

Volley theory states that groups of neurons of the auditory system respond to a sound by firing action potentials slightly out of phase with one another so that when combined, a greater frequency of sound can be encoded and sent to the brain to be analyzed. The theory was proposed by Ernest Wever and Charles Bray in 1930 as a supplement to the frequency theory of hearing. It was later discovered that this only occurs in response to sounds that are about 500 Hz to 5000 Hz.
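The staggered firing described above can be sketched with an idealized toy model. It assumes each neuron fires on exactly every n-th cycle of the tone, offset by one cycle from its neighbour; real neurons are far noisier, and the function names and parameters here are illustrative only.

```python
def volley_spike_trains(tone_hz: float, n_neurons: int, duration_s: float) -> list[list[float]]:
    """Return one spike-time list per neuron.

    Idealized assumption: the neurons take turns, so neuron k fires on
    cycles k, k + n, k + 2n, ... of the tone and never on consecutive cycles.
    """
    period = 1.0 / tone_hz
    n_cycles = int(round(duration_s * tone_hz))
    trains: list[list[float]] = [[] for _ in range(n_neurons)]
    for cycle in range(n_cycles):
        trains[cycle % n_neurons].append(cycle * period)
    return trains


def firing_rate(spike_times: list[float], duration_s: float) -> float:
    """Mean firing rate in spikes per second."""
    return len(spike_times) / duration_s


duration = 0.1
trains = volley_spike_trains(tone_hz=2000.0, n_neurons=4, duration_s=duration)

# Each neuron fires at only a quarter of the tone frequency...
per_neuron = [firing_rate(t, duration) for t in trains]

# ...but the pooled spikes mark every cycle of the 2000 Hz tone.
pooled = sorted(s for t in trains for s in t)
pooled_rate = firing_rate(pooled, duration)
```

In this sketch no single neuron exceeds about 500 spikes per second, yet the combined train carries one spike per cycle of the tone.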

The temporal theory of hearing, also called frequency theory or timing theory, states that human perception of sound depends on temporal patterns with which neurons respond to sound in the cochlea. Therefore, in this theory, the pitch of a pure tone is determined by the period of neuron firing patterns—either of single neurons, or groups as described by the volley theory. Temporal theory competes with the place theory of hearing, which instead states that pitch is signaled according to the locations of vibrations along the basilar membrane.
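A minimal sketch of the timing idea, assuming an idealized spike train with exactly one spike per cycle of a pure tone: the pitch estimate is the reciprocal of the typical interval between spikes. The function name and the use of the median interval are choices made for this example, not a model taken from the literature.

```python
from statistics import median


def pitch_from_spike_times(spike_times: list[float]) -> float:
    """Estimate frequency in Hz as the reciprocal of the median interspike interval.

    Assumption: spike_times is sorted and holds one spike per stimulus cycle.
    A neuron that skips cycles would yield intervals that are multiples of the period.
    """
    intervals = [later - earlier for earlier, later in zip(spike_times, spike_times[1:])]
    if not intervals:
        raise ValueError("need at least two spikes to measure an interval")
    return 1.0 / median(intervals)


# Idealized spike train phase-locked to a 440 Hz pure tone.
spikes = [cycle / 440.0 for cycle in range(50)]
estimate = pitch_from_spike_times(spikes)
```

Under place theory, by contrast, the same tone would be identified by where along the basilar membrane the vibration peaks rather than by these intervals.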

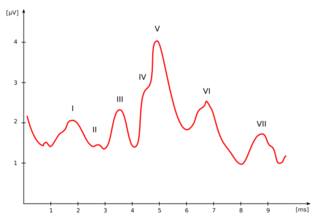

The auditory brainstem response (ABR), also called brainstem evoked response audiometry (BERA), brainstem auditory evoked potentials (BAEPs), or brainstem auditory evoked responses (BAERs), is an auditory evoked potential extracted from ongoing electrical activity in the brain and recorded via electrodes placed on the scalp. The recording is a series of six to seven vertex-positive waves, of which waves I through V are evaluated. These waves, labeled with Roman numerals in the Jewett and Williston convention, occur in the first 10 milliseconds after the onset of an auditory stimulus. The ABR is considered an exogenous response because it depends on external factors.

Binaural fusion or binaural integration is a cognitive process that involves the combination of different auditory information presented binaurally, or to each ear. In humans, this process is essential in understanding speech in noisy and reverberant environments.

An analog ear or analog cochlea is a model of the ear or of the cochlea based on an electrical, electronic or mechanical analog. An analog ear is commonly described as an interconnection of electrical elements such as resistors, capacitors, and inductors; sometimes transformers and active amplifiers are included.

Computational auditory scene analysis (CASA) is the study of auditory scene analysis by computational means. In essence, CASA systems are "machine listening" systems that aim to separate mixtures of sound sources in the same way that human listeners do. CASA differs from the field of blind signal separation in that it is based on the mechanisms of the human auditory system, and thus uses no more than two microphone recordings of an acoustic environment. It is related to the cocktail party problem.

Hearing, or auditory perception, is the ability to perceive sounds through an organ, such as an ear, by detecting vibrations as periodic changes in the pressure of a surrounding medium. The academic field concerned with hearing is auditory science.

The neuroscience of music is the scientific study of brain-based mechanisms involved in the cognitive processes underlying music. These behaviours include music listening, performing, composing, reading, writing, and ancillary activities. It also is increasingly concerned with the brain basis for musical aesthetics and musical emotion. Scientists working in this field may have training in cognitive neuroscience, neurology, neuroanatomy, psychology, music theory, computer science, and other relevant fields.

Electrocochleography is a technique of recording electrical potentials generated in the inner ear and auditory nerve in response to sound stimulation, using an electrode placed in the ear canal or tympanic membrane. The test is performed by an otologist or audiologist with specialized training, and is used for detection of elevated inner ear pressure or for the testing and monitoring of inner ear and auditory nerve function during surgery.

Temporal envelope (ENV) and temporal fine structure (TFS) are changes in the amplitude and frequency of sound perceived by humans over time. These temporal changes are responsible for several aspects of auditory perception, including loudness, pitch and timbre perception and spatial hearing.

References

- Mather, George (2006). Foundations of perception. Psychology Press. ISBN 0-86377-834-8.

- Moore, Brian C. J. (2003). An introduction to the psychology of hearing. Emerald Group Publishing. ISBN 0-12-505628-1.

- Delgutte, Bertrand (1999). "Auditory Neural Processing of Speech". In William J. Hardcastle; John Laver (eds.). The Handbook of Phonetic Sciences. Blackwell Publishing. ISBN 0-631-21478-X.

- Gelfand, Stanley A. (2001). Essentials of Audiology. Thieme. ISBN 1-58890-017-7.

- de Cheveigné, Alain (2005). "Pitch Perception Models". In Christopher J. Plack; Andrew J. Oxenham; Richard R. Fay; Arthur N. Popper (eds.). Pitch. Birkhäuser. ISBN 0-387-23472-1.

- Lightfoot Barnes, Charles (1897). Practical Acoustics. Macmillan. p. 160.

- Martin, Benjamin (1781). The Young Gentleman and Lady's Philosophy: In a Continued Survey of the Works of Nature and Art by Way of Dialogue. W. Owen.

- Fearn R, Carter P, Wolfe J (1999). "The perception of pitch by users of cochlear implants: possible significance for rate and place theories of pitch". Acoustics Australia. 27 (2): 41–43.