Texture mapping is a method for mapping a texture on a computer-generated graphic. "Texture" in this context can be high frequency detail, surface texture, or color.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

Skeletal animation or rigging is a technique in computer animation in which a character is represented in two parts: a polygonal or parametric mesh representation of the surface of the object, and a hierarchical set of interconnected parts, a virtual armature used to animate the mesh. While this technique is often used to animate humans and other organic figures, it only serves to make the animation process more intuitive, and the same technique can be used to control the deformation of any object—such as a door, a spoon, a building, or a galaxy. When the animated object is more general than, for example, a humanoid character, the set of "bones" may not be hierarchical or interconnected, but simply represent a higher-level description of the motion of the part of mesh it is influencing.

In 3D computer graphics and solid modeling, a polygon mesh is a collection of vertices, edges and faces that defines the shape of a polyhedral object. The faces usually consist of triangles, quadrilaterals (quads), or other simple convex polygons (n-gons), since this simplifies rendering, but may also be more generally composed of concave polygons, or even polygons with holes.

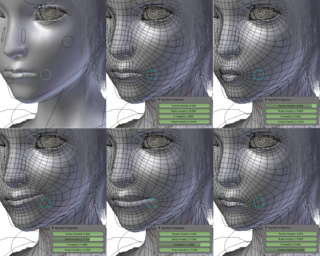

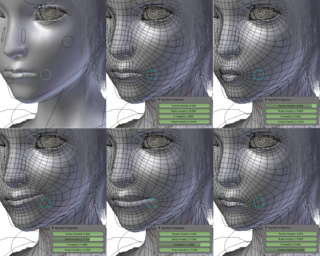

In the field of 3D computer graphics, a subdivision surface is a curved surface represented by the specification of a coarser polygon mesh and produced by a recursive algorithmic method. The curved surface, the underlying inner mesh, can be calculated from the coarse mesh, known as the control cage or outer mesh, as the functional limit of an iterative process of subdividing each polygonal face into smaller faces that better approximate the final underlying curved surface. Less commonly, a simple algorithm is used to add geometry to a mesh by subdividing the faces into smaller ones without changing the overall shape or volume.

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene—a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

In computer graphics, level of detail (LOD) refers to the complexity of a 3D model representation. LOD can be decreased as the model moves away from the viewer or according to other metrics such as object importance, viewpoint-relative speed or position. LOD techniques increase the efficiency of rendering by decreasing the workload on graphics pipeline stages, usually vertex transformations. The reduced visual quality of the model is often unnoticed because of the small effect on object appearance when distant or moving fast.

In 3D computer graphics, polygonal modeling is an approach for modeling objects by representing or approximating their surfaces using polygon meshes. Polygonal modeling is well suited to scanline rendering and is therefore the method of choice for real-time computer graphics. Alternate methods of representing 3D objects include NURBS surfaces, subdivision surfaces, and equation-based representations used in ray tracers.

Geometry processing is an area of research that uses concepts from applied mathematics, computer science and engineering to design efficient algorithms for the acquisition, reconstruction, analysis, manipulation, simulation and transmission of complex 3D models. As the name implies, many of the concepts, data structures, and algorithms are directly analogous to signal processing and image processing. For example, where image smoothing might convolve an intensity signal with a blur kernel formed using the Laplace operator, geometric smoothing might be achieved by convolving a surface geometry with a blur kernel formed using the Laplace-Beltrami operator.

Computer facial animation is primarily an area of computer graphics that encapsulates methods and techniques for generating and animating images or models of a character face. The character can be a human, a humanoid, an animal, a legendary creature or character, etc. Due to its subject and output type, it is also related to many other scientific and artistic fields from psychology to traditional animation. The importance of human faces in verbal and non-verbal communication and advances in computer graphics hardware and software have caused considerable scientific, technological, and artistic interests in computer facial animation.

Mesh generation is the practice of creating a mesh, a subdivision of a continuous geometric space into discrete geometric and topological cells. Often these cells form a simplicial complex. Usually the cells partition the geometric input domain. Mesh cells are used as discrete local approximations of the larger domain. Meshes are created by computer algorithms, often with human guidance through a GUI, depending on the complexity of the domain and the type of mesh desired. A typical goal is to create a mesh that accurately captures the input domain geometry, with high-quality (well-shaped) cells, and without so many cells as to make subsequent calculations intractable. The mesh should also be fine in areas that are important for the subsequent calculations.

Interactive skeleton-driven simulation is a scientific computer simulation technique used to approximate realistic physical deformations of dynamic bodies in real-time. It involves using elastic dynamics and mathematical optimizations to decide the body-shapes during motion and interaction with forces. It has various applications within realistic simulations for medicine, 3D computer animation and virtual reality.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

MakeHuman is a free and open source 3D computer graphics middleware designed for the prototyping of photorealistic humanoids. It is developed by a community of programmers, artists, and academics interested in 3D character modeling.

Simplygon is 3D computer graphics software for automatic 3D optimization, based on proprietary methods for creating levels of detail (LODs) through Polygon mesh reduction and other optimization techniques.

Morph target animation, per-vertex animation, shape interpolation, shape keys, or blend shapes is a method of 3D computer animation used together with techniques such as skeletal animation. In a morph target animation, a "deformed" version of a mesh is stored as a series of vertex positions. In each key frame of an animation, the vertices are then interpolated between these stored positions.

In 3D computer graphics, 3D modeling is the process of developing a mathematical coordinate-based representation of a surface of an object in three dimensions via specialized software by manipulating edges, vertices, and polygons in a simulated 3D space.

In computer graphics, tessellation is the dividing of datasets of polygons presenting objects in a scene into suitable structures for rendering. Especially for real-time rendering, data is tessellated into triangles, for example in OpenGL 4.0 and Direct3D 11.

This is a glossary of terms relating to computer graphics.

Amitabh Varshney is an Indian-born American computer scientist. He is an IEEE fellow, and serves as Dean of the University of Maryland College of Computer, Mathematical, and Natural Sciences. Before being named Dean, Varshney was the director of the University of Maryland Institute for Advanced Computer Studies (UMIACS) from 2010 to 2018.