Bra–ket notation, also called Dirac notation, is a notation for linear algebra and linear operators on complex vector spaces together with their dual space both in the finite-dimensional and infinite-dimensional case. It is specifically designed to ease the types of calculations that frequently come up in quantum mechanics. Its use in quantum mechanics is quite widespread.
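As a rough illustration of how the notation maps onto ordinary linear algebra, the following sketch models kets as lists of complex amplitudes, bras as their complex conjugates, and operators as lists of rows. The helper names (`bra`, `braket`, `apply`) and the example states are assumptions made for this sketch, not part of any standard library.

```python
# Kets are modelled as plain lists of complex amplitudes (an illustrative choice).

def bra(ket):
    """Return the bra <psi| as the complex conjugate of the ket's components."""
    return [complex(c).conjugate() for c in ket]

def braket(phi, psi):
    """Inner product <phi|psi>: conjugate-linear in phi, linear in psi."""
    return sum(b * k for b, k in zip(bra(phi), psi))

def apply(op, ket):
    """Apply a linear operator, given as a list of rows, to a ket: A|psi>."""
    return [sum(a * k for a, k in zip(row, ket)) for row in op]

# Two qubit states written in the computational basis.
ket0 = [1, 0]
ket_plus = [2 ** -0.5, 2 ** -0.5]

# Pauli-X operator, which swaps the two basis states.
pauli_x = [[0, 1], [1, 0]]

overlap = braket(ket0, ket_plus)                          # <0|+>
expectation = braket(ket_plus, apply(pauli_x, ket_plus))  # <+|X|+>
```

Here `overlap` is approximately 1/√2 and `expectation` is approximately 1, up to floating-point rounding.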

In mathematics, an inner product space is a real vector space or a complex vector space with an operation called an inner product. The inner product of two vectors in the space is a scalar, often denoted with angle brackets, as in $\langle a, b \rangle$. Inner products allow formal definitions of intuitive geometric notions, such as lengths, angles, and orthogonality of vectors. Inner product spaces generalize Euclidean vector spaces, in which the inner product is the dot product or scalar product of Cartesian coordinates. Inner product spaces of infinite dimension are widely used in functional analysis. Inner product spaces over the field of complex numbers are sometimes referred to as unitary spaces. The first usage of the concept of a vector space with an inner product is due to Giuseppe Peano, in 1898.

The Riesz representation theorem, sometimes called the Riesz–Fréchet representation theorem after Frigyes Riesz and Maurice René Fréchet, establishes an important connection between a Hilbert space and its continuous dual space. If the underlying field is the real numbers, the two are isometrically isomorphic; if the underlying field is the complex numbers, the two are isometrically anti-isomorphic. The (anti-) isomorphism is a particular natural isomorphism.
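The correspondence can be written out as follows. This is a sketch in which the symbols $H$, $\varphi$ and $y_\varphi$ are chosen here for illustration, and the inner product is assumed to be linear in its second argument (the physics convention).

```latex
% For every continuous linear functional \varphi on a Hilbert space H
% there is exactly one vector y_\varphi in H with
\varphi(x) = \langle y_\varphi, x \rangle \quad \text{for all } x \in H,
\qquad \|\varphi\|_{H'} = \|y_\varphi\|_{H}.
% In the complex case the map \varphi \mapsto y_\varphi is conjugate-linear:
y_{\alpha\varphi + \beta\psi} = \overline{\alpha}\, y_\varphi + \overline{\beta}\, y_\psi .
```

The conjugate-linearity in the last line is what makes the identification an anti-isomorphism rather than an isomorphism over the complex numbers.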

The Cauchy–Schwarz inequality is an upper bound on the inner product between two vectors in an inner product space in terms of the product of the vector norms. It is considered one of the most important and widely used inequalities in mathematics.
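Written out for two vectors, with the symbols $u$ and $v$ chosen here for illustration, the inequality reads:

```latex
|\langle u, v \rangle|^{2} \le \langle u, u \rangle \, \langle v, v \rangle
\quad\Longleftrightarrow\quad
|\langle u, v \rangle| \le \|u\| \, \|v\| ,
% with equality if and only if u and v are linearly dependent.
```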

Distributions, also known as Schwartz distributions or generalized functions, are objects that generalize the classical notion of functions in mathematical analysis. Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. In particular, any locally integrable function has a distributional derivative.
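The distributional derivative is defined by moving the derivative onto a test function. The sketch below uses notation assumed for illustration: $T$ is a distribution, $\varphi$ a smooth compactly supported test function, $H$ the Heaviside step function and $\delta$ the Dirac delta.

```latex
% Definition, modelled on integration by parts:
\langle T', \varphi \rangle := -\langle T, \varphi' \rangle .
% Example: the Heaviside step function H has no classical derivative at 0, but
\langle H', \varphi \rangle
  = -\int_{0}^{\infty} \varphi'(x)\, dx
  = \varphi(0)
  = \langle \delta, \varphi \rangle ,
\qquad\text{so } H' = \delta .
```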

In mathematics, particularly linear algebra, an orthonormal basis for an inner product space $V$ with finite dimension is a basis for $V$ whose vectors are orthonormal, that is, they are all unit vectors and orthogonal to each other. For example, the standard basis for a Euclidean space $\mathbb{R}^n$ is an orthonormal basis, where the relevant inner product is the dot product of vectors. The image of the standard basis under a rotation or reflection is also orthonormal, and every orthonormal basis for $\mathbb{R}^n$ arises in this fashion.
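The claim about rotations can be checked numerically. The following minimal sketch works in the plane with the dot product as inner product; the function names and the rotation angle are assumptions chosen for the example.

```python
import math

def dot(u, v):
    """Dot product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))

def is_orthonormal(vectors, tol=1e-12):
    """True if every vector has unit length and distinct vectors are orthogonal."""
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            expected = 1.0 if i == j else 0.0
            if abs(dot(u, v) - expected) > tol:
                return False
    return True

def rotate(v, theta):
    """Rotate a 2D vector counter-clockwise by the angle theta (in radians)."""
    x, y = v
    return (math.cos(theta) * x - math.sin(theta) * y,
            math.sin(theta) * x + math.cos(theta) * y)

standard_basis = [(1.0, 0.0), (0.0, 1.0)]
rotated_basis = [rotate(e, math.pi / 6) for e in standard_basis]
```

Both `is_orthonormal(standard_basis)` and `is_orthonormal(rotated_basis)` hold, while a basis such as `[(1.0, 0.0), (1.0, 1.0)]` fails the check.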

In mathematics, a direct limit is a way to construct a larger object from many smaller objects that are put together in a specific way. These objects may be groups, rings, vector spaces or in general objects from any category. The way they are put together is specified by a system of homomorphisms between those smaller objects. The direct limit of the objects $A_i$, where $i$ ranges over some directed set $I$, is denoted by $\varinjlim A_i$. This notation suppresses the system of homomorphisms; however, the limit depends on the system of homomorphisms.

In mathematics, a linear form is a linear map from a vector space to its field of scalars.

The Fock space is an algebraic construction used in quantum mechanics to construct the quantum state space of a variable or unknown number of identical particles from a single-particle Hilbert space H. It is named after V. A. Fock, who first introduced it in his 1932 paper "Konfigurationsraum und zweite Quantelung".

Pseudo-spectral methods, also known as discrete variable representation (DVR) methods, are a class of numerical methods used in applied mathematics and scientific computing for the solution of partial differential equations. They are closely related to spectral methods, but complement the basis by an additional pseudo-spectral basis, which allows representation of functions on a quadrature grid. This simplifies the evaluation of certain operators, and can considerably speed up the calculation when using fast algorithms such as the fast Fourier transform.
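To illustrate the grid-based idea, here is a minimal sketch of Fourier spectral differentiation on a uniform periodic grid: transform the samples, multiply each mode by $ik$, and transform back. It is an assumption-laden toy. A naive $O(N^2)$ discrete Fourier transform stands in for the fast Fourier transform so that only the standard library is needed, and the function names are chosen for this example.

```python
import cmath
import math

def dft(values, sign):
    """Naive O(N^2) DFT; sign=-1 is the forward transform, +1 the unnormalized inverse."""
    n = len(values)
    return [sum(v * cmath.exp(sign * 2j * math.pi * m * k / n)
                for m, v in enumerate(values))
            for k in range(n)]

def spectral_derivative(samples):
    """Differentiate a 2*pi-periodic function sampled on a uniform grid."""
    n = len(samples)
    coeffs = dft(samples, -1)
    scaled = []
    for k, c in enumerate(coeffs):
        wavenumber = k if k < n / 2 else k - n   # map index to signed frequency
        if n % 2 == 0 and k == n // 2:
            wavenumber = 0                       # drop the ambiguous Nyquist mode
        scaled.append(1j * wavenumber * c)       # d/dx acts as multiplication by i*k
    return [z.real / n for z in dft(scaled, +1)]

n = 16
grid = [2 * math.pi * m / n for m in range(n)]
samples = [math.sin(x) for x in grid]
derivative = spectral_derivative(samples)
max_error = max(abs(d - math.cos(x)) for d, x in zip(derivative, grid))
```

For this band-limited input the computed derivative matches $\cos x$ on the grid up to rounding error. In practice the two `dft` calls would be replaced by a fast Fourier transform.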

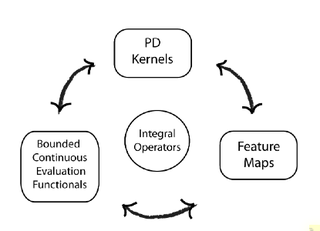

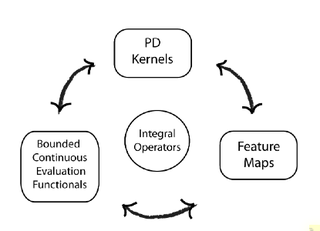

In functional analysis, a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Roughly speaking, this means that if two functions $f$ and $g$ in the RKHS are close in norm, i.e., $\|f - g\|$ is small, then $f$ and $g$ are also pointwise close, i.e., $|f(x) - g(x)|$ is small for all $x$. The converse need not be true. Informally, this can be seen by looking at the supremum norm: a sequence of functions can converge pointwise without converging uniformly, i.e. without converging with respect to the supremum norm.

In linear algebra, the Gram matrix of a set of vectors $v_1, \dots, v_n$ in an inner product space is the Hermitian matrix of inner products, whose entries are given by the inner product $G_{ij} = \langle v_i, v_j \rangle$. If the vectors $v_1, \dots, v_n$ are the columns of a matrix $V$, then the Gram matrix is $V^\dagger V$ in the general case that the vector coordinates are complex numbers, which simplifies to $V^\top V$ for the case that the vector coordinates are real numbers.
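A small sketch of the construction, assuming the standard inner product on complex coordinate vectors taken conjugate-linear in the first argument (which matches the conjugate-transpose formula). The function names and sample vectors are illustrative.

```python
def inner(u, v):
    """Standard inner product <u, v>, conjugate-linear in the first argument."""
    return sum(complex(a).conjugate() * b for a, b in zip(u, v))

def gram_matrix(vectors):
    """Matrix of all pairwise inner products: G[i][j] = <v_i, v_j>."""
    return [[inner(u, v) for v in vectors] for u in vectors]

# Three vectors in C^2; they are necessarily linearly dependent.
vectors = [[1, 1j], [1, 0], [0, 2]]
G = gram_matrix(vectors)

# A Gram matrix equals its own conjugate transpose.
size = len(G)
is_hermitian = all(G[i][j] == G[j][i].conjugate()
                   for i in range(size) for j in range(size))
```

The diagonal entries are the squared norms of the vectors, and `is_hermitian` evaluates to `True`.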

In mathematics, Bochner's theorem characterizes the Fourier transform of a positive finite Borel measure on the real line. More generally in harmonic analysis, Bochner's theorem asserts that under Fourier transform a continuous positive-definite function on a locally compact abelian group corresponds to a finite positive measure on the Pontryagin dual group. The case of sequences was first established by Gustav Herglotz.

In mathematics, a holomorphic vector bundle is a complex vector bundle over a complex manifold X such that the total space E is a complex manifold and the projection map π : E → X is holomorphic. Fundamental examples are the holomorphic tangent bundle of a complex manifold, and its dual, the holomorphic cotangent bundle. A holomorphic line bundle is a rank one holomorphic vector bundle.

In operator theory, Naimark's dilation theorem is a result that characterizes positive operator valued measures. It can be viewed as a consequence of Stinespring's dilation theorem.

Coherent states were introduced in a physical context, first as quasi-classical states in quantum mechanics and then as the backbone of quantum optics; they are described in that spirit in the article Coherent states. However, they have generated a huge variety of generalizations, which have led to a tremendous amount of literature in mathematical physics. In this article, we sketch the main directions of research on this line. For further details, we refer to several existing surveys.

In computer science, and in statistical learning theory in particular, a representer theorem is any of several related results stating that a minimizer of a regularized empirical risk functional defined over a reproducing kernel Hilbert space can be represented as a finite linear combination of kernel products evaluated on the input points in the training set data.
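In symbols, one common form of the statement looks as follows. This is a sketch under assumed notation: $k$ is the reproducing kernel of the space $\mathcal{H}_k$, $(x_i, y_i)$ are the $n$ training pairs, $L$ is a loss function and $g$ is a strictly increasing regularizer.

```latex
f^{*} \in \arg\min_{f \in \mathcal{H}_k}
  \; \frac{1}{n} \sum_{i=1}^{n} L\bigl(y_i, f(x_i)\bigr) + g\bigl(\|f\|_{\mathcal{H}_k}\bigr)
\quad\Longrightarrow\quad
f^{*}(\cdot) = \sum_{i=1}^{n} \alpha_i \, k(\cdot, x_i)
\quad\text{for some } \alpha_1, \dots, \alpha_n \in \mathbb{R}.
```

The practical consequence is that the search over a possibly infinite-dimensional function space reduces to finding $n$ coefficients.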

Regularized least squares (RLS) is a family of methods for solving the least-squares problem while using regularization to further constrain the resulting solution.
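One member of this family is Tikhonov (ridge) regularization, which adds a squared-norm penalty to the least-squares objective. The sketch below assumes that particular choice and solves the resulting normal equations $(X^\top X + \lambda I)\,w = X^\top y$ with a small hand-written Gaussian elimination. The function names and toy data are illustrative only.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):                    # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def ridge_fit(X, y, lam):
    """Minimize ||X w - y||^2 + lam * ||w||^2 via the normal equations."""
    d = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    return solve(A, b)

# Toy data generated exactly by w = [1, 2].
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
w_ols = ridge_fit(X, y, 0.0)    # plain least squares
w_ridge = ridge_fit(X, y, 1.0)  # penalized solution, shrunk toward zero
```

With `lam = 0.0` the fit recovers the generating weights, while `lam = 1.0` yields weights of smaller norm, which is the constraining effect that regularization is meant to provide.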

Low-rank matrix approximations are essential tools in the application of kernel methods to large-scale learning problems.

In mathematical analysis, the spaces of test functions and distributions are topological vector spaces (TVSs) that are used in the definition and application of distributions. Test functions are usually infinitely differentiable complex-valued functions on a non-empty open subset $U \subseteq \mathbb{R}^n$ that have compact support. The space of all test functions, denoted by $C_c^\infty(U)$, is endowed with a certain topology, called the canonical LF-topology, that makes $C_c^\infty(U)$ into a complete Hausdorff locally convex TVS. The strong dual space of $C_c^\infty(U)$ is called the space of distributions on $U$ and is denoted by $\mathcal{D}'(U) := \left(C_c^\infty(U)\right)'_b$, where the "$b$" subscript indicates that the continuous dual space of $C_c^\infty(U)$, denoted by $\left(C_c^\infty(U)\right)'$, is endowed with the strong dual topology.