The graphical user interface, or GUI, is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation, instead of text-based user interfaces, typed command labels, or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls, and process controls. Designing user interfaces draws on disciplines such as ergonomics and psychology.

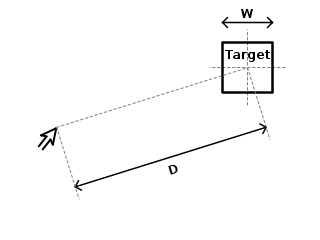

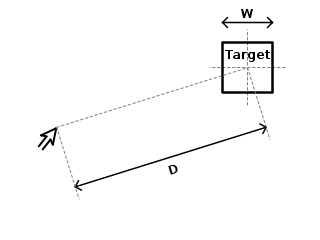

Fitts's law is a predictive model of human movement primarily used in human–computer interaction and ergonomics. The law predicts that the time required to rapidly move to a target area is a function of the ratio between the distance to the target and the width of the target. Fitts's law is used to model the act of pointing, either by physically touching an object with a hand or finger, or virtually, by pointing to an object on a computer monitor using a pointing device. It was initially developed by Paul Fitts.
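The paragraph states the law only in words. A common way to write it in HCI is the Shannon formulation `MT = a + b · log2(D/W + 1)`, where `D` is the distance to the target, `W` is its width, `log2(D/W + 1)` is the index of difficulty in bits, and `a` and `b` are constants fitted empirically for a given device and user group. (Fitts's original 1954 formulation used `log2(2D/W)` instead.) The sketch below assumes the Shannon form; the function names and the constants `A` and `B` are hypothetical placeholders chosen to keep the arithmetic readable, not measured values.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts's index of difficulty (Shannon formulation), in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted movement time MT = a + b * ID.

    `a` (intercept, seconds) and `b` (seconds per bit) must be fitted to
    measured pointing data; they are not universal constants.
    """
    return a + b * index_of_difficulty(distance, width)


# Hypothetical constants, chosen only to make the arithmetic easy to follow.
A, B = 0.1, 0.2
far_target = movement_time(224, 32, A, B)   # ID = log2(7 + 1) = 3 bits
near_target = movement_time(96, 32, A, B)   # ID = log2(3 + 1) = 2 bits
print(f"far: {far_target:.2f} s, near: {near_target:.2f} s")
# prints "far: 0.70 s, near: 0.50 s"
```

Because only the ratio `D/W` enters the formula, doubling both the distance and the width of a target leaves the predicted time unchanged.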

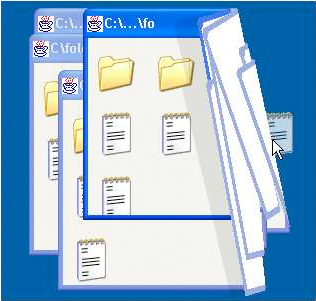

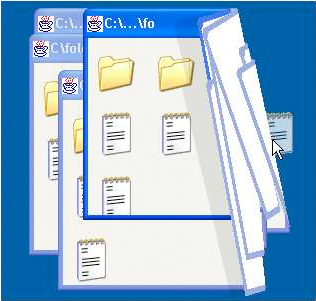

In computing, a windowing system is a software suite that manages different parts of the display screen separately. It is a type of graphical user interface (GUI) that implements the WIMP (windows, icons, menus, pointer) paradigm.
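One concrete part of "managing different parts of the display separately" is deciding which window owns a given screen point when windows overlap. The sketch below assumes a simple stacking model in which later windows are drawn on top and clicking a window raises it; the `Window` and `WindowManager` classes are hypothetical and do not correspond to any real windowing system's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(eq=False)  # compare windows by identity, not by field values
class Window:
    title: str
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: int, py: int) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


class WindowManager:
    """Hypothetical stacking manager: the last window in `stack` is on top."""

    def __init__(self) -> None:
        self.stack: List[Window] = []

    def open(self, window: Window) -> None:
        self.stack.append(window)           # new windows open on top

    def raise_window(self, window: Window) -> None:
        self.stack.remove(window)
        self.stack.append(window)

    def window_at(self, px: int, py: int) -> Optional[Window]:
        # Search from the topmost window down; the first hit owns the point.
        for window in reversed(self.stack):
            if window.contains(px, py):
                return window
        return None

    def click(self, px: int, py: int) -> Optional[Window]:
        window = self.window_at(px, py)
        if window is not None:
            self.raise_window(window)       # clicked window comes to the front
        return window
```

Real windowing systems add clipping, damage tracking, and input focus rules on top of this, but the top-down search over a stacking order is the core of routing a pointer event to a window.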

Jakob Nielsen is a Danish web usability consultant, human–computer interaction researcher, and co-founder of Nielsen Norman Group. He was named the “guru of Web page usability” in 1998 by The New York Times and the “king of usability” by Internet Magazine.

A heuristic evaluation is a usability inspection method for computer software that helps identify usability problems in a user interface design. Evaluators examine the interface and judge its compliance with recognized usability principles (the "heuristics"). These methods are now widely taught and practiced in the new media sector, where user interfaces are often designed quickly and on budgets that may leave little money for other types of interface testing.

In computer science, human–computer interaction, and interaction design, direct manipulation is an approach to interfaces that involves continuous representation of objects of interest together with rapid, reversible, and incremental actions and feedback. Unlike other interaction styles such as command languages, direct manipulation lets users manipulate the objects presented to them using actions that correspond at least loosely to the manipulation of physical objects. An example of direct manipulation is resizing a graphical shape, such as a rectangle, by dragging its corners or edges with a mouse.
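The rectangle-resizing example can be sketched to show the three properties named above: each pointer-motion event produces an immediate, incremental update; the shape stays continuously represented; and the drag can be reversed. This is a minimal sketch under stated assumptions: the names `Rect`, `drag_corner`, and `ResizeSession` are hypothetical, coordinates follow the usual screen convention where y grows downward, and no particular GUI toolkit is implied.

```python
from dataclasses import dataclass

CORNERS = {"top_left", "top_right", "bottom_left", "bottom_right"}


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen coordinates (y grows downward)."""
    left: float
    top: float
    right: float
    bottom: float


def drag_corner(rect: Rect, corner: str, x: float, y: float,
                min_size: float = 1.0) -> Rect:
    """Return a copy of `rect` with `corner` moved to the pointer at (x, y).

    The opposite corner stays fixed, and the size is clamped to `min_size`
    so the shape cannot be dragged inside out.
    """
    if corner not in CORNERS:
        raise ValueError(f"unknown corner: {corner!r}")
    left, top, right, bottom = rect.left, rect.top, rect.right, rect.bottom
    if corner.endswith("left"):
        left = min(x, right - min_size)
    else:
        right = max(x, left + min_size)
    if corner.startswith("top"):
        top = min(y, bottom - min_size)
    else:
        bottom = max(y, top + min_size)
    return Rect(left, top, right, bottom)


class ResizeSession:
    """One drag gesture: incremental updates, reversible via cancel()."""

    def __init__(self, rect: Rect, corner: str):
        self.original = rect
        self.current = rect
        self.corner = corner

    def move(self, x: float, y: float) -> Rect:
        # Intended to be called on every pointer-motion event so the
        # on-screen shape tracks the pointer continuously.
        self.current = drag_corner(self.original, self.corner, x, y)
        return self.current

    def cancel(self) -> Rect:
        # Reversibility: restore the shape as it was before the drag began.
        self.current = self.original
        return self.current
```

Returning a new immutable `Rect` on every move, rather than mutating one in place, is what makes `cancel()` (or a fuller undo history) trivial to support.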

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

A voice user interface (VUI) enables spoken interaction with computers, using speech recognition to understand spoken commands and answer questions, and typically text-to-speech to play a reply. A voice command device is a device controlled with a voice user interface.

The Special Interest Group on Computer–Human Interaction (SIGCHI) is the special interest group of the Association for Computing Machinery (ACM) that focuses on human–computer interaction (HCI).

An interaction technique, user interface technique, or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page in a Web browser by clicking a button, pressing a key, performing a mouse gesture, or uttering a speech command. The term is widely used in human–computer interaction; in particular, "new interaction technique" is frequently used to introduce a novel user interface design idea.
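The browser example separates the task ("go back") from the techniques that trigger it. A minimal sketch of that separation, assuming a toy browser with a back stack: the `Browser` class, the `TASKS` and `TECHNIQUES` tables, and the event names are all hypothetical placeholders (the `Alt+Left` shortcut is just an illustrative binding), not any real browser's API.

```python
from typing import Callable, Dict, List, Tuple


class Browser:
    """Toy browser with a back stack (hypothetical, for illustration only)."""

    def __init__(self, start: str):
        self.current = start
        self._back: List[str] = []

    def visit(self, url: str) -> None:
        self._back.append(self.current)
        self.current = url

    def go_back(self) -> None:
        if self._back:          # nothing to do on the first page
            self.current = self._back.pop()


# The single task, expressed independently of any input hardware.
TASKS: Dict[str, Callable[[Browser], None]] = {"go_back": Browser.go_back}

# Several interaction techniques: each (modality, event) pair is one way
# of accomplishing the same task. Event names are illustrative placeholders.
TECHNIQUES: Dict[Tuple[str, str], str] = {
    ("mouse", "click:back_button"): "go_back",
    ("keyboard", "Alt+Left"): "go_back",
    ("mouse", "gesture:swipe_left"): "go_back",
    ("speech", "go back"): "go_back",
}


def dispatch(browser: Browser, modality: str, event: str) -> bool:
    """Run the task bound to this input, if any; return whether one ran."""
    task = TECHNIQUES.get((modality, event))
    if task is None:
        return False
    TASKS[task](browser)
    return True
```

Keeping the task table separate from the technique table means a new interaction technique can be added by registering one more input binding, without touching the task logic.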

The task-focused interface is a type of user interface that extends the desktop metaphor of the graphical user interface to make tasks, not files and folders, the primary unit of interaction. Instead of showing entire hierarchies of information, such as a tree of documents, a task-focused interface shows only the subset of the tree that is relevant to the task at hand. This addresses information overload when working with large hierarchies, such as those in software systems or large document collections. A task-focused interface is composed of a mechanism that lets the user specify the task being worked on and switch between active tasks, a model of the task context such as a degree-of-interest (DOI) ranking, and a focusing mechanism that filters or highlights the relevant documents. Studies of the task-focused interface have reported statistically significant increases in knowledge-worker productivity. It has been broadly adopted by programmers and is a key part of the Eclipse integrated development environment. The technology is also referred to as the "task context" model and the "task-focused programming" paradigm.
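The three components described above (task switching, a DOI model of the task context, and a focusing mechanism) can be sketched together. This is a minimal sketch under a loudly simplified assumption: here an element's DOI is its interaction count minus a decay term proportional to how many events have passed since it was last touched. That formula, and the names `TaskContext`, `TaskManager`, and `focus`, are hypothetical illustrations, not the model used in Eclipse.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional


class TaskContext:
    """Degree-of-interest (DOI) model for one task (simplified assumption).

    DOI(e) = interactions with e  -  decay * events since e was last touched.
    Elements never touched have DOI 0.
    """

    def __init__(self, decay: float = 0.1):
        self.decay = decay
        self._hits: Dict[str, int] = defaultdict(int)
        self._last: Dict[str, int] = {}
        self._events = 0

    def interact(self, element: str) -> None:
        # Selecting or editing an element raises its interest.
        self._events += 1
        self._hits[element] += 1
        self._last[element] = self._events

    def doi(self, element: str) -> float:
        if element not in self._last:
            return 0.0
        age = self._events - self._last[element]
        return self._hits[element] - self.decay * age


class TaskManager:
    """Lets the user name the active task and switch between tasks."""

    def __init__(self) -> None:
        self._contexts: Dict[str, TaskContext] = {}
        self.active: Optional[TaskContext] = None

    def activate(self, task: str) -> TaskContext:
        # Switching back to a task restores its existing context.
        self.active = self._contexts.setdefault(task, TaskContext())
        return self.active


def focus(paths: Iterable[str], context: TaskContext,
          threshold: float = 0.0) -> List[str]:
    """Filter a path hierarchy to interesting elements plus their ancestors."""
    visible = set()
    for path in paths:
        if context.doi(path) > threshold:
            parts = path.split("/")
            # Keep parent folders so the filtered tree stays navigable.
            for i in range(1, len(parts) + 1):
                visible.add("/".join(parts[:i]))
    return sorted(visible)
```

For example, after a user opens `src/ui/window.py`, then `src/ui/menu.py`, then `src/ui/window.py` again, `focus` hides untouched files such as `src/core/db.py` and shows only the two UI files and their parent folders.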

In computing, 3D interaction is a form of human–machine interaction in which users can move and interact in 3D space. Both the human and the machine process information for which the physical position of elements in 3D space is relevant.

Human–computer interaction (HCI) is research into the design and use of computer technology, focusing on the interfaces between people (users) and computers. HCI researchers observe how humans interact with computers and design technologies that let humans interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a human–computer interface (also abbreviated HCI).

Marilyn Mantei Tremaine is an American computer scientist. She is an expert in human–computer interaction and is considered a pioneer of the field.

Michel Beaudouin-Lafon is a French computer scientist working in the field of human–computer interaction. He received his PhD from Paris-Sud 11 University in 1985. He has been a professor of computer science at Paris-Sud 11 University since 1992 and was director of LRI, the university's computer science laboratory, from 2002 to 2009.

Wendy Elizabeth Mackay is a Canadian researcher specializing in human–computer interaction. She has served in every role on the SIGCHI committee, including Chair. She is a member of the CHI Academy and a recipient of a European Research Council Advanced Grant. She was a visiting professor at Stanford University from 2010 to 2012 and received the ACM SIGCHI Lifetime Service Award in 2014.

Yves Guiard is a French cognitive neuroscientist and researcher best known for his work on human laterality and stimulus–response compatibility in the field of human–computer interaction. He is a director of research at the French National Center for Scientific Research (CNRS) and has been a member of the CHI Academy since 2016. He is also an associate editor of ACM Transactions on Computer-Human Interaction and a member of the advisory council of the International Association for the Study of Attention and Performance.

Andrew Cockburn is a professor in the Department of Computer Science and Software Engineering at the University of Canterbury in Christchurch, New Zealand. He leads the Human Computer Interactions Lab, where his research focuses on designing and testing user interfaces that account for inherent human factors.

Shumin Zhai is a Chinese-born American Canadian human–computer interaction (HCI) research scientist and inventor. He is known for his research on input devices and interaction methods, swipe-gesture-based touchscreen keyboards, eye-tracking interfaces, and models of human performance in human–computer interaction. His studies have contributed both to foundational models and understanding of HCI and to practical user interface designs and flagship products. He previously worked at IBM, where he invented the ShapeWriter text entry method for smartphones, a predecessor to the modern Swype keyboard. Dr. Zhai's publications have won the ACM UIST Lasting Impact Award and the IEEE Computer Society Best Paper Award, among others. Dr. Zhai is currently a Principal Scientist at Google, where he leads and directs research, design, and development of human–device input methods and haptics systems.