Related Research Articles

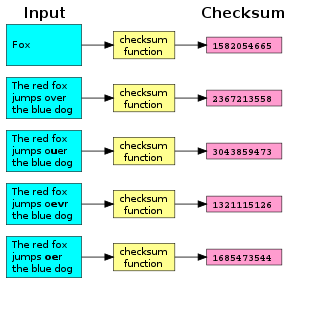

A checksum is a small-sized block of data derived from another block of digital data for the purpose of detecting errors that may have been introduced during its transmission or storage. By themselves, checksums are often used to verify data integrity but are not relied upon to verify data authenticity.

gzip is a file format and a software application used for file compression and decompression. The program was created by Jean-loup Gailly and Mark Adler as a free software replacement for the compress program used in early Unix systems, and intended for use by GNU. Version 0.1 was first publicly released on 31 October 1992, and version 1.0 followed in February 1993.

In computing, tar is a computer software utility for collecting many files into one archive file, often referred to as a tarball, for distribution or backup purposes. The name is derived from "tape archive", as it was originally developed to write data to sequential I/O devices with no file system of their own. The archive data sets created by tar contain various file system parameters, such as name, timestamps, ownership, file-access permissions, and directory organization.

In computing, ls is a command to list computer files in Unix and Unix-like operating systems. ls is specified by POSIX and the Single UNIX Specification. When invoked without any arguments, ls lists the files in the current working directory. The command is also available in the EFI shell. In other environments, such as DOS, OS/2, and Microsoft Windows, similar functionality is provided by the dir command. The numerical computing environments MATLAB and GNU Octave include an ls function with similar functionality.

head is a program on Unix and Unix-like operating systems used to display the beginning of a text file or piped data.

The archiver, also known simply as ar, is a Unix utility that maintains groups of files as a single archive file. Today, ar is generally used only to create and update static library files that the link editor or linker uses and for generating .deb packages for the Debian family; it can be used to create archives for any purpose, but has been largely replaced by tar for purposes other than static libraries. An implementation of ar is included as one of the GNU Binutils.

md5sum is a computer program that calculates and verifies 128-bit MD5 hashes, as described in RFC 1321. The MD5 hash functions as a compact digital fingerprint of a file. As with all such hashing algorithms, there is theoretically an unlimited number of files that will have any given MD5 hash. However, it is very unlikely that any two non-identical files in the real world will have the same MD5 hash, unless they have been specifically created to have the same hash.

dd is a command-line utility for Unix and Unix-like operating systems, the primary purpose of which is to convert and copy files.

The Fletcher checksum is an algorithm for computing a position-dependent checksum devised by John G. Fletcher (1934–2012) at Lawrence Livermore Labs in the late 1970s. The objective of the Fletcher checksum was to provide error-detection properties approaching those of a cyclic redundancy check but with the lower computational effort associated with summation techniques.

wc is a command in Unix, Plan 9, Inferno, and Unix-like operating systems. The program reads either standard input or a list of computer files and generates one or more of the following statistics: newline count, word count, and byte count. If a list of files is provided, both individual file and total statistics follow.

In computing, cp is a command in various Unix and Unix-like operating systems for copying files and directories. The command has three principal modes of operation, expressed by the types of arguments presented to the program for copying a file to another file, one or more files to a directory, or for copying entire directories to another directory.

cksum is a command in Unix and Unix-like operating systems that generates a checksum value for a file or stream of data. The cksum command reads each file given in its arguments, or standard input if no arguments are provided, and outputs the file's CRC-32 checksum and byte count.

df is a standard Unix command used to display the amount of available disk space for file systems on which the invoking user has appropriate read access. df is typically implemented using the statfs or statvfs system calls.

In computing, rm is a basic command on Unix and Unix-like operating systems used to remove objects such as computer files, directories and symbolic links from file systems and also special files such as device nodes, pipes and sockets, similar to the del command in MS-DOS, OS/2, and Microsoft Windows. The command is also available in the EFI shell.

env is a shell command for Unix and Unix-like operating systems. It is used to either print a list of environment variables or run another utility in an altered environment without having to modify the currently existing environment. Using env, variables may be added or removed, and existing variables may be changed by assigning new values to them.

tail is a program available on Unix, Unix-like systems, FreeDOS and MSX-DOS used to display the tail end of a text file or piped data.

sha1sum is a computer program that calculates and verifies SHA-1 hashes. It is commonly used to verify the integrity of files. It is installed by default in most Linux distributions. Typically distributed alongside sha1sum are sha224sum, sha256sum, sha384sum and sha512sum, which use a specific SHA-2 hash function.

sum is a legacy utility available on some Unix and Unix-like operating systems. This utility outputs the checksum of each argument file, as well as the number of blocks they take on disk.

The BSD checksum algorithm was a commonly used, legacy checksum algorithm. It has been implemented in old BSD and is also available through the sum command line utility.

cat is a standard Unix utility that reads files sequentially, writing them to standard output. The name is derived from its function to concatenate files.