Related Research Articles

Ubiquitous computing is a concept in software engineering, hardware engineering and computer science where computing is made to appear anytime and everywhere. In contrast to desktop computing, ubiquitous computing can occur using any device, in any location, and in any format. A user interacts with the computer, which can exist in many different forms, including laptop computers, tablets, smartphones and terminals in everyday objects such as a refrigerator or a pair of glasses. The underlying technologies that support ubiquitous computing include the Internet, advanced middleware, operating systems, mobile code, sensors, microprocessors, new I/O and user interfaces, computer networks, mobile protocols, location and positioning, and new materials.

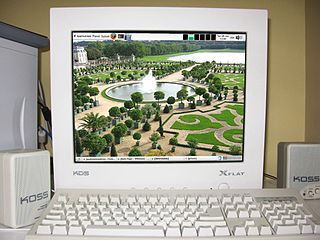

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. Design considerations for user interfaces draw on disciplines such as ergonomics and psychology.

The Aspen Movie Map was a hypermedia system developed at MIT that enabled the user to take a virtual tour through the city of Aspen, Colorado. It was developed by a team working with Andrew Lippman in 1978 with funding from ARPA.

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

Ambient intelligence (AmI) is a term used in computing to refer to electronic environments that are sensitive to the presence of people. The term is generally applied to consumer electronics, telecommunications, and computing.

Digital signage is a segment of electronic signage. Digital displays use technologies such as LCD, LED, projection and e-paper to display digital images, video, web pages, weather data, restaurant menus, or text. They can be found in public spaces, transportation systems, museums, stadiums, retail stores, hotels, restaurants and corporate buildings, where they provide wayfinding, exhibitions, marketing and outdoor advertising. They are used as a network of centrally managed, individually addressable displays that show text, animations or video messages for advertising, information, entertainment and merchandising to targeted audiences.
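The phrase "centrally managed and individually addressable" describes a simple architecture: one controller keeps a registry of displays and can target either a single screen or a group of screens. A minimal in-memory sketch, assuming displays are identified by string IDs and grouped by location; `Display`, `SignageController` and their methods are hypothetical and do not correspond to any particular signage product.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Display:
    display_id: str                 # unique address of one screen (hypothetical scheme)
    location: str                   # e.g. a store, station or building zone
    content: Optional[str] = None   # what the screen is currently showing


class SignageController:
    """Hypothetical central controller for a network of addressable displays."""

    def __init__(self) -> None:
        self._displays: Dict[str, Display] = {}

    def register(self, display: Display) -> None:
        self._displays[display.display_id] = display

    def push(self, display_id: str, content: str) -> None:
        """Send content to exactly one display (raises KeyError if unknown)."""
        self._displays[display_id].content = content

    def push_to_location(self, location: str, content: str) -> int:
        """Target every display at one location; return how many were updated."""
        targets = [d for d in self._displays.values() if d.location == location]
        for display in targets:
            display.content = content
        return len(targets)

    def content_of(self, display_id: str) -> Optional[str]:
        return self._displays[display_id].content


if __name__ == "__main__":
    controller = SignageController()
    controller.register(Display("d1", "lobby"))
    controller.register(Display("d2", "lobby"))
    controller.register(Display("d3", "cafeteria"))
    print(controller.push_to_location("lobby", "wayfinding map"))  # 2
    controller.push("d3", "lunch menu")
    print(controller.content_of("d3"))                              # lunch menu
```

Grouping by location is only one possible targeting rule; a real deployment might also target by audience, time slot or screen type.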

In computing, multi-touch is technology that enables a surface to recognize the presence of more than one point of contact with the surface at the same time. Multi-touch originated in the 1970s at CERN, MIT, the University of Toronto, Carnegie Mellon University and Bell Labs. CERN was using multi-touch screens as early as 1976 for the controls of the Super Proton Synchrotron. Capacitive multi-touch displays were popularized by Apple's iPhone in 2007. Multi-touch may be used to implement additional functionality, such as pinch to zoom, or to activate certain subroutines attached to predefined gestures using gesture recognition.
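Pinch to zoom is a simple example of how two simultaneous contact points become a gesture. A minimal sketch, assuming the touch layer reports each finger as an (x, y) pixel coordinate: the zoom factor is the ratio of the current finger separation to the separation when the gesture began, and the midpoint of the two fingers serves as the zoom anchor. The function names are illustrative and are not any platform's touch API.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two touch points, in pixels."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def pinch_scale(start: Tuple[Point, Point], current: Tuple[Point, Point]) -> float:
    """Zoom factor implied by two fingers moving from `start` to `current`.

    Values > 1 mean the fingers spread apart (zoom in); values < 1 mean
    they moved together (zoom out).
    """
    initial = distance(*start)
    if initial == 0:
        raise ValueError("the two initial touch points must be distinct")
    return distance(*current) / initial


def pinch_center(current: Tuple[Point, Point]) -> Point:
    """Midpoint of the two touches, a common choice of zoom anchor."""
    (x1, y1), (x2, y2) = current
    return ((x1 + x2) / 2, (y1 + y2) / 2)


if __name__ == "__main__":
    start = ((100.0, 100.0), (200.0, 100.0))    # fingers 100 px apart
    current = ((50.0, 100.0), (250.0, 100.0))   # fingers now 200 px apart
    print(pinch_scale(start, current))          # 2.0
    print(pinch_center(current))                # (150.0, 100.0)
```

Using a ratio rather than an absolute distance change keeps the gesture feeling the same regardless of where on the screen, or how far apart, the fingers start.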

Microsoft PixelSense was an interactive surface computing platform that allowed one or more people to use and touch real-world objects and share digital content at the same time. The PixelSense platform consisted of software and hardware products that combined vision-based multi-touch PC hardware, 360-degree multiuser application design, and Windows software to create a natural user interface (NUI).

Kent Larson is an architect and Professor of the Practice at the Massachusetts Institute of Technology. Larson is currently director of the City Science research group at the MIT Media Lab, and co-director with Lord Norman Foster of the Norman Foster Institute on Sustainable Cities based in Madrid. His research is focused on urban design, modeling and simulation, compact transformable housing, and ultralight autonomous mobility on demand. He has established an international consortium of City Science Network labs, and is a founder of multiple MIT Media Lab spin-off companies, including Ori Living and L3cities.

A smart object is an object that enhances interaction not only with people but also with other smart objects. Also known as smart connected products or smart connected things (SCoT), they are products, assets and other things embedded with processors, sensors, software and connectivity that allow data to be exchanged between the product and its environment, manufacturer, operator/user, and other products and systems. Connectivity also enables some capabilities of the product to exist outside the physical device, in what is known as the product cloud. The data collected from these products can then be analyzed to inform decision-making, enable operational efficiencies and continuously improve the performance of the product.

IEEE 1451 is a set of smart transducer interface standards developed by the Institute of Electrical and Electronics Engineers (IEEE) Instrumentation and Measurement Society's Sensor Technology Technical Committee. The standards describe a set of open, common, network-independent communication interfaces for connecting transducers to microprocessors, instrumentation systems, and control/field networks. One of their key elements is the definition of Transducer Electronic Data Sheets (TEDS) for each transducer. The TEDS is a memory device attached to the transducer, which stores transducer identification, calibration, correction data, and manufacturer-related information. The goal of the IEEE 1451 family of standards is to allow access to transducer data through a common set of interfaces, whether the transducers are connected to systems or networks by wired or wireless means.
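Because the TEDS travels with the transducer, a host can identify a sensor and convert its raw readings into engineering units without being configured for that specific device in advance. A minimal sketch of that idea, assuming a sensor with a simple linear calibration (gain and offset): `SimpleTeds`, its fields and the example values are hypothetical, and the actual TEDS format is defined by the standard and is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimpleTeds:
    """Hypothetical, simplified stand-in for a Transducer Electronic Data Sheet.

    Models only the kinds of information a TEDS holds (identification and
    calibration); it does NOT follow the encoding defined by IEEE 1451.
    """
    manufacturer: str
    model: str
    serial_number: str
    units: str
    gain: float    # engineering units per raw count (assumes a linear sensor)
    offset: float  # engineering-unit value at a raw reading of 0


def to_engineering_units(raw_counts: int, teds: SimpleTeds) -> float:
    """Convert a raw reading using the calibration stored with the transducer."""
    return teds.gain * raw_counts + teds.offset


def describe(teds: SimpleTeds) -> str:
    """Human-readable identification string built from the TEDS fields."""
    return f"{teds.manufacturer} {teds.model} (S/N {teds.serial_number}), {teds.units}"


if __name__ == "__main__":
    # Hypothetical temperature sensor: 0.0625 degC per count, -40 degC at count 0.
    sensor_teds = SimpleTeds("ExampleCo", "T-100", "0001", "degC", gain=0.0625, offset=-40.0)
    print(describe(sensor_teds))                      # ExampleCo T-100 (S/N 0001), degC
    print(to_engineering_units(1000, sensor_teds))    # 22.5
```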

SixthSense is a gesture-based wearable computer system developed at MIT Media Lab by Steve Mann in 1994, 1997, and 1998, and further developed by Pranav Mistry in 2009; both developed hardware and software for head-worn and neck-worn versions of it. It comprises a head-worn or neck-worn pendant that contains both a data projector and a camera. Head-worn versions built at MIT Media Lab in 1997 combined cameras and illumination systems for interactive photographic art, and also included gesture recognition.

Human–computer interaction (HCI) is research into the design and use of computer technology, focusing on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "human–computer interface (HCI)".

Intelligent street is the name given to a type of intelligent environment found on a public transit street. It arose from the convergence of communications and ubiquitous computing, intelligent and adaptable user interfaces, and the common infrastructure of the intelligent or mixed pavement.

Sifteo Cubes are an interactive gaming platform developed by Sifteo, Inc. Each cube is motion-aware, measures 1.5 inches, and has a touch screen. They are designed for players aged six and up. On August 30, 2012, Sifteo announced the second generation of the product, the Sifteo Cubes Interactive Game System, which was intended to address various perceived deficiencies of the original Sifteo Cubes. Sifteo was acquired by 3D Robotics in July 2014 for an undisclosed sum, and Sifteo Cubes were discontinued.

Albrecht Schmidt is a computer scientist best known for his work in ubiquitous computing, pervasive computing, and tangible user interfaces. He is a professor at Ludwig Maximilian University of Munich, where he joined the faculty in 2017.

The Human Media Lab (HML) is a research laboratory in human–computer interaction at Queen's University's School of Computing in Kingston, Ontario. Its goals are to advance user interface design by creating and empirically evaluating disruptive new user interface technologies, and to educate graduate students in this process. The Human Media Lab was founded in 2000 by Prof. Roel Vertegaal and employs an average of 12 graduate students.

AudioCubes are a collection of wireless, intelligent, light-emitting objects capable of detecting each other's location and orientation as well as user gestures. They were created by Bert Schiettecatte as electronic musical instruments for use by musicians in live performance, sound design and musical composition, and for creating interactive applications in Max/MSP, Pd and C++.

James Patten is an American interaction designer, inventor, and visual artist. Patten is a TED fellow and speaker whose studio-initiated research has led to the creation of new technology platforms, such as Thumbles (tiny computer-controlled robots), interactive kinetic lighting features, and immersive environments that engage the body.

Chris Harrison is a British-born, American computer scientist and entrepreneur, working in the fields of human–computer interaction, machine learning and sensor-driven interactive systems. He is a professor at Carnegie Mellon University and director of the Future Interfaces Group within the Human–Computer Interaction Institute. He has previously conducted research at AT&T Labs, Microsoft Research, IBM Research and Disney Research. He is also the CTO and co-founder of Qeexo, a machine learning and interaction technology startup.