Related Research Articles

Digital signal processing (DSP) is the use of digital processing, such as by computers or more specialized digital signal processors, to perform a wide variety of signal processing operations. The digital signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency. In digital electronics, a digital signal is represented as a pulse train, which is typically generated by the switching of a transistor.

Signal processing is an electrical engineering subfield that focuses on analysing, modifying, and synthesizing signals such as sound, images, and scientific measurements. Signal processing techniques can be used to improve transmission, storage efficiency and subjective quality and to also emphasize or detect components of interest in a measured signal.

The principal components of a collection of points in a real coordinate space are a sequence of unit vectors, where the -th vector is the direction of a line that best fits the data while being orthogonal to the first vectors. Here, a best-fitting line is defined as one that minimizes the average squared distance from the points to the line. These directions constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Principal component analysis (PCA) is the process of computing the principal components and using them to perform a change of basis on the data, sometimes using only the first few principal components and ignoring the rest.

In mathematics, orthogonality is the generalization of the notion of perpendicularity to the linear algebra of bilinear forms. Two elements u and v of a vector space with bilinear form B are orthogonal when B(u, v) = 0. Depending on the bilinear form, the vector space may contain nonzero self-orthogonal vectors. In the case of function spaces, families of orthogonal functions are used to form a basis.

In linear algebra, orthogonalization is the process of finding a set of orthogonal vectors that span a particular subspace. Formally, starting with a linearly independent set of vectors {v1, ... , vk} in an inner product space, orthogonalization results in a set of orthogonal vectors {u1, ... , uk} that generate the same subspace as the vectors v1, ... , vk. Every vector in the new set is orthogonal to every other vector in the new set; and the new set and the old set have the same linear span.

High-dimensional data, meaning data that requires more than two or three dimensions to represent, can be difficult to interpret. One approach to simplification is to assume that the data of interest lies within lower-dimensional space. If the data of interest is of low enough dimension, the data can be visualised in the low-dimensional space.

In statistics and signal processing, the method of empirical orthogonal function (EOF) analysis is a decomposition of a signal or data set in terms of orthogonal basis functions which are determined from the data. The term is also interchangeable with the geographically weighted Principal components analysis in geophysics.

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons; raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable. Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics.

In signal processing, a filter bank is an array of bandpass filters that separates the input signal into multiple components, each one carrying a single frequency sub-band of the original signal. One application of a filter bank is a graphic equalizer, which can attenuate the components differently and recombine them into a modified version of the original signal. The process of decomposition performed by the filter bank is called analysis ; the output of analysis is referred to as a subband signal with as many subbands as there are filters in the filter bank. The reconstruction process is called synthesis, meaning reconstitution of a complete signal resulting from the filtering process.

Non-negative matrix factorization, also non-negative matrix approximation is a group of algorithms in multivariate analysis and linear algebra where a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms or muscular activity, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically.

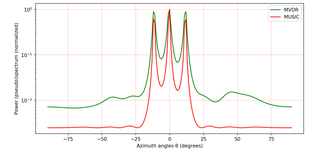

MUSIC is an algorithm used for frequency estimation and radio direction finding.

In statistical signal processing, the goal of spectral density estimation (SDE) is to estimate the spectral density of a random signal from a sequence of time samples of the signal. Intuitively speaking, the spectral density characterizes the frequency content of the signal. One purpose of estimating the spectral density is to detect any periodicities in the data, by observing peaks at the frequencies corresponding to these periodicities.

In time series analysis, singular spectrum analysis (SSA) is a nonparametric spectral estimation method. It combines elements of classical time series analysis, multivariate statistics, multivariate geometry, dynamical systems and signal processing. Its roots lie in the classical Karhunen (1946)–Loève spectral decomposition of time series and random fields and in the Mañé (1981)–Takens (1981) embedding theorem. SSA can be an aid in the decomposition of time series into a sum of components, each having a meaningful interpretation. The name "singular spectrum analysis" relates to the spectrum of eigenvalues in a singular value decomposition of a covariance matrix, and not directly to a frequency domain decomposition.

Speech enhancement aims to improve speech quality by using various algorithms. The objective of enhancement is improvement in intelligibility and/or overall perceptual quality of degraded speech signal using audio signal processing techniques.

Multilinear subspace learning is an approach to dimensionality reduction. Dimensionality reduction can be performed on a data tensor whose observations have been vectorized and organized into a data tensor, or whose observations are matrices that are concatenated into a data tensor. Here are some examples of data tensors whose observations are vectorized or whose observations are matrices concatenated into data tensor images (2D/3D), video sequences (3D/4D), and hyperspectral cubes (3D/4D).

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task.

In signal processing, a kernel adaptive filter is a type of nonlinear adaptive filter. An adaptive filter is a filter that adapts its transfer function to changes in signal properties over time by minimizing an error or loss function that characterizes how far the filter deviates from ideal behavior. The adaptation process is based on learning from a sequence of signal samples and is thus an online algorithm. A nonlinear adaptive filter is one in which the transfer function is nonlinear.

Robust Principal Component Analysis (RPCA) is a modification of the widely used statistical procedure of principal component analysis (PCA) which works well with respect to grossly corrupted observations. A number of different approaches exist for Robust PCA, including an idealized version of Robust PCA, which aims to recover a low-rank matrix L0 from highly corrupted measurements M = L0 +S0. This decomposition in low-rank and sparse matrices can be achieved by techniques such as Principal Component Pursuit method (PCP), Stable PCP, Quantized PCP, Block based PCP, and Local PCP. Then, optimization methods are used such as the Augmented Lagrange Multiplier Method (ALM), Alternating Direction Method (ADM), Fast Alternating Minimization (FAM), Iteratively Reweighted Least Squares (IRLS ) or alternating projections (AP).

In mathematics and statistics, random projection is a technique used to reduce the dimensionality of a set of points which lie in Euclidean space. Random projection methods are known for their power, simplicity, and low error rates when compared to other methods. According to experimental results, random projection preserves distances well, but empirical results are sparse. They have been applied to many natural language tasks under the name random indexing.

In signal processing, multidimensional empirical mode decomposition is an extension of the one-dimensional (1-D) EMD algorithm to a signal encompassing multiple dimensions. The Hilbert–Huang empirical mode decomposition (EMD) process decomposes a signal into intrinsic mode functions combined with the Hilbert spectral analysis, known as the Hilbert–Huang transform (HHT). The multidimensional EMD extends the 1-D EMD algorithm into multiple-dimensional signals. This decomposition can be applied to image processing, audio signal processing, and various other multidimensional signals.

References

- ↑ Krim, Hamid; Viberg, Mats (1996). "Two decades of array signal processing research". IEEE Signal Processing Magazine.

- Kris Hermus; Patrick Wambacq & Hugo Van hamme (2007). "A Review of Signal Subspace Speech Enhancement and Its Application to Noise Robust Speech Recognition". EURASIP Journal on Advances in Signal Processing. 2007: 1. doi: 10.1155/2007/45821 .