Related Research Articles

In computing, a compiler is a computer program that translates computer code written in one programming language into another language. The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language to create an executable program.

An optimizing compiler is a compiler designed to generate code that is optimized in aspects such as minimizing program execution time, memory usage, storage size, and power consumption. Optimization is generally implemented as a sequence of optimizing transformations, a.k.a. compiler optimizations – algorithms that transform code to produce semantically equivalent code optimized for some aspect.

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an implementation of that ISA.

In computer science, abstract interpretation is a theory of sound approximation of the semantics of computer programs, based on monotonic functions over ordered sets, especially lattices. It can be viewed as a partial execution of a computer program which gains information about its semantics without performing all the calculations.

Constant folding and constant propagation are related compiler optimizations used by many modern compilers. An advanced form of constant propagation known as sparse conditional constant propagation can more accurately propagate constants and simultaneously remove dead code.

In computing, inline expansion, or inlining, is a manual or compiler optimization that replaces a function call site with the body of the called function. Inline expansion is similar to macro expansion, but occurs during compilation, without changing the source code, while macro expansion occurs prior to compilation, and results in different text that is then processed by the compiler.

In computer science, program optimization, code optimization, or software optimization is the process of modifying a software system to make some aspect of it work more efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less memory storage or other resources, or draw less power.

In compiler design, static single assignment form is a type of intermediate representation (IR) where each variable is assigned exactly once. SSA is used in most high-quality optimizing compilers for imperative languages, including LLVM, the GNU Compiler Collection, and many commercial compilers.

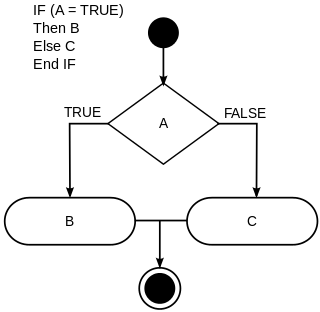

In computer science, conditionals are programming language constructs that perform different computations or actions or return different values depending on the value of a Boolean expression, called a condition.

In compiler theory, dead-code elimination is a compiler optimization to remove dead code. Removing such code has several benefits: it shrinks program size, an important consideration in some contexts, it reduces resource usage such as the number of bytes to be transferred and it allows the running program to avoid executing irrelevant operations, which reduces its running time. It can also enable further optimizations by simplifying program structure. Dead code includes code that can never be executed, and code that only affects dead variables, that is, irrelevant to the program.

In compiler optimization, register allocation is the process of assigning local automatic variables and expression results to a limited number of processor registers.

Data-flow analysis is a technique for gathering information about the possible set of values calculated at various points in a computer program. A program's control-flow graph (CFG) is used to determine those parts of a program to which a particular value assigned to a variable might propagate. The information gathered is often used by compilers when optimizing a program. A canonical example of a data-flow analysis is reaching definitions.

A branch, jump or transfer is an instruction in a computer program that can cause a computer to begin executing a different instruction sequence and thus deviate from its default behavior of executing instructions in order. Branch may also refer to the act of switching execution to a different instruction sequence as a result of executing a branch instruction. Branch instructions are used to implement control flow in program loops and conditionals.

An intermediate representation (IR) is the data structure or code used internally by a compiler or virtual machine to represent source code. An IR is designed to be conducive to further processing, such as optimization and translation. A "good" IR must be accurate – capable of representing the source code without loss of information – and independent of any particular source or target language. An IR may take one of several forms: an in-memory data structure, or a special tuple- or stack-based code readable by the program. In the latter case it is also called an intermediate language.

In compiler theory, partial redundancy elimination (PRE) is a compiler optimization that eliminates expressions that are redundant on some but not necessarily all paths through a program. PRE is a form of common subexpression elimination.

In computer science, pointer analysis, or points-to analysis, is a static code analysis technique that establishes which pointers, or heap references, can point to which variables, or storage locations. It is often a component of more complex analyses such as escape analysis. A closely related technique is shape analysis.

Control tables are tables that control the control flow or play a major part in program control. There are no rigid rules about the structure or content of a control table—its qualifying attribute is its ability to direct control flow in some way through "execution" by a processor or interpreter. The design of such tables is sometimes referred to as table-driven design. In some cases, control tables can be specific implementations of finite-state-machine-based automata-based programming. If there are several hierarchical levels of control table they may behave in a manner equivalent to UML state machines

AbsInt is a software-development tools vendor based in Saarbrücken, Germany. The company was founded in 1998 as a technology spin-off from the Department of Programming Languages and Compiler Construction of Prof. Reinhard Wilhelm at Saarland University. AbsInt specializes in software-verification tools based on abstract interpretation. Its tools are used worldwide by Fortune 500 companies, educational institutions, government agencies and startups.

Value numbering is a technique of determining when two computations in a program are equivalent and eliminating one of them with a semantics-preserving optimization.

A sea of nodes is a graph representation of single-static assignment (SSA) representation of a program that combines data flow and control flow, and relaxes the control flow from a total order to a partial order, keeping only the orderings required by data flow. It is similar to a value dependency graph (VDG).

References

- Cooper, Keith D. and Torczon, Linda. Engineering a Compiler. Morgan Kaufmann. 2005.