Microscopy is the technical field of using microscopes to view objects and areas of objects that cannot be seen with the naked eye. There are three well-known branches of microscopy: optical, electron, and scanning probe microscopy, along with the emerging field of X-ray microscopy.

In optics, the aperture of an optical system is a hole or an opening that primarily limits light propagated through the system. More specifically, the entrance pupil (the image of the aperture as seen from the front of the system) and the focal length of an optical system together determine the cone angle of the bundle of rays that comes to a focus in the image plane.
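As a rough illustration of how the entrance pupil and focal length set the cone of rays, the sketch below computes the f-number N = f/D and the half-angle of the focused cone. It assumes a thin lens focused at infinity; the function names and the 50 mm / 25 mm values are illustrative only.

```python
import math

def f_number(focal_length_mm, pupil_diameter_mm):
    """f-number N = f / D for entrance pupil diameter D."""
    return focal_length_mm / pupil_diameter_mm

def cone_half_angle_deg(focal_length_mm, pupil_diameter_mm):
    """Half-angle of the focused ray cone, assuming a thin lens
    focused at infinity: tan(theta) = D / (2 f)."""
    return math.degrees(math.atan(pupil_diameter_mm / (2.0 * focal_length_mm)))

# Hypothetical lens: 50 mm focal length, 25 mm entrance pupil.
n = f_number(50.0, 25.0)
theta = cone_half_angle_deg(50.0, 25.0)
print(f"f/{n:g}, cone half-angle {theta:.2f} degrees")
```

Halving the pupil diameter doubles the f-number and narrows the cone, which is why stopping down a lens reduces the light reaching the image plane.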

A camera lens is an optical lens or assembly of lenses used in conjunction with a camera body and mechanism to make images of objects either on photographic film or on other media capable of storing an image chemically or electronically.

Adaptive optics (AO) is a technique of precisely deforming a mirror in order to compensate for light distortion. It is used in astronomical telescopes and laser communication systems to remove the effects of atmospheric distortion, and in microscopy, optical fabrication, and retinal imaging systems to reduce optical aberrations. Adaptive optics works by measuring the distortions in a wavefront and compensating for them with a device that corrects those errors, such as a deformable mirror or a liquid crystal array.

In optics, any optical instrument or system – a microscope, telescope, or camera – has a principal limit to its resolution due to the physics of diffraction. An optical instrument is said to be diffraction-limited if it has reached this limit of resolution performance. Other factors may affect an optical system's performance, such as lens imperfections or aberrations, but these are caused by errors in the manufacture or calculation of a lens, whereas the diffraction limit is the maximum resolution possible for a theoretically perfect, or ideal, optical system.
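Two commonly quoted forms of the diffraction limit can be evaluated directly. The sketch below uses the Abbe limit d = λ / (2 NA) for a microscope and the Rayleigh criterion θ ≈ 1.22 λ / D for a circular aperture; the function names and example values are illustrative assumptions, not taken from the text above.

```python
def abbe_limit_nm(wavelength_nm, numerical_aperture):
    """Abbe lateral resolution limit d = lambda / (2 NA), in nanometres."""
    return wavelength_nm / (2.0 * numerical_aperture)

def rayleigh_angle_rad(wavelength_m, aperture_diameter_m):
    """Rayleigh criterion for a circular aperture:
    theta ~= 1.22 * lambda / D, in radians."""
    return 1.22 * wavelength_m / aperture_diameter_m

# Hypothetical examples: green light through an NA 1.4 objective,
# and through a 100 mm telescope aperture.
d = abbe_limit_nm(550.0, 1.4)
theta = rayleigh_angle_rad(550e-9, 0.1)
print(f"Abbe limit: {d:.1f} nm")
print(f"Rayleigh angle: {theta:.3e} rad")
```

Both expressions describe an ideal, aberration-free system; a real instrument can only approach these values.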

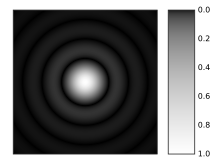

The point spread function (PSF) describes the response of a focused optical imaging system to a point source or point object. A more general term for the PSF is the system's impulse response: the PSF is the impulse response function (IRF) of a focused optical imaging system. In many contexts the PSF can be thought of as the extended blob in an image that represents a single point object, which is treated as a spatial impulse. In functional terms, it is the spatial domain version of the optical transfer function (OTF) of an imaging system. It is a useful concept in Fourier optics, astronomical imaging, medical imaging, electron microscopy and other imaging techniques such as 3D microscopy and fluorescence microscopy.
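The impulse-response view can be made concrete with a one-dimensional sketch: imaging is modelled as a convolution of the object with the PSF, so a single point object reproduces the PSF itself. The PSF samples below are made-up values, and the model assumes a linear, shift-invariant system.

```python
def convolve_same(signal, kernel):
    """Discrete 1D convolution, output the same length as `signal`.
    Assumes an odd-length kernel centred on its middle sample."""
    half = len(kernel) // 2
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # empty object samples contribute nothing
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(signal):
                out[j] += s * w
    return out

# Hypothetical, normalised PSF samples (sum to 1).
psf = [0.05, 0.25, 0.40, 0.25, 0.05]

# A single point object at index 5 acts as a spatial impulse.
point_object = [0.0] * 11
point_object[5] = 1.0

image = convolve_same(point_object, psf)
print(image[3:8])  # the blob around index 5 is a copy of the PSF
```

For an extended object, each point contributes its own shifted and scaled copy of the PSF, and the image is their sum.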

Particle image velocimetry (PIV) is an optical method of flow visualization used in education and research. It is used to obtain instantaneous velocity measurements and related properties in fluids. The fluid is seeded with tracer particles which, for sufficiently small particles, are assumed to faithfully follow the flow dynamics. The fluid with entrained particles is illuminated so that particles are visible. The motion of the seeding particles is used to calculate speed and direction of the flow being studied.
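The displacement of the seeding particles between two exposures is often estimated by cross-correlation. The following is a minimal one-dimensional sketch of that idea, not a full PIV implementation: practical systems correlate two-dimensional interrogation windows and refine the peak to subpixel accuracy. The intensity profiles, `pixel_size_m`, and `dt_s` are hypothetical values.

```python
def estimate_shift(frame_a, frame_b, max_shift):
    """Return the integer shift (in pixels) of frame_b relative to
    frame_a that maximises their cross-correlation."""
    n = len(frame_a)
    best_shift, best_score = 0, float("-inf")
    for shift in range(-max_shift, max_shift + 1):
        score = 0.0
        for i in range(n):
            j = i + shift
            if 0 <= j < n:
                score += frame_a[i] * frame_b[j]
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift

# Hypothetical intensity profiles of one particle in two exposures;
# the particle image moves 3 pixels to the right.
frame_a = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0, 0, 0]
frame_b = [0, 0, 0, 0, 0, 1, 3, 1, 0, 0, 0, 0]

pixel_size_m = 10e-6  # assumed size of one pixel in the flow plane
dt_s = 1e-3           # assumed time between the two exposures

shift_px = estimate_shift(frame_a, frame_b, max_shift=5)
velocity = shift_px * pixel_size_m / dt_s
print(shift_px, velocity)
```

The sign of the shift gives the direction of the flow along the axis, and the pixel size and exposure interval convert it to a speed.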

Confocal microscopy, most frequently confocal laser scanning microscopy (CLSM) or laser scanning confocal microscopy (LSCM), is an optical imaging technique for increasing optical resolution and contrast of a micrograph by means of using a spatial pinhole to block out-of-focus light in image formation. Capturing multiple two-dimensional images at different depths in a sample enables the reconstruction of three-dimensional structures within an object. This technique is used extensively in the scientific and industrial communities and typical applications are in life sciences, semiconductor inspection and materials science.

Cone tracing and beam tracing are derivatives of the ray tracing algorithm that replace rays, which have no thickness, with thick rays.

Optical resolution describes the ability of an imaging system to resolve detail in the object that is being imaged. An imaging system may have many individual components, including one or more lenses, and/or recording and display components. Each of these contributes to the optical resolution of the system; the environment in which the imaging is done is often a further important factor.

Image noise is random variation of brightness or color information in images, and is usually an aspect of electronic noise. It can be produced by the image sensor and circuitry of a scanner or digital camera. Image noise can also originate in film grain and in the unavoidable shot noise of an ideal photon detector. Image noise is an undesirable by-product of image capture that obscures the desired information. Typically the term “image noise” is used to refer to noise in 2D images, not 3D images.
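Shot noise sets a floor on noise even for an ideal detector. Assuming photon arrivals follow Poisson statistics, the standard deviation of a count with mean N is √N, so the signal-to-noise ratio is N / √N = √N. The sketch below evaluates this; the function name is illustrative.

```python
import math

def shot_noise_snr(mean_photons):
    """Signal-to-noise ratio of an ideal photon detector limited only
    by shot noise, assuming Poisson statistics (noise = sqrt(mean))."""
    return mean_photons / math.sqrt(mean_photons)

for photons in (100.0, 10000.0):
    print(photons, shot_noise_snr(photons))
```

Collecting 100 times more photons therefore improves the signal-to-noise ratio only tenfold.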

In signal processing, apodization is the modification of the shape of a mathematical function. The function may represent an electrical signal, an optical transmission, or a mechanical structure. In optics, it is primarily used to suppress the diffraction rings of the Airy pattern around an intensity peak, improving the focus.
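The effect of apodization can be sketched numerically in one dimension. The example below compares the far-field (Fourier-transform) intensity of a uniformly transmitting aperture with that of the same aperture tapered by a Hann window, and reports the strongest side lobe of each. The aperture size, the choice of a Hann taper, and the helper names are assumptions made for illustration.

```python
import cmath
import math

def far_field_intensity(aperture, padded_len=256):
    """Normalised |DFT|^2 of a zero-padded 1D aperture function,
    for non-negative spatial frequencies only."""
    out = []
    for k in range(padded_len // 2):
        amp = sum(a * cmath.exp(-2j * cmath.pi * k * n / padded_len)
                  for n, a in enumerate(aperture))
        out.append(abs(amp) ** 2)
    peak = out[0]
    return [v / peak for v in out]

def peak_sidelobe(intensity):
    """Largest value beyond the first minimum of the main lobe."""
    k = 1
    while k < len(intensity) and intensity[k] < intensity[k - 1]:
        k += 1
    return max(intensity[k:])

n_samples = 16
uniform = [1.0] * n_samples
# Hann taper: transmission falls smoothly to zero at the aperture edges.
hann = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / (n_samples - 1))
        for n in range(n_samples)]

sidelobe_uniform = peak_sidelobe(far_field_intensity(uniform))
sidelobe_hann = peak_sidelobe(far_field_intensity(hann))
print(f"uniform aperture: {sidelobe_uniform:.4f}")
print(f"Hann-apodized:    {sidelobe_hann:.6f}")
```

The tapered aperture trades a wider central peak for much weaker side lobes, which is the usual compromise in apodization.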

The optical transfer function (OTF) of an optical system such as a camera, microscope, human eye, or projector specifies how different spatial frequencies are captured or transmitted. It is used by optical engineers to describe how the optics project light from the object or scene onto a photographic film, detector array, retina, screen, or simply the next item in the optical transmission chain. A variant, the modulation transfer function (MTF), neglects phase effects, but is equivalent to the OTF in many situations.
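Because the OTF is the Fourier transform of the point spread function, a sampled PSF can be turned into an OTF with a discrete Fourier transform, and the MTF is then its magnitude. The sketch below uses a small, made-up symmetric PSF stored with its centre at index 0; for such a PSF the OTF has no phase term, so the MTF carries the same information.

```python
import cmath

def dft(values):
    """Plain discrete Fourier transform of a short sequence."""
    n = len(values)
    return [sum(v * cmath.exp(-2j * cmath.pi * k * i / n)
                for i, v in enumerate(values))
            for k in range(n)]

# Hypothetical symmetric PSF, centred on index 0 and wrapping around.
psf = [0.40, 0.25, 0.05, 0.0, 0.0, 0.0, 0.05, 0.25]

otf = dft(psf)
otf = [v / otf[0] for v in otf]  # normalise so OTF = 1 at zero frequency
mtf = [abs(v) for v in otf]      # MTF discards the phase of the OTF

for k in range(len(psf) // 2 + 1):
    print(k, round(mtf[k], 4))
```

The falling MTF values show that fine detail (high spatial frequency) is transmitted with less contrast than coarse detail.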

The following are common definitions related to the machine vision field.

Argus was a two-beam, high-power infrared neodymium-doped silica glass laser with a 20 cm (7.9 in) output aperture built at Lawrence Livermore National Laboratory in 1976 for the study of inertial confinement fusion. Argus advanced the study of laser-target interaction and paved the way for the construction of its successor, the 20-beam Shiva laser.

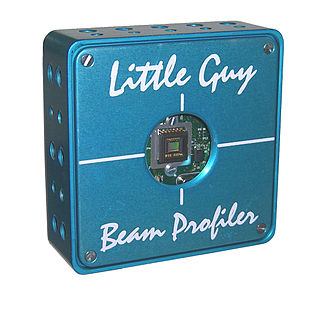

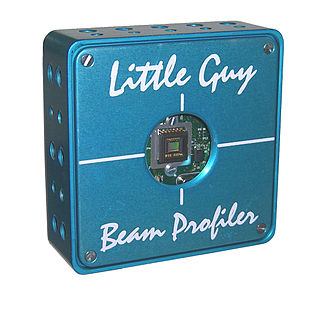

A laser beam profiler captures, displays, and records the spatial intensity profile of a laser beam at a particular plane transverse to the beam propagation path. Since there are many types of lasers—ultraviolet, visible, infrared, continuous wave, pulsed, high-power, low-power—there is an assortment of instrumentation for measuring laser beam profiles. No single laser beam profiler can handle every power level, pulse duration, repetition rate, wavelength, and beam size.

Speckle, speckle pattern, or speckle noise is a granular noise texture that degrades image quality as a consequence of interference among wavefronts in coherent imaging systems, such as radar, synthetic aperture radar (SAR), medical ultrasound and optical coherence tomography. Speckle is not external noise; rather, it is an inherent fluctuation in diffuse reflections, because the scatterers are not identical for each cell, and the coherent illumination wave is highly sensitive to small variations in phase changes.

The study of image formation encompasses the radiometric and geometric processes by which 2D images of 3D objects are formed. In the case of digital images, the image formation process also includes analog to digital conversion and sampling.

A pinhole is a small circular hole, as could be made with the point of a pin. In optics, pinholes with diameter between a few micrometers and a hundred micrometers are used as apertures in optical systems. Pinholes are commonly used to spatially filter a beam, where the small pinhole acts as a low-pass filter for spatial frequencies in the image plane of the beam.

Computational imaging is the process of indirectly forming images from measurements using algorithms that rely on a significant amount of computing. In contrast to traditional imaging, computational imaging systems involve a tight integration of the sensing system and the computation in order to form the images of interest. The ubiquitous availability of fast computing platforms, advances in algorithms, and modern sensing hardware are resulting in imaging systems with significantly enhanced capabilities. Computational imaging systems cover a broad range of applications, including computational microscopy, tomographic imaging, MRI, ultrasound imaging, computational photography, synthetic aperture radar (SAR), and seismic imaging. The integration of the sensing and the computation in computational imaging systems allows access to information that would otherwise be inaccessible. For example: