In statistical classification, two main approaches are called the generative approach and the discriminative approach. These compute classifiers by different approaches, differing in the degree of statistical modelling. Terminology is inconsistent, but three major types can be distinguished, following Jebara (2004):

- A generative model is a statistical model of the joint probability distribution on a given observable variable X and target variable Y; A generative model can be used to "generate" random instances (outcomes) of an observation x.

- A discriminative model is a model of the conditional probability of the target Y, given an observation x. It can be used to "discriminate" the value of the target variable Y, given an observation x.

- Classifiers computed without using a probability model are also referred to loosely as "discriminative".

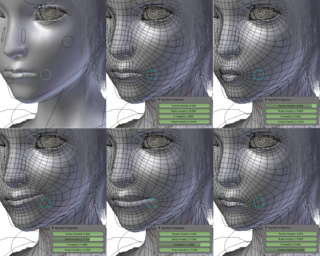

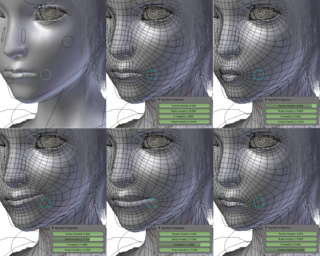

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human-likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer generated imagery have featured synthetic images of human-like characters digitally composited onto the real or other simulated film material. Towards the end of the 2010s deep learning artificial intelligence has been applied to synthesize images and video that look like humans, without need for human assistance, once the training phase has been completed, whereas the old school 7D-route required massive amounts of human work .

An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data. An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction.

WikiArt is a visual art wiki, active since 2010.

Adversarial machine learning is the study of the attacks on machine learning algorithms, and of the defenses against such attacks. A survey from May 2020 exposes the fact that practitioners report a dire need for better protecting machine learning systems in industrial applications.

A generative adversarial network (GAN) is a class of machine learning frameworks and a prominent framework for approaching generative AI. The concept was initially developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss.

Ian J. Goodfellow is an American computer scientist, engineer, and executive, most noted for his work on artificial neural networks and deep learning. He was previously employed as a research scientist at Google Brain and director of machine learning at Apple and has made several important contributions to the field of deep learning including the invention of the generative adversarial network (GAN). Goodfellow co-wrote, as the first author, the textbook Deep Learning (2016) and wrote the chapter on deep learning in the authoritative textbook of the field of artificial intelligence, Artificial Intelligence: A Modern Approach.

Data augmentation is a statistical technique which allows maximum likelihood estimation from incomplete data. Data augmentation has important applications in Bayesian analysis, and the technique is widely used in machine learning to reduce overfitting when training machine learning models, achieved by training models on several slightly-modified copies of existing data.

Artificial intelligence art is visual artwork created through the use of an artificial intelligence (AI) program.

Artificial neural networks (ANNs) are models created using machine learning to perform a number of tasks. Their creation was inspired by neural circuitry. While some of the computational implementations ANNs relate to earlier discoveries in mathematics, the first implementation of ANNs was by psychologist Frank Rosenblatt, who developed the perceptron. Little research was conducted on ANNs in the 1970s and 1980s, with the AAAI calling that period an "AI winter".

Synthetic media is a catch-all term for the artificial production, manipulation, and modification of data and media by automated means, especially through the use of artificial intelligence algorithms, such as for the purpose of misleading people or changing an original meaning. Synthetic media as a field has grown rapidly since the creation of generative adversarial networks, primarily through the rise of deepfakes as well as music synthesis, text generation, human image synthesis, speech synthesis, and more. Though experts use the term "synthetic media," individual methods such as deepfakes and text synthesis are sometimes not referred to as such by the media but instead by their respective terminology Significant attention arose towards the field of synthetic media starting in 2017 when Motherboard reported on the emergence of AI altered pornographic videos to insert the faces of famous actresses. Potential hazards of synthetic media include the spread of misinformation, further loss of trust in institutions such as media and government, the mass automation of creative and journalistic jobs and a retreat into AI-generated fantasy worlds. Synthetic media is an applied form of artificial imagination.

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling. It is part of the families of probabilistic graphical models and variational Bayesian methods.

An energy-based model (EBM) (also called a Canonical Ensemble Learning(CEL) or Learning via Canonical Ensemble (LCE)) is an application of canonical ensemble formulation of statistical physics for learning from data problems. The approach prominently appears in generative models (GMs).

The Fréchet inception distance (FID) is a metric used to assess the quality of images created by a generative model, like a generative adversarial network (GAN). Unlike the earlier inception score (IS), which evaluates only the distribution of generated images, the FID compares the distribution of generated images with the distribution of a set of real images. The FID metric does not completely replace the IS metric. Classifiers that achieve the best (lowest) FID score tend to have greater sample variety while classifiers achieving the best (highest) IS score tend to have better quality within individual images.

Deep learning speech synthesis refers to the application of deep learning models to generate natural-sounding human speech from written text (text-to-speech) or spectrum (vocoder). Deep neural networks (DNN) are trained using a large amount of recorded speech and, in the case of a text-to-speech system, the associated labels and/or input text.

The Wasserstein Generative Adversarial Network (WGAN) is a variant of generative adversarial network (GAN) proposed in 2017 that aims to "improve the stability of learning, get rid of problems like mode collapse, and provide meaningful learning curves useful for debugging and hyperparameter searches".

The Inception Score (IS) is an algorithm used to assess the quality of images created by a generative image model such as a generative adversarial network (GAN). The score is calculated based on the output of a separate, pretrained Inceptionv3 image classification model applied to a sample of (typically around 30,000) images generated by the generative model. The Inception Score is maximized when the following conditions are true:

- The entropy of the distribution of labels predicted by the Inceptionv3 model for the generated images is minimized. In other words, the classification model confidently predicts a single label for each image. Intuitively, this corresponds to the desideratum of generated images being "sharp" or "distinct".

- The predictions of the classification model are evenly distributed across all possible labels. This corresponds to the desideratum that the output of the generative model is "diverse".

A text-to-image model is a machine learning model which takes an input natural language description and produces an image matching that description.

In machine learning, diffusion models, also known as diffusion probabilistic models or score-based generative models, are a class of latent variable generative models. A diffusion model consists of three major components: the forward process, the reverse process, and the sampling procedure. The goal of diffusion models is to learn a diffusion process that generates a probability distribution for a given dataset from which we can then sample new elements. They learn the latent structure of a dataset by modeling the way in which data points diffuse through their latent space.

Audio inpainting is an audio restoration task which deals with the reconstruction of missing or corrupted portions of a digital audio signal. Inpainting techniques are employed when parts of the audio have been lost due to various factors such as transmission errors, data corruption or errors during recording.