Ubiquitous computing is a concept in software engineering, hardware engineering and computer science where computing is made to appear anytime and everywhere. In contrast to desktop computing, ubiquitous computing can occur using any device, in any location, and in any format. A user interacts with the computer, which can exist in many different forms, including laptop computers, tablets, smartphones and terminals in everyday objects such as a refrigerator or a pair of glasses. The underlying technologies that support ubiquitous computing include the Internet, advanced middleware, operating systems, mobile code, sensors, microprocessors, new I/O and user interfaces, computer networks, mobile protocols, location and positioning, and new materials.

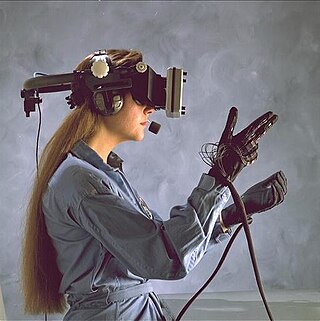

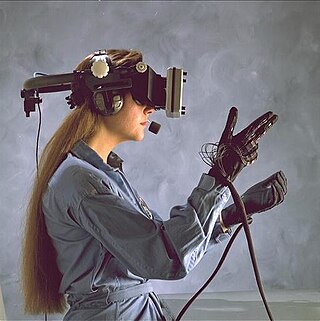

Augmented reality (AR) is an interactive experience that combines the real world and computer-generated content. The content can span multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory. AR can be defined as a system that incorporates three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects. The overlaid sensory information can be constructive (adding to the natural environment) or destructive (masking the natural environment). This experience is seamlessly interwoven with the physical world such that it is perceived as an immersive aspect of the real environment. In this way, augmented reality alters one's ongoing perception of a real-world environment, whereas virtual reality completely replaces the user's real-world environment with a simulated one.
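The 3D registration requirement can be illustrated with an ideal pinhole camera projection: a virtual object anchored at a 3D point must be drawn at the pixel where that point would appear in the camera image. The following is a minimal sketch under stated assumptions, not the method of any particular AR system; the function name `project_point` and the intrinsic parameters `fx`, `fy`, `cx` and `cy` are hypothetical, and lens distortion is ignored.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates (metres) to pixel coordinates.

    Assumes an ideal pinhole model with no lens distortion.
    Returns None when the point lies on or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    # Perspective division followed by a shift to the principal point.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)


# Hypothetical intrinsics for a 640x480 camera (illustrative values only).
pixel = project_point((0.1, -0.05, 2.0), fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(pixel)
```

In practice the camera pose must also be tracked so that the anchor point can be transformed into camera coordinates before projection; that step is omitted here.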

Haptic technology is technology that can create an experience of touch by applying forces, vibrations, or motions to the user. These technologies can be used to create virtual objects in a computer simulation, to control virtual objects, and to enhance remote control of machines and devices (telerobotics). Haptic devices may incorporate tactile sensors that measure forces exerted by the user on the interface. The word haptic, from the Greek: ἁπτικός (haptikos), means "tactile, pertaining to the sense of touch". Simple haptic devices are common in the form of game controllers, joysticks, and steering wheels.
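One simple way a simulation can make a virtual object feel solid is to push back on the device with a spring-like force whenever it penetrates the object's surface. The following is a minimal one-dimensional sketch of such a "virtual wall", assuming a linear spring (Hooke's law); the function name `wall_force` and the stiffness value are illustrative assumptions, not taken from any specific haptic device or library.

```python
def wall_force(position, wall_position=0.0, stiffness=800.0):
    """Return the 1D force (N) pushing the device out of a virtual wall.

    The wall occupies positions below wall_position (metres). Outside the
    wall the force is zero; inside, it grows linearly with penetration
    depth. The stiffness in N/m is an arbitrary illustrative value.
    """
    penetration = wall_position - position
    if penetration <= 0:
        return 0.0
    return stiffness * penetration


# Device in free space: no force. Device 5 mm inside the wall: restoring force.
print(wall_force(0.01))
print(wall_force(-0.005))
```

A real device would evaluate a function like this inside a fast control loop and send the result to its actuators; damping and stability limits are left out of this sketch.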

Computer-mediated reality refers to the ability to add information to, subtract information from, or otherwise manipulate one's perception of reality through the use of a wearable computer or hand-held device such as a smartphone.

Mixed reality (MR) is a term used to describe the merging of a real-world environment and a computer-generated one. Physical and virtual objects may co-exist in mixed reality environments and interact in real time.

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

Daniel John DiLorenzo is a medical device entrepreneur and physician-scientist. He is the inventor of several technologies for the treatment of neurological disease and is the founder of several companies which are developing technologies to treat epilepsy and other medical diseases and improve the quality of life of afflicted patients.

Haptic perception means literally the ability "to grasp something". Perception in this case is achieved through the active exploration of surfaces and objects by a moving subject, as opposed to passive contact by a static subject during tactile perception.

SixthSense is a gesture-based wearable computer system developed at MIT Media Lab by Steve Mann in 1994, 1997, and 1998, and further developed by Pranav Mistry in 2009. Both developed hardware and software for headworn and neck-worn versions of it. It comprises a headworn or neck-worn pendant that contains both a data projector and a camera. Headworn versions built at MIT Media Lab in 1997 combined cameras and illumination systems for interactive photographic art and also included gesture recognition.

Desney Tan is vice president and managing director of Microsoft Health Futures, a cross-organizational incubation group that serves as Microsoft's Health and Life Science "moonshot factory". He also holds an affiliate faculty appointment in the Department of Computer Science and Engineering at the University of Washington, serves on the Board of Directors for ResMed, is senior advisor and chief technologist for Seattle-based life science incubator IntuitiveX, advises multiple startup companies, and is an active startup and real estate investor.

Innovations in International Health (IIH) was an innovation platform that facilitated multidisciplinary research to develop medical technologies for developing world settings. It was based at the Massachusetts Institute of Technology (MIT) from 2008 through 2012. IIH's mission was to accelerate the development of appropriate and affordable health technologies by facilitating collaboration between researchers, users and health practitioners around the world.

Sound and music computing (SMC) is a research field that studies the whole sound and music communication chain from a multidisciplinary point of view. By combining scientific, technological and artistic methodologies it aims at understanding, modeling and generating sound and music through computational approaches.

Adam Ezra Cohen is a Professor of Chemistry, Chemical Biology, and Physics at Harvard University. He has received the Presidential Early Career Award for Scientists and Engineers and been selected by MIT Technology Review to the TR35 list of the world's top innovators under 35.

Ajit Narayanan is the inventor of FreeSpeech, a picture language with a deep grammatical structure. He is also the inventor of Avaz, India's first Augmentative and Alternative Communication device for children with disabilities. He is a TR35 awardee (2011) and an awardee of the National Award for Empowerment of Persons with Disabilities by the President of India (2010).

Visuo-haptic mixed reality (VHMR) is a branch of mixed reality that merges visual and tactile perceptions of both virtual and real objects with a co-located approach. The first known system to overlay augmented haptic perceptions on direct views of the real world is the Virtual Fixtures system developed in 1992 at the US Air Force Research Laboratories. Like any emerging technology, the development of VHMR systems is accompanied by challenges, which in this case concern enhancing multimodal human perception with the user-computer interfaces and interaction devices currently available. VHMR consists of adding to a real scene the ability to see and touch virtual objects. It requires see-through display technology for visually mixing real and virtual objects, and haptic devices to provide haptic stimuli to the user while interacting with the virtual objects. A VHMR setup allows the user to perceive visual and kinesthetic stimuli in a co-located manner, i.e., the user can see and touch virtual objects at the same spatial location. This setup overcomes the limits of the traditional one, i.e., a separate display and haptic device, because the visuo-haptic co-location of the user's hand and a virtual tool improves the sensory integration of multimodal cues and makes the interaction more natural. However, it also poses technological challenges in improving the naturalness of the perceptual experience.

Jeffrey Michael Heer is an American computer scientist best known for his work on information visualization and interactive data analysis. He is a professor of computer science & engineering at the University of Washington, where he directs the UW Interactive Data Lab. He co-founded Trifacta with Joe Hellerstein and Sean Kandel in 2012.

Roopam Sharma is an Indian scientist. He is best known for his work on Manovue, a technology which enables the visually impaired to read printed text. His research interests include wearable computing, mobile application development, human-centered design, computer vision, AI and cognitive science. He was awarded the Gifted Citizen Prize in 2016 and was listed as one of the top 8 Innovators Under 35 by the MIT Technology Review for the year 2016 in India. In 2018, he was honoured as part of Asia's 21 Young Leaders Initiative in Manila.

Chris Harrison is a British-born, American computer scientist and entrepreneur, working in the fields of human–computer interaction, machine learning and sensor-driven interactive systems. He is a professor at Carnegie Mellon University and director of the Future Interfaces Group within the Human–Computer Interaction Institute. He has previously conducted research at AT&T Labs, Microsoft Research, IBM Research and Disney Research. He is also the CTO and co-founder of Qeexo, a machine learning and interaction technology startup.

Javier G. Fernandez is a Spanish physicist and bioengineer. He is associate professor at the Singapore University of Technology and Design. He is known for his work in biomimetic materials and sustainable biomanufacturing, particularly for pioneering chitin's use for general and sustainable manufacturing.

Chandrajith Ashuboda "Ashu" Marasinghe is a Sri Lankan politician, professor and academic. He served as an advisor to Sri Lankan president Ranil Wickremesinghe and is a former member of the Parliament of Sri Lanka. He was a national list member of the Parliament of Sri Lanka proposed by the United National Front following the 2015 Sri Lankan parliamentary election and subsequently served in the 15th Parliament of Sri Lanka as an MP.