In computing, multitasking is the concurrent execution of multiple tasks over a certain period of time. New tasks can interrupt already started ones before they finish, instead of waiting for them to end. As a result, a computer executes segments of multiple tasks in an interleaved manner, while the tasks share common processing resources such as central processing units (CPUs) and main memory. Multitasking automatically interrupts the running program, saving its state and loading the saved state of another program and transferring control to it. This "context switch" may be initiated at fixed time intervals, or the running program may be coded to signal to the supervisory software when it can be interrupted.

Multiple Virtual Storage, more commonly called MVS, is the most commonly used operating system on the System/370, System/390 and IBM Z IBM mainframe computers. IBM developed MVS, along with OS/VS1 and SVS, as a successor to OS/360. It is unrelated to IBM's other mainframe operating system lines, e.g., VSE, VM, TPF.

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

Computerized batch processing is a method of running software programs called jobs in batches automatically. While users are required to submit the jobs, no other interaction by the user is required to process the batch. Batches may automatically be run at scheduled times as well as being run contingent on the availability of computer resources.

In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems. In Modern Operating Systems, Tanenbaum shows that many distinct models of process organization are possible. In many cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently, sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

Computer operating systems (OSes) provide a set of functions needed and used by most application programs on a computer, and the links needed to control and synchronize computer hardware. On the first computers, with no operating system, every program needed the full hardware specification to run correctly and perform standard tasks, and its own drivers for peripheral devices like printers and punched paper card readers. The growing complexity of hardware and application programs eventually made operating systems a necessity for everyday use.

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined.

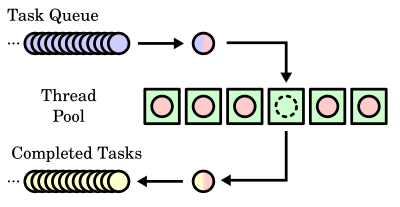

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

In multitasking computer operating systems, a daemon is a computer program that runs as a background process, rather than being under the direct control of an interactive user. Traditionally, the process names of a daemon end with the letter d, for clarification that the process is in fact a daemon, and for differentiation between a daemon and a normal computer program. For example, syslogd is a daemon that implements system logging facility, and sshd is a daemon that serves incoming SSH connections.

In computing, spooling is a specialized form of multi-programming for the purpose of copying data between different devices. In contemporary systems, it is usually used for mediating between a computer application and a slow peripheral, such as a printer. Spooling allows programs to "hand off" work to be done by the peripheral and then proceed to other tasks, or to not begin until input has been transcribed. A dedicated program, the spooler, maintains an orderly sequence of jobs for the peripheral and feeds it data at its own rate. Conversely, for slow input peripherals, such as a card reader, a spooler can maintain a sequence of computational jobs waiting for data, starting each job when all of the relevant input is available; see batch processing. The spool itself refers to the sequence of jobs, or the storage area where they are held. In many cases, the spooler is able to drive devices at their full rated speed with minimal impact on other processing.

The Completely Fair Scheduler (CFS) is a process scheduler that was merged into the 2.6.23 release of the Linux kernel and is the default scheduler of the tasks of the SCHED_NORMAL class. It handles CPU resource allocation for executing processes, and aims to maximize overall CPU utilization while also maximizing interactive performance.

In a non-interactive computer system, particularly IBM mainframes, a job stream, jobstream, or simply job is the sequence of job control language statements (JCL) and data that comprise a single "unit of work for an operating system". The term job traditionally means a one-off piece of work, and is contrasted with a batch, but non-interactive computation has come to be called "batch processing", and thus a unit of batch processing is often called a job, or by the oxymoronic term batch job; see job for details. Performing a job consists of executing one or more programs. Each program execution, called a job step, jobstep, or step, is usually related in some way to the others in the job. Steps in a job are executed sequentially, possibly depending on the results of previous steps, particularly in batch processing.

Parallel Extensions was the development name for a managed concurrency library developed by a collaboration between Microsoft Research and the CLR team at Microsoft. The library was released in version 4.0 of the .NET Framework. It is composed of two parts: Parallel LINQ (PLINQ) and Task Parallel Library (TPL). It also consists of a set of coordination data structures (CDS) – sets of data structures used to synchronize and co-ordinate the execution of concurrent tasks.

The history of IBM mainframe operating systems is significant within the history of mainframe operating systems, because of IBM's long-standing position as the world's largest hardware supplier of mainframe computers. IBM mainframes run operating systems supplied by IBM and by third parties.

OS/360, officially known as IBM System/360 Operating System, is a discontinued batch processing operating system developed by IBM for their then-new System/360 mainframe computer, announced in 1964; it was influenced by the earlier IBSYS/IBJOB and Input/Output Control System (IOCS) packages for the IBM 7090/7094 and even more so by the PR155 Operating System for the IBM 1410/7010 processors. It was one of the earliest operating systems to require the computer hardware to include at least one direct access storage device.

A process is a program in execution, and an integral part of any modern-day operating system (OS). The OS must allocate resources to processes, enable processes to share and exchange information, protect the resources of each process from other processes and enable synchronization among processes. To meet these requirements, the OS must maintain a data structure for each process, which describes the state and resource ownership of that process, and which enables the OS to exert control over each process.

OS 2200 is the operating system for the Unisys ClearPath Dorado family of mainframe systems. The operating system kernel of OS 2200 is a lineal descendant of Exec 8 for the UNIVAC 1108. Documentation and other information on current and past Unisys systems can be found on the Unisys public support website.

The Slurm Workload Manager, formerly known as Simple Linux Utility for Resource Management (SLURM), or simply Slurm, is a free and open-source job scheduler for Linux and Unix-like kernels, used by many of the world's supercomputers and computer clusters.

In computing, a job is a unit of work or unit of execution. A component of a job is called a task or a step. As a unit of execution, a job may be concretely identified with a single process, which may in turn have subprocesses which perform the tasks or steps that comprise the work of the job; or with a process group; or with an abstract reference to a process or process group, as in Unix job control.