In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). Diverse approaches, such as generative grammar and functional grammar, offer unique perspectives on syntax, reflecting its complexity and centrality to understanding human language.

In grammar, a phrase, called an expression in some contexts, is a group of words or a single word acting as a grammatical unit. For instance, the English expression "the very happy squirrel" is a noun phrase which contains the adjective phrase "very happy". Phrases can consist of a single word or a complete sentence. In theoretical linguistics, phrases are often analyzed as units of syntactic structure such as constituents. There is a difference between the common use of the term phrase and its technical use in linguistics. In common usage, a phrase is usually a group of words with some special idiomatic meaning or other significance, such as "all rights reserved", "economical with the truth", "kick the bucket", and the like. It may be a euphemism, a saying or proverb, a fixed expression, a figure of speech, etc. In linguistics, these are known as phrasemes.

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.
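The idea of a rewrite rule can be illustrated with a minimal sketch. The rule set below is a hypothetical toy fragment chosen for illustration (the names `RULES` and `generate` are not from any library), and it is not meant as a grammar of English. Each category is repeatedly rewritten until only words remain.

```python
import random

# Toy phrase structure rules (an assumed fragment for illustration only).
# Each category on the left may be rewritten as any sequence on the right.
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["Det", "Adj", "N"]],
    "VP": [["V", "NP"], ["V"]],
    "Det": [["the"], ["a"]],
    "Adj": [["happy"]],
    "N": [["squirrel"], ["lamp"]],
    "V": [["sees"], ["sleeps"]],
}

def generate(symbol, rng):
    """Rewrite `symbol` until only words (symbols without a rule) remain."""
    if symbol not in RULES:
        return [symbol]  # a word: nothing left to rewrite
    expansion = rng.choice(RULES[symbol])
    words = []
    for child in expansion:
        words.extend(generate(child, rng))
    return words

rng = random.Random(0)
sentence = generate("S", rng)
print(" ".join(sentence))
```

The phrasal categories (S, NP, VP) rewrite to other categories, while the lexical categories (Det, Adj, N, V) rewrite to words, mirroring the distinction drawn above.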

A noun phrase – or NP or nominal (phrase) – is a phrase that usually has a noun or pronoun as its head, and has the same grammatical functions as a noun. Noun phrases are very common cross-linguistically, and they may be the most frequently occurring phrase type.

In language, a clause is a constituent or phrase that comprises a semantic predicand and a semantic predicate. A typical clause consists of a subject and a syntactic predicate, the latter typically a verb phrase composed of a verb with or without any objects and other modifiers. However, the subject is sometimes unexpressed if it is easily deducible from the context, especially in null-subject languages but also in other languages, including instances of the imperative mood in English.

A parse tree or parsing tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
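As a rough illustration, an ordered, rooted tree can be stored as nested tuples. The bracketing below is one assumed analysis of a short sentence, and the helper names `leaves` and `bracketed` are hypothetical. Reading the leaves from left to right recovers the original string.

```python
# A parse tree as nested tuples: (label, child, child, ...); leaves are strings.
# The analysis is one illustrative bracketing, not the only possible one.
TREE = (
    "S",
    ("NP",
     ("Det", "the"),
     ("AdjP", ("Adv", "very"), ("Adj", "happy")),
     ("N", "squirrel")),
    ("VP", ("V", "sleeps")),
)

def leaves(node):
    """Return the words of the tree in left-to-right order."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words

def bracketed(node):
    """Render the tree in labelled bracket notation."""
    if isinstance(node, str):
        return node
    return "[" + node[0] + " " + " ".join(bracketed(c) for c in node[1:]) + "]"

print(" ".join(leaves(TREE)))
print(bracketed(TREE))
```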

In linguistics, a verb phrase (VP) is a syntactic unit composed of a verb and its arguments except the subject of an independent clause or coordinate clause. Thus, in the sentence A fat man quickly put the money into the box, the words quickly put the money into the box constitute a verb phrase; it consists of the verb put and its arguments, but not the subject a fat man. A verb phrase is similar to what is considered a predicate in traditional grammars.

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb in terms of the directed links, which are called dependencies. Dependency grammar differs from phrase structure grammar in that, while it can identify phrases, it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constituent, and they are thus well suited for the analysis of languages with free word order, such as Czech or Warlpiri.
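A dependency structure can be sketched as a list that records, for each word, the position of its head. The analysis below is an assumption made for illustration (for example, the preposition is taken as the head of its noun, which not every dependency framework does), and the function names are hypothetical.

```python
WORDS = ["A", "fat", "man", "quickly", "put", "the", "money", "into", "the", "box"]
# HEADS[i] is the index of the head of WORDS[i]; None marks the root.
# Assumed analysis: the finite verb "put" is the root of the clause.
HEADS = [2, 2, 4, 4, None, 6, 4, 4, 9, 7]

def root(heads):
    """Return the index of the single word that has no head."""
    (only,) = [i for i, h in enumerate(heads) if h is None]  # fails unless exactly one root
    return only

def dependents(heads, i):
    """Return the indices of the words directly linked to word i."""
    return [d for d, h in enumerate(heads) if h == i]

def path_to_root(heads, i):
    """Follow the directed links from word i up to the root."""
    path = [i]
    while heads[path[-1]] is not None:
        path.append(heads[path[-1]])
    return path

print(WORDS[root(HEADS)])
print([WORDS[d] for d in dependents(HEADS, 4)])
print([WORDS[k] for k in path_to_root(HEADS, 8)])
```

In this sketch the subject and the remaining dependents attach to the verb as sisters, with no intermediate verb phrase node, which is one way to see why such structures are flatter.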

In linguistics, wh-movement is the formation of syntactic dependencies involving interrogative words. An example in English is the dependency formed between what and the object position of doing in "What are you doing?". Interrogative forms are sometimes known within English linguistics as wh-words, such as what, when, where, who, and why, but also include other interrogative words, such as how. This dependency has been used as a diagnostic tool in syntactic studies as it can be observed to interact with other grammatical constraints.

In linguistics, pied-piping is a phenomenon of syntax whereby a given focused expression brings along an encompassing phrase with it when it is moved.

The term predicate is used in two ways in linguistics and its subfields. The first defines a predicate as everything in a standard declarative sentence except the subject, and the other defines it as only the main content verb or associated predicative expression of a clause. Thus, by the first definition, the predicate of the sentence Frank likes cake is likes cake, while by the second definition, it is only the content verb likes, and Frank and cake are the arguments of this predicate. The conflict between these two definitions can lead to confusion.

In linguistics, an argument is an expression that helps complete the meaning of a predicate, the latter referring in this context to a main verb and its auxiliaries. In this regard, the complement is a closely related concept. Most predicates take one, two, or three arguments. A predicate and its arguments form a predicate-argument structure. The discussion of predicates and arguments is associated most with (content) verbs and noun phrases (NPs), although other syntactic categories can also be construed as predicates and as arguments. Arguments must be distinguished from adjuncts. While a predicate needs its arguments to complete its meaning, the adjuncts that appear with a predicate are optional; they are not necessary to complete the meaning of the predicate. Most theories of syntax and semantics acknowledge arguments and adjuncts, although the terminology varies, and the distinction is generally believed to exist in all languages. Dependency grammars sometimes call arguments actants, following Lucien Tesnière (1959).
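The contrast between arguments and adjuncts can be sketched as a small data structure. The valency figures and the names `VALENCY`, `PredArg`, and `is_saturated` are hypothetical choices for illustration. The predicate counts as complete when it has the required number of arguments, regardless of how many adjuncts appear.

```python
from dataclasses import dataclass, field

# Hypothetical valency lexicon: how many arguments each predicate requires.
VALENCY = {"sleep": 1, "like": 2, "put": 3}

@dataclass
class PredArg:
    predicate: str
    arguments: list
    adjuncts: list = field(default_factory=list)  # optional material

    def is_saturated(self):
        """True when the number of arguments matches the predicate's valency."""
        return len(self.arguments) == VALENCY[self.predicate]

with_adjunct = PredArg("like", ["Frank", "cake"], adjuncts=["on Sundays"])
without_adjunct = PredArg("like", ["Frank", "cake"])
missing_argument = PredArg("like", ["Frank"], adjuncts=["on Sundays"])

print(with_adjunct.is_saturated(), without_adjunct.is_saturated(),
      missing_argument.is_saturated())
```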

Antecedent-contained deletion (ACD), also called antecedent-contained ellipsis, is a phenomenon whereby an elided verb phrase appears to be contained within its own antecedent. For instance, in the sentence "I read every book that you did", the verb phrase in the main clause appears to license ellipsis inside the relative clause which modifies its object. ACD is a classic puzzle for theories of the syntax-semantics interface, since it threatens to introduce an infinite regress. It is commonly taken as motivation for syntactic transformations such as quantifier raising, though some approaches explain it using semantic composition rules or by adopting more flexible notions of what it means to be a syntactic unit.

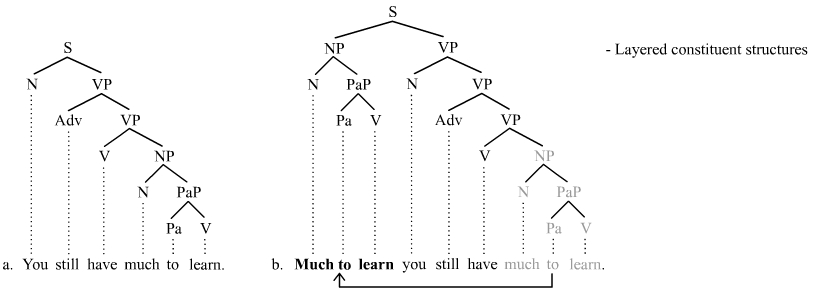

Syntactic movement is the means by which some theories of syntax address discontinuities. Movement was first postulated by structuralist linguists who expressed it in terms of discontinuous constituents or displacement. Some constituents appear to have been displaced from the position in which they receive important features of interpretation. The concept of movement is controversial and is associated with so-called transformational or derivational theories of syntax. Representational theories, in contrast, reject the notion of movement and often instead address discontinuities with other mechanisms including graph reentrancies, feature passing, and type shifters.

In linguistics, Immediate Constituent Analysis (ICA) is a syntactic theory which focuses on the hierarchical structure of sentences by isolating and identifying the constituents. While the idea of breaking down sentences into smaller components can be traced back to early psychological and linguistic theories, ICA as a formal method was developed in the early 20th century. It was influenced by Wilhelm Wundt's psychological theories of sentence structure but was later refined and formalized within the framework of structural linguistics by Leonard Bloomfield. The method gained traction in the distributionalist tradition through the work of Zellig Harris and Charles F. Hockett, who expanded and applied it to sentence analysis. Additionally, ICA was further explored within the context of glossematics by Knud Togeby. These contributions helped ICA become a central tool in syntactic analysis, focusing on the hierarchical relationships between sentence constituents.

In linguistics, a catena is a unit of syntax and morphology, closely associated with dependency grammars. It is a more flexible and inclusive unit than the constituent and its proponents therefore consider it to be better suited than the constituent to serve as the fundamental unit of syntactic and morphosyntactic analysis.
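The greater inclusiveness of the catena can be sketched in code, assuming the usual definition: a catena is a word or combination of words that is connected by dependencies, whereas a constituent is a word together with everything it dominates. The dependency analysis and the function names below are assumptions made for illustration.

```python
WORDS = ["the", "squirrel", "likes", "cake"]
# HEADS[i] is the index of the head of WORDS[i]; None marks the root (assumed analysis).
HEADS = [1, 2, None, 2]

def is_catena(heads, nodes):
    """A set of words is a catena if it forms one connected piece of the tree,
    i.e. exactly one of its words has its head outside the set."""
    nodes = set(nodes)
    tops = [n for n in nodes if heads[n] not in nodes]
    return len(tops) == 1

def is_constituent(heads, nodes):
    """A constituent is a catena that also contains every dependent of its words."""
    nodes = set(nodes)
    closed = all(d in nodes for d, h in enumerate(heads) if h in nodes)
    return is_catena(heads, nodes) and closed

print(is_catena(HEADS, {1, 2}), is_constituent(HEADS, {1, 2}))  # "squirrel likes"
print(is_catena(HEADS, {0, 1}), is_constituent(HEADS, {0, 1}))  # "the squirrel"
print(is_catena(HEADS, {0, 2}))                                 # "the ... likes"
```

Under these assumptions every constituent is a catena, but not every catena is a constituent.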

In syntax, shifting occurs when two or more constituents appearing on the same side of their common head exchange positions, in a sense, to yield a non-canonical order. The most widely acknowledged type of shifting is heavy NP shift, but shifting involving a heavy NP is just one manifestation of the shifting mechanism. Shifting occurs in most if not all European languages, and it may in fact be possible in all natural languages including sign languages. Shifting is not inversion, and inversion is not shifting, but the two mechanisms are similar insofar as they are both present in languages like English that have relatively strict word order. The theoretical analysis of shifting varies in part depending on the theory of sentence structure that one adopts. If one assumes relatively flat structures, shifting does not result in a discontinuity. Shifting is often motivated by the relative weight of the constituents involved. The weight of a constituent is determined by a number of factors: e.g., number of words, contrastive focus, and semantic content.
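The role of weight can be sketched very roughly by treating weight as word count alone, which is a deliberate simplification that ignores contrastive focus and semantic content. The function names are hypothetical, and real shifting is a tendency rather than a strict sorting rule.

```python
def weight(constituent):
    """Crude weight: the number of words (focus and semantic content are ignored)."""
    return len(constituent.split())

def shift_by_weight(constituents):
    """Order sister constituents light before heavy; ties keep their original order."""
    return sorted(constituents, key=weight)  # Python's sort is stable

# Canonical order after the verb "gave": object first, then the prepositional phrase.
canonical = ["the book that I bought last week in Paris", "to Mary"]
shifted = shift_by_weight(canonical)
print("I gave " + " ".join(shifted))
```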

In linguistics, a discontinuity occurs when a given word or phrase is separated from another word or phrase that it modifies in such a manner that a direct connection cannot be established between the two without incurring crossing lines in the tree structure. The terminology that is employed to denote discontinuities varies depending on the theory of syntax at hand. The terms discontinuous constituent, displacement, long distance dependency, unbounded dependency, and projectivity violation are largely synonymous with the term discontinuity. There are various types of discontinuities, the most prominent and widely studied of these being topicalization, wh-fronting, scrambling, and extraposition.
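In dependency terms, the crossing-lines criterion can be sketched as a projectivity check: a link between a head and a dependent is flagged when some word standing between them is not dominated by that head. The analyses of the two example sentences are assumptions made for illustration, as are the function names.

```python
def dominates(heads, ancestor, node):
    """True if `ancestor` is `node` itself or lies on its path to the root."""
    while node is not None:
        if node == ancestor:
            return True
        node = heads[node]
    return False

def discontinuities(heads):
    """Return (head, dependent) links that span a word not dominated by the head."""
    flagged = []
    for dep, head in enumerate(heads):
        if head is None:
            continue  # the root has no incoming link
        lo, hi = sorted((dep, head))
        if any(not dominates(heads, head, k) for k in range(lo + 1, hi)):
            flagged.append((head, dep))
    return flagged

# "What are you doing": "what" depends on "doing", the finite verb "are" is the root.
WH_FRONTED = [3, None, 1, 1]
# "You are doing what": the same links with "what" in its object position.
IN_SITU = [1, None, 1, 2]

print(discontinuities(WH_FRONTED))
print(discontinuities(IN_SITU))
```

In the fronted order the link between "doing" and "what" spans the root "are", which "doing" does not dominate, so that link is reported; the in-situ order yields no such link.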

Extraposition is a mechanism of syntax that alters word order in such a manner that a relatively "heavy" constituent appears to the right of its canonical position. Extraposing a constituent results in a discontinuity and in this regard, it is unlike shifting, which does not generate a discontinuity. The extraposed constituent is separated from its governor by one or more words that dominate its governor. Two types of extraposition are acknowledged in theoretical syntax: standard cases where extraposition is optional and it-extraposition where extraposition is obligatory. Extraposition is motivated in part by a desire to reduce center embedding by increasing right-branching and thus easing processing, center-embedded structures being more difficult to process. Extraposition occurs frequently in English and related languages.

Subject–verb inversion in English is a type of inversion marked by a predicate verb that precedes a corresponding subject, e.g., "Beside the bed stood a lamp". Subject–verb inversion is distinct from subject–auxiliary inversion because the verb involved is not an auxiliary verb.