Related Research Articles

In mathematics, the additive Schwarz method, named after Hermann Schwarz, solves a boundary value problem for a partial differential equation approximately by splitting it into boundary value problems on smaller domains and adding the results.
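As a minimal sketch of "solving on smaller domains and adding the results", the following applies an additive Schwarz preconditioner inside a conjugate gradient iteration. The model problem (1D Poisson matrix `tridiag(-1, 2, -1)`), the two overlapping index ranges, and all function names are illustrative assumptions, not taken from any particular library.

```python
def solve_tridiag(rhs):
    """Thomas algorithm for T x = rhs with T = tridiag(-1, 2, -1)."""
    n = len(rhs)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = -0.5, rhs[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c[i - 1]
        c[i] = -1.0 / denom
        d[i] = (rhs[i] + d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def apply_A(u):
    """Multiply by the global matrix A = tridiag(-1, 2, -1)."""
    n = len(u)
    return [2.0 * u[i]
            - (u[i - 1] if i > 0 else 0.0)
            - (u[i + 1] if i < n - 1 else 0.0) for i in range(n)]

def additive_schwarz(r, subdomains):
    """z = sum_i R_i^T A_i^{-1} R_i r over half-open index ranges (lo, hi)."""
    z = [0.0] * len(r)
    for lo, hi in subdomains:
        local = solve_tridiag(r[lo:hi])   # independent subdomain solve
        for k, v in enumerate(local):
            z[lo + k] += v                # add the local results together
    return z

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def pcg(b, subdomains, rtol=1e-10, maxit=100):
    """Conjugate gradients preconditioned by additive Schwarz."""
    x = [0.0] * len(b)
    r = list(b)
    z = additive_schwarz(r, subdomains)
    p, rz = list(z), dot(r, z)
    stop = rtol * dot(b, b) ** 0.5
    for _ in range(maxit):
        if dot(r, r) ** 0.5 <= stop:
            break
        Ap = apply_A(p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        z = additive_schwarz(r, subdomains)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x

# Example: -u'' = 1 on (0, 1), u(0) = u(1) = 0, with 15 interior points.
n = 15
h = 1.0 / (n + 1)
u = pcg([h * h] * n, [(0, 9), (6, 15)])
```

Each subdomain solve is independent of the others, which is what makes the method attractive for parallel computing; this sketch uses no coarse problem, so it is only meant for a small number of subdomains.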

Numerical methods for partial differential equations is the branch of numerical analysis that studies the numerical solution of partial differential equations (PDEs).

In numerical analysis, a multigrid (MG) method is an algorithm for solving differential equations using a hierarchy of discretizations. Multigrid methods are an example of a class of techniques called multiresolution methods, which are very useful in problems exhibiting multiple scales of behavior. For example, many basic relaxation methods exhibit different rates of convergence for short- and long-wavelength components, suggesting that these different scales be treated differently, as in a Fourier analysis approach to multigrid. MG methods can be used as solvers as well as preconditioners.
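The idea of treating short- and long-wavelength error components on different grids can be sketched with a recursive V-cycle for the 1D Poisson problem. This is an illustrative sketch under stated assumptions: weighted Jacobi smoothing, full-weighting restriction, linear interpolation, and a grid with `2**k - 1` interior points; the function names are hypothetical.

```python
def residual(u, f, h):
    """Return f - A u for A = (1/h^2) tridiag(-1, 2, -1), zero Dirichlet ends."""
    n = len(u)
    r = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r.append(f[i] - (2.0 * u[i] - left - right) / (h * h))
    return r

def jacobi(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi relaxation: damps short-wavelength error quickly."""
    for _ in range(sweeps):
        r = residual(u, f, h)
        u = [ui + omega * (h * h / 2.0) * ri for ui, ri in zip(u, r)]
    return u

def v_cycle(u, f, h):
    """One V-cycle; len(u) is assumed to be 2**k - 1."""
    n = len(u)
    if n == 1:
        return [f[0] * h * h / 2.0]          # coarsest grid: solve exactly
    u = jacobi(u, f, h, 2)                   # pre-smoothing
    r = residual(u, f, h)
    nc = (n - 1) // 2
    # Full-weighting restriction of the residual to the coarse grid.
    rc = [0.25 * r[2 * j] + 0.5 * r[2 * j + 1] + 0.25 * r[2 * j + 2]
          for j in range(nc)]
    ec = v_cycle([0.0] * nc, rc, 2.0 * h)    # coarse-grid error equation
    # Linear interpolation of the coarse correction back to the fine grid.
    e = [0.0] * n
    for j in range(nc):
        e[2 * j + 1] += ec[j]
        e[2 * j] += 0.5 * ec[j]
        e[2 * j + 2] += 0.5 * ec[j]
    u = [ui + ei for ui, ei in zip(u, e)]
    return jacobi(u, f, h, 2)                # post-smoothing

def multigrid_solve(f, h, cycles=15):
    u = [0.0] * len(f)
    for _ in range(cycles):
        u = v_cycle(u, f, h)
    return u

# Example: -u'' = 1 on (0, 1) with 31 interior points.
n = 31
h = 1.0 / (n + 1)
u = multigrid_solve([1.0] * n, h)
```

The smoother handles the oscillatory error on each level, while the recursion to coarser grids handles the smooth error that relaxation alone reduces only slowly.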

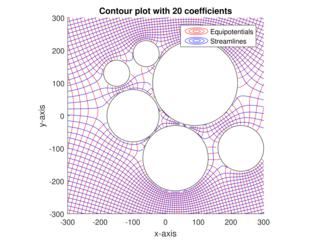

The analytic element method (AEM) is a numerical method used for the solution of partial differential equations. It was initially developed by O.D.L. Strack at the University of Minnesota. It is similar in nature to the boundary element method (BEM), as it does not rely upon the discretization of volumes or areas in the modeled system; only internal and external boundaries are discretized. One of the primary distinctions between AEM and BEMs is that the boundary integrals are calculated analytically. Although originally developed to model groundwater flow, AEM has subsequently been applied to other fields of study including studies of heat flow and conduction, periodic waves, and deformation by force.

In mathematics, in particular numerical analysis, the FETI method is an iterative substructuring method for solving systems of linear equations from the finite element method for the solution of elliptic partial differential equations, in particular in computational mechanics. In each iteration, FETI requires the solution of a Neumann problem in each substructure and the solution of a coarse problem. The simplest version of FETI with no preconditioner in the substructure is scalable with the number of substructures, but the condition number grows polynomially with the number of elements per substructure. FETI with a preconditioner consisting of the solution of a Dirichlet problem in each substructure is scalable with the number of substructures, and its condition number grows only polylogarithmically with the number of elements per substructure. The coarse space in FETI consists of the nullspace on each substructure.

Hendrik "Henk" Albertus van der Vorst is a Dutch mathematician and Emeritus Professor of Numerical Analysis at Utrecht University. According to the Institute for Scientific Information (ISI), his paper on the BiCGSTAB method was the most cited paper in the field of mathematics in the 1990s. He has been a member of the Royal Netherlands Academy of Arts and Sciences (KNAW) since 2002 and is a member of the Netherlands Academy of Technology and Innovation. In 2006 he was awarded a knighthood of the Order of the Netherlands Lion. Henk van der Vorst is a Fellow of the Society for Industrial and Applied Mathematics (SIAM).

In numerical analysis, BDDC (balancing domain decomposition by constraints) is a domain decomposition method for solving large symmetric, positive definite systems of linear equations that arise from the finite element method. BDDC is used as a preconditioner to the conjugate gradient method. A specific version of BDDC is characterized by the choice of coarse degrees of freedom, which can be values at the corners of the subdomains, or averages over the edges or the faces of the interface between the subdomains. One application of the BDDC preconditioner then combines the solution of local problems on each subdomain with the solution of a global coarse problem with the coarse degrees of freedom as the unknowns. The local problems on different subdomains are completely independent of each other, so the method is suitable for parallel computing. With a proper choice of the coarse degrees of freedom (corners in 2D, corners plus edges or corners plus faces in 3D) and with regular subdomain shapes, the condition number of the method is bounded when increasing the number of subdomains, and it grows only very slowly with the number of elements per subdomain. Thus the number of iterations is bounded in the same way, and the method scales well with the problem size and the number of subdomains.

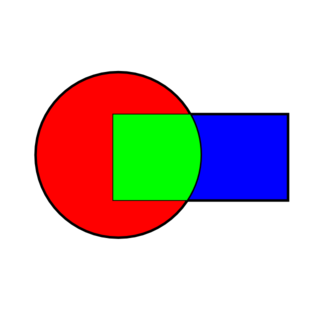

In mathematics, numerical analysis, and numerical partial differential equations, domain decomposition methods solve a boundary value problem by splitting it into smaller boundary value problems on subdomains and iterating to coordinate the solution between adjacent subdomains. A coarse problem with one or few unknowns per subdomain is used to further coordinate the solution between the subdomains globally. The problems on the subdomains are independent, which makes domain decomposition methods suitable for parallel computing. Domain decomposition methods are typically used as preconditioners for Krylov space iterative methods, such as the conjugate gradient method, GMRES, and LOBPCG.

The FETI-DP method is a domain decomposition method that enforces equality of the solution at subdomain interfaces by Lagrange multipliers except at subdomain corners, which remain primal variables. The first mathematical analysis of the method was provided by Mandel and Tezaur. The method was further improved by enforcing the equality of averages across the edges or faces on subdomain interfaces, which is important for parallel scalability for 3D problems. FETI-DP is a simplification and a better-performing version of FETI. The eigenvalues of FETI-DP are the same as those of BDDC, except for the eigenvalue equal to one, and so the performance of FETI-DP and BDDC is essentially the same.

In mathematics, the Schwarz alternating method or alternating process is an iterative method introduced in 1869–1870 by Hermann Schwarz in the theory of conformal mapping. Given two overlapping regions in the complex plane in each of which the Dirichlet problem could be solved, Schwarz described an iterative method for solving the Dirichlet problem in their union, provided their intersection was suitably well behaved. This was one of several constructive techniques of conformal mapping developed by Schwarz as a contribution to the problem of uniformization, posed by Riemann in the 1850s and first resolved rigorously by Koebe and Poincaré in 1907. It furnished a scheme for uniformizing the union of two regions knowing how to uniformize each of them separately, provided their intersection was topologically a disk or an annulus. From 1870 onwards Carl Neumann also contributed to this theory.
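The alternating iteration can be illustrated on a one-dimensional analogue. The sketch below assumes the Laplace equation u'' = 0 on (0, 1) with u(0) = 0 and u(1) = 1, discretized on a uniform grid and covered by two overlapping index ranges; in this special case each subdomain Dirichlet solve reduces to linear interpolation between its two end values. The grid size, overlap, and function name are illustrative choices.

```python
def alternating_schwarz(n=20, ia=8, ib=12, sweeps=30):
    """Schwarz alternating method for u'' = 0, u(0) = 0, u(1) = 1.

    Grid points are x_i = i / n. Subdomain 1 covers indices 0..ib and
    subdomain 2 covers indices ia..n, with ia < ib so that they overlap.
    """
    u = [0.0] * (n + 1)
    u[n] = 1.0                                  # boundary condition at x = 1
    for _ in range(sweeps):
        # Dirichlet solve on subdomain 1 using the current value at x_ib.
        for i in range(1, ib):
            u[i] = u[0] + (u[ib] - u[0]) * i / ib
        # Dirichlet solve on subdomain 2 using the updated value at x_ia.
        for i in range(ia + 1, n):
            u[i] = u[ia] + (u[n] - u[ia]) * (i - ia) / (n - ia)
    return u

u = alternating_schwarz()
```

The iterates approach the exact solution u(x) = x; the wider the overlap between the two subdomains, the faster the error at the artificial boundaries shrinks from one sweep to the next.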

In mathematics, Neumann–Neumann methods are domain decomposition preconditioners, so named because they solve a Neumann problem on each subdomain on both sides of the interface between the subdomains. As with all domain decomposition methods, Neumann–Neumann methods require the solution of a coarse problem to provide global communication, so that the number of iterations does not grow with the number of subdomains. The balancing domain decomposition is a Neumann–Neumann method with a special kind of coarse problem.

In mathematics, the Neumann–Dirichlet method is a domain decomposition preconditioner which involves solving a Neumann boundary value problem on one subdomain and a Dirichlet boundary value problem on another, adjacent across the interface between the subdomains. On a problem with many subdomains organized in a rectangular mesh, the subdomains are assigned Neumann or Dirichlet problems in a checkerboard fashion.

In numerical analysis, mortar methods are discretization methods for partial differential equations, which use separate finite element discretizations on nonoverlapping subdomains. The meshes on the subdomains do not match on the interface, and the equality of the solution is enforced by Lagrange multipliers, judiciously chosen to preserve the accuracy of the solution. Mortar discretizations lend themselves naturally to solution by iterative domain decomposition methods such as FETI and balancing domain decomposition. In engineering practice with the finite element method, continuity of solutions between non-matching subdomains is implemented by multiple-point constraints.

In numerical analysis, the Schur complement method, named after Issai Schur, is the basic and the earliest version of the non-overlapping domain decomposition method, also called iterative substructuring. A finite element problem is split into non-overlapping subdomains, and the unknowns in the interiors of the subdomains are eliminated. The remaining Schur complement system on the unknowns associated with subdomain interfaces is solved by the conjugate gradient method.
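The elimination of interior unknowns can be sketched on a small model problem. The example assumes the matrix `tridiag(-1, 2, -1)` of size 2m + 1, split into two subdomain interiors of m unknowns each and a single interface unknown between them. With only one interface unknown the Schur complement is a scalar and is solved directly here; in a realistic setting the interface system would be solved by the conjugate gradient method instead. All names are illustrative.

```python
def solve_tridiag(rhs):
    """Thomas algorithm for T x = rhs with T = tridiag(-1, 2, -1)."""
    n = len(rhs)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = -0.5, rhs[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c[i - 1]
        c[i] = -1.0 / denom
        d[i] = (rhs[i] + d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def schur_solve(f, m):
    """Solve tridiag(-1, 2, -1) u = f (size 2m + 1) by interior elimination."""
    assert m >= 1 and len(f) == 2 * m + 1
    f_left, f_gamma, f_right = f[:m], f[m], f[m + 1:]
    e_last = [0.0] * (m - 1) + [1.0]    # coupling of left interior to interface
    e_first = [1.0] + [0.0] * (m - 1)   # coupling of right interior to interface
    # Schur complement S = A_GG - A_GI A_II^{-1} A_IG (a scalar here).
    s = 2.0 - solve_tridiag(e_last)[-1] - solve_tridiag(e_first)[0]
    # Reduced right-hand side g = f_G - A_GI A_II^{-1} f_I.
    g = f_gamma + solve_tridiag(f_left)[-1] + solve_tridiag(f_right)[0]
    u_gamma = g / s                     # interface solve
    # Back-substitution: independent interior solves with known interface value.
    rhs_left = list(f_left)
    rhs_left[-1] += u_gamma
    rhs_right = list(f_right)
    rhs_right[0] += u_gamma
    return solve_tridiag(rhs_left) + [u_gamma] + solve_tridiag(rhs_right)

f = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
u = schur_solve(f, 3)
```

The result should agree with a direct solve of the full system, for example `solve_tridiag(f)`, up to rounding error.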

In numerical analysis, a coarse problem is an auxiliary system of equations used in an iterative method for the solution of a given larger system of equations. A coarse problem is basically a version of the same problem at a lower resolution, retaining its essential characteristics, but with fewer variables. The purpose of the coarse problem is to propagate information throughout the whole problem globally.

Andrew Knyazev is an American mathematician. He graduated from the Faculty of Computational Mathematics and Cybernetics of Moscow State University under the supervision of Evgenii Georgievich D'yakonov in 1981 and obtained his PhD in Numerical Mathematics at the Russian Academy of Sciences under the supervision of Vyacheslav Ivanovich Lebedev in 1985. He worked at the Kurchatov Institute from 1981 to 1983, and then until 1992 at the Marchuk Institute of Numerical Mathematics of the Russian Academy of Sciences, headed by Gury Marchuk.

João Arménio Correia Martins was born on November 11, 1951, in the southern town of Olhão in Portugal. He attended high school at the Liceu Nacional de Faro, which he completed in 1969. Afterwards João Martins moved to Lisbon, where he was a graduate student of Civil Engineering at Instituto Superior Técnico (IST) until 1976. He was a research assistant and assistant instructor at IST until 1981. Subsequently, he entered the graduate school in the College of Engineering, Department of Aerospace Engineering and Engineering Mechanics of The University of Texas at Austin, USA. There he obtained an MSc in 1983 with a thesis titled A Numerical Analysis of a Class of Problems in Elastodynamics with Friction Effects and a PhD in 1986 with a thesis titled Dynamic Frictional Contact Problems Involving Metallic Bodies, both supervised by Prof. John Tinsley Oden. He returned to Portugal in 1986 and became assistant professor at IST. In 1989 he became associate professor and in 1996 he earned the academic degree of “agregado” from Universidade Técnica de Lisboa. Later, in 2005, he became full professor in the Department of Civil Engineering and Architecture of IST.

High-order compact finite difference schemes are used for solving third-order differential equations created during the study of obstacle boundary value problems. They have been shown to be highly accurate and efficient. They are constructed by modifying the second-order scheme that was developed by Noor and Al-Said in 2002. The convergence rate of the high-order compact scheme is third order; the second-order scheme is fourth order.

MoFEM is an open-source finite element analysis code developed and maintained at the University of Glasgow. MoFEM is tailored for the solution of multi-physics problems with arbitrary levels of approximation and different levels of mesh refinement, and is optimised for high-performance computing. MoFEM is a blend of the Boost MultiIndex containers, MOAB and PETSc. MoFEM is developed in C++ and is released under the GNU Lesser General Public License (LGPL).

References

- J. Mandel, Balancing domain decomposition, Comm. Numer. Methods Engrg., 9 (1993), pp. 233–241. doi:10.1002/cnm.1640090307

- L. C. Cowsar, J. Mandel, and M. F. Wheeler, Balancing domain decomposition for mixed finite elements, Math. Comp., 64 (1995), pp. 989–1015. doi:10.1090/S0025-5718-1995-1297465-9

- P. Le Tallec, J. Mandel, and M. Vidrascu, A Neumann–Neumann domain decomposition algorithm for solving plate and shell problems, SIAM Journal on Numerical Analysis, 35 (1998), pp. 836–867. doi:10.1137/S0036142995291019

- J. Mandel and C. R. Dohrmann, Convergence of a balancing domain decomposition by constraints and energy minimization, Numer. Linear Algebra Appl., 10 (2003), pp. 639–659. doi:10.1002/nla.341

- M. Bhardwaj, D. Day, C. Farhat, M. Lesoinne, K. Pierson, and D. Rixen, Application of the FETI method to ASCI problems – scalability results on 1000 processors and discussion of highly heterogeneous problems, International Journal for Numerical Methods in Engineering, 47 (2000), pp. 513–535. doi:10.1002/(SICI)1097-0207(20000110/30)47:1/3<513::AID-NME782>3.0.CO;2-V

- Y. Fragakis, Force and displacement duality in Domain Decomposition Methods for Solid and Structural Mechanics. To appear in Comput. Methods Appl. Mech. Engrg., 2007.

- B. Sousedík and J. Mandel, On the equivalence of primal and dual substructuring preconditioners. arXiv:math/0802.4328, 2008.