Related Research Articles

Cognitive science is the interdisciplinary, scientific study of the mind and its processes, drawing on linguistics, psychology, neuroscience, philosophy, computer science/artificial intelligence, and anthropology. It examines the nature, tasks, and functions of cognition. Cognitive scientists study intelligence and behavior, with a focus on how nervous systems represent, process, and transform information. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion. Analysis in cognitive science typically spans many levels of organization, from learning and decision-making to logic and planning, and from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

Simple Network Management Protocol (SNMP) is an Internet Standard protocol for collecting and organizing information about managed devices on IP networks and for modifying that information to change device behaviour. Devices that typically support SNMP include cable modems, routers, switches, servers, workstations, printers, and more.

Social simulation is a research field that applies computational methods to study issues in the social sciences. The issues explored include problems in computational law, psychology, organizational behavior, sociology, political science, economics, anthropology, geography, engineering, archaeology and linguistics.

Experimental economics is the application of experimental methods to study economic questions. Data collected in experiments are used to estimate effect sizes, test the validity of economic theories, and illuminate market mechanisms. Economic experiments usually use cash to motivate subjects, in order to mimic real-world incentives. Experiments are used to help understand how and why markets and other exchange systems function as they do. Experimental economics has also expanded to the study of institutions and the law.

Soar is a cognitive architecture, originally created by John Laird, Allen Newell, and Paul Rosenbloom at Carnegie Mellon University. It is now maintained and developed by John Laird's research group at the University of Michigan.

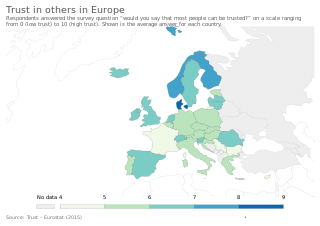

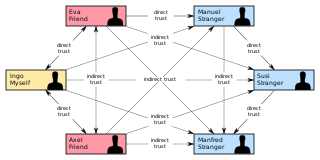

Trust is the willingness of one party to become vulnerable to another party on the presumption that the trustee will act in ways that benefit the trustor. In addition, the trustor does not have control over the actions of the trustee. Scholars distinguish between generalized trust, which is the extension of trust to a relatively large circle of unfamiliar others, and particularized trust, which is contingent on a specific situation or a specific relationship.

In psychology and sociology, a trust metric is a measurement or metric of the degree to which one social actor trusts another social actor. Trust metrics may be abstracted in a manner that can be implemented on computers, making them of interest for the study and engineering of virtual communities, such as Friendster and LiveJournal.
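Trust metrics vary widely. One simple family infers trust between actors who are not directly acquainted by following chains of acquaintances. The sketch below assumes that direct trust is a number in [0, 1] and that trust along a chain is the product of its links. It takes the strongest chain as the inferred value. The graph, the names, and the combination rule are illustrative assumptions, not the metric of any particular community site.

```python
import heapq
from typing import Dict

# Hypothetical direct-trust graph: graph[a][b] is how much actor a trusts actor b, in [0, 1].
TrustGraph = Dict[str, Dict[str, float]]


def inferred_trust(graph: TrustGraph, source: str, target: str) -> float:
    """Return the strongest-chain trust from source to target.

    Trust along a chain is the product of its direct-trust values; the result is the
    best chain's product, or 0.0 if target is unreachable. Because every value is at
    most 1, products never grow along a chain, so a Dijkstra-style search is valid.
    """
    if source == target:
        return 1.0
    best = {source: 1.0}
    heap = [(-1.0, source)]  # heapq is a min-heap, so scores are negated
    while heap:
        neg_score, node = heapq.heappop(heap)
        score = -neg_score
        if node == target:
            return score
        if score < best.get(node, 0.0):
            continue  # stale entry: a stronger chain to this node was already found
        for neighbour, weight in graph.get(node, {}).items():
            candidate = score * weight
            if candidate > best.get(neighbour, 0.0):
                best[neighbour] = candidate
                heapq.heappush(heap, (-candidate, neighbour))
    return 0.0


graph = {
    "alice": {"bob": 0.9, "carol": 0.5},
    "bob": {"dave": 0.8},
    "carol": {"dave": 1.0},
}
print(inferred_trust(graph, "alice", "dave"))  # ~0.72, via bob
```

Using the product means trust decays with every intermediary. Many published metrics instead use other combination rules, such as averaging, thresholds, or flow-based approaches.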

Computational sociology is a branch of sociology that uses computationally intensive methods to analyze and model social phenomena. Using computer simulations, artificial intelligence, complex statistical methods, and analytic approaches like social network analysis, computational sociology develops and tests theories of complex social processes through bottom-up modeling of social interactions.

Multilevel security or multiple levels of security (MLS) is the application of a computer system to process information with incompatible classifications, permit access by users with different security clearances and needs-to-know, and prevent users from obtaining access to information for which they lack authorization. The term is used in two contexts. In one, it refers to a system that is adequate to protect itself from subversion and has robust mechanisms to separate information domains, that is, a trustworthy system. In the other, it refers to an application that requires the computer to be strong enough to protect itself from subversion and to possess adequate mechanisms to separate information domains, that is, a system we must trust. The distinction matters because systems that must be trusted are not necessarily trustworthy.
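The access rule in the paragraph's first sentence is often formalized as a dominance relation over security labels, as in the Bell-LaPadula model. The sketch below assumes that a label is a hierarchical level plus a set of need-to-know compartments. The level names and compartments are illustrative. The code only decides what the policy allows. It says nothing about whether the system enforcing the policy is trustworthy, which is the distinction drawn above.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Illustrative ordering of hierarchical levels (an assumption; real schemes vary).
LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}


@dataclass(frozen=True)
class Label:
    level: str
    compartments: FrozenSet[str] = frozenset()  # need-to-know categories

    def dominates(self, other: "Label") -> bool:
        """True if this level is at least other's and covers all of other's compartments."""
        return (LEVELS[self.level] >= LEVELS[other.level]
                and self.compartments >= other.compartments)


def may_read(subject: Label, obj: Label) -> bool:
    # Simple security property ("no read up"): the subject's label must dominate the object's.
    return subject.dominates(obj)


def may_write(subject: Label, obj: Label) -> bool:
    # *-property ("no write down"): the object's label must dominate the subject's,
    # so information cannot flow to a lower classification.
    return obj.dominates(subject)


analyst = Label("SECRET", frozenset({"CRYPTO"}))
print(may_read(analyst, Label("CONFIDENTIAL", frozenset({"CRYPTO"}))))  # True
print(may_read(analyst, Label("SECRET", frozenset({"NUCLEAR"}))))       # False: missing compartment
print(may_read(analyst, Label("TOP SECRET")))                           # False: read up
print(may_write(analyst, Label("CONFIDENTIAL")))                        # False: write down
```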

There are various definitions of autonomous agent. According to Brustoloni (1991):

"Autonomous agents are systems capable of autonomous, purposeful action in the real world."

The actor model in computer science is a mathematical model of concurrent computation that treats an actor as the basic building block of concurrent computation. In response to a message it receives, an actor can: make local decisions, create more actors, send more messages, and determine how to respond to the next message received. Actors may modify their own private state, but can only affect each other indirectly through messaging.
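The capabilities listed in the paragraph above are making local decisions, creating actors, sending messages, and choosing how to handle the next message. A toy, single-threaded sketch can illustrate them. Real actor runtimes schedule actors concurrently. Here, one FIFO queue stands in for asynchronous message delivery. All class, function, and message names are hypothetical.

```python
from collections import deque
from typing import Any, Callable, Deque, Optional, Tuple

# A behaviour handles one message for an actor and may return a new behaviour
# to use for the next message (None keeps the current one).
Behaviour = Callable[[Any, Any], Any]


class Actor:
    def __init__(self, system: "ActorSystem", behaviour: Behaviour) -> None:
        self.system = system
        self.behaviour = behaviour
        self.state: dict = {}  # private by convention: only this actor's behaviours touch it

    def receive(self, message: Any) -> None:
        next_behaviour = self.behaviour(self, message)
        if next_behaviour is not None:
            self.behaviour = next_behaviour


class ActorSystem:
    """Toy single-threaded scheduler: one FIFO queue stands in for asynchronous delivery."""

    def __init__(self) -> None:
        self.pending: Deque[Tuple[Actor, Any]] = deque()

    def spawn(self, behaviour: Behaviour) -> Actor:
        return Actor(self, behaviour)  # behaviours may also call this to create actors

    def send(self, recipient: Actor, message: Any) -> None:
        self.pending.append((recipient, message))  # the sender does not wait

    def run(self) -> None:
        while self.pending:
            recipient, message = self.pending.popleft()
            recipient.receive(message)


def counter(self: Actor, message: Any) -> Optional[Behaviour]:
    if message == "inc":
        self.state["count"] = self.state.get("count", 0) + 1  # local decision on private state
    elif isinstance(message, tuple) and message[0] == "get":
        self.system.send(message[1], self.state.get("count", 0))  # reply by message
    elif message == "freeze":
        return frozen  # handle future messages differently
    return None


def frozen(self: Actor, message: Any) -> None:
    # Ignores "inc"; still answers queries, tagged so the change of behaviour is visible.
    if isinstance(message, tuple) and message[0] == "get":
        self.system.send(message[1], ("frozen", self.state.get("count", 0)))


received: list = []
system = ActorSystem()
sink = system.spawn(lambda self, message: received.append(message))
c = system.spawn(counter)
for msg in ["inc", "inc", ("get", sink), "freeze", "inc", ("get", sink)]:
    system.send(c, msg)
system.run()
print(received)  # [2, ('frozen', 2)]
```

The third "inc" is ignored because the counter has already switched to the `frozen` behaviour. Neither actor ever reads the other's `state` directly. They interact only through `send`.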

Reputation systems are programs or algorithms that allow users to rate each other in online communities in order to build trust through reputation. Common uses of these systems can be found on e-commerce websites such as eBay, Amazon.com, and Etsy, as well as on online advice communities such as Stack Exchange. These reputation systems represent a significant trend in "decision support for Internet mediated service provisions". With the popularity of online communities for shopping, advice, and exchange of other important information, reputation systems are becoming vitally important to the online experience. The idea of reputation systems is that even if consumers cannot physically try a product or service, or see the person providing information, they can be confident in the outcome of the exchange through trust built by recommender systems.
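Many reputation systems reduce individual ratings to a single score. The sketch below uses the beta reputation rule. With r positive and s negative ratings, the score is (r + 1) / (r + s + 2), the mean of a Beta(r + 1, s + 1) distribution. Binary ratings and the user names are assumptions for illustration. Production sites such as those named above use their own, generally undisclosed, formulas.

```python
from collections import defaultdict
from typing import DefaultDict, List


class BetaReputation:
    """Aggregate binary ratings into a score in (0, 1).

    With r positive and s negative ratings, the score is (r + 1) / (r + s + 2),
    the mean of a Beta(r + 1, s + 1) distribution; the +1 terms act as a uniform prior.
    """

    def __init__(self) -> None:
        # user -> [positive count, negative count]
        self.counts: DefaultDict[str, List[int]] = defaultdict(lambda: [0, 0])

    def rate(self, user: str, positive: bool) -> None:
        self.counts[user][0 if positive else 1] += 1

    def score(self, user: str) -> float:
        r, s = self.counts.get(user, [0, 0])  # .get does not create an entry
        return (r + 1) / (r + s + 2)


rep = BetaReputation()
for _ in range(8):
    rep.rate("seller_a", positive=True)
for _ in range(2):
    rep.rate("seller_a", positive=False)
rep.rate("newcomer", positive=True)

print(rep.score("seller_a"))  # 0.75 (9 / 12)
print(rep.score("newcomer"))  # 0.666... (2 / 3), not a perfect 1.0
print(rep.score("unknown"))   # 0.5 (no evidence yet)
```

The prior keeps a brand-new account with a single positive rating below an established seller with a long, mostly positive history. This blunts one simple way of gaming the score.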

In computer security, shoulder surfing is a type of social engineering technique used to obtain information such as personal identification numbers (PINs), passwords and other confidential data by looking over the victim's shoulder. Unauthorized users watch the keystrokes inputted on a device or listen to sensitive information being spoken, which is also known as eavesdropping.

Virgil Dorin Gligor is a Romanian-American professor of electrical and computer engineering who specializes in the research of network security and applied cryptography.

Ron Sun is a cognitive scientist who made significant contributions to computational psychology and other areas of cognitive science and artificial intelligence. He is currently professor of cognitive sciences at Rensselaer Polytechnic Institute, and formerly the James C. Dowell Professor of Engineering and Professor of Computer Science at University of Missouri. He received his Ph.D. in 1992 from Brandeis University.

Human–computer interaction (HCI) is research in the design and use of computer technology, focusing on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "human–computer interface" (HCI).

Informatics is the study of computational systems. According to the ACM Europe Council and Informatics Europe, informatics is synonymous with computer science and computing as a profession, in which the central notion is the transformation of information. In some countries, however, the term "informatics" is used with a different meaning in the context of library science, where it is synonymous with data storage and retrieval.

Natural computing, also called natural computation, is a term introduced to encompass three classes of methods: (1) those that take inspiration from nature for the development of novel problem-solving techniques; (2) those that use computers to synthesize natural phenomena; and (3) those that employ natural materials to compute. The main fields of research that compose these three branches include artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing.
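The first class of methods, nature-inspired problem solving, can be illustrated with a minimal evolutionary algorithm. The sketch below is the textbook (1+1) EA applied to OneMax, a toy problem whose goal is to maximize the number of 1-bits in a bit string. It was chosen only for brevity. The problem, the mutation rate, and the parameter values are illustrative assumptions, not anything the field prescribes.

```python
import random
from typing import List


def one_max(bits: List[int]) -> int:
    """Toy fitness function: the number of 1-bits (maximised by the all-ones string)."""
    return sum(bits)


def one_plus_one_ea(n: int, generations: int, seed: int = 0) -> List[int]:
    """(1+1) evolutionary algorithm: flip each bit independently with probability 1/n
    and keep the offspring if it is at least as fit as its parent."""
    rng = random.Random(seed)  # seeded for reproducibility
    parent = [rng.randint(0, 1) for _ in range(n)]
    for _ in range(generations):
        child = [1 - b if rng.random() < 1.0 / n else b for b in parent]  # mutation
        if one_max(child) >= one_max(parent):  # selection
            parent = child
        if one_max(parent) == n:  # optimum reached
            break
    return parent


best = one_plus_one_ea(n=20, generations=5000)
print(one_max(best), "of", 20)
```

Mutation supplies variation, and "keep the fitter of parent and child" supplies selection. These two ingredients are the natural-evolution analogy behind the whole family of evolutionary algorithms.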

Swift trust is a form of trust occurring in temporary organizational structures, which can include quick starting groups or teams. It was first explored by Debra Meyerson and colleagues in 1996. In swift trust theory, a group or team assumes trust initially, and later verifies and adjusts trust beliefs accordingly.

Opportunistic mobile social networks are a form of mobile ad hoc network that exploits human social characteristics, such as similarities, daily routines, mobility patterns, and interests, to perform message routing and data sharing. In such networks, users with mobile devices can form on-the-fly social networks to communicate with each other and share data objects.
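In such networks, forwarding decisions are typically made when two devices come into contact. The sketch below shows one interest-based rule. It assumes that each user declares a set of interests and that interest overlap (Jaccard similarity) is a useful proxy for social closeness to the destination. A holder hands a copy of the message to a peer it meets if the peer is the destination or is closer to the destination than the holder. The rule, names, and data are hypothetical and do not describe any specific published protocol.

```python
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two interest sets: |a & b| / |a | b| (0.0 if both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def simulate_forwarding(interests: Dict[str, Set[str]],
                        encounters: List[Tuple[str, str]],
                        source: str, destination: str) -> List[str]:
    """Replay time-ordered pairwise encounters; return the nodes holding a copy, in order."""
    holders = [source]
    target = interests[destination]
    for a, b in encounters:
        for carrier, peer in ((a, b), (b, a)):  # either side of a contact may forward
            if carrier in holders and peer not in holders:
                if peer == destination or (
                        jaccard(interests[peer], target) > jaccard(interests[carrier], target)):
                    holders.append(peer)
        if destination in holders:
            break  # delivered
    return holders


interests = {
    "s": {"music"},
    "x": {"football", "chess"},
    "y": {"chess", "hiking"},
    "d": {"chess", "hiking", "films"},
}
encounters = [("s", "x"), ("x", "y"), ("s", "y"), ("y", "d")]
print(simulate_forwarding(interests, encounters, "s", "d"))  # ['s', 'x', 'y', 'd']
```

Forwarding only to socially closer peers limits how many copies spread, at the cost of possibly missing a delivery that blind flooding would achieve.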
