In mathematics, the concept of a measure is a generalization and formalization of geometrical measures and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In probability theory, Chebyshev's inequality provides an upper bound on the probability of deviation of a random variable from its mean. More specifically, the probability that a random variable deviates from its mean by more than kσ is at most 1/k², where k is any positive constant and σ is the standard deviation of the random variable.
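
As a rough illustration, the bound can be checked numerically. The sketch below assumes an exponential distribution and a fixed seed purely for demonstration; the helper name `chebyshev_check` is hypothetical. It compares the observed fraction of samples lying more than k standard deviations from the sample mean with the bound 1/k².

```python
import random
import statistics

def chebyshev_check(samples, k):
    """Return (empirical tail frequency, Chebyshev bound 1/k^2)."""
    mean = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)  # population standard deviation of the sample
    # Fraction of samples further than k standard deviations from the mean
    tail = sum(1 for x in samples if abs(x - mean) > k * sigma) / len(samples)
    return tail, 1.0 / k**2

# Illustrative data: 10,000 draws from an exponential distribution (an assumption)
rng = random.Random(0)
samples = [rng.expovariate(1.0) for _ in range(10_000)]

for k in (1.5, 2.0, 3.0):
    tail, bound = chebyshev_check(samples, k)
    print(f"k={k}: empirical tail {tail:.4f}, Chebyshev bound {bound:.4f}")
```

Because the inequality holds for any distribution with finite variance, including the empirical distribution of the sample itself, the observed tail never exceeds the bound here. For most distributions the bound is far from sharp.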

In probability theory, the law of large numbers (LLN) is a mathematical theorem that states that the average of the results obtained from a large number of independent and identical random samples converges to the true value, if it exists. More formally, the LLN states that given a sample of independent and identically distributed values, the sample mean converges to the true mean.
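
A small simulation can make the statement concrete. The sketch below assumes a fair six-sided die, whose true mean is 3.5, and uses a hypothetical helper `sample_mean_die`; it is an illustration rather than a proof.

```python
import random

def sample_mean_die(n, seed=0):
    """Average of n simulated rolls of a fair six-sided die."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n):
        total += rng.randint(1, 6)  # uniform on {1, ..., 6}
    return total / n

# The sample mean should drift toward the true mean 3.5 as n grows
for n in (10, 1_000, 100_000):
    print(n, sample_mean_die(n))
```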

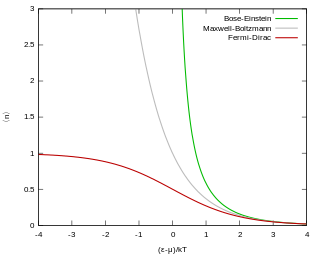

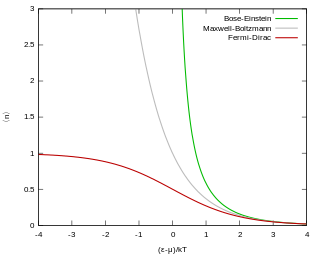

In quantum statistics, Bose–Einstein statistics describes one of two possible ways in which a collection of non-interacting identical particles may occupy a set of available discrete energy states at thermodynamic equilibrium. The aggregation of particles in the same state, which is a characteristic of particles obeying Bose–Einstein statistics, accounts for the cohesive streaming of laser light and the frictionless creeping of superfluid helium. The theory of this behaviour was developed (1924–25) by Satyendra Nath Bose, who recognized that a collection of identical and indistinguishable particles can be distributed in this way. The idea was later adopted and extended by Albert Einstein in collaboration with Bose.
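
The counting behind this distribution can be sketched in a few lines. The example below assumes a toy system of a few particles and states, with hypothetical helper names; it enumerates the distinct occupation patterns of indistinguishable particles and compares the total with the standard multiset count C(n + g - 1, n).

```python
from itertools import combinations_with_replacement
from math import comb

def boson_microstates(n_particles, n_states):
    """List the ways to place indistinguishable particles into states.

    Each microstate is a sorted tuple of state indices, one per particle,
    so permuting particles does not create a new microstate.
    """
    return list(combinations_with_replacement(range(n_states), n_particles))

def boson_count(n_particles, n_states):
    """Closed-form count of the same microstates."""
    return comb(n_particles + n_states - 1, n_particles)

# Two particles in two states: (0, 0), (0, 1), (1, 1)
print(boson_microstates(2, 2))
print(boson_count(2, 2))
```

For comparison, two distinguishable particles in two states would have four arrangements, so doubly occupied states carry relatively more weight in the indistinguishable case.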

In probability theory, Markov's inequality gives an upper bound on the probability that a non-negative random variable is greater than or equal to some positive constant. It is named after the Russian mathematician Andrey Markov, although it appeared earlier in the work of Pafnuty Chebyshev, and many sources, especially in analysis, refer to it as Chebyshev's inequality or Bienaymé's inequality. Markov's inequality is tight in the sense that for each chosen positive constant, there exists a random variable such that the inequality is in fact an equality.
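
The tightness claim can be illustrated with a two-point distribution. The sketch below, with the hypothetical helper `markov_tight_example`, assumes a variable X that equals a with probability p and 0 otherwise, and uses exact rational arithmetic to show that P(X ≥ a) equals E[X]/a.

```python
from fractions import Fraction

def markov_tight_example(a, p):
    """Two-point variable: X = a with probability p, X = 0 otherwise.

    Returns (P(X >= a), E[X] / a), which coincide for this distribution.
    Assumes a > 0 and 0 <= p <= 1.
    """
    dist = {Fraction(0): 1 - p, a: p}
    mean = sum(x * q for x, q in dist.items())
    tail = sum(q for x, q in dist.items() if x >= a)
    return tail, mean / a

tail, bound = markov_tight_example(Fraction(5), Fraction(1, 4))
print(tail, bound)
```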

In mathematics, the Radon–Nikodym theorem is a result in measure theory that expresses the relationship between two measures defined on the same measurable space. A measure is a set function that assigns a consistent magnitude to the measurable subsets of a measurable space. Examples of a measure include area and volume, where the subsets are sets of points; or the probability of an event, which is a subset of possible outcomes within a wider probability space.
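
For orientation, the usual form of the statement can be written as follows. The symbols are assumptions introduced here: μ and ν are σ-finite measures on a measurable space (X, Σ), with ν absolutely continuous with respect to μ.

```latex
% Assumptions: \mu, \nu are \sigma-finite measures on (X, \Sigma) and \nu \ll \mu.
% Then there is a measurable f : X \to [0, \infty) such that
\[
  \nu(A) = \int_A f \, d\mu \qquad \text{for every } A \in \Sigma ,
\]
% and f is written f = \frac{d\nu}{d\mu}, the Radon--Nikodym derivative.
```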

In general relativity, the Gibbons–Hawking–York boundary term is a term that needs to be added to the Einstein–Hilbert action when the underlying spacetime manifold has a boundary.

In relativistic physics, the electromagnetic stress–energy tensor is the contribution to the stress–energy tensor due to the electromagnetic field. The stress–energy tensor describes the flow of energy and momentum in spacetime. The electromagnetic stress–energy tensor contains the negative of the classical Maxwell stress tensor that governs the electromagnetic interactions.

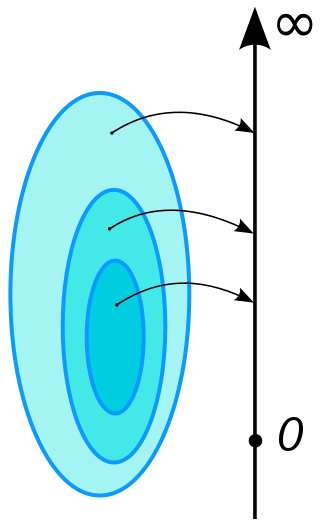

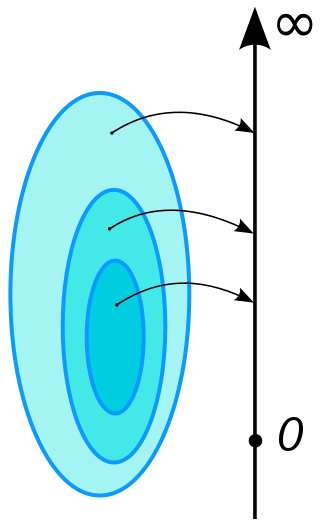

In mathematics, tightness is a concept in measure theory. The intuitive idea is that a given collection of measures does not "escape to infinity".
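
In symbols, and assuming for simplicity a collection M of probability measures on a Hausdorff topological space X (notation introduced here), the condition is commonly stated as:

```latex
% M is tight if, for every \varepsilon > 0, a single compact set
% captures almost all the mass of every measure in M:
\[
  \forall \varepsilon > 0 \;\; \exists K_\varepsilon \subseteq X \text{ compact}
  \quad \text{such that} \quad
  \mu(K_\varepsilon) > 1 - \varepsilon \quad \text{for all } \mu \in M .
\]
```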

In mathematics, the Lévy–Prokhorov metric is a metric on the collection of probability measures on a given metric space. It is named after the French mathematician Paul Lévy and the Soviet mathematician Yuri Vasilyevich Prokhorov; Prokhorov introduced it in 1956 as a generalization of the earlier Lévy metric.
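
The standard definition can be sketched as follows, where (M, d) denotes the metric space, 𝓑(M) its Borel σ-algebra, and A^ε the open ε-neighbourhood of a set A. These symbols are notation introduced here for illustration.

```latex
% \varepsilon-neighbourhood of a Borel set A in the metric space (M, d):
\[
  A^{\varepsilon} = \{\, p \in M : \exists\, q \in A,\ d(p, q) < \varepsilon \,\}
\]
% L\'evy--Prokhorov distance between probability measures \mu and \nu:
\[
  \pi(\mu, \nu) = \inf \bigl\{ \varepsilon > 0 :
    \mu(A) \le \nu(A^{\varepsilon}) + \varepsilon
    \ \text{and}\
    \nu(A) \le \mu(A^{\varepsilon}) + \varepsilon
    \ \text{for all } A \in \mathcal{B}(M) \bigr\}
\]
```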

In mathematics, more specifically measure theory, there are various notions of the convergence of measures. For an intuitive general sense of what is meant by convergence of measures, consider a sequence of measures μn on a space, sharing a common collection of measurable sets. Such a sequence might represent an attempt to construct 'better and better' approximations to a desired measure μ that is difficult to obtain directly. The meaning of 'better and better' is subject to all the usual caveats for taking limits; for any error tolerance ε > 0 we require there be N sufficiently large for n ≥ N to ensure the 'difference' between μn and μ is smaller than ε. Various notions of convergence specify precisely what the word 'difference' should mean in that description; these notions are not equivalent to one another, and vary in strength.
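
One concrete choice of 'difference' is the total variation distance. The sketch below assumes finitely supported measures stored as dictionaries, the convention that the distance is half the sum of absolute mass differences, and a hypothetical sequence μn = (1 - 1/n)δ0 + (1/n)δ1 approaching the point mass δ0.

```python
from fractions import Fraction

def total_variation(p, q):
    """Total variation distance between two finitely supported measures.

    p and q map points to masses; the convention used here is half
    the sum of absolute mass differences over the joint support.
    """
    support = set(p) | set(q)
    return sum(abs(p.get(x, 0) - q.get(x, 0)) for x in support) / 2

def mu_n(n):
    """Hypothetical sequence: mass 1 - 1/n at the point 0 and 1/n at the point 1."""
    return {0: 1 - Fraction(1, n), 1: Fraction(1, n)}

delta_0 = {0: Fraction(1)}  # point mass at 0

# The distance equals 1/n, so it falls below any tolerance for large n
for n in (1, 10, 100):
    print(n, total_variation(mu_n(n), delta_0))
```

Other notions, such as weak convergence, use a weaker 'difference' and can hold for sequences that do not converge in total variation.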

In mathematics, the Brunn–Minkowski theorem is an inequality relating the volumes of compact subsets of Euclidean space. The original version of the Brunn–Minkowski theorem applied to convex sets; the generalization to compact nonconvex sets stated here is due to Lazar Lyusternik (1935).
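
Written out, with A and B nonempty compact subsets of n-dimensional Euclidean space, vol denoting Lebesgue measure, and A + B the Minkowski sum (notation introduced here), the inequality reads:

```latex
% Minkowski sum: A + B = \{ a + b : a \in A,\ b \in B \} \subseteq \mathbb{R}^n
\[
  \operatorname{vol}(A + B)^{1/n}
  \;\ge\;
  \operatorname{vol}(A)^{1/n} + \operatorname{vol}(B)^{1/n}
\]
```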

In mathematics, Dvoretzky's theorem is an important structural theorem about normed vector spaces proved by Aryeh Dvoretzky in the early 1960s, answering a question of Alexander Grothendieck. In essence, it says that every sufficiently high-dimensional normed vector space will have low-dimensional subspaces that are approximately Euclidean. Equivalently, every high-dimensional bounded symmetric convex set has low-dimensional sections that are approximately ellipsoids.

In mathematics, uniform integrability is an important concept in real analysis, functional analysis and measure theory, and plays a vital role in the theory of martingales.

In mathematics, the Prékopa–Leindler inequality is an integral inequality closely related to the reverse Young's inequality, the Brunn–Minkowski inequality and a number of other important and classical inequalities in analysis. The result is named after the Hungarian mathematicians András Prékopa and László Leindler.
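
In its usual form the inequality can be sketched as follows; the symbols λ, f, g and h are notation introduced here.

```latex
% Assumptions: 0 < \lambda < 1 and f, g, h : \mathbb{R}^n \to [0, \infty) are measurable with
\[
  h\bigl((1 - \lambda)x + \lambda y\bigr) \;\ge\; f(x)^{1 - \lambda}\, g(y)^{\lambda}
  \qquad \text{for all } x, y \in \mathbb{R}^n .
\]
% Conclusion:
\[
  \int_{\mathbb{R}^n} h(x)\, dx
  \;\ge\;
  \Bigl( \int_{\mathbb{R}^n} f(x)\, dx \Bigr)^{1 - \lambda}
  \Bigl( \int_{\mathbb{R}^n} g(x)\, dx \Bigr)^{\lambda}
\]
```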

Financial models with long-tailed distributions and volatility clustering have been introduced to overcome problems with the realism of classical financial models. These classical models of financial time series typically assume homoskedasticity and normality, and cannot explain stylized phenomena such as skewness, heavy tails, and volatility clustering of the empirical asset returns in finance. In 1963, Benoit Mandelbrot first used the stable distribution to model the empirical distributions which have the skewness and heavy-tail property. Since α-stable distributions with α < 2 have infinite p-th moments for all p > α, the tempered stable processes have been proposed for overcoming this limitation of the stable distribution.

In probability theory, the multidimensional Chebyshev's inequality is a generalization of Chebyshev's inequality, which puts a bound on the probability of the event that a random variable differs from its expected value by more than a specified amount.
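
One common form of the bound is sketched below, assuming an N-dimensional random vector X with mean vector μ, positive-definite covariance matrix V, and a real number t > 0 (symbols introduced here).

```latex
% X: N-dimensional random vector, \mu = \operatorname{E}[X],
% V = \operatorname{E}\bigl[(X - \mu)(X - \mu)^{T}\bigr] positive definite, t > 0
\[
  \Pr\Bigl( \sqrt{(X - \mu)^{T} V^{-1} (X - \mu)} > t \Bigr) \;\le\; \frac{N}{t^{2}}
\]
```

For N = 1 this reduces to the one-dimensional inequality with t playing the role of the number of standard deviations.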

In mathematical analysis, Lorentz spaces, introduced by George G. Lorentz in the 1950s, are generalisations of the more familiar L^p spaces.

In probability theory, Talagrand's concentration inequality is an isoperimetric-type inequality for product probability spaces. It was first proved by the French mathematician Michel Talagrand. The inequality is one of the manifestations of the concentration of measure phenomenon.

In theoretical physics, the dual graviton is a hypothetical elementary particle that is a dual of the graviton under electric-magnetic duality, as an S-duality, predicted by some formulations of supergravity in eleven dimensions.