In computing, a hash table, also known as a hash map or a hash set, is a data structure that implements an associative array, also called a dictionary, which is an abstract data type that maps keys to values. A hash table uses a hash function to compute an index, also called a hash code, into an array of buckets or slots, from which the desired value can be found. During lookup, the key is hashed and the resulting hash indicates where the corresponding value is stored.

In computer science, a trie, also called digital tree or prefix tree, is a type of k-ary search tree, a tree data structure used for locating specific keys from within a set. These keys are most often strings, with links between nodes defined not by the entire key, but by individual characters. In order to access a key, the trie is traversed depth-first, following the links between nodes, which represent each character in the key.

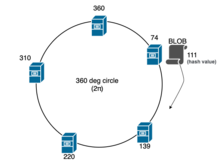

A distributed hash table (DHT) is a distributed system that provides a lookup service similar to a hash table. Key–value pairs are stored in a DHT, and any participating node can efficiently retrieve the value associated with a given key. The main advantage of a DHT is that nodes can be added or removed with minimum work around re-distributing keys. Keys are unique identifiers which map to particular values, which in turn can be anything from addresses, to documents, to arbitrary data. Responsibility for maintaining the mapping from keys to values is distributed among the nodes, in such a way that a change in the set of participants causes a minimal amount of disruption. This allows a DHT to scale to extremely large numbers of nodes and to handle continual node arrivals, departures, and failures.

In Vapnik–Chervonenkis theory, the Vapnik–Chervonenkis (VC) dimension is a measure of the size of a class of sets. The notion can be extended to classes of binary functions. It is defined as the cardinality of the largest set of points that the algorithm can shatter, which means the algorithm can always learn a perfect classifier for any labeling of at least one configuration of those data points. It was originally defined by Vladimir Vapnik and Alexey Chervonenkis.

In computing, Chord is a protocol and algorithm for a peer-to-peer distributed hash table. A distributed hash table stores key-value pairs by assigning keys to different computers ; a node will store the values for all the keys for which it is responsible. Chord specifies how keys are assigned to nodes, and how a node can discover the value for a given key by first locating the node responsible for that key.

Kademlia is a distributed hash table for decentralized peer-to-peer computer networks designed by Petar Maymounkov and David Mazières in 2002. It specifies the structure of the network and the exchange of information through node lookups. Kademlia nodes communicate among themselves using UDP. A virtual or overlay network is formed by the participant nodes. Each node is identified by a number or node ID. The node ID serves not only as identification, but the Kademlia algorithm uses the node ID to locate values.

A Bloom filter is a space-efficient probabilistic data structure, conceived by Burton Howard Bloom in 1970, that is used to test whether an element is a member of a set. False positive matches are possible, but false negatives are not – in other words, a query returns either "possibly in set" or "definitely not in set". Elements can be added to the set, but not removed ; the more items added, the larger the probability of false positives.

In computer science, a suffix tree is a compressed trie containing all the suffixes of the given text as their keys and positions in the text as their values. Suffix trees allow particularly fast implementations of many important string operations.

In mathematics, and specifically in potential theory, the Poisson kernel is an integral kernel, used for solving the two-dimensional Laplace equation, given Dirichlet boundary conditions on the unit disk. The kernel can be understood as the derivative of the Green's function for the Laplace equation. It is named for Siméon Poisson.

In computer programming, primary clustering is a phenomenon that causes performance degradation in linear-probing hash tables. The phenomenon states that, as elements are added to a linear probing hash table, they have a tendency to cluster together into long runs. If the hash table is at a load factor of for some parameter , then the expected length of the run containing a given element is . This causes insertions and negative queries to take expected time in a linear-probing hash table.

2-choice hashing, also known as 2-choice chaining, is "a variant of a hash table in which keys are added by hashing with two hash functions. The key is put in the array position with the fewer (colliding) keys. Some collision resolution scheme is needed, unless keys are kept in buckets. The average-case cost of a successful search is , where is the number of keys and is the size of the array. The most collisions is with high probability."

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items.

The terms toroidal and poloidal refer to directions relative to a torus of reference. They describe a three-dimensional coordinate system in which the poloidal direction follows a small circular ring around the surface, while the toroidal direction follows a large circular ring around the torus, encircling the central void.

In peer-to-peer networks, Koorde is a distributed hash table (DHT) system based on the Chord DHT and the De Bruijn graph. Inheriting the simplicity of Chord, Koorde meets O(log n) hops per node, and hops per lookup request with O(log n) neighbors per node.

In theoretical computer science, smoothed analysis is a way of measuring the complexity of an algorithm. Since its introduction in 2001, smoothed analysis has been used as a basis for considerable research, for problems ranging from mathematical programming, numerical analysis, machine learning, and data mining. It can give a more realistic analysis of the practical performance of the algorithm compared to analysis that uses worst-case or average-case scenarios.

Because matrix multiplication is such a central operation in many numerical algorithms, much work has been invested in making matrix multiplication algorithms efficient. Applications of matrix multiplication in computational problems are found in many fields including scientific computing and pattern recognition and in seemingly unrelated problems such as counting the paths through a graph. Many different algorithms have been designed for multiplying matrices on different types of hardware, including parallel and distributed systems, where the computational work is spread over multiple processors.

In probability theory and directional statistics, a wrapped Cauchy distribution is a wrapped probability distribution that results from the "wrapping" of the Cauchy distribution around the unit circle. The Cauchy distribution is sometimes known as a Lorentzian distribution, and the wrapped Cauchy distribution may sometimes be referred to as a wrapped Lorentzian distribution.

In machine learning, feature hashing, also known as the hashing trick, is a fast and space-efficient way of vectorizing features, i.e. turning arbitrary features into indices in a vector or matrix. It works by applying a hash function to the features and using their hash values as indices directly, rather than looking the indices up in an associative array. In addition to its use for encoding non-numeric values, feature hashing can also be used for dimensionality reduction.

Rendezvous or highest random weight (HRW) hashing is an algorithm that allows clients to achieve distributed agreement on a set of options out of a possible set of options. A typical application is when clients need to agree on which sites objects are assigned to.

A hyperbolic geometric graph (HGG) or hyperbolic geometric network (HGN) is a special type of spatial network where (1) latent coordinates of nodes are sprinkled according to a probability density function into a hyperbolic space of constant negative curvature and (2) an edge between two nodes is present if they are close according to a function of the metric (typically either a Heaviside step function resulting in deterministic connections between vertices closer than a certain threshold distance, or a decaying function of hyperbolic distance yielding the connection probability). A HGG generalizes a random geometric graph (RGG) whose embedding space is Euclidean.