In computer science, the ELIZA effect is the tendency to project human traits — such as experience, semantic comprehension or empathy — into computer programs that have a textual interface. The effect is a category mistake that arises when the program's symbolic computations are described through terms such as "think", "know" or "understand."

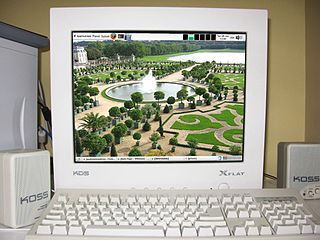

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

A chatbot is a software application or web interface that aims to mimic human conversation through text or voice interactions. Modern chatbots are typically online and use artificial intelligence (AI) systems that are capable of maintaining a conversation with a user in natural language and simulating the way a human would behave as a conversational partner. Such technologies often utilize aspects of deep learning and natural language processing, but simpler chatbots have existed for decades.
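To illustrate the simpler, pre-deep-learning style of chatbot mentioned above, here is a minimal sketch of a rule-based responder. It assumes a small, hand-written list of patterns and reply templates; the rules, the `reply` function, and the default response are all hypothetical and are not taken from any particular historical program.

```python
import re

# Hypothetical pattern/response rules, invented purely for illustration.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. What would you like to talk about?"),
]

# Fallback used when no rule matches (also an assumption).
DEFAULT_REPLY = "Please tell me more."


def reply(utterance: str) -> str:
    """Return the response of the first rule whose pattern matches."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured fragment back inside the reply template.
            return template.format(*match.groups())
    return DEFAULT_REPLY
```

A program of this kind only rearranges the user's own words. It has no model of meaning, which is why conversational fluency alone says little about comprehension.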

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures. Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. One area of the field is emotion recognition derived from facial expressions and hand gestures. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, proxemics, and human behaviors are also the subject of gesture recognition techniques. Gesture recognition is a path for computers to begin to better understand and interpret human body language, which was previously not possible through text or unenhanced graphical user interfaces (GUIs).

Multimodal interaction provides the user with multiple modes of interacting with a system. A multimodal interface provides several distinct tools for input and output of data.

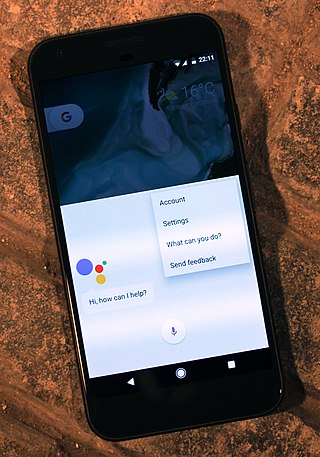

A voice-user interface (VUI) enables spoken human interaction with computers, using speech recognition to understand spoken commands and answer questions, and typically text-to-speech to play a reply. A voice command device is a device controlled with a voice-user interface.
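The three stages described above (speech recognition, answering, and text-to-speech) can be sketched as a simple pipeline. This is a structural illustration only: `handle_voice_turn` and the stand-in functions below are hypothetical, and the stand-ins merely treat bytes as text so the flow can be run without any real speech engine.

```python
from typing import Callable


def handle_voice_turn(
    audio: bytes,
    recognize: Callable[[bytes], str],
    answer: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> bytes:
    """Run one spoken exchange: audio in, spoken reply out."""
    text = recognize(audio)        # speech recognition: audio -> text
    reply_text = answer(text)      # decide what to say in response
    return synthesize(reply_text)  # text-to-speech: text -> audio


# Stand-ins (assumptions): real systems would call actual speech engines here.
def fake_recognize(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_answer(text: str) -> str:
    return f"You asked: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")
```

Passing the stages in as callables keeps the pipeline independent of any specific recognizer or synthesizer.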

A dialogue system, or conversational agent (CA), is a computer system intended to converse with a human. Dialogue systems employ one or more of text, speech, graphics, haptics, gestures, and other modes for communication on both the input and output channels.

Justine M. Cassell is an American professor and researcher interested in human-human conversation, human-computer interaction, and storytelling. Since August 2010 she has been on the faculty of the Carnegie Mellon Human Computer Interaction Institute (HCII) and the Language Technologies Institute, with courtesy appointments in Psychology and the Center for Neural Bases of Cognition.

A virtual assistant (VA) is a software agent that can perform a range of tasks or services for a user based on user input such as commands or questions, including verbal ones. Such technologies often incorporate chatbot capabilities to simulate human conversation, such as via online chat, to facilitate interaction with their users. The interaction may be via text, graphical interface, or voice, as some virtual assistants are able to interpret human speech and respond via synthesized voices.

Human–computer interaction (HCI) is research in the design and the use of computer technology, which focuses on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "Human-computer Interface (HCI)".

Virtual intelligence (VI) is the term given to artificial intelligence that exists within a virtual world. Many virtual worlds have options for persistent avatars that provide information, training, role playing, and social interactions.

The Rich Representation Language, often abbreviated as RRL, is a computer animation language specifically designed to facilitate the interaction of two or more animated characters. The research effort was funded by the European Commission as part of the NECA Project. The NECA framework within which RRL was developed was oriented not towards the animation of movies, but towards the creation of intelligent "virtual characters" that interact within a virtual world and hold conversations with emotional content, coupled with suitable facial expressions.

The NECA Project was a research project that focused on multimodal communication with animated agents in a virtual world. NECA was funded by the European Commission from 1998 to 2002 and the research results were published up to 2005.

Elizabeth Frances Churchill is a British American psychologist specializing in human-computer interaction (HCI) and social computing. She is a Director of User Experience at Google. She has held a number of positions in the ACM including Secretary Treasurer from 2016 to 2018, and Executive Vice President from 2018 to 2020.

A pedagogical agent is a concept borrowed from computer science and artificial intelligence and applied to education, usually as part of an intelligent tutoring system (ITS). It is a simulated human-like interface between the learner and the content, in an educational environment. A pedagogical agent is designed to model the type of interactions between a student and another person. Mabanza and de Wet define it as "a character enacted by a computer that interacts with the user in a socially engaging manner". A pedagogical agent can be assigned different roles in the learning environment, such as tutor or co-learner, depending on the desired purpose of the agent. "A tutor agent plays the role of a teacher, while a co-learner agent plays the role of a learning companion".

Nadine is a gynoid humanoid social robot that is modelled on Professor Nadia Magnenat Thalmann. The robot has a strong human-likeness, with natural-looking skin and hair and realistic hands. Nadine is a socially intelligent robot which returns a greeting, makes eye contact, and can remember all the conversations had with it. It is able to answer questions autonomously in several languages and to simulate emotions in both gestures and facial expressions, depending on the content of the interaction with the user. Nadine can recognise persons it has previously seen, and engage in flowing conversation. Nadine has been programmed with a "personality", in that its demeanour can change according to what is said to it. Nadine has a total of 27 degrees of freedom for facial expressions and upper body movements. With persons it has previously encountered, it remembers facts and events related to each person. It can assist people with special needs by reading stories, showing images, setting up Skype sessions, sending emails, and communicating with other members of the family. It can play the role of a receptionist in an office or serve as a personal coach.

An echoborg is a person whose words and actions are determined, in whole or in part, by an artificial intelligence (AI).

A conversational user interface (CUI) is a user interface for computers that emulates a conversation with a real human. Historically, computers have relied on text-based user interfaces and graphical user interfaces (GUIs) to translate the user's desired action into commands the computer understands. While GUIs are an effective mechanism for completing computing actions, they impose a learning curve on the user. CUIs instead give the user the opportunity to communicate with the computer in natural language rather than in syntax-specific commands.
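As a rough sketch of the contrast drawn above, the following maps free-form requests onto structured commands so that the user does not need to learn a command syntax. The phrasings, the command names `delete_file` and `show_file`, and the `to_command` function are all hypothetical; practical CUIs generally rely on far more robust language understanding than keyword patterns.

```python
import re
from typing import Optional, Tuple

# Hypothetical phrasings mapped to hypothetical command names.
# Each pattern captures the first token that looks like "name.ext".
INTENT_PATTERNS = [
    (re.compile(r"\b(?:delete|remove|erase)\b.*?(\S+\.\w+)", re.IGNORECASE), "delete_file"),
    (re.compile(r"\b(?:show|open|display)\b.*?(\S+\.\w+)", re.IGNORECASE), "show_file"),
]


def to_command(utterance: str) -> Optional[Tuple[str, str]]:
    """Translate a natural-language request into (command, argument)."""
    for pattern, command in INTENT_PATTERNS:
        match = pattern.search(utterance)
        if match:
            return (command, match.group(1))
    # No known intent: a real interface might ask a clarifying question.
    return None
```

For example, `to_command("Please delete the file report.txt")` yields a `delete_file` command for `report.txt`, whereas a command-line user would have to know the exact command name and argument order.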

Elisabeth André is a German computer scientist from Saarlouis, Saarland, who specializes in intelligent user interfaces, virtual agents, and social computing.

A software bot is a type of software agent in the service of software project management and software engineering. A software bot has an identity and potentially personified aspects in order to serve its stakeholders. Software bots often compose software services and provide an alternative user interface, which is sometimes, but not necessarily, conversational.