Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution. Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population.

The likelihood function is the joint probability of observed data viewed as a function of the parameters of a statistical model.
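As a minimal sketch of this definition, the following Python snippet evaluates the likelihood of a Bernoulli model over a grid of parameter values. The data set, the grid, and the function name `bernoulli_likelihood` are illustrative assumptions, not part of the original text.

```python
def bernoulli_likelihood(p, data):
    """Joint probability of the 0/1 observations in `data`, viewed as a function of p."""
    k = sum(data)          # number of successes
    n = len(data)          # number of observations
    return p**k * (1 - p)**(n - k)

# Hypothetical data: 6 successes in 8 independent trials.
data = [1, 0, 1, 1, 0, 1, 1, 1]

# Evaluate the likelihood on a grid of candidate parameter values
# and pick the value where it is largest.
grid = [i / 100 for i in range(1, 100)]
p_hat = max(grid, key=lambda p: bernoulli_likelihood(p, data))
print(p_hat)
```

The data stay fixed while `p` varies, which is what distinguishes a likelihood from a probability mass function over outcomes.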

In statistics, the likelihood-ratio test assesses the goodness of fit of two competing statistical models, specifically one found by maximization over the entire parameter space and another found after imposing some constraint, based on the ratio of their likelihoods. If the constraint is supported by the observed data, the two likelihoods should not differ by more than sampling error. Thus the likelihood-ratio test tests whether this ratio is significantly different from one, or equivalently whether its natural logarithm is significantly different from zero.
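The idea can be sketched for a simple case: a binomial model where the constraint fixes the success probability at `p0`. The example assumes the usual large-sample approximation in which twice the log-likelihood difference is compared with a chi-squared distribution with one degree of freedom; the function names and the sample counts are hypothetical.

```python
import math

def xlogy(x, y):
    """x * log(y), with the convention 0 * log(0) = 0."""
    return 0.0 if x == 0 else x * math.log(y)

def binom_loglik(p, k, n):
    """Binomial log-likelihood, up to an additive constant that cancels in the ratio."""
    return xlogy(k, p) + xlogy(n - k, 1 - p)

def likelihood_ratio_test(k, n, p0=0.5):
    """Test the constraint p = p0 against the unconstrained maximum p_hat = k / n."""
    p_hat = k / n
    # Twice the log of the likelihood ratio (unconstrained over constrained).
    stat = max(2 * (binom_loglik(p_hat, k, n) - binom_loglik(p0, k, n)), 0.0)
    # Survival function of a chi-squared variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Hypothetical data: 60 successes in 100 trials, constraint p = 0.5.
stat, p_value = likelihood_ratio_test(60, 100)
print(stat, p_value)
```

A statistic near zero means the constrained and unconstrained likelihoods are nearly equal, so the data do not contradict the constraint.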

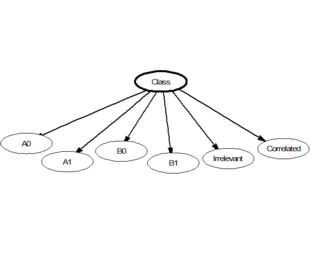

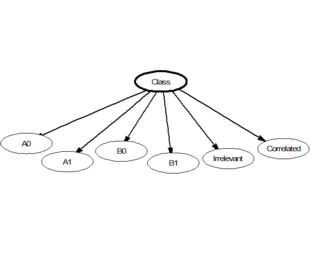

In statistics, naive Bayes classifiers are a family of linear "probabilistic classifiers" which assume that the features are conditionally independent, given the target class. The strength (naivety) of this assumption is what gives the classifier its name. These classifiers are among the simplest Bayesian network models.
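A minimal sketch of a naive Bayes classifier for categorical features follows. The conditional-independence assumption appears as a sum of per-feature log-probabilities. The `train` and `predict` names, the toy weather data, and the use of add-one smoothing to avoid zero probabilities are assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

def train(rows, labels, alpha=1.0):
    """Count class and per-feature value frequencies; alpha is a smoothing constant."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)   # (class, feature index) -> value counts
    values = defaultdict(set)               # feature index -> distinct values seen
    for row, y in zip(rows, labels):
        for j, v in enumerate(row):
            feature_counts[(y, j)][v] += 1
            values[j].add(v)
    return class_counts, feature_counts, values, alpha

def predict(model, row):
    """Return the class with the highest posterior score for `row`."""
    class_counts, feature_counts, values, alpha = model
    n = sum(class_counts.values())
    best, best_score = None, -math.inf
    for y, cy in class_counts.items():
        score = math.log(cy / n)            # log prior of the class
        for j, v in enumerate(row):
            # Features are treated as independent given the class,
            # so their log-probabilities simply add up.
            num = feature_counts[(y, j)][v] + alpha
            den = cy + alpha * len(values[j])
            score += math.log(num / den)
        if score > best_score:
            best, best_score = y, score
    return best

# Hypothetical training data: (outlook, temperature) -> play outside?
rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool")]
labels = ["no", "no", "yes", "yes"]
model = train(rows, labels)
print(predict(model, ("sunny", "hot")))
```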

In statistics, point estimation involves the use of sample data to calculate a single value which is to serve as a "best guess" or "best estimate" of an unknown population parameter. More formally, it is the application of a point estimator to the data to obtain a point estimate.
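For instance, the sample mean and the sample variance are point estimators of the population mean and variance. The sketch below applies them to a made-up sample using Python's standard `statistics` module.

```python
import statistics

# Hypothetical sample drawn from some population.
sample = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

# Point estimate of the population mean: the sample mean.
mean_estimate = statistics.mean(sample)

# Point estimate of the population variance: the sample variance
# (statistics.variance divides by n - 1).
variance_estimate = statistics.variance(sample)

print(mean_estimate, variance_estimate)
```

Each estimator returns a single number, in contrast to an interval estimate, which would report a range of plausible values.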

Nonparametric statistics is a type of statistical analysis that makes minimal assumptions about the underlying distribution of the data being studied. Often these models are infinite-dimensional, rather than finite-dimensional, as in parametric statistics. Nonparametric statistics can be used for descriptive statistics or statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are evidently violated.
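One simple nonparametric test is the sign test, which uses only the signs of paired differences and makes no assumption about their distribution beyond a median of zero under the null hypothesis. The following is a sketch with hypothetical data; the function name `sign_test` is illustrative.

```python
from math import comb

def sign_test(diffs):
    """Two-sided exact sign test for a zero median of paired differences."""
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    n = pos + neg                      # zero differences are discarded
    k = min(pos, neg)
    # Under the null hypothesis the number of positive signs is Binomial(n, 1/2).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    return min(1.0, 2 * tail)

# Hypothetical paired differences (after minus before): 9 positive, 1 negative.
diffs = [1.2, 0.4, 2.1, 0.3, -0.5, 1.8, 0.9, 0.7, 1.1, 0.2]
print(sign_test(diffs))
```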

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability where probability expresses a degree of belief in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation that views probability as the limit of the relative frequency of an event after many trials. More concretely, analysis in Bayesian methods codifies prior knowledge in the form of a prior distribution.
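To make the role of the prior distribution concrete, the sketch below updates a Beta prior on a success probability after observing binomial data. The Beta-binomial model, the prior parameters, and the observed counts are assumptions chosen because the update has a closed form.

```python
def beta_binomial_update(a, b, successes, trials):
    """Posterior Beta parameters after observing `successes` out of `trials`."""
    return a + successes, b + (trials - successes)

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

# Prior belief: Beta(2, 2), centred on 0.5 but fairly uncertain.
prior = (2, 2)

# Hypothetical experiment: 7 successes in 10 trials.
posterior = beta_binomial_update(*prior, successes=7, trials=10)

print(beta_mean(*prior), beta_mean(*posterior))
```

The posterior mean lies between the prior mean and the observed proportion, showing how prior knowledge and data are combined.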

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection.
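In its standard form, AIC is computed as 2k minus twice the maximized log-likelihood, where k is the number of estimated parameters; lower values indicate a better trade-off between fit and complexity. The sketch below compares two candidate models whose names, parameter counts, and log-likelihood values are invented for illustration.

```python
def aic(num_params, max_log_likelihood):
    """Akaike information criterion: 2k - 2 ln(L_hat)."""
    return 2 * num_params - 2 * max_log_likelihood

# Hypothetical fitted models: (number of parameters, maximized log-likelihood).
candidates = {
    "simple": (3, -120.0),
    "complex": (5, -119.5),
}

scores = {name: aic(k, ll) for name, (k, ll) in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

Here the more complex model fits slightly better, but not by enough to offset the penalty for its two extra parameters.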

Empirical Bayes methods are procedures for statistical inference in which the prior probability distribution is estimated from the data. This approach stands in contrast to standard Bayesian methods, for which the prior distribution is fixed before any data are observed. Despite this difference in perspective, empirical Bayes may be viewed as an approximation to a fully Bayesian treatment of a hierarchical model wherein the parameters at the highest level of the hierarchy are set to their most likely values, instead of being integrated out. Empirical Bayes, also known as maximum marginal likelihood, represents a convenient approach for setting hyperparameters, but has been mostly supplanted by fully Bayesian hierarchical analyses since the 2000s with the increasing availability of well-performing computation techniques. It is still commonly used, however, for variational methods in deep learning, such as variational autoencoders, where latent variable spaces are high-dimensional.

Mathematical statistics is the application of probability theory, a branch of mathematics, to statistics, as opposed to techniques for collecting statistical data. Specific mathematical techniques which are used for this include mathematical analysis, linear algebra, stochastic analysis, differential equations, and measure theory.

This glossary of statistics and probability is a list of definitions of terms and concepts used in the mathematical sciences of statistics and probability, their sub-disciplines, and related fields. For additional related terms, see Glossary of mathematics and Glossary of experimental design.

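In statistics, the method of moments is a method of estimation of population parameters. The population moments are expressed as functions of the parameters of interest; these expressions are then set equal to the sample moments and solved for the parameters. The same principle is used to derive higher moments like skewness and kurtosis.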
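As an illustration, consider a gamma distribution with shape k and scale θ, whose mean is kθ and whose variance is kθ². Equating these to the sample mean and variance and solving gives the estimates computed below. The choice of the gamma distribution, the data, and the function name are assumptions made for this sketch.

```python
import statistics

def gamma_method_of_moments(sample):
    """Estimate gamma shape and scale by matching the first two moments."""
    m = statistics.mean(sample)
    v = statistics.pvariance(sample)   # second central sample moment (divides by n)
    shape = m * m / v                  # from mean = k * theta, var = k * theta**2
    scale = v / m
    return shape, scale

# Hypothetical positive-valued observations.
sample = [1.0, 2.0, 3.0, 4.0, 5.0]
shape, scale = gamma_method_of_moments(sample)
print(shape, scale)
```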

In statistics, the frequency or absolute frequency of an event is the number of times the observation has occurred or been recorded in an experiment or study. These frequencies are often depicted graphically or in tabular form.
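A short sketch of a frequency table follows, built with Python's `collections.Counter`. The observations are made up, and the relative frequency (count divided by the total) is added as a common companion quantity.

```python
from collections import Counter

# Hypothetical recorded observations.
observations = ["A", "B", "A", "C", "A", "B"]

# Absolute frequency: how many times each value was observed.
freq = Counter(observations)

# Relative frequency: absolute frequency divided by the number of observations.
relative = {value: count / len(observations) for value, count in freq.items()}

# Tabular display, most frequent value first.
for value, count in freq.most_common():
    print(f"{value}\t{count}\t{relative[value]:.2f}")
```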

Approximate Bayesian computation (ABC) constitutes a class of computational methods rooted in Bayesian statistics that can be used to estimate the posterior distributions of model parameters.
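The simplest member of this class is rejection ABC: draw a parameter from the prior, simulate data from the model, and keep the draw only if the simulated data are close enough to the observed data. The sketch below applies this to a coin-flip model with a uniform prior. The model, the tolerance, the number of draws, and the function name `abc_rejection` are all illustrative assumptions.

```python
import random

def abc_rejection(observed_heads, n_flips, n_draws=20000, tolerance=0, seed=0):
    """Approximate posterior draws for a coin's bias using rejection ABC."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_draws):
        p = rng.random()                                   # draw from Uniform(0, 1) prior
        simulated = sum(rng.random() < p for _ in range(n_flips))  # simulate the data
        if abs(simulated - observed_heads) <= tolerance:   # compare with observations
            accepted.append(p)
    return accepted

# Hypothetical observation: 14 heads in 20 flips.
draws = abc_rejection(observed_heads=14, n_flips=20)
posterior_mean = sum(draws) / len(draws)
print(len(draws), posterior_mean)
```

With a tolerance of zero the accepted draws follow the exact posterior, so the printed mean should be close to 15/22; a larger tolerance accepts more draws at the cost of a coarser approximation.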

Statistics deals with the collection, organization, analysis, interpretation, and presentation of data, and is used to solve mathematical problems. Conclusions drawn from statistical analysis typically carry uncertainty, as they represent the probability of an event occurring. Statistics is fundamental to disciplines of science that involve predicting or classifying events based on a large set of data and is an integral part of fields such as machine learning, bioinformatics, genomics, and economics.

Frequentist inference is a type of statistical inference based in frequentist probability, which treats “probability” in equivalent terms to “frequency” and draws conclusions from sample data by emphasizing the frequency or proportion of findings in the data. Frequentist inference underlies frequentist statistics, on which the well-established methodologies of statistical hypothesis testing and confidence intervals are founded.

In statistics, additive smoothing, also called Laplace smoothing or Lidstone smoothing, is a technique used to smooth count data, eliminating issues caused by certain values having 0 occurrences. Given a set of observation counts $x = (x_1, \ldots, x_d)$ from a $d$-dimensional multinomial distribution with $N$ trials, a "smoothed" version of the counts gives the estimator:

$$\hat{\theta}_i = \frac{x_i + \alpha}{N + \alpha d}, \qquad i = 1, \ldots, d,$$

where $\alpha > 0$ is the smoothing parameter.
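A minimal sketch of the smoothed estimator in its standard form, (count + α) / (N + α·d), where N is the total number of trials, d the number of categories, and α the smoothing parameter. The function name and the example counts are assumptions made for illustration.

```python
def additive_smoothing(counts, alpha=1.0):
    """Smoothed category probabilities: (x_i + alpha) / (N + alpha * d)."""
    n = sum(counts)        # total number of trials
    d = len(counts)        # number of categories
    return [(x + alpha) / (n + alpha * d) for x in counts]

# Hypothetical counts; the second category was never observed.
counts = [3, 0, 1]
smoothed = additive_smoothing(counts, alpha=1.0)
print(smoothed)
```

With α = 1 (Laplace smoothing) the unobserved category receives a small non-zero probability, and the estimates still sum to one.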

In probability theory, an experiment or trial is any procedure that can be infinitely repeated and has a well-defined set of possible outcomes, known as the sample space. An experiment is said to be random if it has more than one possible outcome, and deterministic if it has only one. A random experiment that has exactly two possible outcomes is known as a Bernoulli trial.

In marketing, Bayesian inference allows for decision making and market research evaluation under uncertainty and with limited data.