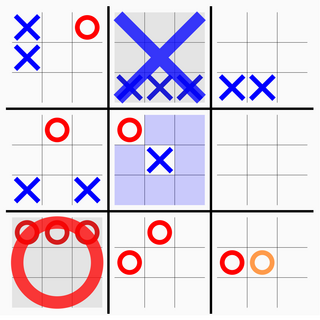

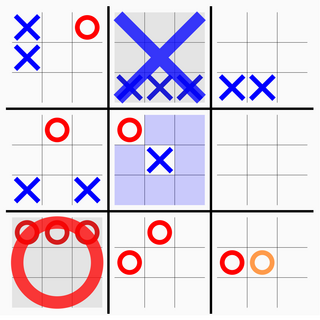

Minimax (sometimes written minmax) is a decision rule used in artificial intelligence, decision theory, game theory, statistics, and philosophy for minimizing the possible loss in a worst-case scenario. When dealing with gains, it is referred to as "maximin": maximizing the minimum gain. Originally formulated for several-player zero-sum game theory, covering both the cases where players take alternate moves and those where they make simultaneous moves, it has also been extended to more complex games and to general decision-making in the presence of uncertainty.
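As a rough illustration of the rule for alternating moves, the sketch below evaluates a small game tree. The nested-list encoding (an integer is a leaf payoff to the maximizing player, a list is a position with children), the function name `minimax`, and the example tree are all assumptions made for this example.

```python
from typing import Union

Tree = Union[int, list]  # a leaf payoff, or a list of child subtrees


def minimax(node: Tree, maximizing: bool) -> int:
    """Return the value of `node` when both sides play optimally."""
    if isinstance(node, int):
        # Leaf: payoff from the maximizing player's point of view.
        return node
    values = [minimax(child, not maximizing) for child in node]
    # The maximizer picks the best child, the minimizer the worst.
    return max(values) if maximizing else min(values)


# Hypothetical two-ply game: the maximizer picks a branch,
# then the minimizer picks a leaf inside that branch.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(minimax(tree, maximizing=True))  # 3
```

The minimizer would reduce the three branches to 3, 2, and 2, so the maximizer's best guaranteed outcome is 3: the largest of the worst cases.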

Tic-tac-toe, noughts and crosses, or Xs and Os is a paper-and-pencil game for two players who take turns marking the spaces in a three-by-three grid with X or O. The player who succeeds in placing three of their marks in a horizontal, vertical, or diagonal row is the winner. It is a solved game, with a forced draw assuming best play from both players.
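The forced draw can be checked by exhaustive search. The following is a minimal sketch, assuming a board encoded as a nine-character string with `.` for empty cells; the names `winner` and `solve` are chosen for this example only.

```python
from functools import lru_cache

# Index triples for the three rows, three columns, and two diagonals.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that mark has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def solve(board: str, player: str) -> int:
    """Game value from X's perspective: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # full board with no winner
    nxt = "O" if player == "X" else "X"
    values = [solve(board[:i] + player + board[i + 1:], nxt)
              for i, cell in enumerate(board) if cell == "."]
    return max(values) if player == "X" else min(values)


print(solve("." * 9, "X"))  # 0
```

A value of 0 from the empty board means neither player can force a win under best play.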

A solved game is a game whose outcome can be correctly predicted from any position, assuming that both players play perfectly. This concept is usually applied to abstract strategy games, and especially to games with full information and no element of chance; solving such a game may use combinatorial game theory and/or computer assistance.

Computer chess includes both hardware and software capable of playing chess. It lets players practice even in the absence of human opponents, and also provides opportunities for analysis, entertainment, and training. Computer chess applications that play at the level of a chess grandmaster or higher are available on hardware ranging from supercomputers to smartphones. Standalone chess-playing machines are also available. Stockfish, Leela Chess Zero, GNU Chess, Fruit, and other free and open-source applications are available for various platforms.

Alpha–beta pruning is a search algorithm that seeks to decrease the number of nodes that are evaluated by the minimax algorithm in its search tree. It is an adversarial search algorithm used commonly for machine playing of two-player combinatorial games. It stops evaluating a move when at least one possibility has been found that proves the move to be worse than a previously examined move. Such moves need not be evaluated further. When applied to a standard minimax tree, it returns the same move as minimax would, but prunes away branches that cannot possibly influence the final decision.
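The pruning rule can be sketched on a small tree as follows. The nested-list tree encoding, the function name `alphabeta`, and the `visited` list (used only to show which leaves are actually evaluated) are assumptions of this example.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of `node`, skipping branches that cannot matter."""
    if visited is None:
        visited = []
    if isinstance(node, int):
        visited.append(node)  # record every leaf that gets evaluated
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above will never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return best


# Hypothetical two-ply tree with nine leaves.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
visited = []
print(alphabeta(tree, True, visited=visited))  # 3
print(visited)  # [3, 12, 8, 2, 14, 5, 2]
```

In the second branch the search stops after seeing the leaf 2: the minimizer can hold that branch to at most 2, which is already worse for the maximizer than the 3 guaranteed by the first branch, so the leaves 4 and 6 are never evaluated.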

The horizon effect, also known as the horizon problem, is a problem in artificial intelligence that arises because, in many games, the number of possible states or positions is immense and computers can feasibly search only a small portion of them, typically a few plies down the game tree. Thus, a computer searching only five plies may make a detrimental move whose consequences it cannot see, because they occur beyond the depth it searches.

Combinatorial game theory is a branch of mathematics and theoretical computer science that typically studies sequential games with perfect information. Study has been largely confined to two-player games in which the players take turns changing a position in defined ways, or moves, to achieve a defined winning condition. Combinatorial game theory has not traditionally studied games of chance or those that use imperfect or incomplete information, favoring games that offer perfect information, in which the state of the game and the set of available moves are always known to both players. However, as mathematical techniques advance, the types of game that can be mathematically analyzed expand, so the boundaries of the field are ever changing. Scholars generally define what they mean by a "game" at the beginning of a paper, and these definitions often vary, as they are specific to the game being analyzed and are not meant to represent the entire scope of the field.

Combinatorial game theory measures game complexity in several ways:

- State-space complexity,

- Game tree size,

- Decision complexity,

- Game-tree complexity,

- Computational complexity.
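Two of these measures can be computed directly for a game as small as tic-tac-toe. The sketch below assumes the usual readings of the terms: state-space complexity as the number of legal positions reachable from the start, and game tree size as the number of complete games (leaf nodes of the full game tree). The board encoding and the function names are assumptions of this example.

```python
# Index triples for the three rows, three columns, and two diagonals.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that mark has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def explore(board: str, player: str, seen: set) -> int:
    """Count complete games from `board`; record each position in `seen`."""
    seen.add(board)
    if winner(board) or "." not in board:
        return 1  # terminal position: exactly one finished game ends here
    nxt = "O" if player == "X" else "X"
    return sum(explore(board[:i] + player + board[i + 1:], nxt, seen)
               for i, cell in enumerate(board) if cell == ".")


seen = set()
games = explore("." * 9, "X", seen)
print(len(seen), games)  # 5478 255168
```

The gap between the two numbers shows why the measures are distinct: many different move orders pass through the same position, so the tree is far larger than the state space.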

In combinatorial game theory, the strategy-stealing argument is a general argument that shows, for many two-player games, that the second player cannot have a guaranteed winning strategy. The strategy-stealing argument applies to any symmetric game in which an extra move can never be a disadvantage. A key property of a strategy-stealing argument is that it proves that the first player can win (or at least draw) the game without actually constructing such a strategy. So, although it might prove the existence of a winning strategy, the proof gives no information about what that strategy is.

In game theory, an extensive-form game is a specification of a game allowing for the explicit representation of a number of key aspects, like the sequencing of players' possible moves, their choices at every decision point, the information each player has about the other player's moves when they make a decision, and their payoffs for all possible game outcomes. Extensive-form games also allow for the representation of incomplete information in the form of chance events modeled as "moves by nature". Extensive-form representations differ from normal-form in that they provide a more complete description of the game in question, whereas normal-form simply boils down the game into a payoff matrix.

Negamax search is a variant form of minimax search that relies on the zero-sum property of a two-player game.
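The simplification rests on the identity max(a, b) = -min(-a, -b): in a zero-sum game, a position's value to one player is the negation of its value to the other, so a single maximizing routine can serve both sides. A minimal sketch, assuming a nested-list tree whose leaves are payoffs to the root player, with a hypothetical `color` parameter marking whose turn it is:

```python
def negamax(node, color: int) -> int:
    """Value of `node` from the viewpoint of the player to move.

    `color` is +1 when the root player is to move and -1 otherwise.
    """
    if isinstance(node, int):
        # Leaves store the root player's payoff; flip it for the opponent.
        return color * node
    # Each child is scored from the opponent's viewpoint, so negate it.
    return max(-negamax(child, -color) for child in node)


# Hypothetical two-ply tree; the root player moves first.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(negamax(tree, 1))  # 3
```

The result matches what a two-branch minimax would return for the same tree, but the code needs no separate maximizing and minimizing cases.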

In game theory, a sequential game is a game where one player chooses their action before the others choose theirs. The other players must have information on the first player's choice; otherwise, the difference in time would have no strategic effect. Sequential games are governed by the time axis and represented in the form of decision trees.

An and–or tree is a graphical representation of the reduction of problems to conjunctions and disjunctions of subproblems.

In computer science, B* is a best-first graph search algorithm that finds the least-cost path from a given initial node to any goal node. First published by Hans Berliner in 1979, it is related to the A* search algorithm.

Zillions of Games is a commercial general game playing system developed by Jeff Mallett and Mark Lefler in 1998. Game rules are specified in the Zillions rule language, which is written as S-expressions. The system was designed to handle mostly abstract strategy board games and puzzles. After parsing the rules of a game, the system's artificial intelligence can automatically play one or more players. It treats puzzles as solitaire games, and its AI can be used to solve them.

In combinatorial game theory, a two-player deterministic perfect information turn-based game is a first-player-win if with perfect play the first player to move can always force a win. Similarly, a game is second-player-win if with perfect play the second player to move can always force a win. With perfect play, if neither side can force a win, the game is a draw.
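For small games, this classification can be computed by backward induction. The sketch below uses a hypothetical subtraction game chosen only for illustration: players alternately remove 1, 2, or 3 stones from a pile, and whoever takes the last stone wins. This game has no draws, so every pile size is either a first-player-win or a second-player-win.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mover_wins(stones: int) -> bool:
    """True if the player to move can force a win with `stones` left."""
    # A position is winning if some move leads to a position that is
    # losing for the opponent. With 0 stones there is no move, so the
    # player to move has already lost.
    return any(not mover_wins(stones - take)
               for take in (1, 2, 3) if take <= stones)


def classify(stones: int) -> str:
    """Label the game that starts with `stones` in the pile."""
    return "first-player-win" if mover_wins(stones) else "second-player-win"


print([n for n in range(1, 13) if not mover_wins(n)])  # [4, 8, 12]
print(classify(10))  # first-player-win
```

Under these assumed rules, the second player wins exactly when the starting pile is a multiple of 4, since any move by the first player can be answered by restoring a multiple of 4.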

In computer science, Monte Carlo tree search (MCTS) is a heuristic search algorithm for some kinds of decision processes, most notably those employed in software that plays board games. In that context, MCTS is used to search the game tree and estimate which moves are most promising.

Ultimate tic-tac-toe is a board game composed of nine tic-tac-toe boards arranged in a 3 × 3 grid. Players take turns playing on the smaller tic-tac-toe boards until one of them wins on the larger board. Compared to traditional tic-tac-toe, strategy in this game is conceptually more difficult and has proven more challenging for computers.

Tic-tac-toe is an instance of an m,n,k-game, where two players alternate taking turns on an m×n board until one of them gets k in a row. Harary's generalized tic-tac-toe is an even broader generalization. The game can also be generalized as an n^d game, played on a d-dimensional board with n cells per side. It can be generalized even further by playing on an arbitrary hypergraph, where rows are hyperedges and cells are vertices.
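The winning condition of an m,n,k-game can be sketched as a single check over all cells and the four line directions. The board encoding (a sequence of equal-length strings, one per row, with `.` for empty cells) and the function name `has_k_in_row` are assumptions of this example.

```python
from typing import Sequence

# Right, down, down-right, and down-left cover every line orientation.
DIRECTIONS = ((0, 1), (1, 0), (1, 1), (1, -1))


def has_k_in_row(board: Sequence[str], mark: str, k: int) -> bool:
    """True if `mark` occupies k consecutive cells in any direction."""
    rows, cols = len(board), len(board[0])
    for r in range(rows):
        for c in range(cols):
            for dr, dc in DIRECTIONS:
                end_r, end_c = r + (k - 1) * dr, c + (k - 1) * dc
                # Skip lines that would run off the board.
                if not (0 <= end_r < rows and 0 <= end_c < cols):
                    continue
                if all(board[r + i * dr][c + i * dc] == mark
                       for i in range(k)):
                    return True
    return False


# Hypothetical 4x4 position with k = 3.
board = ["X..O",
         ".X.O",
         "..X.",
         "...."]
print(has_k_in_row(board, "X", 3))  # True
print(has_k_in_row(board, "O", 3))  # False
```

Ordinary tic-tac-toe corresponds to a 3×3 board with k = 3.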