Quantum decoherence is the loss of quantum coherence, the process in which a system's behaviour changes from that which can be explained by quantum mechanics to that which can be explained by classical mechanics. Originating in attempts to extend the understanding of quantum mechanics, the theory has developed in several directions, and experimental studies have confirmed some of the key issues. Quantum computing relies on quantum coherence and is one of the primary practical applications of the concept.

In theoretical physics, the term renormalization group (RG) refers to a formal apparatus that allows systematic investigation of the changes of a physical system as viewed at different scales. In particle physics, it reflects the changes in the underlying force laws as the energy scale at which physical processes occur varies, energy/momentum and resolution distance scales being effectively conjugate under the uncertainty principle.
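As one concrete illustration of viewing a system at different scales, here is a minimal sketch. It assumes the one-dimensional Ising chain with dimensionless coupling K = J/(k_B T), a statistical-mechanics example that is not taken from the text above. Summing over every second spin gives the standard decimation recursion K' = ½ ln cosh(2K). The function names `decimate` and `rg_flow` are illustrative.

```python
import math


def decimate(K):
    """One RG step for the 1D Ising chain: sum over every second spin.

    Returns the effective nearest-neighbor coupling of the remaining spins.
    """
    return 0.5 * math.log(math.cosh(2.0 * K))


def rg_flow(K0, steps):
    """Return the couplings K0, K1, ..., K_steps seen at successively larger scales."""
    flow = [K0]
    for _ in range(steps):
        flow.append(decimate(flow[-1]))
    return flow
```

Calling `rg_flow(1.0, 5)` yields a strictly decreasing sequence: in this example the coupling flows toward the disordered fixed point K = 0, consistent with the absence of a finite-temperature transition in one dimension.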

In mathematical physics, a lattice model is a mathematical model of a physical system that is defined on a lattice, as opposed to a continuum such as the continuum of space or spacetime. Lattice models originally arose in condensed matter physics, where the atoms of a crystal automatically form a lattice. Lattice models are now popular in theoretical physics for several reasons. Some models are exactly solvable, and thus offer insight into physics beyond what can be learned from perturbation theory. Lattice models are also well suited to the methods of computational physics, as the discretization of any continuum model automatically turns it into a lattice model. The exact solutions of many of these models include solitons. Techniques for solving them include the inverse scattering transform and the method of Lax pairs, the Yang–Baxter equation, and quantum groups. The solution of these models has given insights into the nature of phase transitions, magnetization and scaling behaviour, as well as into the nature of quantum field theory. Physical lattice models frequently occur as an approximation to a continuum theory, either to give the theory an ultraviolet cutoff that prevents divergences or to perform numerical computations. A widely studied example is lattice QCD, a discretization of quantum chromodynamics. Digital physics, however, considers nature to be fundamentally discrete at the Planck scale, which imposes an upper limit on the density of information, also known as the holographic principle. More generally, lattice gauge theory and lattice field theory are areas of study. Lattice models are also used to simulate the structure and dynamics of polymers.

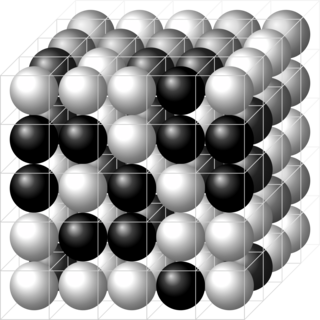

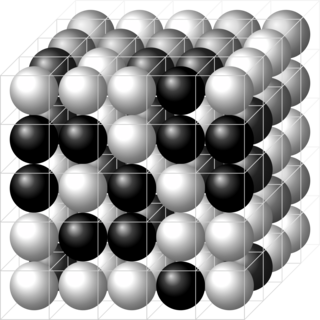

The Ising model, named after the physicists Ernst Ising and Wilhelm Lenz, is a mathematical model of ferromagnetism in statistical mechanics. The model consists of discrete variables that represent magnetic dipole moments of atomic "spins" that can be in one of two states. The spins are arranged in a graph, usually a lattice, allowing each spin to interact with its neighbors. Neighboring spins that agree have a lower energy than those that disagree; the system tends to the lowest energy but heat disturbs this tendency, thus creating the possibility of different structural phases. The model allows the identification of phase transitions as a simplified model of reality. The two-dimensional square-lattice Ising model is one of the simplest statistical models to show a phase transition.
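The competition between energy and heat described above can be sketched in a few lines. This is a minimal sketch under stated assumptions, not a reference implementation: it assumes a square lattice with periodic boundaries, a uniform coupling `J`, no external field, and the Metropolis update rule (a standard sampling technique that the text does not mention). The names `ising_energy` and `metropolis_sweep` are illustrative.

```python
import math
import random


def ising_energy(spins, J=1.0):
    """Energy E = -J * sum of s_i * s_j over nearest-neighbor bonds (periodic boundaries)."""
    n = len(spins)
    energy = 0.0
    for i in range(n):
        for j in range(n):
            # Count each bond once by looking only at the right and down neighbors.
            right = spins[i][(j + 1) % n]
            down = spins[(i + 1) % n][j]
            energy -= J * spins[i][j] * (right + down)
    return energy


def metropolis_sweep(spins, beta, J=1.0, rng=random):
    """Attempt n*n single-spin flips in place at inverse temperature beta."""
    n = len(spins)
    for _ in range(n * n):
        i, j = rng.randrange(n), rng.randrange(n)
        s = spins[i][j]
        neighbors = (spins[(i + 1) % n][j] + spins[(i - 1) % n][j]
                     + spins[i][(j + 1) % n] + spins[i][(j - 1) % n])
        delta_e = 2.0 * J * s * neighbors  # energy change if this spin flips
        # Always accept flips that lower the energy; otherwise accept with
        # the Boltzmann probability exp(-beta * delta_e).
        if delta_e <= 0.0 or rng.random() < math.exp(-beta * delta_e):
            spins[i][j] = -s
```

At large `beta` (low temperature) an aligned lattice stays aligned, because every flip raises the energy; at small `beta` thermal noise dominates and flips are accepted freely.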

A conformal field theory (CFT) is a quantum field theory that is invariant under conformal transformations. In two dimensions, there is an infinite-dimensional algebra of local conformal transformations, and conformal field theories can sometimes be exactly solved or classified.

In physics and probability theory, mean-field theory (MFT) or self-consistent field theory studies the behavior of high-dimensional random (stochastic) models by studying a simpler model that approximates the original by averaging over degrees of freedom. Such models consider many individual components that interact with each other.
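To make "averaging over degrees of freedom" concrete, the sketch below assumes an Ising ferromagnet (an illustrative choice not made in the text) in which each spin feels only the average magnetization m of its z neighbors. This leads to the self-consistency condition m = tanh(β(zJm + h)), solved here by plain fixed-point iteration. The function name and the parameters `z`, `m0`, `tol` and `max_iter` are hypothetical.

```python
import math


def mean_field_magnetization(beta, J=1.0, z=4, h=0.0, m0=0.5,
                             tol=1e-12, max_iter=10000):
    """Solve m = tanh(beta * (z * J * m + h)) by fixed-point iteration.

    beta: inverse temperature, z: number of neighbors per spin,
    h: external field, m0: starting guess for the magnetization.
    """
    m = m0
    for _ in range(max_iter):
        m_new = math.tanh(beta * (z * J * m + h))
        if abs(m_new - m) < tol:
            return m_new
        m = m_new
    return m  # not converged within max_iter; best available estimate
```

With `h = 0`, the iteration settles at m = 0 when `beta * z * J < 1` and at a non-zero magnetization when `beta * z * J > 1`. Convergence becomes slow close to `beta * z * J = 1`.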

In particle and condensed matter physics, Goldstone bosons or Nambu–Goldstone bosons (NGBs) are bosons that appear necessarily in models exhibiting spontaneous breakdown of continuous symmetries. They were discovered by Yoichiro Nambu in particle physics within the context of the BCS superconductivity mechanism, and subsequently elucidated by Jeffrey Goldstone, and systematically generalized in the context of quantum field theory. In condensed matter physics such bosons are quasiparticles and are known as Anderson–Bogoliubov modes.

In physics, mathematics and statistics, scale invariance is a feature of objects or laws that do not change if scales of length, energy, or other variables, are multiplied by a common factor, and thus represent a universality.

In quantum mechanics, superselection extends the concept of selection rules.

The classical XY model is a lattice model of statistical mechanics. In general, the XY model can be seen as a specialization of Stanley's n-vector model for n = 2.
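The specialization to n = 2 can be written out explicitly. The following uses the standard nearest-neighbor form of the n-vector Hamiltonian; the symbols J (coupling) and θ_i (spin angle) are notation introduced here and do not appear in the text above.

$$
H_{n\text{-vector}} = -J \sum_{\langle i,j \rangle} \mathbf{s}_i \cdot \mathbf{s}_j ,
\qquad \mathbf{s}_i \in \mathbb{R}^n,\ \ |\mathbf{s}_i| = 1
$$

$$
n = 2:\quad \mathbf{s}_i = (\cos\theta_i, \sin\theta_i)
\;\;\Longrightarrow\;\;
H_{XY} = -J \sum_{\langle i,j \rangle} \cos(\theta_i - \theta_j)
$$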

Landau theory in physics is a theory that Lev Landau introduced in an attempt to formulate a general theory of continuous phase transitions. It can also be adapted to systems under externally-applied fields, and used as a quantitative model for discontinuous transitions. Although the theory has now been superseded by the renormalization group and scaling theory formulations, it remains an exceptionally broad and powerful framework for phase transitions, and the associated concept of the order parameter as a descriptor of the essential character of the transition has proven transformative.
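As a worked illustration, consider the simplest textbook form of the Landau free energy for a scalar order parameter m. The coefficients a, b, the critical temperature T_c and the external field h are notation assumed here, not taken from the text.

$$
F(m, T) = F_0 + a\,(T - T_c)\,m^2 + b\,m^4 - h\,m , \qquad a,\ b > 0
$$

Minimizing at zero field gives

$$
\left.\frac{\partial F}{\partial m}\right|_{h=0} = 2a\,(T - T_c)\,m + 4b\,m^3 = 0
\;\;\Longrightarrow\;\;
m = 0 \ \ (T > T_c), \qquad
m = \pm\sqrt{\frac{a\,(T_c - T)}{2b}} \ \ (T < T_c)
$$

so in this form the order parameter grows continuously from zero below T_c, with the mean-field exponent 1/2.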

In statistical mechanics, the correlation function is a measure of the order in a system, as characterized by a mathematical correlation function. Correlation functions describe how microscopic variables, such as spin and density, at different positions are related. More specifically, correlation functions measure quantitatively the extent to which microscopic variables fluctuate together, on average, across space and/or time. Correlation does not imply causation: a non-zero correlation between two points in space or time does not mean that there is a direct causal link between them. A correlation can exist without any causal relationship, either by coincidence or because of other underlying factors, known as confounding variables, that cause both points to covary statistically.
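A minimal sketch of one such quantity, assuming a single one-dimensional configuration of ±1 spins with periodic boundaries, where averages over positions stand in for the thermal average. It computes the connected correlation C(r) = ⟨s_i s_{i+r}⟩ − ⟨s⟩². The function name is illustrative.

```python
def connected_correlation(spins, r):
    """Connected spin-spin correlation at separation r on a periodic chain.

    Returns <s_i * s_(i+r)> - <s>**2, averaging over all sites i.
    """
    n = len(spins)
    mean = sum(spins) / n
    pair_average = sum(spins[i] * spins[(i + r) % n] for i in range(n)) / n
    return pair_average - mean * mean
```

For a fully aligned chain the connected correlation vanishes at every separation, because nothing fluctuates. For an alternating chain it oscillates between −1 (odd r) and +1 (even r).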

In the renormalization group analysis of phase transitions in physics, a critical dimension is the dimensionality of space at which the character of the phase transition changes. Below the lower critical dimension there is no phase transition. Above the upper critical dimension the critical exponents of the theory become the same as those in mean-field theory. An elegant criterion to obtain the critical dimension within mean-field theory is due to V. Ginzburg.

Critical exponents describe the behavior of physical quantities near continuous phase transitions. It is believed, though not proven, that they are universal, i.e. they do not depend on the details of the physical system, but only on some of its general features. For instance, for ferromagnetic systems, the critical exponents depend only on:

In quantum field theory and statistical mechanics, the Hohenberg–Mermin–Wagner theorem or Mermin–Wagner theorem states that continuous symmetries cannot be spontaneously broken at finite temperature in systems with sufficiently short-range interactions in dimensions d ≤ 2. Intuitively, this means that long-range fluctuations can be created with little energy cost, and since they increase the entropy, they are favored.

The Coleman–Weinberg model represents quantum electrodynamics of a scalar field in four dimensions. The Lagrangian for the model is

In statistical mechanics and quantum field theory, a dangerously irrelevant operator is an operator which is irrelevant at a renormalization group fixed point, yet affects the infrared (IR) physics significantly.

In fluid dynamics, eddy diffusion, eddy dispersion, or turbulent diffusion is a process by which fluid substances mix together due to eddy motion. These eddies can vary widely in size, from subtropical ocean gyres down to the small Kolmogorov microscales, and occur as a result of turbulence. The theory of eddy diffusion was first developed by Sir Geoffrey Ingram Taylor.

In statistical mechanics, Lee–Yang theory, sometimes also known as Yang–Lee theory, is a scientific theory which seeks to describe phase transitions in large physical systems in the thermodynamic limit based on the properties of small, finite-size systems. The theory revolves around the complex zeros of partition functions of finite-size systems and how these may reveal the existence of phase transitions in the thermodynamic limit.
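The idea of reading off zeros from a small system can be sketched as follows, assuming a ferromagnetic Ising ring with an even number of spins (an illustrative choice, not taken from the text). Writing the partition function as a polynomial P(z) in the fugacity-like variable z = exp(−2βh), the sketch counts the zeros of P on the unit circle |z| = 1, which is where the Lee–Yang circle theorem places them for ferromagnetic couplings. The function names and the sampling-based zero count are assumptions of this sketch.

```python
import itertools
import math


def fugacity_coefficients(n_spins, K):
    """Coefficients c_k of P(z) = sum_k c_k * z**k for an Ising ring.

    K = beta * J is the dimensionless coupling; k counts the down spins.
    """
    coeffs = [0.0] * (n_spins + 1)
    for config in itertools.product((1, -1), repeat=n_spins):
        bond_sum = sum(config[i] * config[(i + 1) % n_spins]
                       for i in range(n_spins))
        coeffs[config.count(-1)] += math.exp(K * bond_sum)
    return coeffs


def zeros_on_unit_circle(coeffs, samples=7200):
    """Count sign changes of P around |z| = 1 (simple zeros only).

    For symmetric coefficients (c_k == c_(n-k)) and even degree n, the
    quantity exp(-i*n*theta/2) * P(exp(i*theta)) is real, so each sign
    change along the circle marks one zero of P.
    """
    n = len(coeffs) - 1
    if n % 2 != 0:
        raise ValueError("this sketch assumes an even number of spins")

    def g(theta):
        return sum(c * math.cos((k - n / 2) * theta)
                   for k, c in enumerate(coeffs))

    values = [g(2.0 * math.pi * (j + 0.5) / samples) for j in range(samples)]
    # values[j - 1] wraps around to the last sample when j == 0.
    return sum(1 for j in range(samples) if values[j] * values[j - 1] < 0.0)
```

For a ring of four spins at K = 0.5, the polynomial has degree four and the count returns four, so every zero lies on the unit circle. As the system grows, such zeros become denser on the circle, which is how they can signal a transition in the thermodynamic limit.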

In quantum field theory, the Polyakov loop is the thermal analogue of the Wilson loop, acting as an order parameter for confinement in pure gauge theories at nonzero temperatures. In particular, it is a Wilson loop that winds around the compactified Euclidean temporal direction of a thermal quantum field theory. It indicates confinement because its vacuum expectation value must vanish in the confined phase due to its non-invariance under center gauge transformations. This also follows from the fact that the expectation value is related to the free energy of individual quarks, which diverges in this phase. Introduced by Alexander M. Polyakov in 1975, Polyakov loops can also be used to study the potential between pairs of quarks at nonzero temperatures.