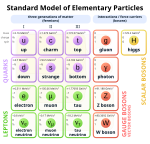

In theoretical physics, quantum field theory (QFT) is a framework that combines classical field theory, special relativity, and quantum mechanics. QFT is used in particle physics to construct physical models of subatomic particles and in condensed matter physics to construct models of quasiparticles. The current Standard Model of particle physics is based on quantum field theory.

Supersymmetry is a theoretical framework in physics that suggests the existence of a symmetry between particles with integer spin (bosons) and particles with half-integer spin (fermions). It proposes that for every known particle, there exists a partner particle with different spin properties. There have been multiple experiments on supersymmetry that have failed to provide evidence that it exists in nature. If evidence is found, supersymmetry could help explain certain phenomena, such as the nature of dark matter and the hierarchy problem in particle physics.

Technicolor theories are models of physics beyond the Standard Model that address electroweak gauge symmetry breaking, the mechanism through which W and Z bosons acquire masses. Early technicolor theories were modelled on quantum chromodynamics (QCD), the "color" theory of the strong nuclear force, which inspired their name.

The Friedmann–Lemaître–Robertson–Walker (FLRW) metric is a metric based on an exact solution of the Einstein field equations of general relativity. The metric describes a homogeneous, isotropic, expanding universe that is path-connected, but not necessarily simply connected. The general form of the metric follows from the geometric properties of homogeneity and isotropy; Einstein's field equations are only needed to derive the scale factor of the universe as a function of time. Depending on geographical or historical preferences, the names of the four scientists (Alexander Friedmann, Georges Lemaître, Howard P. Robertson and Arthur Geoffrey Walker) are variously grouped as Friedmann, Friedmann–Robertson–Walker (FRW), Robertson–Walker (RW), or Friedmann–Lemaître (FL). This model is sometimes called the Standard Model of modern cosmology, although that description is also associated with the further developed Lambda-CDM model. The FLRW model was developed independently by the named authors in the 1920s and 1930s.
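
For reference, one common way of writing the metric is sketched below, in reduced-circumference polar coordinates. The symbols are not defined in the text above and are assumptions of this sketch: $a(t)$ is the scale factor, $k \in \{-1, 0, +1\}$ labels the spatial curvature, $c$ is the speed of light, and the signature is $(-,+,+,+)$. The second line is the first Friedmann equation, which is what Einstein's field equations add: a dynamical law for $a(t)$ given the energy density $\rho$ and the cosmological constant $\Lambda$.

```latex
ds^2 = -c^2\,dt^2 + a(t)^2\left[\frac{dr^2}{1 - k r^2}
       + r^2\left(d\theta^2 + \sin^2\theta\,d\phi^2\right)\right]

\left(\frac{\dot a}{a}\right)^2 = \frac{8\pi G}{3}\,\rho - \frac{k c^2}{a^2} + \frac{\Lambda c^2}{3}
```

The bracketed spatial part is fixed by homogeneity and isotropy alone; only the time dependence of $a(t)$ requires the field equations.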

In theoretical physics, supergravity is a modern field theory that combines the principles of supersymmetry and general relativity; this is in contrast to non-gravitational supersymmetric theories such as the Minimal Supersymmetric Standard Model. Supergravity is the gauge theory of local supersymmetry. Because the supersymmetry (SUSY) generators combine with the Poincaré algebra to form a superalgebra, called the super-Poincaré algebra, treating supersymmetry as a gauge theory makes gravity arise in a natural way.
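
The link between local supersymmetry and gravity can be illustrated with the central relation of the super-Poincaré algebra. The form below is a sketch for the simplest case (four dimensions, a single supersymmetry, two-component spinor notation); the normalization factor and index conventions are assumptions and differ between textbooks. Here $Q_\alpha$ and $\bar Q_{\dot\beta}$ are the supersymmetry generators, $P_\mu$ generates spacetime translations, and $\sigma^\mu$ are the Pauli matrices extended by the identity.

```latex
\{ Q_\alpha , \bar Q_{\dot\beta} \} = 2\,\sigma^{\mu}_{\alpha\dot\beta}\,P_\mu ,
\qquad
\{ Q_\alpha , Q_\beta \} = 0 ,
\qquad
[\,P_\mu , Q_\alpha\,] = 0
```

Two successive supersymmetry transformations therefore compose into a translation. If the supersymmetry parameter is allowed to vary from point to point, the resulting translations are position-dependent as well, and invariance under position-dependent translations is the symmetry underlying general relativity.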

Brane cosmology refers to several theories in particle physics and cosmology related to string theory, superstring theory and M-theory.

In particle physics, the hypothetical dilaton particle is a particle of a scalar field that appears in theories with extra dimensions when the volume of the compactified dimensions varies. It appears as a radion in Kaluza–Klein theory's compactifications of extra dimensions. In Brans–Dicke theory of gravity, Newton's constant is not presumed to be constant but instead 1/G is replaced by a scalar field and the associated particle is the dilaton.

In theoretical physics, the Einstein–Cartan theory, also known as the Einstein–Cartan–Sciama–Kibble theory, is a classical theory of gravitation, one of several alternatives to general relativity. The theory was first proposed by Élie Cartan in 1922.

In theoretical physics, Euclidean quantum gravity is a version of quantum gravity. It seeks to use the Wick rotation to describe the force of gravity according to the principles of quantum mechanics.
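
Schematically, the Wick rotation replaces real time $t$ by imaginary time $\tau$, which turns the oscillatory weight of the path integral into a damped one. The sketch below assumes the sign convention $t = -i\tau$; $S$ is the Lorentzian action, $S_E$ the Euclidean action, and $\mathcal{D}g$ a formal measure over metrics, none of which are specified in the text above.

```latex
t = -i\tau
\quad\Longrightarrow\quad
e^{\,iS[g]/\hbar} \;\longrightarrow\; e^{-S_E[g]/\hbar},
\qquad
Z = \int \mathcal{D}g \; e^{-S_E[g]/\hbar}
```

The integral then runs over metrics of Euclidean (positive-definite) signature rather than Lorentzian ones.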

In string theory, the string theory landscape is the collection of possible false vacua, together comprising a collective "landscape" of choices of parameters governing compactifications.

In theoretical physics, massive gravity is a theory of gravity that modifies general relativity by endowing the graviton with a nonzero mass. In the classical theory, this means that gravitational waves obey a massive wave equation and hence travel at speeds below the speed of light.
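
The statement about propagation speed follows from the dispersion relation of a massive wave equation. The sketch below assumes linearized waves on a flat background, with $m$ the hypothetical graviton mass, $k$ the wavenumber, and $\omega$ the angular frequency.

```latex
\omega^2 = c^2 k^2 + \frac{m^2 c^4}{\hbar^2},
\qquad
v_g = \frac{d\omega}{dk} = \frac{c^2 k}{\omega}
    = \frac{c}{\sqrt{1 + \left(\dfrac{m c}{\hbar k}\right)^{2}}} \;<\; c
```

For $m = 0$ the group velocity reduces to $c$. For $m \neq 0$ it depends on $k$, so low-frequency components of a signal travel more slowly than high-frequency ones.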

In theoretical physics, a scalar–tensor theory is a field theory that includes both a scalar field and a tensor field to represent a certain interaction. For example, the Brans–Dicke theory of gravitation uses both a scalar field and a tensor field to mediate the gravitational interaction.
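
As an illustration, the Brans–Dicke action is commonly written in the following form. This is a sketch in units with $c = 1$; $\phi$ is the scalar field that takes the place of $1/G$, $\omega$ is a dimensionless coupling constant, $g_{\mu\nu}$ is the metric (the tensor field) with Ricci scalar $R$, and $S_m$ denotes the matter action. Sign and normalization conventions vary between references.

```latex
S = \frac{1}{16\pi} \int d^4x \, \sqrt{-g}
    \left[ \phi R - \frac{\omega}{\phi}\, g^{\mu\nu}\,
    \partial_\mu \phi \, \partial_\nu \phi \right] + S_m
```

Setting $\phi$ to the constant $1/G$ removes the kinetic term and leaves the Einstein–Hilbert action of general relativity.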

Physics beyond the Standard Model (BSM) refers to the theoretical developments needed to explain the deficiencies of the Standard Model, such as the inability to explain the fundamental parameters of the Standard Model, the strong CP problem, neutrino oscillations, matter–antimatter asymmetry, and the nature of dark matter and dark energy. Another problem lies within the mathematical framework of the Standard Model itself: the Standard Model is inconsistent with general relativity, and one or both theories break down under certain conditions, such as spacetime singularities like the Big Bang and black hole event horizons.

In physics, naturalness is the aesthetic property that the dimensionless ratios between free parameters or physical constants appearing in a physical theory should take values "of order 1" and that free parameters are not fine-tuned. That is, a natural theory would have parameter ratios with values like 2.34 rather than 234000 or 0.000234.

In physics, a quantum vortex represents a quantized flux circulation of some physical quantity. In most cases, quantum vortices are a type of topological defect exhibited in superfluids and superconductors. The existence of quantum vortices was first predicted by Lars Onsager in 1949 in connection with superfluid helium. Onsager reasoned that quantization of vorticity is a direct consequence of the existence of a superfluid order parameter as a spatially continuous wavefunction. Onsager also pointed out that quantum vortices describe the circulation of superfluid and conjectured that their excitations are responsible for superfluid phase transitions. Richard Feynman developed these ideas further in 1955, and in 1957 Alexei Alexeyevich Abrikosov applied them to describe the magnetic phase diagram of type-II superconductors. Earlier, in 1935, Fritz London had published closely related work on magnetic flux quantization in superconductors. London's fluxoid can also be viewed as a quantum vortex.
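
Onsager's argument can be sketched in a few lines. The notation is an assumption of this sketch: $\psi$ is the superfluid order parameter with amplitude $\sqrt{\rho}$ and phase $\theta$, $m$ is the mass of the superfluid particle (for example a helium-4 atom), and $n$ is an integer winding number.

```latex
\psi(\mathbf{r}) = \sqrt{\rho(\mathbf{r})}\; e^{\,i\theta(\mathbf{r})},
\qquad
\mathbf{v}_s = \frac{\hbar}{m}\,\nabla\theta

\oint \mathbf{v}_s \cdot d\boldsymbol{\ell}
  = \frac{\hbar}{m} \oint \nabla\theta \cdot d\boldsymbol{\ell}
  = \frac{\hbar}{m}\, 2\pi n
  = n\,\frac{h}{m},
\qquad n \in \mathbb{Z}
```

Because $\psi$ must be single-valued, the phase can change only by an integer multiple of $2\pi$ around any closed loop, so the circulation comes in units of $h/m$.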

In particle physics and string theory (M-theory), the ADD model, also known as the model with large extra dimensions (LED), is a model framework that attempts to solve the hierarchy problem. The model tries to explain this problem by postulating that our universe, with its four dimensions, exists on a membrane in a higher-dimensional space. It is then suggested that the other forces of nature operate within this membrane and its four dimensions, while the hypothetical gravity-bearing particle, the graviton, can propagate across the extra dimensions. This would explain why gravity is very weak compared to the other fundamental forces. The fundamental scale of gravity in ADD is of the order of the TeV scale, which makes the model experimentally testable at current colliders, unlike many exotic extra-dimensional hypotheses whose relevant scale is around the Planck scale.
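
The dilution of gravity can be made quantitative with the standard order-of-magnitude relation below, given here as a sketch in natural units ($\hbar = c = 1$) with numerical factors dropped. The symbols are assumptions of this sketch: $M_{\mathrm{Pl}}$ is the observed four-dimensional Planck mass, $M_*$ the fundamental gravity scale of the higher-dimensional theory, $n$ the number of extra dimensions, and $R$ their common size.

```latex
M_{\mathrm{Pl}}^{2} \;\sim\; M_*^{\,n+2}\, R^{\,n}
```

A fundamental scale $M_*$ near a TeV is compatible with the very large observed $M_{\mathrm{Pl}}$ provided the volume $R^{n}$ of the extra dimensions is large enough, which is why the extra dimensions in this model are called "large".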

f(R) is a type of modified gravity theory which generalizes Einstein's general relativity. f(R) gravity is actually a family of theories, each one defined by a different function, f, of the Ricci scalar, R. The simplest case is just the function being equal to the scalar; this is general relativity. As a consequence of introducing an arbitrary function, there may be freedom to explain the accelerated expansion and structure formation of the Universe without adding unknown forms of dark energy or dark matter. Some functional forms may be inspired by corrections arising from a quantum theory of gravity. f(R) gravity was first proposed in 1970 by Hans Adolph Buchdahl. It has become an active field of research following work by Starobinsky on cosmic inflation. A wide range of phenomena can be produced from this theory by adopting different functions; however, many functional forms can now be ruled out on observational grounds, or because of pathological theoretical problems.
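
In formulas, the family of theories is usually defined by the action sketched below. The notation is an assumption of this sketch: $\kappa = 8\pi G / c^4$, $g$ is the determinant of the metric, and $S_m$ is the matter action. The second line shows the quadratic form commonly associated with Starobinsky's inflation model, where $M$ is a mass scale.

```latex
S = \frac{1}{2\kappa} \int d^4x \, \sqrt{-g}\; f(R) + S_m ,
\qquad
f(R) = R \;\;\Rightarrow\;\; \text{Einstein--Hilbert action}

f(R) = R + \frac{R^{2}}{6 M^{2}}
```

Each choice of $f$ gives a different set of field equations, which is why the text above speaks of a family of theories rather than a single one.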

In general relativity, the Hamilton–Jacobi–Einstein equation (HJEE) or Einstein–Hamilton–Jacobi equation (EHJE) is an equation in the Hamiltonian formulation of geometrodynamics in superspace, cast in the "geometrodynamics era" around the 1960s, by Asher Peres in 1962 and others. It is an attempt to reformulate general relativity in such a way that it resembles quantum theory within a semiclassical approximation, much like the correspondence between quantum mechanics and classical mechanics.

The asymptotic safety approach to quantum gravity provides a nonperturbative notion of renormalization in order to find a consistent and predictive quantum field theory of the gravitational interaction and spacetime geometry. It is based upon a nontrivial fixed point of the corresponding renormalization group (RG) flow such that the running coupling constants approach this fixed point in the ultraviolet (UV) limit. This suffices to avoid divergences in physical observables. Moreover, it has predictive power: generically, an arbitrary starting configuration of coupling constants given at some RG scale does not run into the fixed point for increasing scale, but a subset of configurations might have the desired UV properties. For this reason it is possible that, assuming a particular set of couplings has been measured in an experiment, the requirement of asymptotic safety fixes all remaining couplings in such a way that the UV fixed point is approached.
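
The fixed-point condition and the origin of the predictive power can be sketched as follows. The notation is an assumption of this sketch: $g_i(k)$ are the dimensionless running couplings at RG scale $k$, $\beta_i$ their beta functions, $g^*$ the fixed point, and $B_{ij}$ the stability matrix of the flow linearized around it.

```latex
k\,\partial_k\, g_i(k) = \beta_i(g_1, g_2, \dots),
\qquad
\beta_i(g^*) = 0 \quad \text{with } g^* \neq 0

k\,\partial_k \left( g_i - g_i^* \right)
  \approx \sum_j B_{ij} \left( g_j - g_j^* \right),
\qquad
B_{ij} = \left. \frac{\partial \beta_i}{\partial g_j} \right|_{g = g^*}
```

Writing the eigenvalues of $B$ as $-\theta_I$, the directions with $\mathrm{Re}\,\theta_I > 0$ are attracted to the fixed point as $k \to \infty$. If only finitely many such directions exist, only that many couplings need to be measured, and the remaining ones are fixed by requiring that the trajectory reach the fixed point.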