In physics, quintessence is a hypothetical form of dark energy, more precisely a scalar field, postulated to explain the observed accelerating expansion of the universe. The first example of this scenario was proposed by Ratra and Peebles (1988) and Wetterich (1988). The concept was later expanded to more general types of time-varying dark energy, and the term "quintessence" was first introduced in a 1998 paper by Robert R. Caldwell, Rahul Dave and Paul Steinhardt. Some physicists have proposed it as a fifth fundamental force. Quintessence differs from the cosmological constant explanation of dark energy in that it is dynamic: it changes over time, whereas the cosmological constant, by definition, does not. Quintessence can be either attractive or repulsive depending on the ratio of its kinetic and potential energy. Those working with this postulate believe that quintessence became repulsive about ten billion years ago, about 3.5 billion years after the Big Bang.
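
The dependence on the ratio of kinetic to potential energy can be illustrated with the textbook relations for a canonical, spatially homogeneous scalar field, which the summary above does not spell out: pressure $p = K - V$ and energy density $\rho = K + V$, so the equation-of-state parameter is $w = p/\rho$. The sketch below assumes the field dominates the energy budget, in which case expansion accelerates when $w < -1/3$. The function names are illustrative only.

```python
def equation_of_state(kinetic: float, potential: float) -> float:
    """Return w = p / rho for a canonical scalar field.

    Assumes p = K - V and rho = K + V (standard textbook form),
    with K the kinetic and V the potential energy density.
    """
    density = kinetic + potential
    if density <= 0:
        raise ValueError("energy density must be positive")
    return (kinetic - potential) / density


def is_repulsive(kinetic: float, potential: float) -> bool:
    """True when the field alone would drive accelerated expansion (w < -1/3)."""
    return equation_of_state(kinetic, potential) < -1.0 / 3.0


if __name__ == "__main__":
    # Potential-dominated field: behaves almost like a cosmological constant.
    print(equation_of_state(0.0, 1.0), is_repulsive(0.0, 1.0))
    # Kinetic-dominated field: stiff and gravitationally attractive.
    print(equation_of_state(1.0, 0.0), is_repulsive(1.0, 0.0))
```

Under these assumptions the field becomes repulsive once the potential energy exceeds twice the kinetic energy.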

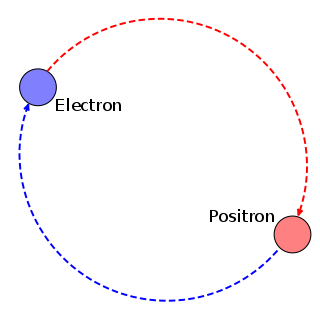

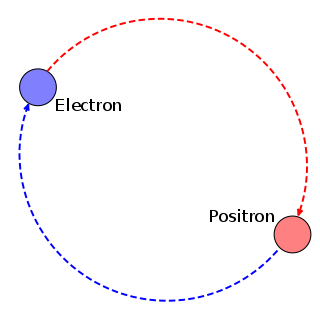

Positronium (Ps) is a system consisting of an electron and its antiparticle, a positron, bound together into an exotic atom, specifically an onium. Unlike hydrogen, the system has no protons. The system is unstable: the two particles annihilate each other, predominantly producing two or three gamma rays depending on the relative spin states. The energy levels of the system are similar to those of the hydrogen atom. However, because the reduced mass is about half that of hydrogen, the frequencies of the spectral lines are roughly half of those for the corresponding hydrogen lines.
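
The effect of the reduced mass can be checked with a short calculation. This is a minimal sketch using the Bohr-model scaling, in which level energies are proportional to the reduced mass. It ignores fine structure, hyperfine splitting and annihilation corrections, and the constants are approximate values supplied here as assumptions.

```python
# Approximate constants (assumed values, not taken from the text above).
PROTON_MASS = 1836.15   # proton mass in units of the electron mass
RYDBERG_EV = 13.6057    # Rydberg energy for an infinitely heavy nucleus, in eV


def reduced_mass(m1: float, m2: float) -> float:
    """Reduced mass of a two-body system (same units as the inputs)."""
    return m1 * m2 / (m1 + m2)


def ground_state_binding_ev(mu: float) -> float:
    """Bohr-model ground-state binding energy; mu is in electron masses."""
    return RYDBERG_EV * mu


mu_hydrogen = reduced_mass(1.0, PROTON_MASS)   # electron + proton
mu_positronium = reduced_mass(1.0, 1.0)        # electron + positron

# In this approximation, line frequencies scale with the reduced mass.
frequency_ratio = mu_positronium / mu_hydrogen

if __name__ == "__main__":
    print(f"Ps/H frequency ratio: {frequency_ratio:.5f}")
    print(f"Ps binding energy:    {ground_state_binding_ev(mu_positronium):.3f} eV")
    print(f"H binding energy:     {ground_state_binding_ev(mu_hydrogen):.3f} eV")
```

In this approximation the positronium ground state is bound by about 6.8 eV, compared with about 13.6 eV for hydrogen.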

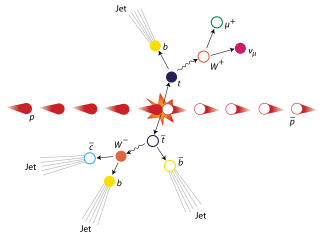

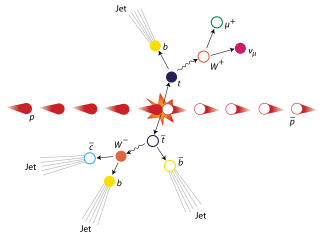

The top quark, sometimes also referred to as the truth quark, is the most massive of all observed elementary particles. It derives its mass from its coupling to the Higgs boson. This coupling is very close to unity; in the Standard Model of particle physics, it is the largest (strongest) coupling at the scale of the weak interactions and above. The top quark was discovered in 1995 by the CDF and DØ experiments at Fermilab.
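
The statement that the coupling is close to unity can be checked with the tree-level Standard Model relation $y_t = \sqrt{2}\, m_t / v$, where $v$ is the Higgs vacuum expectation value. The sketch below uses approximate input values ($m_t \approx 172.7$ GeV, $v \approx 246.22$ GeV) that are assumptions supplied here, not figures from the text, and it ignores radiative corrections and scheme dependence.

```python
import math

# Approximate inputs (assumed values for illustration).
TOP_MASS_GEV = 172.7     # top-quark mass
HIGGS_VEV_GEV = 246.22   # Higgs vacuum expectation value


def yukawa_coupling(fermion_mass_gev: float, vev_gev: float = HIGGS_VEV_GEV) -> float:
    """Tree-level Yukawa coupling y = sqrt(2) * m / v."""
    return math.sqrt(2.0) * fermion_mass_gev / vev_gev


if __name__ == "__main__":
    print(f"top Yukawa coupling ~ {yukawa_coupling(TOP_MASS_GEV):.3f}")
```

The same relation gives a far smaller coupling for any lighter fermion, which is why the top quark stands out.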

Technicolor theories are models of physics beyond the Standard Model that address electroweak gauge symmetry breaking, the mechanism through which W and Z bosons acquire masses. Early technicolor theories were modelled on quantum chromodynamics (QCD), the "color" theory of the strong nuclear force, which inspired their name.

In physics, mirror matter, also called shadow matter or Alice matter, is a hypothetical counterpart to ordinary matter.

In particle physics, the hypothetical dilaton particle is a particle of a scalar field that appears in theories with extra dimensions when the volume of the compactified dimensions varies. It appears as a radion in Kaluza–Klein theory's compactifications of extra dimensions. In Brans–Dicke theory of gravity, Newton's constant is not presumed to be constant but instead 1/G is replaced by a scalar field and the associated particle is the dilaton.

In theoretical physics, the hierarchy problem is the problem concerning the large discrepancy between aspects of the weak force and gravity. There is no scientific consensus on why, for example, the weak force is $10^{24}$ times stronger than gravity.

In quantum electrodynamics, the anomalous magnetic moment of a particle is a contribution of effects of quantum mechanics, expressed by Feynman diagrams with loops, to the magnetic moment of that particle. The magnetic moment, also called magnetic dipole moment, is a measure of the strength of a magnetic source.

In mathematical physics, a caloron is the finite temperature generalization of an instanton.

Objective-collapse theories, also known as models of spontaneous wave function collapse or dynamical reduction models, are proposed solutions to the measurement problem in quantum mechanics. As with other theories called interpretations of quantum mechanics, they are possible explanations of why and how quantum measurements always give definite outcomes, not a superposition of them as predicted by the Schrödinger equation, and more generally how the classical world emerges from quantum theory. The fundamental idea is that the unitary evolution of the wave function describing the state of a quantum system is approximate. It works well for microscopic systems, but progressively loses its validity as the mass or complexity of the system increases.

Christopher T. Hill is an American theoretical physicist at the Fermi National Accelerator Laboratory who did undergraduate work in physics at M.I.T., and graduate work at Caltech. Hill's Ph.D. thesis, "Higgs Scalars and the Nonleptonic Weak Interactions" (1977) contains one of the first detailed discussions of the two-Higgs-doublet model and its impact upon weak interactions.

In particle physics and string theory (M-theory), the ADD model, also known as the model with large extra dimensions (LED), is a model framework that attempts to solve the hierarchy problem. The model tries to explain this problem by postulating that our universe, with its four dimensions, exists on a membrane in a higher-dimensional space. It is then suggested that the other forces of nature operate within this membrane and its four dimensions, while the hypothetical gravity-bearing particle, the graviton, can propagate across the extra dimensions. This would explain why gravity is very weak compared to the other fundamental forces. The fundamental scale of gravity in ADD is around the TeV scale, which makes the model experimentally testable at current colliders, unlike many exotic extra-dimensional hypotheses whose relevant scale is near the Planck scale.
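
The link between a low fundamental scale and large extra dimensions can be illustrated with the order-of-magnitude relation commonly quoted for ADD-type models, $M_{\rm Pl}^2 \sim M_*^{\,n+2} R^n$, where $M_*$ is the fundamental scale, $n$ the number of extra dimensions and $R$ their common size. The sketch below is a rough estimate only: conventions differ by factors of $2\pi$ and in whether the reduced Planck mass is used, and the numerical constants are approximate values supplied here as assumptions.

```python
# Approximate constants (assumed values for illustration).
PLANCK_MASS_GEV = 1.22e19       # Planck mass in GeV
HBAR_C_GEV_M = 1.97327e-16      # hbar * c in GeV * metre


def extra_dimension_size_m(n_extra: int, fundamental_scale_gev: float = 1.0e3) -> float:
    """Estimate R in metres from M_Pl^2 ~ M_*^(n+2) * R^n (order of magnitude only)."""
    if n_extra < 1:
        raise ValueError("need at least one extra dimension")
    ratio = PLANCK_MASS_GEV / fundamental_scale_gev
    # R in natural units (GeV^-1): R = (M_Pl / M_*)^(2/n) / M_*
    size_inverse_gev = ratio ** (2.0 / n_extra) / fundamental_scale_gev
    return size_inverse_gev * HBAR_C_GEV_M


if __name__ == "__main__":
    for n in (1, 2, 3, 6):
        print(f"n = {n}: R ~ {extra_dimension_size_m(n):.2e} m")
```

With a fundamental scale of 1 TeV, this estimate gives an astronomically large size for a single extra dimension and a millimetre-scale size for two.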

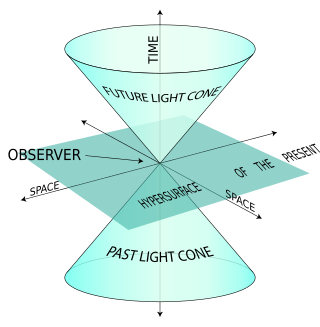

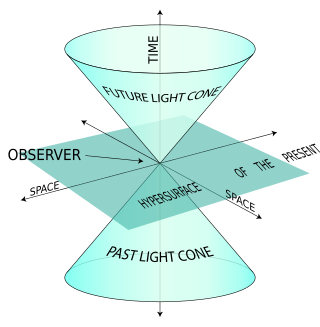

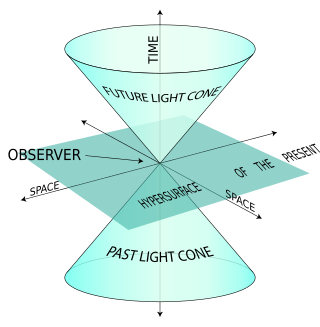

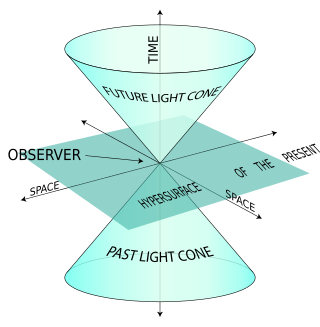

The light-front quantization of quantum field theories provides a useful alternative to ordinary equal-time quantization. In particular, it can lead to a relativistic description of bound systems in terms of quantum-mechanical wave functions. The quantization is based on the choice of light-front coordinates, where $x^+ \equiv ct + z$ plays the role of time and the corresponding spatial coordinate is $x^- \equiv ct - z$. Here, $t$ is the ordinary time, $z$ is one Cartesian coordinate, and $c$ is the speed of light. The other two Cartesian coordinates, $x$ and $y$, are untouched and often called transverse or perpendicular, denoted by symbols of the type $\vec{x}_\perp = (x, y)$. The choice of the frame of reference where the time $t$ and $z$-axis are defined can be left unspecified in an exactly soluble relativistic theory, but in practical calculations some choices may be more suitable than others.
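
The change of coordinates can be sketched in a few lines of Python. The sketch assumes the convention $x^\pm = ct \pm z$ with transverse components $(x, y)$ left unchanged; some authors include an extra factor of $1/\sqrt{2}$, which would alter the formulas below. The class and function names are illustrative only.

```python
from typing import NamedTuple, Tuple


class LightFrontPoint(NamedTuple):
    x_plus: float                 # light-front "time", ct + z
    x_minus: float                # light-front longitudinal coordinate, ct - z
    x_perp: Tuple[float, float]   # transverse coordinates (x, y)


def to_light_front(ct: float, x: float, y: float, z: float) -> LightFrontPoint:
    """Convert Cartesian (ct, x, y, z) to light-front coordinates."""
    return LightFrontPoint(ct + z, ct - z, (x, y))


def from_light_front(p: LightFrontPoint) -> Tuple[float, float, float, float]:
    """Invert the transformation, returning (ct, x, y, z)."""
    ct = 0.5 * (p.x_plus + p.x_minus)
    z = 0.5 * (p.x_plus - p.x_minus)
    return ct, p.x_perp[0], p.x_perp[1], z


def interval_squared(p: LightFrontPoint) -> float:
    """Invariant interval: x+ x- - |x_perp|^2 = (ct)^2 - x^2 - y^2 - z^2."""
    x, y = p.x_perp
    return p.x_plus * p.x_minus - (x * x + y * y)


if __name__ == "__main__":
    point = to_light_front(ct=5.0, x=1.0, y=2.0, z=3.0)
    print(point, interval_squared(point))
```

In this convention the invariant interval takes the product form $x^+ x^- - \vec{x}_\perp^{\,2}$, which the last function evaluates.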

Thomas Carlos Mehen is an American physicist. His research has primarily concerned quantum chromodynamics (QCD) and the application of effective field theory to problems in hadronic physics. He has also worked on effective field theory for non-relativistic particles whose short-range interactions are characterized by a large scattering length, as well as novel field theories that arise from unusual limits of string theory.

In particle physics, W′ and Z′ bosons refer to hypothetical gauge bosons that arise from extensions of the electroweak symmetry of the Standard Model. They are named in analogy with the Standard Model W and Z bosons.

In strong interaction physics, light front holography or light front holographic QCD is an approximate version of the theory of quantum chromodynamics (QCD) which results from mapping the gauge theory of QCD to a higher-dimensional anti-de Sitter space (AdS) inspired by the AdS/CFT correspondence proposed for string theory. This procedure makes it possible to find analytic solutions in situations where strong coupling occurs, improving predictions of the masses of hadrons and their internal structure revealed by high-energy accelerator experiments. The most widely used approach to finding approximate solutions to the QCD equations, lattice QCD, has had many successful applications; however, it is a numerical approach formulated in Euclidean space rather than physical Minkowski space-time.

The light-front quantization of quantum field theories provides a useful alternative to ordinary equal-time quantization. In particular, it can lead to a relativistic description of bound systems in terms of quantum-mechanical wave functions. The quantization is based on the choice of light-front coordinates, where $x^+ \equiv ct + z$ plays the role of time and the corresponding spatial coordinate is $x^- \equiv ct - z$. Here, $t$ is the ordinary time, $z$ is a Cartesian coordinate, and $c$ is the speed of light. The other two Cartesian coordinates, $x$ and $y$, are untouched and often called transverse or perpendicular, denoted by symbols of the type $\vec{x}_\perp = (x, y)$. The choice of the frame of reference where the time $t$ and $z$-axis are defined can be left unspecified in an exactly soluble relativistic theory, but in practical calculations some choices may be more suitable than others. The basic formalism is discussed elsewhere.

The Kundu equation is a general form of integrable system that is gauge-equivalent to the mixed nonlinear Schrödinger equation. It was proposed by Anjan Kundu.

Starobinsky inflation is a modification of general relativity used to explain cosmological inflation.