Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, textures, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

In mechanics and geometry, the 3D rotation group, often denoted SO(3), is the group of all rotations about the origin of three-dimensional Euclidean space under the operation of composition.
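The group structure can be checked numerically. The following is a minimal sketch, assuming rotations are represented as 3×3 matrices stored as nested lists; the helper names (`rot_x`, `rot_z`, `is_rotation`, and so on) are illustrative and not taken from any library. It tests the two defining properties of an element of SO(3), orthogonality and determinant +1, and shows that composition stays inside the group.

```python
import math

def rot_x(theta):
    """Rotation by theta radians about the x-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_z(theta):
    """Rotation by theta radians about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    """Compose two rotations: apply b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def det(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def is_rotation(m, tol=1e-9):
    """True if m is orthogonal (m^T m = I) with determinant +1."""
    mtm = matmul(transpose(m), m)
    orthogonal = all(abs(mtm[i][j] - (1.0 if i == j else 0.0)) < tol
                     for i in range(3) for j in range(3))
    return orthogonal and abs(det(m) - 1.0) < tol

# Closure: the composition of two rotations is again a rotation.
composed = matmul(rot_z(0.3), rot_x(1.1))
print(is_rotation(composed))
```

The transpose of a rotation matrix acts as its inverse, and the order of composition matters: `matmul(rot_z(a), rot_x(b))` generally differs from `matmul(rot_x(b), rot_z(a))`, which reflects the fact that SO(3) is not commutative.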

A Bézier triangle is a special type of Bézier surface that is created by interpolation of control points.
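One common way to evaluate such a surface is the de Casteljau algorithm, which repeatedly blends neighboring control points with barycentric weights. The sketch below assumes a degree-`n` patch whose control points are stored in a dictionary keyed by index triples `(i, j, k)` with `i + j + k == n`; the function name and the data layout are illustrative choices, not a standard API.

```python
def bezier_triangle_point(control, n, u, v, w):
    """Evaluate a degree-n Bezier triangle at barycentric coordinates (u, v, w).

    control maps (i, j, k) with i + j + k == n to a coordinate tuple.
    The caller is assumed to pass u + v + w == 1.
    """
    pts = dict(control)
    # Each pass reduces the degree of the control net by one.
    for r in range(n, 0, -1):
        nxt = {}
        for i in range(r):
            for j in range(r - i):
                k = r - 1 - i - j
                a = pts[(i + 1, j, k)]
                b = pts[(i, j + 1, k)]
                c = pts[(i, j, k + 1)]
                nxt[(i, j, k)] = tuple(u * x + v * y + w * z
                                       for x, y, z in zip(a, b, c))
        pts = nxt
    return pts[(0, 0, 0)]

# A quadratic patch: three corner points in the z = 0 plane,
# with the three edge control points lifted to z = 1.
control = {
    (2, 0, 0): (0.0, 0.0, 0.0),
    (0, 2, 0): (2.0, 0.0, 0.0),
    (0, 0, 2): (0.0, 2.0, 0.0),
    (1, 1, 0): (1.0, 0.0, 1.0),
    (1, 0, 1): (0.0, 1.0, 1.0),
    (0, 1, 1): (1.0, 1.0, 1.0),
}
print(bezier_triangle_point(control, 2, 1.0, 0.0, 0.0))  # a corner of the patch
```

At a corner such as `(u, v, w) = (1, 0, 0)` the surface passes through the corresponding corner control point, while the interior control points only pull the surface toward them.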

A directional derivative is a concept in multivariable calculus that measures the rate at which a function changes in a particular direction at a given point.
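For a differentiable function, the directional derivative along a unit vector equals the dot product of the gradient with that vector. The sketch below approximates it with a central finite difference; the function name, the step size `h`, and the choice to normalize the direction are assumptions made for illustration (some authors do not normalize).

```python
import math

def directional_derivative(f, point, direction, h=1e-6):
    """Central-difference estimate of the derivative of f at point
    along direction, which is normalized to unit length first."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    forward = [p + h * u for p, u in zip(point, unit)]
    backward = [p - h * u for p, u in zip(point, unit)]
    return (f(forward) - f(backward)) / (2.0 * h)

# f(x, y) = x^2 + 3y has gradient (2x, 3), which is (2, 3) at (1, 2).
# Along the unit vector (0.6, 0.8) the exact value is 2*0.6 + 3*0.8 = 3.6.
f = lambda p: p[0] ** 2 + 3.0 * p[1]
print(directional_derivative(f, [1.0, 2.0], [3.0, 4.0]))
```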

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.
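One widely used technique in this family is ray casting with front-to-back alpha compositing. The sketch below assumes that the scalar samples along a single viewing ray have already been mapped to grayscale color and opacity pairs by some transfer function; the function name and the early-termination threshold are illustrative choices.

```python
def composite_ray(samples, opacity_cutoff=0.999):
    """Front-to-back compositing of (color, opacity) samples along one ray.

    samples are ordered from nearest to farthest from the viewer.
    Returns the accumulated (color, opacity) for the pixel.
    """
    color, alpha = 0.0, 0.0
    for sample_color, sample_alpha in samples:
        # Each sample is attenuated by the opacity accumulated in front of it.
        color += (1.0 - alpha) * sample_alpha * sample_color
        alpha += (1.0 - alpha) * sample_alpha
        if alpha >= opacity_cutoff:
            break  # the ray is effectively opaque; later samples are hidden
    return color, alpha

# A half-transparent white sample in front of a half-transparent black one.
print(composite_ray([(1.0, 0.5), (0.0, 0.5)]))
```

Repeating this for one ray per pixel produces the 2D projection of the volume.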

In nuclear physics, the chiral model, introduced by Feza Gürsey in 1960, is a phenomenological model describing effective interactions of mesons in the chiral limit (where the masses of the quarks go to zero), but without necessarily mentioning quarks at all. It is a nonlinear sigma model with the principal homogeneous space of a Lie group as its target manifold. When the model was originally introduced, this Lie group was SU(N), where N is the number of quark flavors. The Riemannian metric of the target manifold is given by a positive constant multiplied by the Killing form acting upon the Maurer–Cartan form of SU(N).

The computer graphics pipeline, also known as the rendering pipeline or graphics pipeline, is a framework within computer graphics that outlines the procedures needed to transform a three-dimensional (3D) scene into a two-dimensional (2D) representation on a screen. Once a 3D model has been created, the graphics pipeline converts it into a form that can be shown on the computer display. Because the pipeline depends on the specific software, hardware configuration, and desired display attributes, no universally applicable graphics pipeline exists. Nevertheless, graphics application programming interfaces (APIs) such as Direct3D, OpenGL, and Vulkan were developed to standardize common procedures and oversee the graphics pipeline of a given hardware accelerator. These APIs provide an abstraction layer over the underlying hardware, relieving programmers of the need to write code that explicitly targets graphics hardware accelerators from different vendors such as AMD, Intel, and Nvidia.
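The geometric core of such a pipeline can be sketched in a few lines. The example below is a simplified illustration rather than any API's actual pipeline: it assumes a camera fixed at the origin looking down the negative z-axis (an OpenGL-like convention), skips clipping, depth, and rasterization, and uses a hypothetical function name.

```python
import math

def project_vertex(vertex, fov_y_deg, aspect, width, height):
    """Map a camera-space 3D point to 2D pixel coordinates.

    Returns None for points at or behind the camera plane.
    Pixel origin is the top-left corner of the viewport.
    """
    x, y, z = vertex
    if z >= 0.0:
        return None  # not in front of the camera
    # Focal scale derived from the vertical field of view.
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    # Perspective projection and divide: normalized device coordinates in [-1, 1].
    ndc_x = (f / aspect) * x / -z
    ndc_y = f * y / -z
    # Viewport transform: NDC to pixels, flipping y so it grows downward.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py

# A point straight ahead of the camera lands at the center of an 800x600 viewport.
print(project_vertex((0.0, 0.0, -5.0), 90.0, 1.0, 800, 600))
```

In a real API these steps are expressed as matrix multiplications in a vertex shader followed by fixed-function stages, but the sequence of transformations is the same in spirit.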

In differential geometry, the four-gradient is the four-vector analogue of the gradient from vector calculus.
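Written out in components, and assuming coordinates $x^\mu = (ct, \mathbf{x})$ with metric signature $(+,-,-,-)$ (the opposite signature flips the signs of the contravariant form), the covariant and contravariant four-gradients are:

```latex
\partial_\mu = \frac{\partial}{\partial x^\mu}
             = \left( \frac{1}{c}\frac{\partial}{\partial t},\ \nabla \right),
\qquad
\partial^\mu = \eta^{\mu\nu}\,\partial_\nu
             = \left( \frac{1}{c}\frac{\partial}{\partial t},\ -\nabla \right)
```

Contracting the two gives the d'Alembert operator, $\partial^\mu \partial_\mu = \frac{1}{c^2}\frac{\partial^2}{\partial t^2} - \nabla^2$.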

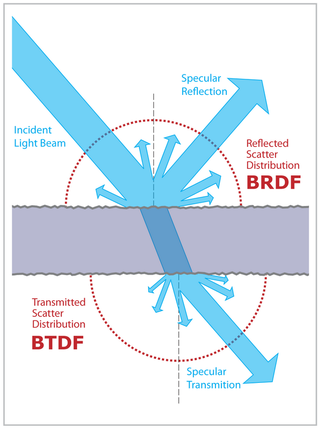

A specular highlight is the bright spot of light that appears on shiny objects when illuminated. Specular highlights are important in 3D computer graphics, as they provide a strong visual cue for the shape of an object and its location with respect to light sources in the scene.
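A classic way to compute such a highlight is the specular term of the Phong reflection model, which compares the mirror reflection of the light direction with the direction to the viewer. The sketch below is one such model among several (Blinn-Phong and microfacet models are common alternatives); the function names and the `shininess` parameter are illustrative.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong_specular(normal, to_light, to_viewer, shininess):
    """Phong specular intensity in [0, 1].

    All three vectors point away from the surface point.
    Higher shininess gives a smaller, sharper highlight.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0  # the light is behind the surface
    # Mirror the light direction about the surface normal.
    r = [2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l)]
    return max(dot(r, v), 0.0) ** shininess

# Viewer exactly in the mirror direction: the highlight is at full strength.
print(phong_specular((0, 0, 1), (1, 0, 1), (-1, 0, 1), 32))
```

Because the term depends on both the light and viewer directions, the highlight moves across the surface as either one moves, which is what makes it such a strong cue for shape and light position.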

Geometry processing is an area of research that uses concepts from applied mathematics, computer science and engineering to design efficient algorithms for the acquisition, reconstruction, analysis, manipulation, simulation and transmission of complex 3D models. As the name implies, many of the concepts, data structures, and algorithms are directly analogous to signal processing and image processing. For example, where image smoothing might convolve an intensity signal with a blur kernel formed using the Laplace operator, geometric smoothing might be achieved by convolving a surface geometry with a blur kernel formed using the Laplace–Beltrami operator.
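The smoothing analogy can be illustrated with a simple discrete scheme. The sketch below uses the uniform ("umbrella") Laplacian, which moves each vertex toward the average of its neighbors; this is a crude stand-in for a proper discretization of the surface Laplacian (such as cotangent weights), and the function name, `step` parameter, and adjacency-list layout are assumptions made for illustration.

```python
def laplacian_smooth(vertices, neighbors, step=0.5, iterations=1):
    """Smooth vertex positions with the uniform graph Laplacian.

    vertices: list of coordinate tuples.
    neighbors: neighbors[i] is the list of vertex indices adjacent to vertex i.
    step: fraction of the way each vertex moves toward its neighbor average.
    """
    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated = []
        for i, v in enumerate(verts):
            nbrs = neighbors[i]
            if not nbrs:
                updated.append(v)  # isolated vertex: leave it in place
                continue
            centroid = [sum(verts[j][d] for j in nbrs) / len(nbrs)
                        for d in range(len(v))]
            updated.append(tuple(x + step * (c - x)
                                 for x, c in zip(v, centroid)))
        verts = updated
    return verts

# A unit square treated as a closed loop: each vertex is adjacent to two others.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
ring = [[3, 1], [0, 2], [1, 3], [2, 0]]
print(laplacian_smooth(square, ring, step=0.5))
```

Repeated iterations remove high-frequency detail but also shrink the shape, which is one reason practical methods use more careful operators and weights.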

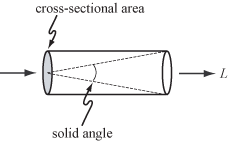

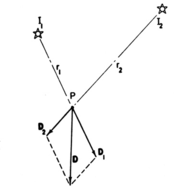

A light field camera, also known as a plenoptic camera, is a camera that captures information about the light field emanating from a scene; that is, the intensity of light in a scene, and also the precise direction that the light rays are traveling in space. This contrasts with conventional cameras, which record only light intensity at various wavelengths.

Autostereoscopy is any method of displaying stereoscopic images without requiring the viewer to wear special headgear, glasses, or any other device that affects vision. Because headgear is not required, it is also called "glasses-free 3D" or "glassesless 3D". Two broad approaches are currently used to accommodate motion parallax and wider viewing angles: eye tracking, and multiple views, so that the display does not need to sense where the viewer's eyes are located. Examples of autostereoscopic display technology include lenticular lenses and parallax barriers, and may include integral imaging, but notably do not include volumetric or holographic displays.

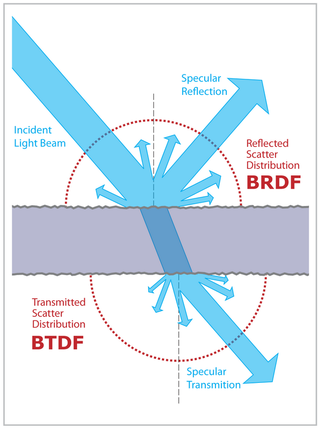

The definition of the BSDF (bidirectional scattering distribution function) is not well standardized. The term was probably introduced in 1980 by Bartell, Dereniak, and Wolfe. Most often it names the general mathematical function that describes how light is scattered by a surface. In practice, however, this phenomenon is usually split into reflected and transmitted components, which are then treated separately as the BRDF (bidirectional reflectance distribution function) and the BTDF (bidirectional transmittance distribution function).

Marc Levoy is a computer graphics researcher and Professor Emeritus of Computer Science and Electrical Engineering at Stanford University, a vice president and Fellow at Adobe Inc., and formerly a Distinguished Engineer at Google. He is noted for pioneering work in volume rendering, light fields, and computational photography.

Camera resectioning is the process of estimating the parameters of a pinhole camera model approximating the camera that produced a given photograph or video; it determines which incoming light ray is associated with each pixel on the resulting image. In essence, the process determines the pose of the pinhole camera.
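In the standard pinhole formulation, the parameters being estimated appear in the projection equation below. The symbol names are the conventional ones and are not taken from the text above: $K$ holds the intrinsic parameters (focal lengths $f_x, f_y$, skew $\gamma$, principal point $(c_x, c_y)$), $R$ and $\mathbf{t}$ give the camera's rotation and translation, and $s$ is an arbitrary projective scale.

```latex
s \begin{pmatrix} u \\ v \\ 1 \end{pmatrix}
  = K \, [\, R \mid \mathbf{t} \,]
    \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix},
\qquad
K = \begin{pmatrix} f_x & \gamma & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{pmatrix}
```

Given enough correspondences between known 3D points $(X, Y, Z)$ and their observed pixel positions $(u, v)$, resectioning solves for the 3×4 matrix $K[R \mid \mathbf{t}]$ or for its individual factors.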

Photon polarization is the quantum mechanical description of the classical polarized sinusoidal plane electromagnetic wave. An individual photon can be described as having right or left circular polarization, or a superposition of the two. Equivalently, a photon can be described as having horizontal or vertical linear polarization, or a superposition of the two.
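The equivalence of the two descriptions is a change of basis. In the sketch below, $|H\rangle$ and $|V\rangle$ denote the horizontal and vertical linear polarization states and $|\pm\rangle$ the two circular states; which sign is labeled right-handed and which left-handed depends on the convention used, so the labels are deliberately left generic.

```latex
|\psi\rangle = \alpha\,|H\rangle + \beta\,|V\rangle,
\qquad |\alpha|^2 + |\beta|^2 = 1

|\pm\rangle = \frac{1}{\sqrt{2}} \left( |H\rangle \pm i\,|V\rangle \right)

|H\rangle = \frac{1}{\sqrt{2}} \left( |+\rangle + |-\rangle \right),
\qquad
|V\rangle = \frac{1}{i\sqrt{2}} \left( |+\rangle - |-\rangle \right)
```

Any state written as a superposition in one basis can therefore be rewritten as a superposition in the other.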

In computer vision and computer graphics, 3D reconstruction is the process of capturing the shape and appearance of real objects. This process can be accomplished by either active or passive methods. If the model is allowed to change its shape over time, this is referred to as non-rigid or spatio-temporal reconstruction.

The derivatives of scalars, vectors, and second-order tensors with respect to second-order tensors are of considerable use in continuum mechanics. These derivatives are used in the theories of nonlinear elasticity and plasticity, particularly in the design of algorithms for numerical simulations.

In continuum mechanics, a compatible deformation tensor field in a body is the unique tensor field obtained when the body is subjected to a continuous, single-valued displacement field. Compatibility is the study of the conditions under which such a displacement field can be guaranteed. Compatibility conditions are particular cases of integrability conditions; they were first derived for linear elasticity by Barré de Saint-Venant in 1864 and proved rigorously by Beltrami in 1886.
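For the linear-elastic case, the conditions take the following well-known form. Here $u_i$ is the displacement field, $\varepsilon_{ij}$ the infinitesimal strain tensor, and a comma denotes partial differentiation with respect to the indexed coordinate; this notation is a common convention rather than something fixed by the text above.

```latex
\varepsilon_{ij} = \tfrac{1}{2} \left( u_{i,j} + u_{j,i} \right)

\varepsilon_{ij,kl} + \varepsilon_{kl,ij}
  - \varepsilon_{ik,jl} - \varepsilon_{jl,ik} = 0

% Two-dimensional special case:
\frac{\partial^2 \varepsilon_{11}}{\partial x_2^2}
  + \frac{\partial^2 \varepsilon_{22}}{\partial x_1^2}
  - 2\,\frac{\partial^2 \varepsilon_{12}}{\partial x_1 \,\partial x_2} = 0
```

A strain field that satisfies these equations on a simply connected body can be integrated to a continuous, single-valued displacement field.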

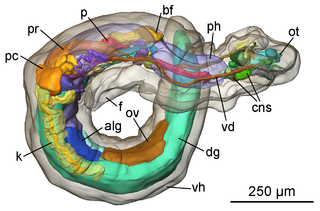

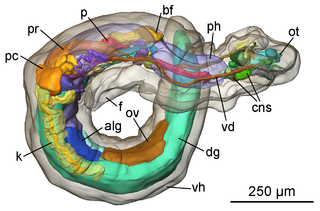

Light field microscopy (LFM) is a scanning-free three-dimensional (3D) microscopic imaging method based on the theory of the light field. The technique allows sub-second (~10 Hz) imaging of large volumes with ~1 μm spatial resolution under conditions of weak scattering and semi-transparency, a combination that has not been achieved by other methods. As in traditional light field rendering, LFM imaging has two steps: light field capture and processing. In most setups, a microlens array is used to capture the light field. Processing can be based on either of two representations of light propagation: the ray optics picture or the wave optics picture. The Stanford University Computer Graphics Laboratory published its first prototype LFM in 2006 and has continued to develop the technique since then.