Complexity characterises the behaviour of a system or model whose components interact in multiple ways and follow local rules, leading to non-linearity, randomness, collective dynamics, hierarchy, and emergence.

The carrying capacity of an environment is the maximum population size of a biological species that can be sustained by that specific environment, given the food, habitat, water, and other resources available. The carrying capacity is defined as the environment's maximal load, which in population ecology corresponds to the population equilibrium, at which the number of deaths in a population equals the number of births. The effect of carrying capacity on population dynamics is modelled with a logistic function. The concept is used in ecology, agriculture and fisheries to describe the maximum population an environment can support. The term carrying capacity had been applied to several different processes before it was applied to population limits in the 1950s. The notion of carrying capacity for humans is covered by the notion of sustainable population.
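The logistic model of carrying capacity can be illustrated numerically. The sketch below assumes the standard continuous logistic equation dN/dt = rN(1 - N/K), where the intrinsic growth rate r and the carrying capacity K are illustrative values not given in the text. It integrates the equation with simple Euler steps, and the function name `simulate_logistic` is hypothetical. The population levels off at K, where net growth (births minus deaths) is zero.

```python
def simulate_logistic(n0: float, r: float, k: float, dt: float = 0.1, steps: int = 1000) -> list[float]:
    """Euler integration of dN/dt = r * N * (1 - N / K); returns the whole trajectory."""
    trajectory = [n0]
    n = n0
    for _ in range(steps):
        # Net growth shrinks to zero as N approaches the carrying capacity K.
        n += r * n * (1 - n / k) * dt
        trajectory.append(n)
    return trajectory

# Hypothetical parameters: 10 individuals, growth rate 0.5 per unit time, K = 1000.
traj = simulate_logistic(n0=10, r=0.5, k=1000)
print(round(traj[-1]))  # → 1000
```

A small step size (here r * dt = 0.05) keeps the Euler scheme from overshooting K; larger steps can make a discrete logistic model oscillate.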

The Limits to Growth (LTG) is a 1972 report that used computer simulation to examine the possibility of exponential economic and population growth with a finite supply of resources. The study used the World3 computer model to simulate the consequences of interactions between the Earth and human systems. The model was based on the work of Jay Forrester of MIT, as described in his book World Dynamics.

Economic growth can be defined as the increase or improvement in the inflation-adjusted market value of the goods and services produced by an economy in a financial year. Statisticians conventionally measure such growth as the percentage rate of increase in real (inflation-adjusted) gross domestic product (GDP).
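The percentage rate of increase is simple arithmetic; to report inflation-adjusted (real) growth, nominal values are first deflated by a price index. This is a minimal sketch under that assumption: the GDP figures, the deflator values and the function names `growth_rate_percent` and `to_real` are hypothetical.

```python
def growth_rate_percent(previous: float, current: float) -> float:
    """Percentage rate of increase from one period to the next."""
    return (current - previous) / previous * 100.0

def to_real(nominal: float, deflator: float, base: float = 100.0) -> float:
    """Deflate a nominal value with a price index that equals `base` in the base year."""
    return nominal / deflator * base

# Hypothetical economy: nominal GDP rises from 1000 to 1100 while prices rise 5%.
nominal_growth = growth_rate_percent(1000.0, 1100.0)
real_growth = growth_rate_percent(to_real(1000.0, 100.0), to_real(1100.0, 105.0))
print(f"nominal {nominal_growth:.2f}%, real {real_growth:.2f}%")  # → nominal 10.00%, real 4.76%
```

The gap between the two figures is the part of nominal growth that reflects higher prices rather than more goods and services.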

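A logistic function or logistic curve is a common S-shaped (sigmoid) curve with the equation

$$f(x) = \frac{L}{1 + e^{-k(x - x_0)}}$$

where $L$ is the maximum value of the curve, $k$ is the logistic growth rate (the steepness of the curve), and $x_0$ is the $x$ value of the curve's midpoint.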
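A minimal sketch of the standard logistic function f(x) = L / (1 + e^(-k(x - x0))), where L is the maximum value, k the steepness and x0 the midpoint. The function name `logistic` and its default parameters are illustrative. The two branches compute the same value algebraically, but the second avoids overflow in `math.exp` for large negative arguments.

```python
import math

def logistic(x: float, L: float = 1.0, k: float = 1.0, x0: float = 0.0) -> float:
    """Standard logistic curve f(x) = L / (1 + exp(-k * (x - x0)))."""
    z = k * (x - x0)
    if z >= 0:
        return L / (1.0 + math.exp(-z))
    # Equivalent form L * e^z / (1 + e^z): exp(z) cannot overflow when z < 0.
    ez = math.exp(z)
    return L * ez / (1.0 + ez)

print(logistic(0.0))                    # → 0.5 (the midpoint is at half of L)
print(logistic(5.0, L=100.0, x0=5.0))   # → 50.0
```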

Exponential growth is a process that increases a quantity over time at an ever-increasing rate. It occurs when the instantaneous rate of change of a quantity with respect to time is proportional to the quantity itself. Described as a function, a quantity undergoing exponential growth is an exponential function of time; that is, the variable representing time is the exponent. Exponential growth is the inverse of logarithmic growth.
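If the rate of change is proportional to the quantity, dN/dt = rN, the solution is N(t) = N0 e^(rt), and the quantity doubles every ln(2)/r time units. A short sketch of these two formulas follows; the growth rate is a hypothetical value and the function names are illustrative.

```python
import math

def exponential(n0: float, r: float, t: float) -> float:
    """Quantity at time t when dN/dt = r * N, i.e. N(t) = n0 * e^(r t)."""
    return n0 * math.exp(r * t)

def doubling_time(r: float) -> float:
    """Time for an exponentially growing quantity to double: ln(2) / r."""
    return math.log(2) / r

r = 0.07  # hypothetical 7% continuous growth rate per period
print(doubling_time(r))                        # about 9.9 periods
print(exponential(100, r, doubling_time(r)))   # approximately 200
# Taking the logarithm turns exponential growth back into a linear function of time.
print(math.log(exponential(1.0, r, 10)) / 10)  # approximately 0.07
```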

Productivity is the efficiency of production of goods or services, expressed by some measure. Measurements of productivity are often expressed as a ratio of an aggregate output to a single input or an aggregate input used in a production process, i.e. output per unit of input, typically over a specific period of time. The most common example is the (aggregate) labour productivity measure, such as GDP per worker. There are many different definitions of productivity, and the choice among them depends on the purpose of the measurement and on data availability. The main difference between productivity measures usually lies in how the outputs and inputs are aggregated to obtain such a ratio.
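The ratio definition can be made concrete with a small sketch. All figures are hypothetical, and aggregating several inputs with a simple weighted sum is an assumption made for illustration only; statistical agencies typically use more elaborate index-number methods. The function names are also illustrative.

```python
def partial_productivity(output: float, single_input: float) -> float:
    """Output per unit of one input, e.g. GDP per worker or GDP per hour worked."""
    return output / single_input

def multifactor_productivity(output: float, inputs: dict[str, float], weights: dict[str, float]) -> float:
    """Output per unit of a weighted aggregate of several inputs.

    The simple weighted sum used here is a hypothetical aggregation rule.
    """
    aggregate_input = sum(weights[name] * quantity for name, quantity in inputs.items())
    return output / aggregate_input

# Hypothetical economy over one year.
gdp = 2_000_000.0
print(partial_productivity(gdp, 50))      # GDP per worker → 40000.0
print(partial_productivity(gdp, 80_000))  # GDP per hour worked → 25.0
```

The same output yields very different numbers depending on which input is chosen and how inputs are combined, which is why productivity figures are only comparable when they share a definition.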

The Hubbert peak theory says that for any given geographical area, from an individual oil-producing region to the planet as a whole, the rate of petroleum production tends to follow a bell-shaped curve. It is one of the primary theories on peak oil.
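One common way to formalise the bell-shaped curve is to let cumulative production Q(t) follow a logistic function that approaches the ultimately recoverable resource (URR). The production rate is then dQ/dt, which peaks when half of the URR has been produced. This is a sketch under that assumption; the parameter names `urr`, `k` and `t_peak` and the figures for the region are hypothetical.

```python
import math

def hubbert_production(t: float, urr: float, k: float, t_peak: float) -> float:
    """Production rate in year t under a logistic Hubbert model.

    Cumulative production Q(t) = urr / (1 + exp(-k (t - t_peak))); the rate is
    its derivative, urr * k * e^(-z) / (1 + e^(-z))^2 with z = k (t - t_peak).
    """
    z = k * (t - t_peak)
    # The curve is symmetric in z, so using |z| keeps math.exp from overflowing.
    e = math.exp(-abs(z))
    return urr * k * e / (1.0 + e) ** 2

# Hypothetical region: 2000 units ultimately recoverable, steepness 0.1 per year, peak in 2000.
rates = {year: hubbert_production(year, urr=2000, k=0.1, t_peak=2000) for year in range(1900, 2101)}
peak_year = max(rates, key=rates.get)
print(peak_year, rates[peak_year])  # the peak rate equals urr * k / 4
```

Summing the yearly rates over a long enough window recovers approximately the URR, since the rate curve is the derivative of cumulative production.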

Population ecology is a sub-field of ecology that deals with the dynamics of species populations and how these populations interact with the environment, through processes such as birth and death rates, immigration and emigration.

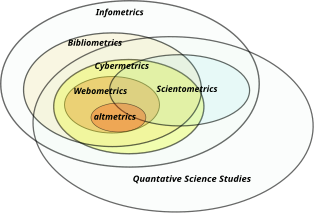

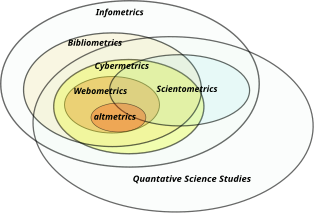

Bibliometrics is the use of statistical methods to analyse books, articles and other publications, especially scientific content. Bibliometric methods are frequently used in the field of library and information science. Bibliometrics is closely associated with scientometrics, the analysis of scientific metrics and indicators, to the point that both fields largely overlap.

Derek John de Solla Price was a British physicist, historian of science, and information scientist. He was known for his investigation of the Antikythera mechanism, an ancient Greek planetary computer, and for quantitative studies on scientific publications, which led to his being described as the "Herald of scientometrics".

Scientometrics is the field of study concerned with measuring and analysing scholarly literature. It is a sub-field of informetrics. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. In practice there is significant overlap between scientometrics and other scientific fields such as information systems, information science, science of science policy, sociology of science, and metascience. Critics have argued that over-reliance on scientometrics has created a system of perverse incentives, producing a "publish or perish" environment that leads to low-quality research.

Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the directed graph of citations (links from one document to another) to reveal properties of the documents. A typical aim is to identify the most important documents in a collection. A classic example is the network of citations between academic articles and books. In another example, judges support their judgements by referring back to judgements made in earlier cases. Patents provide a further example: they cite prior art, that is, earlier patents relevant to the current claim. The digitization of patent data and increasing computing power have led to a community of practice that uses these citation data to measure innovation attributes, trace knowledge flows, and map innovation networks.
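A minimal sketch of one simple importance measure in a citation graph: counting incoming citations, i.e. each document's in-degree. Representing the graph as a dictionary from citing to cited documents, the function name `most_cited` and the five-paper collection are assumptions for illustration; real analyses often use weighted or recursive measures instead.

```python
from collections import Counter

def most_cited(citations: dict[str, list[str]], top: int = 3) -> list[tuple[str, int]]:
    """Rank documents by the number of distinct documents that cite them.

    `citations` maps each citing document to the documents it cites (the
    out-edges of a directed citation graph); a document's in-degree is its
    citation count.
    """
    in_degree: Counter = Counter()
    for cited_docs in citations.values():
        for cited in set(cited_docs):  # count each citing -> cited link once
            in_degree[cited] += 1
    return in_degree.most_common(top)

# Hypothetical collection of five papers, A to E.
graph = {"A": ["B", "C"], "B": ["C"], "D": ["A", "C"], "E": ["C", "B"]}
print(most_cited(graph))  # → [('C', 4), ('B', 2), ('A', 1)]
```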

Informetrics is the study of quantitative aspects of information; it is an extension and evolution of traditional bibliometrics and scientometrics. Informetrics uses bibliometric and scientometric methods mainly to study problems of literature information management and the evaluation of science and technology. It is an independent discipline that applies quantitative methods from mathematics and statistics to study informetric processes, phenomena, and laws. Informetrics has gained more attention as a common scientific method for academic evaluation, for identifying research hotspots in a discipline, and for trend analysis.

Intraspecific competition is an interaction in population ecology whereby members of the same species compete for limited resources. This reduces the fitness of both individuals, but the fitter individual survives and is able to reproduce. By contrast, interspecific competition occurs when members of different species compete for a shared resource. Members of the same species have very similar resource requirements, whereas different species overlap less in the resources they contest, so intraspecific competition is generally a stronger force than interspecific competition.

Citation impact or citation rate is a measure of how many times an academic journal article, book or author is cited by other articles, books or authors. Citation counts are interpreted as measures of the impact or influence of academic work and have given rise to the field of bibliometrics or scientometrics, which specializes in the study of patterns of academic impact through citation analysis. The importance of journals can be measured by the average citation rate, the ratio of the number of citations to the number of articles published within a given time period and in a given index, as in the journal impact factor or the CiteScore. Such measures are used by academic institutions in decisions about academic tenure, promotion and hiring, and hence also by authors in deciding which journal to publish in. Citation-like measures are also used in other fields that involve ranking, such as Google's PageRank algorithm, software metrics, college and university rankings, and business performance indicators.
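The average citation rate is a ratio of citations to articles over a fixed window. A sketch in the style of the two-year journal impact factor follows: citations received in a given year by items published in the two preceding years, divided by the number of citable items published in those years. The journal figures and function names are hypothetical, and the rules for which items count as citable are not modelled.

```python
def average_citation_rate(citations: int, articles: int) -> float:
    """Citations received divided by articles published in the same window."""
    return citations / articles

def two_year_impact_factor(cites_by_pub_year: dict[int, int], citable_items: dict[int, int], year: int) -> float:
    """Citations received in `year` by items from the two preceding years,
    divided by the citable items published in those two years.

    cites_by_pub_year[y] = citations received during `year` by items published in y.
    """
    window = (year - 1, year - 2)
    cites = sum(cites_by_pub_year.get(y, 0) for y in window)
    items = sum(citable_items.get(y, 0) for y in window)
    return average_citation_rate(cites, items)

# Hypothetical journal data for the 2023 calculation.
print(two_year_impact_factor({2022: 150, 2021: 250}, {2022: 80, 2021: 120}, 2023))  # → 2.0
```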

The h-index is an author-level metric that measures both the productivity and the citation impact of a scholar's publications; it was initially used for individual scientists or scholars. The index is based on the scholar's most cited papers and the number of citations they have received in other publications: a scholar has an index of h if h of their papers have each been cited at least h times. The h-index correlates with success indicators such as winning the Nobel Prize, being accepted for research fellowships and holding positions at top universities. It has more recently been applied to the productivity and impact of scholarly journals and of groups of scientists, such as a department, university or country. The index was suggested in 2005 by Jorge E. Hirsch, a physicist at UC San Diego, as a tool for determining theoretical physicists' relative quality, and is sometimes called the Hirsch index or Hirsch number.
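The h-index is the largest h such that h papers have at least h citations each, so it can be computed by ranking papers by citation count and walking down the list. A minimal sketch; the function name and the citation counts are illustrative.

```python
def h_index(citation_counts: list[int]) -> int:
    """Largest h such that h of the papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts only decrease from here, so no larger h is possible
    return h

print(h_index([10, 8, 5, 4, 3]))  # → 4
print(h_index([25, 8, 5, 3, 3]))  # → 3
```

The second example shows that a single very highly cited paper does not raise the index on its own; the h-index rewards a body of consistently cited work.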

A fishery is an area with an associated fish or aquatic population that is harvested for its commercial or recreational value. Fisheries can be wild or farmed. Population dynamics describes how a given population grows and shrinks over time, as controlled by birth, death, and migration. It is the basis for understanding changing fishery patterns and issues such as habitat destruction, predation and optimal harvesting rates. Fisheries scientists use the population dynamics of fisheries to determine sustainable yields.
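One textbook way to connect population dynamics to sustainable yields is to assume the stock grows logistically, so that the catch which holds the stock at level N equals its net growth rN(1 - N/K). Under that assumption the maximum sustainable yield is rK/4, taken when the stock is at K/2. This is a sketch of that simplified model only; real stock assessments use more detailed models, and the growth rate, carrying capacity and function names below are hypothetical.

```python
def surplus_production(stock: float, r: float, k: float) -> float:
    """Net logistic growth r * N * (1 - N / K): the catch that keeps the stock at N."""
    return r * stock * (1.0 - stock / k)

def maximum_sustainable_yield(r: float, k: float) -> tuple[float, float]:
    """Return (MSY, stock level at MSY) for the logistic model: (r * K / 4, K / 2)."""
    return r * k / 4.0, k / 2.0

r, k = 0.4, 10_000.0  # hypothetical growth rate (per year) and carrying capacity (tonnes)
msy, stock_at_msy = maximum_sustainable_yield(r, k)
# Brute-force check: scanning whole-tonne stock levels finds the same optimum.
best_stock = max(range(0, int(k) + 1), key=lambda n: surplus_production(n, r, k))
print(msy, stock_at_msy, best_stock)
```

Harvesting more than the surplus production at the current stock level shrinks the stock, which in this model lowers the yield that can be sustained afterwards.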

The Eigenfactor score, developed by Jevin West and Carl Bergstrom at the University of Washington, is a rating of the total importance of a scientific journal. Journals are rated according to the number of incoming citations, with citations from highly ranked journals weighted to contribute more to the Eigenfactor score than those from poorly ranked journals. As a measure of importance, the Eigenfactor score scales with the total impact of a journal: all else being equal, journals with a greater impact on their field have larger Eigenfactor scores. Citation metrics such as the Eigenfactor score and other PageRank-based scores reduce the effect of self-referential groups.
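The idea that citations from highly ranked journals count for more can be sketched with a PageRank-style power iteration over a journal citation network. This is not the official Eigenfactor procedure, which adds further steps such as a multi-year citation window and normalisation by article counts. The damping factor of 0.85, the handling of journals that cite nothing, the decision to ignore journal self-citations, and the function name `journal_influence` are all assumptions of this sketch.

```python
def journal_influence(cites: dict[str, dict[str, int]], damping: float = 0.85, iterations: int = 100) -> dict[str, float]:
    """PageRank-style scores; cites[a][b] = number of citations from journal a to journal b."""
    journals = sorted(set(cites) | {b for out in cites.values() for b in out})
    n = len(journals)
    score = {j: 1.0 / n for j in journals}
    for _ in range(iterations):
        new = {j: (1.0 - damping) / n for j in journals}
        for a in journals:
            # Ignore journal self-citations (an assumption of this sketch).
            out = {b: c for b, c in cites.get(a, {}).items() if b != a}
            total = sum(out.values())
            if total == 0:
                # A journal that cites nothing spreads its score evenly.
                for j in journals:
                    new[j] += damping * score[a] / n
            else:
                # Each journal passes its score on in proportion to its outgoing citations.
                for b, c in out.items():
                    new[b] += damping * score[a] * c / total
        score = new
    return score

# Hypothetical network: H is cited by A, B, C and D; X and Y each receive one citation.
network = {"A": {"H": 1, "Y": 1}, "B": {"H": 1}, "C": {"H": 1}, "D": {"H": 1}, "H": {"X": 1}}
scores = journal_influence(network)
print(sorted(scores.items(), key=lambda item: -item[1]))
```

X and Y each receive a single citation, but X ends up with the higher score because its citation comes from the highly cited journal H, while Y's comes from A, which is itself uncited.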

Author-level metrics are citation metrics that measure the bibliometric impact of individual authors, researchers, academics, and scholars. Many metrics have been developed that take into account varying numbers of factors.