In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm.

In computational complexity theory, the class NC is the set of decision problems decidable in polylogarithmic time on a parallel computer with a polynomial number of processors. In other words, a problem with input size n is in NC if there exist constants c and k such that it can be solved in time O(logc n) using O(nk) parallel processors. Stephen Cook coined the name "Nick's class" after Nick Pippenger, who had done extensive research on circuits with polylogarithmic depth and polynomial size.

In computational complexity theory, PSPACE is the set of all decision problems that can be solved by a Turing machine using a polynomial amount of space.

In computational complexity theory, a decision problem is P-complete if it is in P and every problem in P can be reduced to it by an appropriate reduction.

In theoretical computer science, a probabilistic Turing machine is a non-deterministic Turing machine that chooses between the available transitions at each point according to some probability distribution. As a consequence, a probabilistic Turing machine can—unlike a deterministic Turing Machine—have stochastic results; that is, on a given input and instruction state machine, it may have different run times, or it may not halt at all; furthermore, it may accept an input in one execution and reject the same input in another execution.

In computational complexity theory, a complexity class is a set of computational problems of related resource-based complexity. The two most commonly analyzed resources are time and memory.

In computational complexity theory, a probabilistically checkable proof (PCP) is a type of proof that can be checked by a randomized algorithm using a bounded amount of randomness and reading a bounded number of bits of the proof. The algorithm is then required to accept correct proofs and reject incorrect proofs with very high probability. A standard proof, as used in the verifier-based definition of the complexity class NP, also satisfies these requirements, since the checking procedure deterministically reads the whole proof, always accepts correct proofs and rejects incorrect proofs. However, what makes them interesting is the existence of probabilistically checkable proofs that can be checked by reading only a few bits of the proof using randomness in an essential way.

In computational complexity theory, Savitch's theorem, proved by Walter Savitch in 1970, gives a relationship between deterministic and non-deterministic space complexity. It states that for any function ,

In computational complexity theory, DSPACE or SPACE is the computational resource describing the resource of memory space for a deterministic Turing machine. It represents the total amount of memory space that a "normal" physical computer would need to solve a given computational problem with a given algorithm.

In computational complexity theory, an advice string is an extra input to a Turing machine that is allowed to depend on the length n of the input, but not on the input itself. A decision problem is in the complexity class P/f(n) if there is a polynomial time Turing machine M with the following property: for any n, there is an advice string A of length f(n) such that, for any input x of length n, the machine M correctly decides the problem on the input x, given x and A.

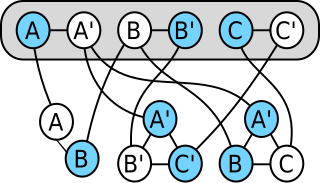

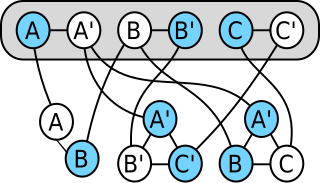

In computability theory and computational complexity theory, a reduction is an algorithm for transforming one problem into another problem. A sufficiently efficient reduction from one problem to another may be used to show that the second problem is at least as difficult as the first.

In computational complexity theory, NL is the complexity class containing decision problems that can be solved by a nondeterministic Turing machine using a logarithmic amount of memory space.

In computational complexity theory, L is the complexity class containing decision problems that can be solved by a deterministic Turing machine using a logarithmic amount of writable memory space. Formally, the Turing machine has two tapes, one of which encodes the input and can only be read, whereas the other tape has logarithmic size but can be read as well as written. Logarithmic space is sufficient to hold a constant number of pointers into the input and a logarithmic number of boolean flags, and many basic logspace algorithms use the memory in this way.

In computational complexity theory, SL is the complexity class of problems log-space reducible to USTCON, which is the problem of determining whether there exists a path between two vertices in an undirected graph, otherwise described as the problem of determining whether two vertices are in the same connected component. This problem is also called the undirected reachability problem. It does not matter whether many-one reducibility or Turing reducibility is used. Although originally described in terms of symmetric Turing machines, that equivalent formulation is very complex, and the reducibility definition is what is used in practice.

Randomized Logarithmic-space (RL), sometimes called RLP, is the complexity class of computational complexity theory problems solvable in logarithmic space and polynomial time with probabilistic Turing machines with one-sided error. It is named in analogy with RP, which is similar but has no logarithmic space restriction.

In computer science, st-connectivity or STCON is a decision problem asking, for vertices s and t in a directed graph, if t is reachable from s.

In computational complexity theory, NL-complete is a complexity class containing the languages that are complete for NL, the class of decision problems that can be solved by a nondeterministic Turing machine using a logarithmic amount of memory space. The NL-complete languages are the most "difficult" or "expressive" problems in NL. If a deterministic algorithm exists for solving any one of the NL-complete problems in logarithmic memory space, then NL = L.

AC0 is a complexity class used in circuit complexity. It is the smallest class in the AC hierarchy, and consists of all families of circuits of depth O(1) and polynomial size, with unlimited-fanin AND gates and OR gates (we allow NOT gates only at the inputs). It thus contains NC0, which has only bounded-fanin AND and OR gates.

In computational complexity theory, a certificate is a string that certifies the answer to a computation, or certifies the membership of some string in a language. A certificate is often thought of as a solution path within a verification process, which is used to check whether a problem gives the answer "Yes" or "No".

In computational complexity theory, a problem is NP-complete when:

- it is a problem for which the correctness of each solution can be verified quickly and a brute-force search algorithm can find a solution by trying all possible solutions.

- the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified.