In computability theory and computational complexity theory, a decision problem is a computational problem that can be posed as a yes–no question of the input values. An example of a decision problem is deciding by means of an algorithm whether a given natural number is prime. Another is the problem "given two numbers x and y, does x evenly divide y?". The answer is either 'yes' or 'no' depending upon the values of x and y. A method for solving a decision problem, given in the form of an algorithm, is called a decision procedure for that problem. A decision procedure for the decision problem "given two numbers x and y, does x evenly divide y?" would give the steps for determining whether x evenly divides y. One such algorithm is long division. If the remainder is zero the answer is 'yes', otherwise it is 'no'. A decision problem which can be solved by an algorithm is called decidable.

In complexity theory and computability theory, an oracle machine is an abstract machine used to study decision problems. It can be visualized as a Turing machine with a black box, called an oracle, which is able to solve certain problems in a single operation. The problem can be of any complexity class. Even undecidable problems, such as the halting problem, can be used.

In computability theory, Rice's theorem states that all non-trivial semantic properties of programs are undecidable. A semantic property is one about the program's behavior, unlike a syntactic property. A non-trivial property is one which is neither true for every program, nor false for every program.

In theoretical computer science and mathematics, the theory of computation is the branch that deals with what problems can be solved on a model of computation, using an algorithm, how efficiently they can be solved or to what degree. The field is divided into three major branches: automata theory and formal languages, computability theory, and computational complexity theory, which are linked by the question: "What are the fundamental capabilities and limitations of computers?".

In mathematics, logic and computer science, a formal language is called recursively enumerable if it is a recursively enumerable subset in the set of all possible words over the alphabet of the language, i.e., if there exists a Turing machine which will enumerate all valid strings of the language.

Computability theory, also known as recursion theory, is a branch of mathematical logic, computer science, and the theory of computation that originated in the 1930s with the study of computable functions and Turing degrees. The field has since expanded to include the study of generalized computability and definability. In these areas, computability theory overlaps with proof theory and effective descriptive set theory.

In computability theory, a set S of natural numbers is called computably enumerable (c.e.), recursively enumerable (r.e.), semidecidable, partially decidable, listable, provable or Turing-recognizable if:

In computability theory, a set of natural numbers is called computable, recursive, or decidable if there is an algorithm which takes a number as input, terminates after a finite amount of time and correctly decides whether the number belongs to the set or not.

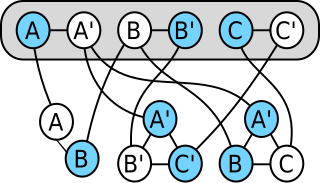

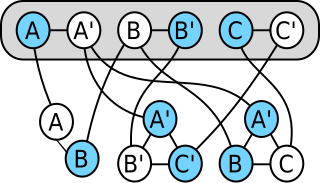

In computability theory and computational complexity theory, a many-one reduction is a reduction that converts instances of one decision problem to another decision problem using a computable function. The reduced instance is in the language if and only if the initial instance is in its language . Thus if we can decide whether instances are in the language , we can decide whether instances are in its language by applying the reduction and solving for . Thus, reductions can be used to measure the relative computational difficulty of two problems. It is said that reduces to if, in layman's terms is at least as hard to solve as . This means that any algorithm that solves can also be used as part of a program that solves .

Computability is the ability to solve a problem in an effective manner. It is a key topic of the field of computability theory within mathematical logic and the theory of computation within computer science. The computability of a problem is closely linked to the existence of an algorithm to solve the problem.

In computer science and mathematical logic the Turing degree or degree of unsolvability of a set of natural numbers measures the level of algorithmic unsolvability of the set.

In computability theory and computational complexity theory, a reduction is an algorithm for transforming one problem into another problem. A sufficiently efficient reduction from one problem to another may be used to show that the second problem is at least as difficult as the first.

Computable functions are the basic objects of study in computability theory. Computable functions are the formalized analogue of the intuitive notion of algorithms, in the sense that a function is computable if there exists an algorithm that can do the job of the function, i.e. given an input of the function domain it can return the corresponding output. Computable functions are used to discuss computability without referring to any concrete model of computation such as Turing machines or register machines. Any definition, however, must make reference to some specific model of computation but all valid definitions yield the same class of functions. Particular models of computability that give rise to the set of computable functions are the Turing-computable functions and the general recursive functions.

In computability theory, a decider is a Turing machine that halts for every input. A decider is also called a total Turing machine as it represents a total function.

In computability theory, a truth-table reduction is a reduction from one set of natural numbers to another. As a "tool", it is weaker than Turing reduction, since not every Turing reduction between sets can be performed by a truth-table reduction, but every truth-table reduction can be performed by a Turing reduction. For the same reason it is said to be a stronger reducibility than Turing reducibility, because it implies Turing reducibility. A weak truth-table reduction is a related type of reduction, which is so named because it weakens the constraints placed on a truth-table reduction, and provides a weaker equivalence classification; as such, a "weak truth-table reduction" can actually be more powerful than a truth-table reduction as a "tool", and perform a reduction that is not performable by truth table.

In logic, finite model theory, and computability theory, Trakhtenbrot's theorem states that the problem of validity in first-order logic on the class of all finite models is undecidable. In fact, the class of valid sentences over finite models is not recursively enumerable.

In computability theory, many reducibility relations are studied. They are motivated by the question: given sets and of natural numbers, is it possible to effectively convert a method for deciding membership in into a method for deciding membership in ? If the answer to this question is affirmative then is said to be reducible to.

In computability theory, the halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever. The halting problem is undecidable, meaning that no general algorithm exists that solves the halting problem for all possible program–input pairs.

Generic-case complexity is a subfield of computational complexity theory that studies the complexity of computational problems on "most inputs".

In computability theory and computational complexity theory, enumeration reducibility is a method of reduction that determines if there is some effective procedure for determining enumerability between sets of natural numbers. An enumeration in the context of e-reducibility is a listing of the elements in a particular set, or collection of items, though not necessarily ordered or complete.