Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines to applied disciplines. Though more often considered an academic discipline, computer science is closely related to computer programming.

In programming language theory, lazy evaluation, or call-by-need, is an evaluation strategy which delays the evaluation of an expression until its value is needed and which also avoids repeated evaluations.

In computer science, an abstract machine is a theoretical model that allows for a detailed and precise analysis of how a computer system functions. It is similar to a mathematical function in that it receives inputs and produces outputs based on predefined rules. Abstract machines vary from literal machines in that they are expected to perform correctly and independently of hardware. Abstract machines are "machines" because they allow step-by-step execution of programmes; they are "abstract" because they ignore many aspects of actual (hardware) machines. A typical abstract machine consists of a definition in terms of input, output, and the set of allowable operations used to turn the former into the latter. They can be used for purely theoretical reasons as well as models for real-world computer systems. In the theory of computation, abstract machines are often used in thought experiments regarding computability or to analyse the complexity of algorithms. This use of abstract machines is fundamental to the field of computational complexity theory, such as finite state machines, Mealy machines, push-down automata, and Turing machines.

In computing, partial evaluation is a technique for several different types of program optimization by specialization. The most straightforward application is to produce new programs that run faster than the originals while being guaranteed to behave in the same way.

Alan Jay Perlis was an American computer scientist and professor at Purdue University, Carnegie Mellon University and Yale University. He is best known for his pioneering work in programming languages and was the first recipient of the Turing Award.

Speculative execution is an optimization technique where a computer system performs some task that may not be needed. Work is done before it is known whether it is actually needed, so as to prevent a delay that would have to be incurred by doing the work after it is known that it is needed. If it turns out the work was not needed after all, most changes made by the work are reverted and the results are ignored.

In theoretical computer science, an algorithm is correct with respect to a specification if it behaves as specified. Best explored is functional correctness, which refers to the input-output behavior of the algorithm.

Datalog is a declarative logic programming language. While it is syntactically a subset of Prolog, Datalog generally uses a bottom-up rather than top-down evaluation model. This difference yields significantly different behavior and properties from Prolog. It is often used as a query language for deductive databases. Datalog has been applied to problems in data integration, networking, program analysis, and more.

Peter James Denning is an American computer scientist and writer. He is best known for pioneering work in virtual memory, especially for inventing the working-set model for program behavior, which addressed thrashing in operating systems and became the reference standard for all memory management policies. He is also known for his works on principles of operating systems, operational analysis of queueing network systems, design and implementation of CSNET, the ACM digital library, and codifying the great principles of computing. He has written numerous influential articles and books, including an overview of fundamental computer science principles, computational thinking, and his thoughts on innovation as a set of learnable practices.

Ben Shneiderman is an American computer scientist, a Distinguished University Professor in the University of Maryland Department of Computer Science, which is part of the University of Maryland College of Computer, Mathematical, and Natural Sciences at the University of Maryland, College Park, and the founding director (1983-2000) of the University of Maryland Human-Computer Interaction Lab. He conducted fundamental research in the field of human–computer interaction, developing new ideas, methods, and tools such as the direct manipulation interface, and his eight rules of design.

In computer science, unbounded nondeterminism or unbounded indeterminacy is a property of concurrency by which the amount of delay in servicing a request can become unbounded as a result of arbitration of contention for shared resources while still guaranteeing that the request will eventually be serviced. Unbounded nondeterminism became an important issue in the development of the denotational semantics of concurrency, and later became part of research into the theoretical concept of hypercomputation.

Oscar Peter Buneman, is a British computer scientist who works in the areas of database systems and database theory.

Georg Gottlob FRS is an Austrian-Italian computer scientist who works in the areas of database theory, logic, and artificial intelligence and is Professor of Informatics at the University of Calabria. He was Professor at the University of Oxford.

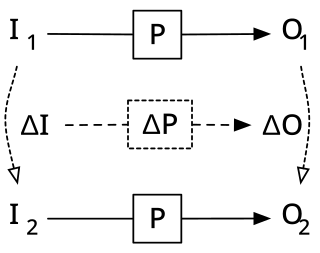

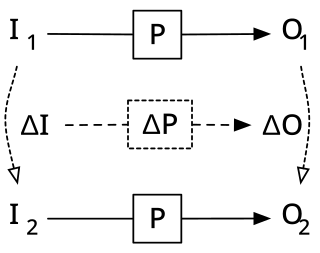

Incremental computing, also known as incremental computation, is a software feature which, whenever a piece of data changes, attempts to save time by only recomputing those outputs which depend on the changed data. When incremental computing is successful, it can be significantly faster than computing new outputs naively. For example, a spreadsheet software package might use incremental computation in its recalculation feature, to update only those cells containing formulas which depend on the changed cells.

Larry Joseph Stockmeyer was an American computer scientist. He was one of the pioneers in the field of computational complexity theory, and he also worked in the field of distributed computing. He died of pancreatic cancer.

Peter A. Wegner was a professor of computer science at Brown University from 1969 to 1999. He made significant contributions to both the theory of object-oriented programming during the 1980s and to the relevance of the Church–Turing thesis for empirical aspects of computer science during the 1990s and present. In 2016, Wegner wrote a brief autobiography for Conduit, the annual Brown University Computer Science department magazine.

Inductive programming (IP) is a special area of automatic programming, covering research from artificial intelligence and programming, which addresses learning of typically declarative and often recursive programs from incomplete specifications, such as input/output examples or constraints.

The size-change termination principle (SCT) guarantees termination for a computer program by proving that infinite computations always trigger infinite descent in data values that are well-founded. Size-change termination analysis utilizes this principle in order to solve the universal halting problem for a certain class of programs. When applied to general programs, the principle is intended to be used conservatively, which means that if the analysis determines that a program is terminating, the answer is sound, but a negative answer means "don't know". The decision problem for SCT is PSPACE-complete; however, there exists an algorithm that computes an approximation of the decision problem in polynomial time. Size-change analysis is applicable to both first-order and higher-order functional programs, as well as imperative programs and logic programs. The latter application preceded by four years the general formulation of the principle by Lee et al.