Related Research Articles

Code-division multiple access (CDMA) is a channel access method used by various radio communication technologies. CDMA is an example of multiple access, where several transmitters can send information simultaneously over a single communication channel. This allows several users to share a band of frequencies. To permit this without undue interference between the users, CDMA employs spread spectrum technology and a special coding scheme.
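
As a rough illustration of the coding idea, the sketch below lets two transmitters share one channel using orthogonal spreading codes. The length-4 codes, the bit patterns, and the helper names `spread` and `despread` are assumptions made for this example; it models an idealized, synchronous, noise-free channel and not any particular CDMA standard.

```python
def spread(bits, code):
    """Map bits {0, 1} to symbols {-1, +1} and multiply each symbol by the chip code."""
    chips = []
    for bit in bits:
        symbol = 1 if bit else -1
        chips.extend(symbol * chip for chip in code)
    return chips


def despread(signal, code):
    """Correlate each code-length block with the code and take the sign as the bit."""
    n = len(code)
    bits = []
    for start in range(0, len(signal), n):
        block = signal[start:start + n]
        correlation = sum(s * c for s, c in zip(block, code))
        bits.append(1 if correlation > 0 else 0)
    return bits


# Two mutually orthogonal codes (their element-wise product sums to zero).
code_a = [1, -1, 1, -1]
code_b = [1, 1, -1, -1]

bits_a = [1, 0, 1, 1]
bits_b = [0, 0, 1, 0]

# Both users transmit at once; the channel simply adds their chip sequences.
channel = [x + y for x, y in zip(spread(bits_a, code_a), spread(bits_b, code_b))]

recovered_a = despread(channel, code_a)
recovered_b = despread(channel, code_b)
```

Because the two codes are orthogonal, correlating the summed signal with one code cancels the other user's contribution, so each receiver recovers only its own bits.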

In electronics and telecommunications, modulation is the process of varying one or more properties of a periodic waveform, called the carrier signal, with a separate signal called the modulation signal that typically contains information to be transmitted. For example, the modulation signal might be an audio signal representing sound from a microphone, a video signal representing moving images from a video camera, or a digital signal representing a sequence of binary digits, a bitstream from a computer.

A quantum computer is a computer that exploits quantum mechanical phenomena. At small scales, physical matter exhibits properties of both particles and waves, and quantum computing leverages this behavior using specialized hardware. Classical physics cannot explain the operation of these quantum devices, and a scalable quantum computer could perform some calculations exponentially faster than any modern "classical" computer. In particular, a large-scale quantum computer could break widely used encryption schemes and aid physicists in performing physical simulations; however, the current state of the art is still largely experimental and impractical.

In quantum computing, a qubit or quantum bit is a basic storage unit or symbol in which a bit of quantum information is stored or encoded. It is the quantum version of the classic binary bit, physically realized with a two-state device. "Storage" is the preferred terminology in computing, whereas "symbol" is the preferred terminology in digital communication.

Pink noise, 1⁄f noise or fractal noise is a signal or process with a frequency spectrum such that the power spectral density is inversely proportional to the frequency of the signal. In pink noise, each octave interval carries an equal amount of noise energy.

A qutrit is a unit of quantum information that is realized by a 3-level quantum system, which may be in a superposition of three mutually orthogonal quantum states.

Landauer's principle is a physical principle pertaining to the lower theoretical limit of energy consumption of computation. It holds that an irreversible change in information stored in a computer, such as merging two computational paths, dissipates a minimum amount of heat to its surroundings.
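
The minimum heat is commonly written as k_B·T·ln 2 per erased bit, where k_B is the Boltzmann constant and T is the temperature of the surroundings. A minimal sketch of that arithmetic follows; the function name `landauer_limit_joules` and the choice of 300 K as "room temperature" are assumptions for illustration.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def landauer_limit_joules(temperature_kelvin, bits_erased=1):
    """Lower bound on heat dissipated when erasing bits at the given temperature."""
    return bits_erased * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


# Bound for erasing a single bit at an assumed room temperature of 300 K.
room_temperature_limit = landauer_limit_joules(300.0)
print(f"{room_temperature_limit:.3e} J per bit at 300 K")
```

At 300 K the bound is about 2.87e-21 J per bit, many orders of magnitude below the energy per operation of present-day electronics.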

BB84 is a quantum key distribution scheme developed by Charles Bennett and Gilles Brassard in 1984. It is the first quantum cryptography protocol. The protocol is provably secure, relying on two conditions: (1) the quantum property that information gain is only possible at the expense of disturbing the signal if the two states one is trying to distinguish are not orthogonal; and (2) the existence of an authenticated public classical channel. It is usually explained as a method of securely communicating a private key from one party to another for use in one-time pad encryption.
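
The basis-sifting step of the protocol can be imitated with a toy classical simulation. The sketch below assumes an ideal channel with no eavesdropper and no noise; the function name `bb84_sift`, the basis labels `"+"` and `"x"`, and the seed are illustrative choices, and no real quantum states are involved.

```python
import random


def bb84_sift(n_qubits, seed=0):
    """Simulate BB84 basis choices and return the sifted keys of Alice and Bob."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_qubits)]
    alice_bases = [rng.choice("+x") for _ in range(n_qubits)]
    bob_bases = [rng.choice("+x") for _ in range(n_qubits)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if a_basis == b_basis:
            bob_bits.append(bit)  # matching basis: the outcome equals Alice's bit
        else:
            bob_bits.append(rng.randint(0, 1))  # mismatched basis: random outcome

    # Bases are compared over the public channel; only matching positions are kept.
    keep = [i for i in range(n_qubits) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_bits[i] for i in keep]
    return alice_key, bob_key


alice_key, bob_key = bb84_sift(32)
```

On average about half of the positions survive sifting. In the full protocol the parties would also compare a sample of the sifted key to estimate the error rate, since an eavesdropper measuring in the wrong basis disturbs the states.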

Objective-collapse theories, also known as models of spontaneous wave function collapse or dynamical reduction models, are proposed solutions to the measurement problem in quantum mechanics. As with other theories called interpretations of quantum mechanics, they are possible explanations of why and how quantum measurements always give definite outcomes, not a superposition of them as predicted by the Schrödinger equation, and more generally how the classical world emerges from quantum theory. The fundamental idea is that the unitary evolution of the wave function describing the state of a quantum system is approximate. It works well for microscopic systems, but progressively loses its validity when the mass or complexity of the system increases.

In statistical signal processing, the goal of spectral density estimation (SDE) or simply spectral estimation is to estimate the spectral density of a signal from a sequence of time samples of the signal. Intuitively speaking, the spectral density characterizes the frequency content of the signal. One purpose of estimating the spectral density is to detect any periodicities in the data, by observing peaks at the frequencies corresponding to these periodicities.
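
One of the simplest estimators is the periodogram, the squared magnitude of the discrete Fourier transform divided by the number of samples. The sketch below uses a direct O(N²) transform for clarity; the test signal (two sinusoids at bins 5 and 12) and the name `periodogram` are assumptions for this example, and practical estimators typically add windowing or averaging.

```python
import cmath
import math


def periodogram(samples):
    """Return |X_k|^2 / N for the non-negative frequency bins k = 0 .. N/2."""
    n = len(samples)
    powers = []
    for k in range(n // 2 + 1):
        x_k = sum(
            s * cmath.exp(-2j * math.pi * k * t / n)
            for t, s in enumerate(samples)
        )
        powers.append(abs(x_k) ** 2 / n)
    return powers


n = 64
# Hypothetical test signal: a strong component at bin 5 and a weaker one at bin 12.
samples = [
    math.sin(2 * math.pi * 5 * t / n) + 0.5 * math.sin(2 * math.pi * 12 * t / n)
    for t in range(n)
]

powers = periodogram(samples)
peak_bin = max(range(len(powers)), key=powers.__getitem__)
```

The largest value in `powers` falls at the bin of the dominant sinusoid, which is how peaks in the estimate reveal periodicities in the data.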

Amplitude and phase-shift keying (APSK) is a digital modulation scheme that conveys data by modulating both the amplitude and the phase of a carrier wave. In other words, it combines both amplitude-shift keying (ASK) and phase-shift keying (PSK). This allows for a lower bit error rate for a given modulation order and signal-to-noise ratio, at the cost of increased complexity, compared to ASK or PSK alone.

The Hilbert–Huang transform (HHT) is a way to decompose a signal into so-called intrinsic mode functions (IMF) along with a trend, and obtain instantaneous frequency data. It is designed to work well for data that is nonstationary and nonlinear. In contrast to other common transforms like the Fourier transform, the HHT is an algorithm that can be applied to a data set, rather than a theoretical tool.

Laszlo Bela Kish is a physicist and professor of Electrical and Computer Engineering at Texas A&M University. His work covers a wide range of issues surrounding the physics and technical applications of stochastic fluctuations (noises) in physical, biological and technological systems, including nanotechnology. His earlier long-term positions include the Department of Experimental Physics, University of Szeged, Hungary, and Angstrom Laboratory, Uppsala University, Sweden (1997–2001). During the same periods he also conducted scientific research in short-term positions, such as at the Eindhoven University of Technology, University of Cologne, National Research Laboratory of Metrology, University of Birmingham, and others.

Stochastic computing is a collection of techniques that represent continuous values by streams of random bits. Complex computations can then be performed by simple bit-wise operations on the streams. Stochastic computing is distinct from the study of randomized algorithms.
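
A standard textbook illustration is multiplication: if two independent streams carry the values p and q as their probabilities of a 1, a bit-wise AND yields a stream whose probability of a 1 is p·q. The sketch below assumes this unipolar encoding; the helper names, the stream length, and the seed are choices made for the example.

```python
import random


def encode(value, length, rng):
    """Represent a value in [0, 1] as a stream whose bits are 1 with that probability."""
    return [1 if rng.random() < value else 0 for _ in range(length)]


def decode(stream):
    """Estimate the encoded value as the fraction of 1 bits."""
    return sum(stream) / len(stream)


def multiply(stream_a, stream_b):
    """Multiply two independently generated streams with a bit-wise AND."""
    return [a & b for a, b in zip(stream_a, stream_b)]


rng = random.Random(42)
stream_a = encode(0.6, 100_000, rng)
stream_b = encode(0.5, 100_000, rng)

product_estimate = decode(multiply(stream_a, stream_b))
```

The estimate approaches 0.6 × 0.5 = 0.3 as the streams get longer; precision improves only with the square root of the stream length, which is the usual trade-off of this representation.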

A digital signal is a signal that represents data as a sequence of discrete values; at any given time it can only take on, at most, one of a finite number of values. This contrasts with an analog signal, which represents continuous values; at any given time it represents a real number within a continuous range of values.

Sensing of phage-triggered ion cascades (SEPTIC) is a prompt bacterium identification method based on fluctuation-enhanced sensing in fluid medium. The advantages of SEPTIC are the specificity and speed offered by the characteristics of phage infection, the sensitivity due to fluctuation-enhanced sensing, and durability originating from the robustness of phages. An ideal SEPTIC device may be as small as a pen and may be able to identify a library of different bacteria within a measurement window of a few minutes.

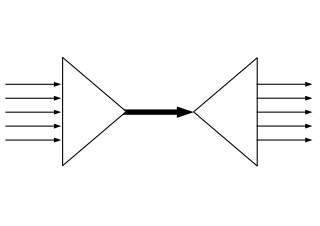

Fluctuation-enhanced sensing (FES) is a specific type of chemical or biological sensing where the stochastic component, noise, of the sensor signal is analyzed. The stages following the sensor in an FES system typically contain filters and preamplifier(s) to extract and amplify the stochastic signal components, which are usually microscopic temporal fluctuations that are orders of magnitude weaker than the sensor signal. Then selected statistical properties of the amplified noise are analyzed, and a corresponding pattern is generated as the stochastic fingerprint of the sensed agent. Often the power density spectrum of the stochastic signal is used as the output pattern; however, FES has also proven effective with more advanced methods, such as higher-order statistics.

In mathematics and telecommunications, stochastic geometry models of wireless networks refer to mathematical models based on stochastic geometry that are designed to represent aspects of wireless networks. The related research consists of analyzing these models with the aim of better understanding wireless communication networks in order to predict and control various network performance metrics. The models require using techniques from stochastic geometry and related fields including point processes, spatial statistics, geometric probability, percolation theory, as well as methods from more general mathematical disciplines such as geometry, probability theory, stochastic processes, queueing theory, information theory, and Fourier analysis.

Quantum machine learning is the integration of quantum algorithms within machine learning programs. The most common use of the term refers to machine learning algorithms for the analysis of classical data executed on a quantum computer, i.e. quantum-enhanced machine learning. While machine learning algorithms are used to process immense quantities of data, quantum machine learning utilizes qubits and quantum operations or specialized quantum systems to improve the computational speed and data storage of the algorithms in a program. This includes hybrid methods that involve both classical and quantum processing, where computationally difficult subroutines are outsourced to a quantum device. These routines can be more complex in nature and executed faster on a quantum computer. Furthermore, quantum algorithms can be used to analyze quantum states instead of classical data. Beyond quantum computing, the term "quantum machine learning" is also associated with classical machine learning methods applied to data generated from quantum experiments, such as learning the phase transitions of a quantum system or creating new quantum experiments. Quantum machine learning also extends to a branch of research that explores methodological and structural similarities between certain physical systems and learning systems, in particular neural networks. For example, some mathematical and numerical techniques from quantum physics are applicable to classical deep learning and vice versa. Furthermore, researchers investigate more abstract notions of learning theory with respect to quantum information, sometimes referred to as "quantum learning theory".

This glossary of quantum computing is a list of definitions of terms and concepts used in quantum computing, its sub-disciplines, and related fields.

References

- David Boothroyd (22 February 2011). "Cover Story: What's this noise all about?". New Electronics.

- Justin Mullins (7 October 2010). "Breaking the Noise Barrier: Enter the phonon computer". New Scientist. Archived from the original on 2016-04-13.

- Laszlo B. Kish (2009). "Noise-based logic: Binary, multi-valued, or fuzzy, with optional superposition of logic states". Physics Letters A. 373 (10): 911–918. arXiv:0808.3162. Bibcode:2009PhLA..373..911K. doi:10.1016/j.physleta.2008.12.068. S2CID 17537255.

- Laszlo B. Kish; Sunil Khatri; Swaminathan Sethuraman (2009). "Noise-based logic hyperspace with the superposition of 2^N states in a single wire". Physics Letters A. 373 (22): 1928–1934. arXiv:0901.3947. Bibcode:2009PhLA..373.1928K. doi:10.1016/j.physleta.2009.03.059. S2CID 15254977.

- Sergey M. Bezrukov; Laszlo B. Kish (2009). "Deterministic multivalued logic scheme for information processing and routing in the brain". Physics Letters A. 373 (27–28): 2338–2342. arXiv:0902.2033. Bibcode:2009PhLA..373.2338B. doi:10.1016/j.physleta.2009.04.073. S2CID 119241496.

- Laszlo B. Kish; Sunil Khatri; Ferdinand Peper (2010). "Instantaneous noise-based logic". Fluctuation and Noise Letters. 9 (4): 323–330. arXiv:1004.2652. doi:10.1142/S0219477510000253. S2CID 17034438.

- Ferdinand Peper; Laszlo B. Kish (2011). "Instantaneous, Non-Squeezed, Noise-Based Logic". Fluctuation and Noise Letters. 10 (2): 231–237. arXiv:1012.3531. doi:10.1142/S0219477511000521. S2CID 1610981.

- Laszlo B. Kish; Sunil Khatri; Tamas Horvath (2011). "Computation using Noise-based Logic: Efficient String Verification over a Slow Communication Channel". The European Physical Journal B. 79 (1): 85–90. arXiv:1005.1560. Bibcode:2011EPJB...79...85K. doi:10.1140/epjb/e2010-10399-x. S2CID 15608951.