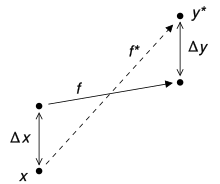

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given one is solving for x, and thus the condition number of the (local) inverse must be used.

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt at finding approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

Levinson recursion or Levinson–Durbin recursion is a procedure in linear algebra to recursively calculate the solution to an equation involving a Toeplitz matrix. The algorithm runs in Θ(n2) time, which is a strong improvement over Gauss–Jordan elimination, which runs in Θ(n3).

Numerical methods for ordinary differential equations are methods used to find numerical approximations to the solutions of ordinary differential equations (ODEs). Their use is also known as "numerical integration", although this term can also refer to the computation of integrals.

Numerical methods for partial differential equations is the branch of numerical analysis that studies the numerical solution of partial differential equations (PDEs).

In mathematics, a stiff equation is a differential equation for which certain numerical methods for solving the equation are numerically unstable, unless the step size is taken to be extremely small. It has proven difficult to formulate a precise definition of stiffness, but the main idea is that the equation includes some terms that can lead to rapid variation in the solution.

In mathematics and computational science, the Euler method is a first-order numerical procedure for solving ordinary differential equations (ODEs) with a given initial value. It is the most basic explicit method for numerical integration of ordinary differential equations and is the simplest Runge–Kutta method. The Euler method is named after Leonhard Euler, who first proposed it in his book Institutionum calculi integralis.

Explicit and implicit methods are approaches used in numerical analysis for obtaining numerical approximations to the solutions of time-dependent ordinary and partial differential equations, as is required in computer simulations of physical processes. Explicit methods calculate the state of a system at a later time from the state of the system at the current time, while implicit methods find a solution by solving an equation involving both the current state of the system and the later one. Mathematically, if is the current system state and is the state at the later time, then, for an explicit method

In numerical analysis and scientific computing, the backward Euler method is one of the most basic numerical methods for the solution of ordinary differential equations. It is similar to the (standard) Euler method, but differs in that it is an implicit method. The backward Euler method has error of order one in time.

In numerical analysis, finite-difference methods (FDM) are a class of numerical techniques for solving differential equations by approximating derivatives with finite differences. Both the spatial domain and time interval are discretized, or broken into a finite number of steps, and the value of the solution at these discrete points is approximated by solving algebraic equations containing finite differences and values from nearby points.

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

Leslie Fox was a British mathematician noted for his contribution to numerical analysis.

A digital differential analyzer (DDA), also sometimes called a digital integrating computer, is a digital implementation of a differential analyzer. The integrators in a DDA are implemented as accumulators, with the numeric result converted back to a pulse rate by the overflow of the accumulator.

In numerical analysis, von Neumann stability analysis is a procedure used to check the stability of finite difference schemes as applied to linear partial differential equations. The analysis is based on the Fourier decomposition of numerical error and was developed at Los Alamos National Laboratory after having been briefly described in a 1947 article by British researchers Crank and Nicolson. This method is an example of explicit time integration where the function that defines governing equation is evaluated at the current time. Later, the method was given a more rigorous treatment in an article co-authored by John von Neumann.

In calculus, the differential represents the principal part of the change in a function with respect to changes in the independent variable. The differential is defined by

In mathematics, an ordinary differential equation (ODE) is a differential equation (DE) dependent on only a single independent variable. As with other DE, its unknown(s) consists of one function(s) and involves the derivatives of those functions. The term "ordinary" is used in contrast with partial differential equations which may be with respect to more than one independent variable.

In numerical analysis and scientific computing, the trapezoidal rule is a numerical method to solve ordinary differential equations derived from the trapezoidal rule for computing integrals. The trapezoidal rule is an implicit second-order method, which can be considered as both a Runge–Kutta method and a linear multistep method.

Fluid motion is governed by the Navier–Stokes equations, a set of coupled and nonlinear partial differential equations derived from the basic laws of conservation of mass, momentum and energy. The unknowns are usually the flow velocity, the pressure and density and temperature. The analytical solution of this equation is impossible hence scientists resort to laboratory experiments in such situations. The answers delivered are, however, usually qualitatively different since dynamical and geometric similitude are difficult to enforce simultaneously between the lab experiment and the prototype. Furthermore, the design and construction of these experiments can be difficult, particularly for stratified rotating flows. Computational fluid dynamics (CFD) is an additional tool in the arsenal of scientists. In its early days CFD was often controversial, as it involved additional approximation to the governing equations and raised additional (legitimate) issues. Nowadays CFD is an established discipline alongside theoretical and experimental methods. This position is in large part due to the exponential growth of computer power which has allowed us to tackle ever larger and more complex problems.

Zero-stability, also known as D-stability in honor of Germund Dahlquist, refers to the stability of a numerical scheme applied to the simple initial value problem .