Arithmetic expressions

Arithmetic expressions involving operations such as additions, subtractions, multiplications, divisions, minima, maxima, powers, exponentials, logarithms, square roots, absolute values, etc., are commonly used in risk analyses and uncertainty modeling. Convolution is the operation of finding the probability distribution of a sum of independent random variables specified by probability distributions. We can extend the term to finding distributions of other mathematical functions (products, differences, quotients, and more complex functions) and other assumptions about the intervariable dependencies. There are convenient algorithms for computing these generalized convolutions under a variety of assumptions about the dependencies among the inputs. [5] [9] [10] [14]

Mathematical details

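Let $\mathbb{D}$ denote the space of distribution functions on the real numbers $\mathbb{R}$, i.e.,

$$\mathbb{D} = \{ D \mid D : \mathbb{R} \to [0, 1],\ D(x) \le D(y) \text{ whenever } x < y \}.$$

A p-box is a quintuple

$$\{ \underline{F}, \overline{F}, m, v, \mathcal{F} \},$$

where $\underline{F}, \overline{F} \in \mathbb{D}$, $m$ and $v$ are real intervals, and $\mathcal{F} \subseteq \mathbb{D}$. This quintuple denotes the set of distribution functions $F \in \mathbb{D}$ such that:

$$\begin{aligned}
&\underline{F}(x) \le F(x) \le \overline{F}(x) \quad \text{for all } x \in \mathbb{R}, \\
&\int_{-\infty}^{\infty} x \, dF(x) \in m, \\
&\int_{-\infty}^{\infty} x^2 \, dF(x) - \left( \int_{-\infty}^{\infty} x \, dF(x) \right)^2 \in v, \\
&F \in \mathcal{F}.
\end{aligned}$$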

If a function satisfies all the conditions above, it is said to be inside the p-box. In some cases, there may be no information about the moments or distribution family other than what is encoded in the two distribution functions that constitute the edges of the p-box. Then the quintuple representing the p-box can be denoted more compactly as [B1, B2]. This notation echoes that of intervals on the real line, except that the endpoints are distributions rather than points.
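As a rough illustration of what being inside a p-box means when only the two edges are specified, the sketch below checks the bounding condition on a finite grid. The helper names (`normal_cdf`, `inside_pbox`), the choice of normal edges, and the grid are assumptions made for this example; moment and family constraints are not checked, and a grid check can only approximate a condition that must hold for all real x.

```python
import math

def normal_cdf(x, mu=0.0, sigma=1.0):
    """Distribution function of a normal random variable."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def inside_pbox(cdf, lower, upper, grid):
    """True if lower(x) <= cdf(x) <= upper(x) at every grid point."""
    return all(lower(x) <= cdf(x) <= upper(x) for x in grid)

# Hypothetical p-box: unit-variance normals whose mean lies in [-1, 1].
# The edge with the larger mean gives the smaller probabilities.
lower_edge = lambda x: normal_cdf(x, mu=1.0)
upper_edge = lambda x: normal_cdf(x, mu=-1.0)

grid = [i / 10.0 for i in range(-80, 81)]

is_inside = inside_pbox(lambda x: normal_cdf(x, mu=0.3), lower_edge, upper_edge, grid)
is_outside = inside_pbox(lambda x: normal_cdf(x, mu=2.0), lower_edge, upper_edge, grid)
print(is_inside, is_outside)
```

A normal distribution with mean 0.3 stays between the edges, while one with mean 2 falls below the lower edge and is rejected.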

The notation X ~ F denotes the fact that X is a random variable governed by the distribution function F, that is, F(x) = Pr(X ≤ x) for every real number x.

Let us generalize the tilde notation for use with p-boxes. We will write X ~ B to mean that X is a random variable whose distribution function is unknown except that it is inside B. Thus, X ~ F ∈ B can be contracted to X ~ B without mentioning the distribution function explicitly.

If X and Y are independent random variables with distributions F and G respectively, then X + Y = Z ~ H given by

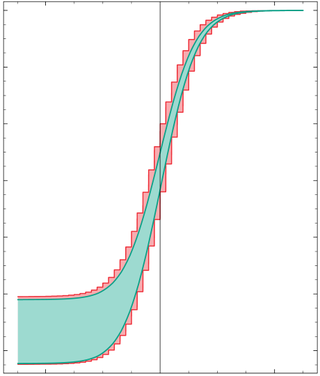

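This operation is called a convolution on F and G. The analogous operation on p-boxes is straightforward for sums. Suppose

$$X \sim A = [A_1, A_2] \quad \text{and} \quad Y \sim B = [B_1, B_2].$$

If X and Y are stochastically independent, then the distribution of Z = X + Y is inside the p-box

$$[A_1 * B_1,\ A_2 * B_2],$$

where $*$ denotes the convolution of two distribution functions defined above.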
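A minimal sketch of the independent case, assuming each p-box edge is itself a discrete distribution stored as a dictionary from value to probability. The function names and the particular numbers are hypothetical. The edges of the sum are obtained by convolving corresponding edges, and the loop prints the two edges alongside the sum of one pair of distributions chosen from inside the input p-boxes.

```python
from itertools import product

def convolve(p, q):
    """PMF of X + Y for independent X ~ p and Y ~ q (dicts: value -> probability)."""
    out = {}
    for (x, px), (y, qy) in product(p.items(), q.items()):
        out[x + y] = out.get(x + y, 0.0) + px * qy
    return out

def cdf(p, z):
    """Pr(value <= z) for a PMF given as a dict."""
    return sum(prob for value, prob in p.items() if value <= z)

# Hypothetical p-boxes; the first edge of each has the smaller CDF values.
A1, A2 = {2: 0.5, 3: 0.5}, {1: 0.5, 2: 0.5}
B1, B2 = {5: 0.5, 6: 0.5}, {4: 0.5, 5: 0.5}

# Edges of the p-box for Z = X + Y under independence.
Z1, Z2 = convolve(A1, B1), convolve(A2, B2)

# One pair of distributions inside A and B, and the distribution of their sum.
X = {1: 0.25, 2: 0.5, 3: 0.25}
Y = {4: 0.25, 5: 0.5, 6: 0.25}
Z = convolve(X, Y)

for z in range(4, 11):
    print(z, cdf(Z1, z), cdf(Z, z), cdf(Z2, z))
```

At every z the middle column lies between the outer two, which is what it means for the distribution of the sum to be inside the resulting p-box.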

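Finding bounds on the distribution of sums Z = X + Y without making any assumption about the dependence between X and Y is actually easier than the problem assuming independence. Makarov [6] [8] [9] showed that

$$Z \sim \left[ \sup_{x + y = z} \max\bigl( F(x) + G(y) - 1,\ 0 \bigr),\ \inf_{x + y = z} \min\bigl( F(x) + G(y),\ 1 \bigr) \right].$$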

These bounds are implied by the Fréchet–Hoeffding copula bounds. The problem can also be solved using the methods of mathematical programming. [13]
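The dependence-free bounds on the distribution of a sum can be approximated numerically by scanning over the ways of splitting z into x + y. The sketch below assumes continuous input distributions and a finite grid of x values; the function name `makarov_sum_bounds` and the uniform inputs are choices made for this illustration. Because a grid maximum can fall short of the true supremum (and a grid minimum can exceed the true infimum), a coarse grid may yield bounds that are slightly too narrow.

```python
def makarov_sum_bounds(F, G, z, xs):
    """Grid approximation of the bounds on Pr(X + Y <= z) with unknown dependence."""
    lower = max(max(F(x) + G(z - x) - 1.0, 0.0) for x in xs)
    upper = min(min(F(x) + G(z - x), 1.0) for x in xs)
    return lower, upper

def uniform_cdf(x):
    """Distribution function of a uniform random variable on [0, 1]."""
    return min(max(x, 0.0), 1.0)

# Candidate split points x; y is then z - x.
xs = [i / 100.0 for i in range(-100, 301)]

lo_a, hi_a = makarov_sum_bounds(uniform_cdf, uniform_cdf, 0.5, xs)
lo_b, hi_b = makarov_sum_bounds(uniform_cdf, uniform_cdf, 1.5, xs)
print(lo_a, hi_a)
print(lo_b, hi_b)
```

For two uniform variables on [0, 1], the bounds work out analytically to max(z − 1, 0) and min(z, 1), so the probability that the sum is at most 1.5 can be anywhere between 0.5 and 1 depending on the dependence.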

The convolution under the intermediate assumption that X and Y have positive dependence is likewise easy to compute, as is the convolution under the extreme assumptions of perfect positive or perfect negative dependency between X and Y. [14]
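For single distributions, the two extreme cases reduce to arithmetic on quantiles: under perfect positive dependence the quantiles of the sum are the sums of matching quantiles, and under perfect negative dependence the quantiles of one input are paired in reverse order. The sketch below assumes each distribution is represented by a list of equally probable quantile values; the function names and numbers are hypothetical, and the extension to p-box edges is not shown.

```python
def perfect_sum(qx, qy):
    """Quantiles of X + Y when X and Y are perfectly positively dependent."""
    return sorted(x + y for x, y in zip(sorted(qx), sorted(qy)))

def opposite_sum(qx, qy):
    """Quantiles of X + Y when X and Y are perfectly negatively dependent."""
    return sorted(x + y for x, y in zip(sorted(qx), sorted(qy, reverse=True)))

# Each list holds equally probable values (probability 1/4 apiece).
qx = [1, 2, 3, 4]
qy = [10, 20, 30, 40]

positive = perfect_sum(qx, qy)
negative = opposite_sum(qx, qy)
print(positive)
print(negative)
```

The positively dependent sum spreads from 11 to 44, while the negatively dependent sum is compressed into 14 to 41, since large values of one input are always paired with small values of the other.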

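Generalized convolutions for other operations such as subtraction, multiplication, and division can be derived using transformations. For instance, p-box subtraction A − B can be defined as A + (−B), where the negative of a p-box B = [B1, B2] is [1 − B2(−x), 1 − B1(−x)]. The form of each edge follows because the distribution function of −X at x is Pr(X ≥ −x), which equals 1 − F(−x) when F is continuous.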
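The subtraction-by-negation idea can be sketched with discrete edges. Negating a p-box amounts to negating the values of each edge distribution and swapping the two edges, after which the sum is taken as usual. The example assumes independence between the operands, and all names and numbers are hypothetical.

```python
from itertools import product

def convolve(p, q):
    """PMF of X + Y for independent X ~ p and Y ~ q (dicts: value -> probability)."""
    out = {}
    for (x, px), (y, qy) in product(p.items(), q.items()):
        out[x + y] = out.get(x + y, 0.0) + px * qy
    return out

def negate(p):
    """PMF of -X for X ~ p."""
    return {-value: prob for value, prob in p.items()}

def cdf(p, z):
    """Pr(value <= z) for a PMF given as a dict."""
    return sum(prob for value, prob in p.items() if value <= z)

def subtract_independent(A, B):
    """Edges of A - B, computed as A + (-B), for p-boxes given as edge pairs."""
    (A1, A2), (B1, B2) = A, B
    neg_B = (negate(B2), negate(B1))  # negation swaps the two edges
    return convolve(A1, neg_B[0]), convolve(A2, neg_B[1])

# Hypothetical p-boxes; the first edge of each has the smaller CDF values.
A = ({2: 0.5, 3: 0.5}, {1: 0.5, 2: 0.5})
B = ({5: 0.5, 6: 0.5}, {4: 0.5, 5: 0.5})
D1, D2 = subtract_independent(A, B)

# Difference of one pair of distributions inside A and B.
X = {1: 0.25, 2: 0.5, 3: 0.25}
Y = {4: 0.25, 5: 0.5, 6: 0.25}
D = convolve(X, negate(Y))

for z in range(-6, 1):
    print(z, cdf(D1, z), cdf(D, z), cdf(D2, z))
```

The printed middle column stays between the two edge columns, so the distribution of the difference is inside the computed p-box.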